In its overall technologies, the space shuttle demanded advances in a host of areas: rocket propulsion, fuel cells and other onboard systems, electronics and computers, and astronaut life support. As an exercise in hypersonics, two issues stood out: configuration and thermal protection. The Air Force supported some of the early studies, which grew seamlessly out of earlier work on Aerospaceplane.

At Douglas Aircraft, for instance, Melvin Root had his two-stage Astro, a fully-reusable rocket-powered concept with both stages shaped as lifting bodies. It was to carry a payload of 37,150 pounds, and Root expected that a fleet of such craft would fly 240 times per year. The contemporary Astrorocket of Martin Marietta, in turn, looked like two flat-bottom Dyna-Soar craft set belly to belly, foreshadowing fully-reusable space shuttle concepts of several years later.23

These concepts definitely belonged to the Aerospaceplane era. Astro dated to 1963, whereas Martin’s Astrorocket studies went forward from 1961 to 1965. By mid-decade, though, the name “Aerospaceplane” was in bad odor within the Air Force. The new concepts were rocket-powered, whereas Aerospaceplanes generally had called for scramjets or LACE, and officials referred to these rocket craft as Integrated Launch and Re-entry Vehicles (ILRVs).24

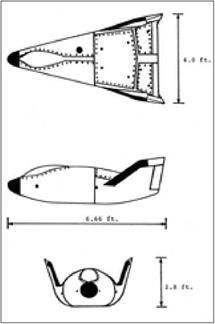

Early contractor studies showed a definite preference for lifting bodies, generally with small foldout wings for use when landing. At Lockheed, Hunter’s Star Clipper introduced the stage-and-a-half configuration that mounted expendable propellant tanks to a reusable core vehicle. The core carried the flight crew and payload along with the engines and onboard systems. It had a triangular planform and fitted neatly into a large inverted V formed by the tanks. The McDonnell Tip Tank concept was broadly similar; it also mounted expendable tanks to a lifting-body core.25

At Convair, people took the view that a single airframe could serve both as a core and, when fitted with internal tankage, as a reusable carrier of propellants. This led to the Triamese concept, whereby a triplet of such vehicles was to form a single ILRV that could rise into the sky. All three were to have thermal protection and would re-enter, flying to a runway and deploying their extendable wings. The concept was excessively hopeful; the differing requirements of core and tankage vehicles proved to militate strongly against a one-size-fits-all approach to airframe design. Still, the Triamese approach showed anew that designers were ready to use their imaginations.26

NASA became actively involved in the ongoing ILRV studies during 1968. George Mueller, the Associate Administrator for Manned Space Flight, took a particular interest and introduced the term “space shuttle,” by which such craft came to be known. He had an in-house design leader, Maxime Faget of the Manned Spacecraft Center, who was quite strong-willed and had definite ideas of his own as to how a shuttle should look. Faget saw lifting bodies as offering no more than mixed blessings: “You avoid wing-body interference,” which brings problems of aerodynamics. “You have a simple structure. And you avoid the weight of wings.” He nevertheless saw difficulties that appeared severe enough to rule out lifting bodies for a practical design.

They had low lift and high drag, which meant a dangerously high landing speed. As he put it, “I don’t think it’s charming to come in at 250 knots.” Deployable wings could not be trusted; they might fail to extend. Lifting bodies also posed serious difficulties in development, for they required a fuselage that could do the work of a wing. This ruled out straightforward solutions to aerodynamic problems; the attempted solutions would ramify throughout the entire design. “They are very difficult to develop,” he added, “because when you’re trying to solve one problem, you’re creating another problem somewhere else.”27 His colleague Milton Silveira, who went on to head the Shuttle Engineering Office at MSC, held a similar view:

“If we had a problem with the aerodynamics on the vehicle, where the body was so tightly coupled to the aerodynamics, you couldn’t simply go out and change the wing. You had to change the whole damn vehicle, so if you make a mistake, being able to correct it was a very difficult thing to do.”28

Faget proposed instead to design his shuttle as a two-stage fully-reusable vehicle, with each stage being a winged airplane having low wings and a thermally-protected flat bottom. The configuration broadly resembled the X-15, and like that craft, it was to re-enter with its nose high and with its underside acting as the heat shield.

Faget wrote that “the vehicle would remain in this flight attitude throughout the entire descent to approximately 40,000 feet, where the velocity will have dropped to less than 300 feet per second. At this point, the nose gets pushed down, and the vehicle dives until it reaches adequate velocity for level flight.” The craft then was to approach a runway and land at a moderate 130 knots, half the landing speed of a lifting body.29

During 1969 NASA sponsored a round of contractor studies that examined anew the range of alternatives. In June the agency issued a directive that ruled out the use of expendable boosters such as the Saturn V first stage, which was quite costly. Then in August, a new order called for the contractors to consider only two-stage fully-reusable concepts and to eliminate partially-reusable designs such as Star Clipper and Tip Tank. This decision also was rooted in economics, for a fully-reusable shuttle could offer the lowest cost per flight. But it also delivered a new blow to the lifting bodies.30

There was a strong mismatch between lifting-body shapes, which were dictated by aerodynamics, and the cylindrical shapes of propellant tanks. Such tanks had to be cylindrical, both for ease in manufacturing and to hold internal pressure. This pressure was unavoidable; it resulted from boiloff of cryogenic propellants, and it served such useful purposes as stiffening the tanks’ structures and delivering propellants to the turbopumps. However, tanks did not fit well within the internal volume of a lifting body; in Faget’s words, “the lifting body is a damn poor container.” The Lockheed and McDonnell designers had bypassed that problem by mounting their tanks externally, with no provision for reuse, but the new requirement of full reusability meant that internal installation now was mandatory. Yet although lifting bodies made poor containers, Faget’s wing-body concept was an excellent one. Its fuselage could readily be cylindrical, being given over almost entirely to propellant tankage.31

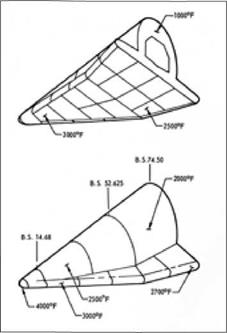

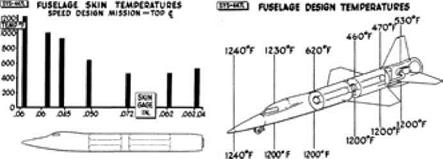

The design exercises of 1969 covered thermal protection as well as configuration. McDonnell Douglas introduced orbiter designs derived from the HL-10 lifting body, examining 13 candidate configurations for the complete two-stage vehicle. The orbiter had a titanium primary structure, obtaining thermal protection from a hot-structure approach that used external panels of columbium, nickel-chromium, and Rene 41. This study also considered the use of tiles made of a “hardened compacted fiber,” which was unrelated to Lockheed’s RSI. However, the company did not recommend this. Those tiles were heavier than panels or shingles of refractory alloy and less durable.32

North American Rockwell took Faget’s two-stage airplane as its preferred approach. It also used a titanium primary structure, with a titanium hot structure protecting the top of the orbiter, which faced a relatively mild thermal environment. For the thermally-protected bottom, North American adopted the work of Lockheed and specified LI-1500 tiles. The design also called for copious use of fiberglass insulation, which gave internal protection to the crew compartment and the cryogenic propellant tanks.33

Lockheed turned the Star Clipper core into a reusable second stage that retained its shape as a lifting body. Its structure was aluminum, as in a conventional airplane. The company was home to LI-1500, and designers considered its use for thermal protection. They concluded, though, that this carried high risk. They recommended instead a hot-structure approach that used corrugated Rene 41 along with shingles of nickel-chromium and columbium. The Lockheed work was independent of that at McDonnell Douglas, but engineers at the two firms reached similar conclusions.34

Convair, home of the Triamese concept, came in with new variants. These included a triplet launch vehicle with a core vehicle that was noticeably smaller than the two propellant carriers that flanked it. Another configuration placed the orbiter on the back of a single booster that continued to mount retractable wings. The orbiter had a primary structure of aluminum, with titanium for the heat-shield supports on the vehicle underside. Again this craft used a hot structure, with shingles of cobalt superalloy on the bottom and of titanium alloy on the top and side surfaces.

Significantly, these concepts were not designs that the companies were prepared to send to the shop floor and build immediately. They were paper vehicles that would take years to develop and prepare for flight. Yet despite this emphasis on the future, and notwithstanding the optimism that often pervades such preliminary design exercises, only North American was willing to recommend RSI as the baseline. Even Lockheed, its center of development, gave it no more than a highly equivocal recommendation. It lacked maturity, with hot structures standing as the approach that held greater promise.35

In the wake of the 1969 studies, NASA officials turned away from lifting bodies. Lockheed continued to study new versions of the Star Clipper, but the lifting body now was merely an alternative. The mainstream lay with Faget’s two-stage fully-reusable approach, showing rocket-powered stages that looked like airplanes. Very soon, though, the shape of the wings changed anew, as a result of problems in Congress.

The space shuttle was a political program, funded by federal appropriations, and it had to make its way within the environment of Washington. On Capitol Hill, an influential viewpoint held that the shuttle was to go forward only if it was a national program, capable of meeting the needs of military as well as civilian users. NASA’s shuttle studies had addressed the agency’s requirements, but this proved not to be the way to proceed. Matters came to a head in mid-1970 as Congressman Joseph Karth, a longtime NASA supporter, declared that the shuttle was merely the first step on a very costly road to Mars. He opposed funding for the shuttle in committee, and when he did not prevail, he made a motion from the floor of the House to strike the funds from NASA’s budget. Other congressmen assured their colleagues that the shuttle had nothing to do with Mars, and Karth’s measure went down to defeat, but by the narrowest possible margin: a tie vote of 53 to 53. In the Senate, NASA’s support was only slightly greater.36

Such victories were likely to leave NASA undone, and the agency responded by seeking support for the shuttle from the Air Force. That service had tried and failed to build Dyna-Soar only a few years earlier; now it found NASA offering a much larger and more capable space shuttle on a silver platter. However, the Air Force was quite happy with its Titan III launch vehicles and made clear that it would work with NASA only if the shuttle was redesigned to meet the needs of the Pentagon. In particular, NASA was urged to take note of the views of the Air Force Flight Dynamics Laboratory (FDL), where specialists had been engaged in a running debate with Faget since early 1969.

The FDL had sponsored ILRV studies in parallel with the shuttle studies of NASA and had investigated such concepts as Lockheed’s Star Clipper. One of its managers, Charles Cosenza, had directed the ASSET program. Another FDL scientist, Alfred Draper, had taken the lead in questioning Faget’s approach. Faget wanted his shuttle stages to come in nose-high and then dive through 15,000 feet to pick up flying speed. With the nose so high, these airplanes would be fully stalled, and the Air Force disliked both stalls and dives, regarding them as preludes to an out-of-control crash. Draper wanted the shuttle to enter its glide while still supersonic, thereby maintaining much better control.

If the shuttle was to glide across a broad Mach range, from supersonic to subsonic, then it would face an important aerodynamic problem: a shift in the wing’s center of lift. A wing generates lift across its entire surface, but one may regard this lift as concentrated at a point, the center of lift. At supersonic speeds, this center is located midway between the wing’s leading and trailing edges. At subsonic speeds, this center moves forward and is much closer to the leading edge. To keep the airplane in proper balance, the design must provide an aerodynamic force that can compensate for this shift.
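A moment balance about the center of gravity shows why the shift matters. The sketch below is a deliberately simplified, hypothetical illustration: it treats the wing’s lift as a single force at the center of lift, ignores drag, thrust, and the lift contributed by the control surface itself, and uses made-up positions measured aft from the nose. The function name `trim_force` is ours, not taken from any design study of the period.

```python
def trim_force(lift: float, x_lift: float, x_cg: float, x_control: float) -> float:
    """Upward control force that zeroes the pitching moment about the c.g.

    Simplified, hypothetical model. Positions are measured aft from the nose
    in consistent units; forces are positive upward. Lift acting ahead of the
    c.g. pitches the nose up, so a control surface aft of the c.g. must push
    upward to cancel it.
    """
    arm = x_control - x_cg
    if arm <= 0:
        raise ValueError("the control surface must lie aft of the center of gravity")
    # Nose-up moment of lift is lift * (x_cg - x_lift); the control force adds
    # force * (x_cg - x_control). Setting their sum to zero gives:
    return lift * (x_cg - x_lift) / arm


# Hypothetical numbers: 100,000 lb of lift, c.g. at 60 ft, elevons at 70 ft.
# Supersonic case: center of lift sits on the c.g.; subsonic case: it moves 3 ft forward.
supersonic = trim_force(100_000, x_lift=60.0, x_cg=60.0, x_control=70.0)
subsonic = trim_force(100_000, x_lift=57.0, x_cg=60.0, x_control=70.0)
print(supersonic, subsonic)
```

Because the required force varies inversely with the moment arm, a control surface placed far aft needs less force, and hence less deflection, to hold trim as the center of lift moves.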

The Air Force had extensive experience with supersonic fighters and bombers that had successfully addressed this problem, maintaining good control and satisfactory handling qualities from Mach 3 to touchdown. Particularly for large aircraft (the B-58 and XB-70 bombers and the SR-71 spy plane), the preferred solution was a delta wing, triangular in shape. Delta wings typically ran along much of the length of the fuselage, extending nearly to the tail. Such aircraft dispensed with horizontal stabilizers at the tail and relied instead on elevons, control surfaces resembling ailerons that were set at the wing’s trailing edge. Small deflections of these elevons then compensated for the shift in the center of lift, maintaining proper trim and balance without imposing excessive drag. Draper therefore proposed that both stages of Faget’s shuttle have delta wings.37

Faget would have none of this. He wrote that because the only real flying was to take place during the landing approach, a wing design “can be selected solely on the basis of optimization for subsonic cruise and landing.” The wing best suited to this limited purpose would be straight and unswept, like those of fighter planes in World War II. A tail would provide directional stability, as on a conventional airplane, enabling the shuttle to land in standard fashion. He was well aware of the center-of-lift shift but expected to sidestep it by not relying on his wings until the craft was well below the speed of sound. He also believed that the delta would lose on its design merits. To achieve a suitably low landing speed, he argued that the delta would need a large wingspan. A straight wing, narrow in distance between its leading and trailing edges, would be light and would offer relatively little area demanding thermal protection. A delta of the same span, necessary for a moderate landing speed, would have a much larger area than the straight wing. This would add a great deal of weight, while substantially increasing the area that needed thermal protection.38

Draper responded with his own view. He believed that Faget’s straight-wing design would be barred on grounds of safety from executing its maneuver of stall, dive, and recovery. Hence, it would have to glide from supersonic speeds through the transonic zone and could not avoid the center-of-lift problem. To deal with it, a good engineering solution called for installation of canards, small wings set well forward on the fuselage that would deflect to give the desired control. Canards produce lift and would tend to push the main wings farther to the back. The main wings would be well aft from the outset, for they were to support an airplane that was empty of fuel but that had heavy rocket engines at the tail, placing the craft’s center of gravity far to the rear. The wings’ center of lift was to coincide closely with this center of gravity.

Draper wrote that the addition of canards “will move the wings aft and tend to close the gap between the tail and the wing.” The wing shape that fills this gap is the delta, and Draper added that “the swept delta would most likely evolve.”39

Faget had other critics as well, and Draper had supporters within NASA itself. Faget’s ideas faced considerable resistance there, particularly among the highly skilled test and research pilots at the Flight Research Center. Their spokesman, Milton Thompson, was certainly a man who knew how to fly airplanes, for he was an X-15 veteran and had been slated to fly Dyna-Soar as well. But in addition, these aerodynamic issues involved matters of policy, which drove the Air Force strongly toward the delta. The reason was that a delta could achieve high crossrange, whereas Faget’s straight wing could not.

Crossrange was essential for single-orbit missions, launched from Vandenberg AFB on the California coast, which were to fly in polar orbit. The orbit of a spacecraft is essentially fixed with respect to distant stars, but the Earth rotates. In the course of a 90-minute shuttle orbit, this rotation carries the Vandenberg site eastward by 1,100 nautical miles. The shuttle therefore needed enough crossrange to cover that distance.
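The 1,100-nautical-mile figure can be checked with a short calculation. This sketch assumes that Vandenberg lies near 34.7° north latitude, that the orbit plane stays fixed while Earth turns once per sidereal day (about 1,436 minutes), and that the equatorial circumference is roughly 21,600 nautical miles (one nautical mile per arcminute). The launch site then travels along a circle of latitude whose circumference is the equatorial value scaled by the cosine of the latitude. Orbital precession and Earth’s oblateness are ignored, and the function name is ours.

```python
import math

# Approximation: one nautical mile per arcminute around the equator.
EQUATORIAL_CIRCUMFERENCE_NMI = 360 * 60
SIDEREAL_DAY_MIN = 1436.07  # Earth's rotation period relative to the stars


def eastward_drift_nmi(latitude_deg: float, orbit_minutes: float) -> float:
    """Eastward distance a launch site moves while the orbit plane stays fixed."""
    circle_of_latitude = EQUATORIAL_CIRCUMFERENCE_NMI * math.cos(math.radians(latitude_deg))
    return circle_of_latitude * orbit_minutes / SIDEREAL_DAY_MIN


# Assumed latitude for Vandenberg (about 34.7 degrees north) and a 90-minute orbit.
drift = eastward_drift_nmi(34.7, 90.0)
print(f"{drift:,.0f} nautical miles")
```

Under these assumptions the result comes out close to the 1,100 nautical miles cited in the text; a site on the equator would drift farther, and one near the pole hardly at all.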

The Air Force had operational reasons for wanting once-around missions. A key example was rapid-response satellite reconnaissance. In addition, the Air Force was well aware that problems following launch could force a shuttle to come down at the earliest opportunity, executing a “once-around abort.” NASA’s Leroy Day, a senior shuttle manager, emphasized this point: “If you were making a polar-type launch out of Vandenberg, and you had Max’s straight-wing vehicle, there was no place you could go. You’d be in the water when you came back. You’ve got to go crossrange quite a few hundred miles in order to make land.”40

By contrast, NASA had little need for crossrange. It too had to be ready for once-around abort, but it expected to launch the shuttle from Florida’s Kennedy Space Center on trajectories that ran almost due east. Near the end of its single orbit, the shuttle was to fly across the United States and could easily land at an emergency base. A 1969 baseline program document, “Desirable System Characteristics,” stated that the agency needed only 250 to 400 nautical miles of crossrange, which Faget’s straight wing could deliver with straightforward modifications.41

Faget’s shuttle had a hypersonic L/D of about 0.5. Draper’s delta-wing design was to achieve an L/D of 1.7, and the difference in the associated re-entry trajectories increased the weight penalty for the delta. A delta orbiter in any case needed a heavier wing and a larger area of thermal protection, and there was more. The straight-wing craft was to have a relatively brief re-entry and a modest heating rate. The delta orbiter was to achieve its crossrange through a hypersonic glide that produced more lift and less drag, but that glide also would increase both the rate of heating and its duration. Hence, its thermal protection had to be more robust and therefore heavier still. In turn, the extra weight ramified throughout the entire two-stage shuttle vehicle, making it larger and more costly.42

NASA’s key officials included the acting administrator, George Low, and the Associate Administrator for Manned Space Flight, Dale Myers. They would willingly have embraced Faget’s shuttle. But on the military side, the Undersecretary of the Air Force for Research and Development, Michael Yarymovich, had close knowledge of the requirements of the National Reconnaissance Office. He played a key role in emphasizing that only a delta would do.

The denouement came at a meeting in Williamsburg, Virginia, in January 1971. At nearby Yorktown, in 1781, Britain’s Lord Charles Cornwallis had surrendered to General George Washington, effectively ending America’s war of independence. One hundred and ninety years later NASA surrendered to the Air Force, agreeing particularly to build a delta-wing shuttle with full military crossrange of 1,100 nautical miles. In return, though, NASA indeed won the support from the Pentagon that it needed. Opposition faded on Capitol Hill, and the shuttle program went forward on a much stronger political foundation.43

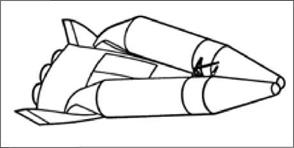

The design studies of 1969 had counted as Phase A and were preliminary in character. In 1970 the agency launched Phase B, conducting studies in greater depth, with North American Rockwell and McDonnell Douglas as the contractors. Initially they considered both straight-wing and delta designs, but the Williamsburg decision meant that during 1971 they were to emphasize the deltas. These remained as two-stage fully-reusable configurations, which were openly presented at an AIAA meeting in July of that year.

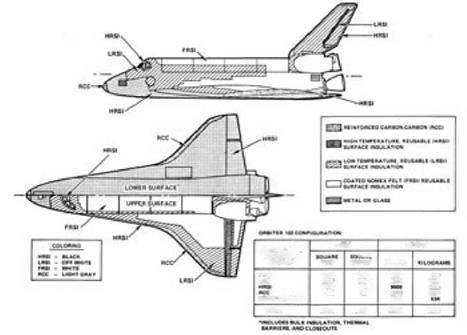

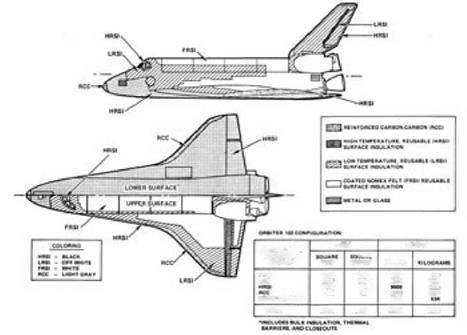

In the primary structure and outer skin of the wings and fuselage, both contractors proposed to use titanium freely. They differed, however, in their approaches to thermal protection. McDonnell Douglas continued to favor hot structures. Most of the underside of the orbiter was covered with shingles of Hastelloy-X nickel superalloy. The wing leading edges called for a load-bearing structure of columbium, with shingles of coated columbium protecting these leading edges as well as other areas that were too hot for the Hastelloy. A nose cap of carbon-carbon completed the orbiter’s ensemble.44

North American had its own interest in titanium hot structures, specifying them for the upper wing surfaces and the upper fuselage. Everywhere else possible, the design called for applying mullite RSI directly to a skin of aluminum. Such tiles were to cover the entire underside of the wings and fuselage, along with much of the fuselage forward of the wings. The nose and the leading edges of both the wings and the vertical fin used carbon-carbon. In turn, the fin was designed as a hot structure with a skin of Inconel 718 nickel alloy.45

By mid-1971, though, hot structures were in trouble. The new Office of Management and Budget had made clear that it expected to impose stringent limits on funding for the shuttle, which brought a demand for new configurations that could cut the cost of development. Within weeks, the contractors did a major turnabout. They went over to primary structures of aluminum. They also abandoned hot structures and embraced RSI. Managers were aware that RSI might take time to develop for operational use, but they were prepared to use ablatives for interim thermal protection, switching to RSI once it was ready.46

What brought this dramatic change? The advent of RSI production at Lockheed was critical. This drew attention from Faget, who had kept his hand in the field of shuttle design, offering a succession of conceptual configurations that had helped to guide the work of the contractors. His most important concept, designated MSC-040, came out in September 1971 and served as a point of reference. It used aluminum and RSI.47

“My history has always been to take the most conservative approach,” Faget declared. Everyone knew how to work with aluminum, for it was the most familiar of materials, but titanium was literally a black art. Much of the pertinent shop-floor experience had been gained within the SR-71 program and was classified. Few machine shops had the pertinent background, for only Lockheed had constructed an airplane, the SR-71, that used titanium hot structure. The situation was worse for columbium and the superalloys because these metals had been used mostly in turbine blades. Lockheed had encountered serious difficulties as its machinists and metallurgists wrestled with the use of titanium. With the shuttle facing cost constraints, no one wanted to risk an overrun while machinists struggled with the problems of other new materials.48

NASA-Langley had worked to build a columbium heat shield for the shuttle and had gained a particularly clear view of its difficulties. It was heavier than RSI but offered no advantage in temperature resistance. In addition, coatings posed serious problems. Silicides showed promise of reusability and long life, but they were fragile and damaged easily. A localized loss of coating could result in rapid oxygen embrittlement at high temperatures. Unprotected columbium oxidized readily, and above the melting point of its oxide, 2,730°F, it could burst into flame.49 “The least little scratch in the coating, the shingle would be destroyed during re-entry,” said Faget. Charles Donlan, the shuttle program manager at NASA Headquarters, placed this in a broader perspective in 1983:

“Phase B was the first really extensive effort to put together studies related to the completely reusable vehicle. As we went along, it became increasingly evident that there were some problems. And then as we looked at the development problems, they became pretty expensive. We learned also that the metallic heat shield, of which the wings were to be made, was by no means ready for use. The slightest scratch and you are in trouble.”50

Other refractory metals offered alternatives to columbium, but even with them, the complexity of a hot structure militated against their selection. As a mechanical installation, it called for large numbers of clips, brackets, stand-offs, frames, beams, and fasteners. Structural analysis loomed as a formidable task. Each of many panel geometries needed its own analysis, to show with confidence that the panels would not fail through creep, buckling, flutter, or stress under load. Yet this confidence might be fragile, for hot structures had limited ability to resist overtemperatures. They also faced the continuing issue of sealing panel edges against ingestion of hot gas during re-entry.51

In this fashion, having taken a long look at hot structures, NASA did an about-face as it turned toward the RSI that Lockheed’s Max Hunter had recommended as early as 1965. The choice of aluminum for the primary structure reflected the excellent insulating properties of RSI, but there was more. Titanium offered a potential advantage because of its temperature resistance; hence, its thermal protection might be lighter. However, the apparent weight saving was largely lost due to a need for extra insulation to protect the crew cabin, payload bay, and onboard systems. Aluminum could compensate for its lack of heat resistance because it had higher thermal conductivity than titanium. Hence, it could more readily spread its heat throughout the entire volume of the primary structure.

Designers expected to install RSI tiles by bonding them to the skin, and for this, aluminum had a strong advantage. Both metals form thin layers of oxide when exposed to air, but that of aluminum is more strongly bound. Adhesive, applied to aluminum, therefore held tightly. The bond with titanium was considerably weaker and appeared likely to fail in operational use at approximately 500°F. This was not much higher than the limit for aluminum, 350°F, which meant that the greater temperature resistance of titanium could not be exploited in operational use.52

The move toward RSI and aluminum simplified the design and cut the development cost. Substantially larger cost savings came into view as well, as NASA moved away from full reusability of its two-stage concepts. The emphasis now was on partial reusability, which the prescient Max Hunter had advocated as far back as 1965 when he placed the liquid hydrogen of Star Clipper in expendable external tanks. The new designs kept propellants within the booster, but they too called for the use of external tankage for the orbiter. This led to reduced sizes for both stages and had a dramatic impact on the problem of providing thermal protection for the shuttle’s booster.

On paper, a shuttle booster amounted to the world’s largest airplane, combining the size of a Boeing 747 or C-5A with performance exceeding that of the X-15. It was to re-enter at speeds well below orbital velocity, but still it needed thermal protection, and the reduced entry velocities did not simplify the design. North American, for one, specified RSI for its Phase B orbiter, but the company also had to show that it understood hot structures. These went into the booster, which protected its hot areas with titanium, Inconel 718, carbon-carbon, Rene 41, Haynes 188 cobalt alloy, and coated columbium.

Thermal-protection tiles for the space shuttle. (NASA)

The move toward external tankage brought several advantages, the most important of which was a reduction in staging velocity. For a two-stage rocket, standard methods exist for dividing the workload between the two stages so as to achieve the lowest total weight. These methods give an optimum staging velocity. A higher value makes the first stage excessively large and heavy; a lower velocity means more size and weight for the orbiter. Ground rules set at NASA’s Marshall Space Flight Center, based on such optimization, placed this staging velocity close to 10,000 feet per second.

But by offloading propellants into external tankage, the orbiter could shrink considerably in size and weight. The tanks did not need thermal protection or heavy internal structure; they might be simple aluminum shells stiffened with internal pressure. With the orbiter being lighter, and being little affected by a change in staging velocity, a recalculation of the optimum value showed advantage in making the tanks larger so that they could carry more propellant. This meant that the orbiter was to gain more speed in flight—and the booster would gain less. Hence, the booster also could shrink in size. Better yet, the reduction in staging velocity eased the problem of booster thermal protection.
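The staging-velocity optimization described above can be sketched with the rocket equation. This is a simplified illustration, not the Marshall ground rules: both stages are assumed to share one specific impulse, each stage is characterized only by a structural coefficient (structure mass divided by structure-plus-propellant mass), and the 30,000 feet-per-second velocity budget, 440-second specific impulse, and coefficients are hypothetical values. Lowering the orbiter’s structural coefficient stands in, crudely, for moving its propellant into light external tanks.

```python
import math

G0 = 32.174  # standard gravity, ft/s^2


def stage_growth(dv: float, isp_s: float, eps: float) -> float:
    """Gross mass (stage plus everything it carries) divided by the mass it carries.

    Derived from the rocket equation with structural coefficient
    eps = m_structure / (m_structure + m_propellant).
    Returns math.inf when no stage of this type can deliver dv.
    """
    r = math.exp(dv / (G0 * isp_s))  # required mass ratio m_initial / m_final
    if r * eps >= 1.0:
        return math.inf
    return r * (1.0 - eps) / (1.0 - r * eps)


def liftoff_mass_ratio(v_stage: float, dv_total: float, isp_s: float,
                       eps_booster: float, eps_orbiter: float) -> float:
    """Liftoff mass per unit payload when the booster supplies v_stage."""
    return (stage_growth(v_stage, isp_s, eps_booster)
            * stage_growth(dv_total - v_stage, isp_s, eps_orbiter))


def optimum_staging_velocity(dv_total: float, isp_s: float, eps_booster: float,
                             eps_orbiter: float, step: int = 100) -> int:
    """Brute-force search for the staging velocity that minimizes liftoff mass."""
    candidates = range(step, int(dv_total), step)
    return min(candidates, key=lambda v: liftoff_mass_ratio(
        v, dv_total, isp_s, eps_booster, eps_orbiter))


# Hypothetical inputs: a heavier orbiter with internal tanks versus a lighter one.
internal_tanks = optimum_staging_velocity(30_000, 440, eps_booster=0.12, eps_orbiter=0.15)
external_tanks = optimum_staging_velocity(30_000, 440, eps_booster=0.12, eps_orbiter=0.08)
print(internal_tanks, external_tanks)
```

With these assumed numbers the lighter orbiter pulls the optimum staging velocity downward, which is the direction the text describes. The absolute values depend entirely on the invented inputs and should not be read as reproductions of the historical figures.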

Grumman was the first company to pursue this line of thought, as it studied alternative concepts alongside the mainstream fully reusable designs of McDonnell Douglas and North American. Grumman offered a fully-reusable concept of its own, for purposes of comparison, but emphasized a partially-reusable orbiter that put the liquid hydrogen in two external tanks. The liquid oxygen, which was dense and compact, remained aboard this vehicle, but the low density of the hydrogen meant that its tanks could be bulky while remaining light in weight.

The fully-reusable design followed the NASA Marshall Space Flight Center ground rules and showed a staging velocity of 9,750 feet per second. The external-tank configuration cut this to 7,000 feet per second. Boeing, which was teamed with Grumman, found that this substantially reduced the need for thermal protection as such. The booster now needed neither tiles nor exotic metals. Instead, like the X-15, it was to use its structure as a heat sink. During re-entry, it would experience a sharp but brief pulse of heat, which a conventional aircraft structure could absorb without exceeding temperature limits. Hot areas continued to demand a titanium hot structure, which was to cover some one-eighth of the booster. However, the rest of this vehicle could make considerable use of aluminum.

How could bare aluminum, without protection, serve in a shuttle booster? It was common understanding that aluminum airframes lost strength due to aerodynamic heating at speeds beyond Mach 2, with titanium being necessary at higher speeds. However, this held true for aircraft in cruise, which faced their temperatures continually. The Boeing booster was to re-enter at Mach 7, matching the top speed of the X-15. Even so, its thermal environment resembled a fire that does not burn your hand when you whisk it through quickly. Across part of the underside, the vehicle was to protect itself by the simple method of using metal of greater than usual thickness to absorb the heat. Even these areas were limited in extent, with the contractors noting that “the material gauges [thicknesses] required for strength exceed the minimum heat-sink gauges over the majority of the vehicle.”53
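The heat-sink idea, and the contractors’ remark about strength gauges, can be illustrated with a lumped-capacitance estimate: a skin of thickness t that absorbs a heat load Q per unit area warms by Q/(ρ·c·t), where ρ is density and c is specific heat. The sketch assumes approximate room-temperature aluminum properties, a starting temperature of 70°F, the 350°F limit for aluminum cited above, a purely hypothetical heat load of 2 MJ/m², and a hypothetical 8 mm strength gauge; it neglects radiation and conduction into the structure. The function names are ours.

```python
# Approximate room-temperature properties of aluminum (assumed values).
ALUMINUM_DENSITY = 2700.0        # kg/m^3
ALUMINUM_SPECIFIC_HEAT = 900.0   # J/(kg*K)


def heat_sink_gauge(heat_load: float, allowable_rise: float,
                    density: float = ALUMINUM_DENSITY,
                    specific_heat: float = ALUMINUM_SPECIFIC_HEAT) -> float:
    """Minimum skin thickness (m) that soaks up heat_load (J/m^2) within allowable_rise (K).

    Lumped-capacitance estimate: the skin heats uniformly through its thickness,
    and no heat is radiated away or conducted inward during the brief pulse.
    """
    return heat_load / (density * specific_heat * allowable_rise)


def governing_gauge(strength_gauge: float, heat_load: float, allowable_rise: float) -> float:
    """The skin must meet both requirements, so the thicker gauge governs."""
    return max(strength_gauge, heat_sink_gauge(heat_load, allowable_rise))


# Assumed: skin starts at 70 F and must stay below 350 F (a 280 F rise, in kelvin).
rise_k = (350.0 - 70.0) * 5.0 / 9.0
pulse = 2.0e6  # hypothetical heat load per unit area, J/m^2
print(heat_sink_gauge(pulse, rise_k))        # heat-sink thickness, meters
print(governing_gauge(0.008, pulse, rise_k))  # with a hypothetical 8 mm strength gauge
```

In the second call the strength requirement is the thicker of the two, which is the situation the contractors reported over most of the booster: the skin needed for structural loads was already thick enough to serve as its own heat sink.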

McDonnell Douglas went further. In mid-1971 it introduced its own external-tank orbiter that lowered the staging velocity to 6,200 feet per second. Its winged booster was 82 percent aluminum heat sink. The company’s selected configuration was optimized from a thermal standpoint, bringing the largest savings in the weight of thermal protection.54 Then in March 1972 NASA selected solid-propellant rockets for the boosters. The issue of their thermal protection now went away entirely, for these big solids used steel casings that were 0.5 inch thick and that served very effectively as heat sinks.55

Amid the design changes, NASA went over to the Air Force view and embraced the delta wing. Faget himself accepted it, making it a feature of his MSC-040 concept. Then the Office of Management and Budget asked whether NASA might return to Faget’s straight wing after all, abandoning the delta and thereby saving money. Nearly a year after the Williamsburg meeting, Charles Donlan, acting director of the shuttle program office at Headquarters, ruled this out. In a memo to George Low, he wrote that high crossrange was “fundamental to the operation of the orbiter.” It would enhance its maneuverability, greatly broadening the opportunities to abort a mission and perhaps save the lives of astronauts. High crossrange would also provide more frequent opportunities to return to Kennedy Space Center in the course of a normal mission.

Delta wings also held advantages that were entirely separate from crossrange. A delta orbiter would be stable in flight from hypersonic to subsonic speeds, throughout a wide range of nose-high attitudes. The aerodynamic flow over such an orbiter would be smooth and predictable, thereby permitting accurate forecasts of heating during re-entry and giving confidence in the design of the shuttle’s thermal protection. In addition, the delta vehicle would experience relatively low temperatures of 600 to 800°F over its sides and upper surfaces.

By contrast, straight-wing configurations produced complicated hypersonic flow fields, with high local temperatures and severe temperature changes on the wing, body, and tail. Temperatures on the sides of the fuselage would run from 900 to 1,300°F, making the design and analysis of thermal protection more complex. During transition from supersonic to subsonic speeds, the straight-wing orbiter would experience unsteady flow and buffeting, making it harder to fly. This combination of aerodynamic and operational advantages led Donlan to favor the delta for reasons that were entirely separate from those of the Air Force.56

The Loss of Columbia

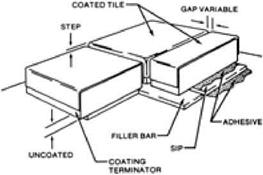

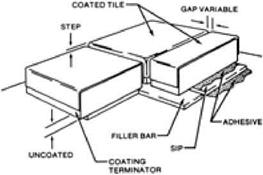

Thermal protection was delicate. The tiles lacked structural strength and were brittle. It was not possible even to bond them directly to the underlying skin, for they would fracture and break due to their inability to follow the flexing of the skin under its loads. Designers therefore placed an intermediate layer between tiles and skin that had some elasticity and could stretch in response to shuttle skin flexing without transmitting excessive strain to the tiles. It worked; there never was a serious accident due to fracturing of tiles.57

The same was not true of another piece of delicate work: a carbon thermal-protection panel on the leading edge of one of the wings. It failed during re-entry, in the final minutes of a flight of Columbia on 1 February 2003. For want of this panel, that spacecraft broke up over Texas in a shower of brilliant fireballs. All aboard were killed.

The background to this accident lay in the fact that for the nose and leading edges of the shuttle, silica RSI was not enough. These areas needed thermal protection with greater temperature resistance, and carbon was the obvious candidate. It was lighter than aluminum and could be protected against oxidation with a coating. It also had a track record, having formed the primary structure of the Dyna-Soar nose cap and the leading edge of ASSET. Graphite was the standard form, but in contrast to ablative materials, it failed to enter the aerospace mainstream. It was brittle and easily damaged and did not lend itself to use with thin-walled structures.

The development of a better carbon began in 1958, with Vought Missiles and Space Company in the forefront. The work went forward with support from the Dyna-Soar and Apollo programs and brought the advent of an all-carbon composite consisting of graphite fibers in a carbon matrix. Existing composites had names such as carbon-phenolic and graphite-epoxy; this one was carbon-carbon.

It retained the desirable properties of graphite in bulk: light weight, temperature resistance, and resistance to oxidation when coated. It had the useful property of actually gaining strength with temperature, being up to 50 percent stronger at 3,000°F than at room temperature. It had a very low coefficient of thermal expansion, which reduced thermal stress. It also had better damage tolerance than graphite.

Carbon-carbon was a composite. As with other composites, Vought engineers fabricated parts of this material by forming them as layups. Carbon cloth gave a point of departure, produced by oxygen-free pyrolysis of a woven organic fiber such as rayon. Sheets of this fabric, impregnated with phenolic resin, were stacked in a mold to form the layup and then cured in an autoclave. This produced a shape made of laminated carbon cloth phenolic. Further pyrolysis converted the resin to its basic carbon, yielding an all-carbon piece that was highly porous due to the loss of volatiles. It therefore needed densification, which was achieved through multiple cycles of reimpregnation under pressure with an alcohol, followed by further pyrolysis. These cycles continued until the part had its specified density and strength.58
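The densification loop can be pictured as a repeat-until procedure. The sketch below is a toy model, not Vought’s actual process. It assumes, hypothetically, that each reimpregnation and pyrolysis cycle fills a fixed fraction of the remaining gap between the part’s bulk density and a fully dense limit. All densities are illustrative values in g/cm³, and the function name is hypothetical.

```python
# Toy model of carbon-carbon densification: repeat impregnate/pyrolyze cycles
# until a target bulk density is reached. The densities and the fill fraction
# per cycle are hypothetical illustrative values, not process data.


def densification_cycles(initial_density, target_density, full_density,
                         fill_fraction, max_cycles=50):
    """Return the bulk density after each reimpregnation/pyrolysis cycle.

    Assumes each cycle deposits enough carbon to close a fixed fraction of the
    gap between the current density and the fully dense limit.
    """
    if not 0.0 < fill_fraction <= 1.0:
        raise ValueError("fill_fraction must be in (0, 1]")
    if not initial_density < target_density < full_density:
        raise ValueError("need initial < target < full density")
    history = []
    density = initial_density
    while density < target_density:
        if len(history) >= max_cycles:
            raise RuntimeError("target density not reached within max_cycles")
        # One cycle: reimpregnate the porous part, then pyrolyze the impregnant.
        density += fill_fraction * (full_density - density)
        history.append(density)
    return history


if __name__ == "__main__":
    for cycle, d in enumerate(densification_cycles(1.2, 1.6, 1.9, 0.3), start=1):
        print(f"cycle {cycle}: bulk density {d:.3f} g/cm^3")
```

With these hypothetical values, three cycles bring the part from 1.2 to about 1.66 g/cm³. A smaller fill fraction per cycle would require more cycles to reach the same target.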

Researchers at Vought conducted exploratory studies during the early 1960s, investigating resins, fibers, weaves, and coatings. In 1964 they fabricated a Dyna-Soar nose cap of carbon-carbon, with this exercise permitting comparison of the new nose cap with the standard versions that used graphite and zirconia tiles. In 1966 this firm crafted a heat shield for the Apollo afterbody, which lay leeward of the curved ablative front face. A year and a half later the company constructed a wind-tunnel model of a Mars probe that was designed to enter the atmosphere of that planet.59

These exercises did not approach the full-scale development that Dyna-Soar and ASSET had brought to hot structures. They definitely were in the realm of the preliminary. Still, as they went forward along with Lockheed’s work on silica RSI and GE’s studies of mullite, the work at Vought made it clear that carbon-carbon was likely to take its place amid the new generation of thermal-protection materials.

The shuttle’s design specified carbon-carbon for the nose cap and leading edges, and developmental testing was conducted with care. Structural tests exercised their methods of attachment by simulating flight loads up to design limits, with design temperature gradients. Other tests, conducted within an arc-heated facility, determined the thermal responses and hot-gas leakage characteristics of interfaces between the carbon-carbon and RSI.60

Other tests used articles that represented substantial portions of the orbiter. An important test item, evaluated at NASA-Johnson, reproduced a wing leading edge and measured five by eight feet. It had two leading-edge panels of carbon-carbon set side by side, a section of wing structure that included its main spars, and aluminum skin covered with RSI. It had insulated attachments, internal insulation, and interface seals between the carbon-carbon and the RSI. It withstood simulated air loads, launch acoustics, and mission temperature-pressure environments, not once but many times.61

There was no doubt that left to themselves, the panels of carbon-carbon that protected the leading edges would have continued to do so. Unfortunately, they were not left to themselves. During the ascent of Columbia, on 16 January 2003, a large piece of insulating foam detached itself from a strut that joined the external tank to the front of the orbiter. The vehicle at that moment was slightly more than 80 seconds into the flight, traveling at nearly Mach 2.5. This foam struck a carbon-carbon panel and delivered what proved to be a fatal wound.

Ground controllers became aware of this the following day, during a review of high-resolution film taken at the time of launch. The mission continued for two weeks, and in the words of the accident report, investigators concluded that “some localized heating damage would most likely occur during re-entry, but they could not definitively state that structural damage would result.”62

Yet the damage was mortal. Again, in the words of the accident report,

Columbia re-entered Earth’s atmosphere with a pre-existing breach in the leading edge of its left wing.… This breach, caused by the foam strike on ascent, was of sufficient size to allow superheated air (probably exceeding 5,000°F) to penetrate the cavity behind the RCC panel. The breach widened, destroying the insulation protecting the wing’s leading edge support structure, and the superheated air eventually melted the thin aluminum wing spar. Once in the interior, the superheated air began to destroy the left wing.… Finally, over Texas, … the increasing aerodynamic forces the Orbiter experienced in the denser levels of the atmosphere overcame the catastrophically damaged left wing, causing the Orbiter to fall out of control.63

It was not feasible to protect the wing leading edges with a substantially more robust material than carbon-carbon. Even so, three years of effort succeeded in securing the foam, and the shuttle returned to flight in July 2006 with foam that stayed put.

In addition, NASA took advantage of the fact that most shuttle missions were already intended to dock with the International Space Station. It now became a rule that the shuttle could fly only if it were going there, where it could be inspected minutely prior to re-entry and where astronauts could stay, if necessary, until a damaged shuttle was repaired or a new one was brought up. In this fashion, rather than the thermal protection being shaped to fit the needs of the missions, the missions were shaped to fit the requirements of safe thermal protection.64

As early as August 1959, the Flight Dynamics Laboratory at Wright-Patterson Air Force Base launched an in-house study of a small recoverable boost-glide vehicle that was to test hot structures during re-entry. From the outset there was strong interest in problems of aerodynamic flutter. This was reflected in the concept name: ASSET or Aerothermodynamic/elastic Structural Systems Environmental Tests.

an impregnant in LI-1500 compromised its reusability. In contrast to earlier entry vehicle concepts, Star Clipper was large, offering exposed surfaces that were sufficiently blunt to benefit from the Allen-Eggers principle. They had lower temperatures and heating rates,

Jet engines have functioned at speeds as high as Mach 3.3. However, such an engine must accelerate to reach that speed and must remain operable to provide control when decelerating from that speed. Engine designers face the problem of “compressor stall,” which arises because compressors have numerous stages or rows of blades and the forward stages take in more air than the rear stages can accommodate. Gerhard Neumann of General Electric, who solved this problem, states that when a compressor stalls, the airflow pushes forward “with a big bang and the pilot loses all his thrust. It’s violent; we often had blades break off during a stall.”

No other research airplane had ever flown with such thrusters, although the X-1B conducted early preliminary experiments and the X-2 came close to needing them in 1956. During a flight in September of that year, the test pilot Iven Kincheloe took it to 126,200 feet. At that altitude, its aerodynamic controls were useless. Kincheloe
