The Shuttle was one of the last major aircraft to rely almost entirely on wind tunnels for studies of its aerodynamics. There was much interest in an alternative: the use of supercomputers to derive aerodynamic data through solution of the governing equations of airflow, known as the Navier-Stokes equations. Solution of the complete equations was out of the question, for they carried the complete physics of turbulence, with turbulent eddies that spanned a range of sizes covering several orders of magnitude. But during the 1970s, investigators made headway by dropping the terms within these equations that contained viscosity, thereby suppressing turbulence.[629]

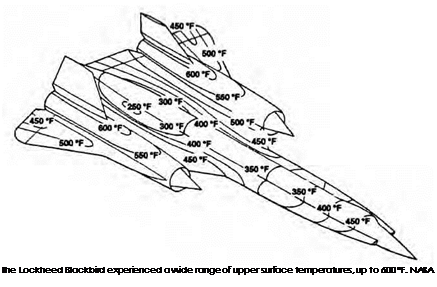

People pursued numerical simulation because it offered hope of overcoming the limitations of wind tunnels. Such facilities usually tested small models that failed to capture important details of the aerodynamics of full-scale aircraft. Other errors arose from tunnel walls and model supports. Hypersonic flight brought its own restrictions. No installation had the power to accommodate a large model, realistic in size, at the velocity and temperatures of reentry.[630]

By piecing together results from specialized facilities, it was possible to gain insights into flows at near-orbital speeds. The Shuttle reentered at Mach 27. NASA Langley had a pair of wind tunnels that used helium, which expands to very high flow velocities. These attained Mach 20, Mach 26, and even Mach 50. But their test models were only a few inches in size, and their flows were very cold and could not duplicate the high temperatures of atmosphere entry. Shock tunnels, which heated and compressed air using shock waves, gave true temperature up to Mach 17 while accommodating somewhat larger models. Yet their flow durations were measured in milliseconds.[631]

During the 1970s, the largest commercially available mainframe computers included the Control Data 7600 and the IBM 370-195.[632] These sufficed to treat complete aircraft—but only at the lowest level of approximation, which used linearized equations and treated the airflow over an airplane as a small disturbance within a uniform free stream. The full Navier-Stokes equations contained 60 partial derivatives; the linearized approximation retained only 3 of these terms. It nevertheless gave good accuracy in computing lift, successfully treating such complex configurations as a Shuttle orbiter mated to its 747. The next level of approximation restored the most important nonlinear terms and treated transonic and hypersonic flows, which were particularly difficult to simulate in wind tunnels. The inadequacies of wind tunnel work had brought such errors as faulty predictions of the location of shock waves along the wings of the C-141, an Air Force transport. In flight test, this plane tended to nose downward, and its design had to be modified at considerable expense.

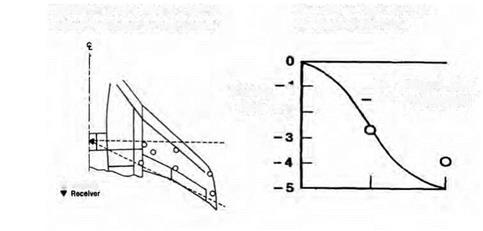

Computers such as the 7600 could not treat complete aircraft in transonic flow, for the equations were more complex and the computation requirements more severe. HiMAT, a highly maneuverable NASA experimental aircraft, flew at Dryden and showed excessive drag at Mach 0.9. Redesign of its wing used a transonic-flow computational code and approached the design point. The same program, used to reshape the wing of the Grumman Gulfstream, gave considerable increases in range and fuel economy while reducing the takeoff distance and landing speed.[633]

During the 1970s, NASA’s most powerful computer was the Illiac IV, at Ames Research Center. It used parallel processing and had 64 processing units, achieving speeds up to 25 million operations per second. Built by Burroughs Corporation with support from the Pentagon, this machine was one of a kind. It entered service at Ames in 1973 and soon showed that it could run flow-simulation codes an order of magnitude more rapidly than a 7600. Indeed, its performance foreshadowed the Cray-1, a true supercomputer that became commercially available only after 1976.

The Illiac IV was a research tool, not an instrument of mainstream Shuttle development. It extended the reach of flow codes, treating three-dimensional inviscid problems while supporting simulations of viscous flows that used approximate equations to model the turbulence.[634] In the realm of Space Shuttle studies, Ames’s Walter Reinhardt used it to run a three-dimensional inviscid code that included equations of atmospheric chemistry. Near peak entry heating, the Shuttle would be surrounded by dissociated air that was chemically reacting and not in chemical equilibrium. Reinhardt’s code treated the full-scale orbiter during entry and gave a fine example of the computational simulation of flows that were impossible to reproduce in ground facilities.[635]

Such exercises gave tantalizing hints of what would be done with computers of the next generation. Still, the Shuttle program was at least a decade too early to use computational simulations both routinely and effectively. NASA therefore used its wind tunnels. The wind tunnel program gave close attention to low-speed flight, which included approach and landing as well as separation from the 747 during the 1977 flight tests of Enterprise.

In 1975, Rockwell built a $1 million model of the orbiter at 0.36 scale, lemon yellow in color and marked with the blue NASA logo. It went into the 40- by 80-foot test section of Ames’s largest tunnel, which was easily visible from the adjacent freeway. It gave parameters for the astronauts’ flight simulators, which previously had used data from models at 3-percent scale. The big one had grooves in its surface that simulated the gaps between thermal protection tiles, permitting assessment of the consequences of the resulting roughness of the skin. It calibrated and tested systems for making aerodynamic measurements during flight test and verified the design of the elevons and other flight control surfaces as well as of their actuators.[636]

Other wind tunnel work strongly influenced design changes that occurred early in development. The most important was the introduction of the lightweight delta wing late in 1972, which reduced the size of the solid boosters and chopped 1 million pounds from the overall weight. Additional results changed the front of the external tank from a cone to an ogive and moved the solid boosters rearward, placing their nozzles farther from the orbiter. The modifications reduced drag, minimized aerodynamic interference on the orbiter, and increased stability by moving the aerodynamic center aft.

The activity disclosed and addressed problems that initially had not been known to exist. Because both the liquid main engines and the solids had nozzles that gimbaled, it was clear that they had enough power to provide control during ascent. Aerodynamic control would not be necessary, and managers believed that the orbiter could set its elevons in a single position through the entire flight to orbit. But work in wind tunnels subsequently showed that aerodynamic forces during ascent would impose excessive loads on the wings. This required elevons to move while in powered flight to relieve these loads. Uncertainties in the wind tunnel data then broadened this requirement to incorporate an active system that prevented overloading the elevon actuators. This system also helped the Shuttle to fly a variety of ascent trajectories, which imposed different elevon loads from one flight to the next.[637]

Much wind tunnel work involved issues of separation: Enterprise from its carrier aircraft, solid boosters from the external tank after burnout. At NASA Ames, a 14-foot transonic tunnel investigated problems of Enterprise and its 747. Using the same equipment, engineers addressed the separation of an orbiter from its external tank. This was supposed to occur in near-vacuum, but it posed aerodynamic problems during an abort.

The solid boosters brought their own special issues and nuances. They had to separate cleanly; under no circumstances could a heavy steel casing strike a wing. Small solid rocket motors, mounted fore and aft on each booster, were to push them away safely. It then was necessary to understand the behavior of their exhaust plumes, for these small motors were to blast into onrushing airflow that could blow their plumes against the orbiter’s sensitive tiles or the delicate aluminum skin of the external tank. Wind tunnel tests helped to define appropriate angles of fire while also showing that a short, sharp burst from the motors was best.[638]

Prior to the first orbital flight in 1981, the program racked up 46,000 wind tunnel hours. This consisted of 24,900 hours for the orbiter, 17,200 for the mated launch configuration, and 3,900 for the carrier aircraft program. During the 9 years from contract award to first flight, this was equivalent to operating a facility 16 hours a day, 6 days a week. Specialized projects demanded unusual effort, such as an ongoing attempt to minimize model-to-model and tunnel-to-tunnel discrepancies. This work alone conducted 28 test series and used 14 wind tunnels.[639]
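The round-the-clock comparison above can be checked with simple arithmetic. The following is a minimal sketch, not part of the original account; it assumes 52 operating weeks per year with no holidays or downtime, and the variable names are illustrative only.

```python
# Wind tunnel hours quoted in the text, broken out by program element.
hours_by_element = {
    "orbiter": 24_900,
    "mated launch configuration": 17_200,
    "carrier aircraft program": 3_900,
}
total_hours = sum(hours_by_element.values())

# Schedule quoted in the text: 9 years, 16 hours a day, 6 days a week.
# Assumption (not in the source): 52 operating weeks per year.
YEARS = 9
HOURS_PER_DAY = 16
DAYS_PER_WEEK = 6
WEEKS_PER_YEAR = 52

equivalent_hours = YEARS * WEEKS_PER_YEAR * DAYS_PER_WEEK * HOURS_PER_DAY

print(f"Total wind tunnel hours:  {total_hours:,}")
print(f"Single-facility schedule: {equivalent_hours:,}")
print(f"Ratio: {total_hours / equivalent_hours:.3f}")
```

Under that assumption the three components sum to the quoted 46,000 hours, and the single-facility schedule comes to 44,928 hours, within about 3 percent of the total.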

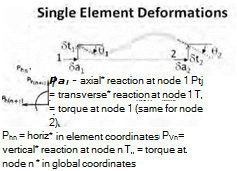

Structural tests complemented the work in aerodynamics. The mathematics of structural analysis was well developed, with computer programs called NASTRAN that dealt with strength under load while addressing issues of vibration, bending, and flexing. The equations of NASTRAN were linear and algebraic, which meant that in principle they were easy to solve. The problem was that there were too many of them, for the most detailed mathematical model of the orbiter’s structure had some 50,000 degrees of freedom. Analysts introduced abridged versions that cut this number to 1,000 and then relied on experimental tests for data that could be compared with the predictions of the computers.[640]

There were numerous modes of vibration, with frequencies that changed as the Shuttle burned its propellants. Knowledge of these frequencies was essential, particularly in dealing with “pogo.” This involved a longitudinal oscillation like that of a pogo stick, with propellant flowing in periodic surges within its main feed line. Such surges arose when their frequency matched that of one of the structural modes, producing resonance. The consequent variations in propellant-flow rate then caused the engine thrust to oscillate at that same rate. This turned the engines into sledgehammers, striking the vehicle structure at its resonant frequency, and made the pogo stronger. It weakened only when consumption of propellant brought a further change in the structural frequency that broke the resonance, allowing the surges to die out.

Pogo was common; it had been present on earlier launch vehicles. It had brought vibrations with acceleration of 9 g’s in a Titan II, which was unacceptably severe. Engineering changes cut this to below 0.25 g, which enabled this rocket to launch the manned Gemini spacecraft. Pogo reappeared in Apollo during the flight of a test Saturn V in 1968. For the Shuttle, the cure was relatively simple, calling for installation of a gas-filled accumulator within the main oxygen line. This damped the pogo oscillations, though design of this accumulator called for close understanding of the pertinent frequencies.[641]

The most important structural tests used actual flight hardware, including the orbiter Enterprise and STA-099, a full-size test article that later became the Challenger. In 1978, Enterprise went to NASA Marshall, where the work now included studies on the external tank. For vibrational tests, engineers assembled a complete Shuttle by mating Enterprise to such a tank and to a pair of dummy solid boosters. One problem that these models addressed came at lift-off. The ignition of the three main engines imposes a sudden load of more than 1 million pounds of thrust. This force bends the solid boosters, placing considerable stress at their forward attachments to the tank. If the solid boosters were to ignite at that moment, their thrust would add to the stress.

To reduce the force on the attachment, analysts took advantage of the fact that the solid boosters would not only bend but would sway back and forth somewhat slowly, like an upright fishing rod. The strain on the attachment would increase and decrease with the sway, and it was possible to have the solid boosters ignite at an instant of minimum load. This called for delaying their ignition by 2.7 seconds, which cut the total load by 25 percent. The main engines fired during this interval, which consumed propellant, cutting the payload by 600 pounds. Still, this was acceptable.[642]

While Enterprise underwent vibration tests, STA-099 showed the orbiter’s structural strength by standing up to applied forces. Like a newborn baby that lacks hair, this nascent form of Challenger had no thermal-protection tiles. Built of aluminum, it looked like a large fighter plane. For the structural tests, tiles were not only unnecessary; they were counterproductive. The tiles had no structural strength of their own that had to be taken into account, and they would have received severe damage from the hydraulic jacks that applied the loads and forces.

STA-099 and Columbia had both been designed to accommodate a set of loads defined by a database designated 5.1. In 1978, there was a new database, 5.4, and STA-099 had to withstand its loads without acquiring strains or deformations that would render it unfit for flight. Yet in an important respect, this vehicle was untestable; it was not possible to validate the strength of its structural design merely by applying loads with those jacks. The Shuttle structure had evolved under such strong emphasis on saving weight that it was necessary to take full account of thermal stresses that resulted from temperature differences across structural elements during reentry. No facility existed that could impose thermal stresses on so large an object as STA-099, for that would have required heating the entire vehicle.

STA-099 and Columbia had both been designed to withstand ultimate loads equal to 140 percent of those in the 5.1 database. The structural tests on STA-099 now had to validate this safety factor for the new 5.4 database. Unfortunately, a test to 140 percent of the 5.4 loads threatened to produce permanent deformations in the structure. This was unacceptable, for STA-099 was slated for refurbishment into Challenger. Moreover, because thermal stresses could not be reproduced over the entire vehicle, a test to 140 percent would sacrifice the prospect of building Challenger while still leaving questions as to whether an orbiter could meet the safety factor of 140 percent.

NASA managers shaped the tests accordingly. For the entire vehicle, they used the jacks to apply stresses only up to 120 percent of the 5.4 loads. When the observed strains proved to match closely the values predicted by stress analysis, the 140 percent safety factor was deemed to be validated. In addition, the forward fuselage underwent the most severe aerodynamic heating, yet it was relatively small. It was subjected to a combination of thermal and mechanical loads that simulated the complete reentry stress environment in at least this limited region. STA-099 then was given a detailed and well-documented posttest inspection. After these tests, STA-099 was readied as the flight vehicle Challenger, joining Columbia as part of NASA’s growing Shuttle fleet.[643]

Much of the history of aircraft design in the postwar era is encapsulated by the remarkable work of NACA-NASA engineer Richard T. Whitcomb. Whitcomb, a transonic and supersonic pioneer, gave to aeronautics the wasp-waisted area ruled transonic airplane, the graceful and highly efficient supercritical wing, and the distinctive wingtip winglet. But he also contributed greatly to the development of advanced wind tunnel design and testing. His life offers insights into the process of aeronautical creativity and the role of the genius figure in advancing flight.

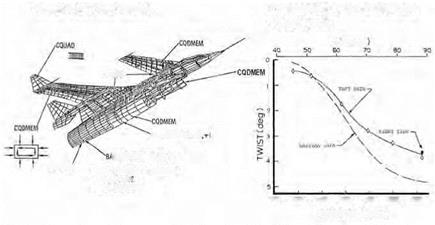

Comparison Ground Test Data: HiMAT Wing with Electro-Optical Deflection Measurement System