Ablation

In 1953, on the eve of the Atlas go-ahead, investigators were prepared to consider several methods for thermal protection of its nose cone. The simplest was the heat sink, with a heat shield of thick copper absorbing the heat of re-entry. An alternative approach, the hot structure, called for an outer covering of heat-resistant shingles that were to radiate away the heat. A layer of insulation, inside the shingles, was to protect the primary structure. The shingles, in turn, overlapped and could expand freely.

A third approach, transpiration cooling, sought to take advantage of the light weight and high heat capacity of boiling water. The nose cone was to be filled with this liquid; strong g-forces during deceleration in the atmosphere were to press the water against the hot inner skin. The skin was to be porous, with internal steam pressure forcing the fluid through the pores and into the boundary layer. Once injected, steam was to carry away heat. It would also thicken the boundary layer, reducing its temperature gradient and hence its rate of heat transfer. In effect, the nose cone was to stay cool by sweating.41

Still, each of these approaches held difficulties. Though potentially valuable, transpiration cooling was poorly understood as a topic for design. The hot-structure concept raised questions of suitably refractory metals along with the prospect of losing the entire nose cone if a shingle came off. The heat-sink approach was likely to lead to high weight. Even so, it seemed to be the most feasible way to proceed, and early Atlas designs specified use of a heat-sink nose cone.42

The Army had its own activities. Its missile program was separate from that of the Air Force and was centered in Huntsville, Alabama, with the redoubtable Wernher von Braun as its chief. He and his colleagues came to Huntsville in 1950 and developed the Redstone missile as an uprated V-2. It did not need thermal protection, but the next missile would have longer range and would certainly need it.43

Von Braun was an engineer. He did not set up a counterpart of Avco Research Laboratory, but his colleagues nevertheless proceeded to invent their way toward a nose cone. Their concern lay at the tip of a rocket, but their point of departure came at the other end. They were accustomed to steering their missiles by using jet vanes, large tabs of heat-resistant material that dipped into the exhaust. These vanes then deflected the exhaust, changing the direction of flight. Von Braun’s associates thus had long experience in testing materials by placing them within the blast of a rocket engine. This practice carried over to their early nose-cone work.44

The V-2 had used vanes of graphite. In November 1952, these experimenters began testing new materials, including ceramics. They began working with nose-cone models late in 1953. In July 1954 they tested their first material of a new type: a reinforced plastic, initially a hard melamine resin strengthened with glass fiber. New test facilities entered service in June 1955, including a rocket engine with thrust of 20,000 pounds and a jet diameter of 14.5 inches.45

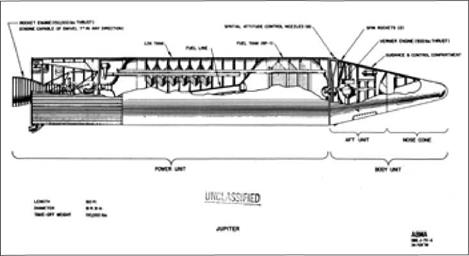

The pace accelerated after November of that year, as Von Braun won approval from Defense Secretary Charles Wilson to proceed with development of his next missile. This was Jupiter, with a range of 1,500 nautical miles.46 It thus was markedly less demanding than Atlas in its thermal-protection requirements, for it was to re-enter the atmosphere at Mach 15 rather than Mach 20 and higher. Even so, the Huntsville group stepped up its work by introducing new facilities. These included a rocket engine of 135,000 pounds of thrust for use in nose-cone studies.

The effort covered a full range of thermal-protection possibilities. Transpiration cooling, for one, raised unpleasant new issues. Convair fabricated test nose cones with water tanks that had porous front walls. The pressure in a tank could be adjusted to deliver the largest flow of steam when the heat flux was greatest. But this technique led to hot spots, where inadequate flow brought excessive temperatures. Transpiration thus fell by the wayside.

Heat sink drew attention, with graphite holding promise for a time. It was light in weight and could withstand high temperatures. But it also was a good heat conductor, which raised problems in attaching it to a substructure. Blocks of graphite also contained voids and other defects, which made them unusable.

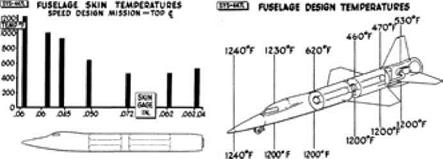

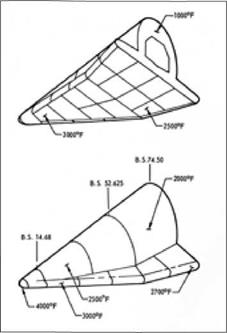

By contrast, hot structures held promise. Researchers crafted lightweight shingles of tungsten and molybdenum backed by layers of polished corrugated steel and aluminum, to provide thermal insulation along with structural support. When the shingles topped 3,250°F, the innermost layer stayed cool and remained below 200°F. Clearly, hot structures had a future.

The initial work with a reinforced plastic, in 1954, led to many more tests of similar materials. Engineers tested such resins as silicones, phenolics, melamines, Teflon, epoxies, polyesters, and synthetic rubbers. Filler materials included soft glass, fibers of silicon dioxide and aluminum silicate, mica, quartz, asbestos, nylon, graphite, beryllium, beryllium oxide, and cotton.

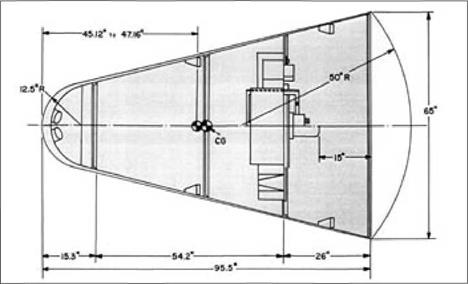

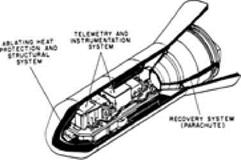

Jupiter missile with ablative nose cone. (U.S. Army)

Fiber-reinforced polymers proved to hold particular merit. The studies focused on plastics reinforced with glass fiber, with a commercially-available material, Micarta 259-2, demonstrating noteworthy promise. The Army stayed with this choice as it moved toward flight test of subscale nose cones in 1957. The first one used Micarta 259-2 for the plastic, with a glass cloth as the filler.47

In this fashion the Army ran well ahead of the Air Force. Yet the Huntsville work did not influence the Atlas effort, and the reasons ran deeper than interservice rivalry. The relevance of that work was open to question because Atlas faced a far more demanding re-entry environment. In addition, Jupiter faced competition from Thor, an Air Force missile of similar range. It was highly likely that only one would enter production, so Air Force designers could not merely become apt pupils of the Army. They had to do their own work, seeking independent approaches and trying to do better than Von Braun.

Amid this independence, George Sutton came to the re-entry problem. He had received his Ph.D. at Caltech in 1955 at age 27, jointly in mechanical engineering and physics. His only experience within the aerospace industry had been a summer job at the Jet Propulsion Laboratory, but he jumped into re-entry with both feet after taking his degree. He joined Lockheed and became closely involved in studying materials suitable for thermal protection. Then he was recruited by General Electric, leaving sunny California and arriving in snowy Schenectady, New York, early in 1956.

Heat sinks for Atlas were ascendant at that time, with Lester Lees’s heat-transfer theory appearing to give an adequate account of the thermal environment. Sutton was aware of the issues and wrote a paper on heat-sink nose cones, but his work soon led him in a different direction. There was interest in storing data within a small capsule that would ride with a test nose cone and that might survive re-entry if the main cone were to be lost. This capsule needed its own thermal protection, and it was important to achieve light weight. Hence it could not use a heat sink. Sutton’s management gave him a budget of $75,000 to try to find something more suitable.48

This led him to re-examine the candidate materials that he had studied at Lockheed. He also learned that other GE engineers were working on a related problem. They had built liquid-propellant rocket engines for the Army’s Hermes program, with these missiles being steered by jet vanes in the fashion of the V-2 and Redstone. The vanes were made from alternating layers of glass cloth and thermosetting resins. They had become standard equipment on the Hermes A-3, but some of them failed due to delamination. Sutton considered how to avoid this:

“I theorized that heating would char the resin into a carbonaceous mass of relatively low strength. The role of the fibers should be to hold the carbonaceous char to virgin, unheated substrate. Here, low thermal conductivity was essential to minimize the distance from the hot, exposed surface to the cool substrate, to minimize the mass of material that had to be held by the fibers as well as the degradation of the fibers. The char itself would eventually either be vaporized or be oxidized either by boundary layer oxygen or by CO2 in the boundary layer. The fibers would either melt or also vaporize. The question was how to fabricate the material so that the fibers interlocked the resin, which was the opposite design philosophy to existing laminates in which the resin interlocks the fibers. I believed that a solution might be the use of short fibers, randomly oriented in a soup of resin, which was then molded into the desired shape. I then began to plan the experiments to test this hypothesis.”49

Sutton had no pipeline to Huntsville, but his plan echoed that of Von Braun. He proceeded to fabricate small model nose cones from candidate fiber-reinforced plastics, planning to test them by immersion in the exhaust of a rocket engine. GE was developing an engine for the first stage of the Vanguard program; prototypes were at hand, along with test stands. Sutton arranged for an engine to produce an exhaust that contained free oxygen to achieve oxidation of the carbon-rich char.

He used two resins along with five types of fiber reinforcement. The best performance came with the use of Refrasil reinforcement, a silicon-dioxide fiber. Both resins yielded composites with a heat capacity of 6,300 BTU per pound or greater. This was astonishing. The materials had a density of 1.6 times that of water. Yet they absorbed more than six times as much heat, pound for pound, as boiling water!50
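The "more than six times" comparison can be checked with a quick calculation. The sketch below assumes the benchmark is water's latent heat of vaporization alone, taken as roughly 970 BTU per pound at atmospheric pressure. That figure and the names used here are illustrative assumptions, not values from the text.

```python
# Rough check of the "more than six times" comparison in the text.
# Assumption (not from the text): boiling water absorbs about 970 BTU/lb
# as latent heat of vaporization at atmospheric pressure.
WATER_LATENT_HEAT_BTU_PER_LB = 970.0

# Figure quoted in the text for the fiber-reinforced composites.
COMPOSITE_HEAT_BTU_PER_LB = 6300.0


def heat_ratio(composite: float, water: float) -> float:
    """Return how many times more heat the composite absorbs per pound."""
    return composite / water


ratio = heat_ratio(COMPOSITE_HEAT_BTU_PER_LB, WATER_LATENT_HEAT_BTU_PER_LB)
print(f"Composite absorbs about {ratio:.1f} times as much heat per pound")
```

Under that assumption the ratio comes out between six and seven, consistent with the claim. Counting the sensible heat needed to bring the water to a boil would lower the ratio somewhat.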

Here was a new form of thermal protection: ablation. An ablative heat shield could absorb energy through latent heat, when melting or evaporating, and through sensible heat, with its temperature rise. In addition, an outward flow of ablating volatiles thickened the boundary layer, which diminished the heat flux. Ablation promised all the advantages of transpiration cooling, within a system that could be considerably lighter and yet more capable.51

Sutton presented his experimental results in June 1957 at a technical conference held at the firm of Ramo-Wooldridge in Los Angeles. This company was providing technical support to the Air Force’s Atlas program management. Following this talk, George Solomon, one of that firm’s leading scientists, rose to his feet and stated that ablation was the solution to the problem of thermal protection.

The Army thought so too. It had invented ablation on its own, considerably earlier and amid far deeper investigation. Indeed, at the moment when Sutton gave his talk, Von Braun was only two months away from a successful flight test of a subscale nose cone. People might argue whether the Soviets were ahead of the United States in missiles, but there was no doubt that the Army was ahead of the Air Force in nose cones. Jupiter was already slated for an ablative cone, but Thor was to use heat sink, as was the intercontinental Atlas.

Already, though, new information was available concerning transition from laminar to turbulent flow over a nose cone. Turbulent heating would be far more severe, and these findings showed that copper, the best heat-sink material, was inadequate for an ICBM. Materials testing now came to the forefront, and this work needed new facilities. A rocket-engine exhaust could reproduce the rate of heat transfer, but in Kantrowitz’s words, “a rocket is not hot enough.”52 It could not duplicate the temperatures of re-entry.

A shock tube indeed gave a suitably hot flow, but its duration of less than a millisecond was hopelessly inadequate for testing ablative materials. Investigators needed a new type of wind tunnel that could produce a continuous flow, but at temperatures far greater than were available. Fortunately, such an installation did not have to reproduce the hypersonic Mach numbers of re-entry; it sufficed to duplicate the necessary temperatures within the flow. The instrument that did this was the arc tunnel.

It heated the air with an electric arc, which amounted to a man-made stroke of lightning. Such arcs were in routine use in welding; Avco’s Thomas Brogan noted that they reached 6500 K, “a temperature which would exist at the [tip] of a blunt body flying at 22,000 feet per second.” In seeking to develop an arc-heated wind tunnel, a point of departure lay in West Germany, where researchers had built a “plasma jet.”53

This device swirled water around a long carbon rod that served as the cathode. The motion of the water helped to keep the arc focused on the anode, which was also of carbon and which held a small nozzle. The arc produced its plasma as a mix of very hot steam and carbon vapor, which was ejected through the nozzle. This invention achieved pressures of 50 atmospheres, with the plasma temperature at the nozzle exit being measured at 8000 K. The carbon cathode eroded relatively slowly, while the water supply was easily refilled. The plasma jet therefore could operate for fairly long times.54

At NACA-Langley, an experimental arc tunnel went into operation in May 1957. It differed from the German plasma jet by using an electric arc to heat a flow of air, nitrogen, or helium. With a test section measuring only seven millimeters square, it was a proof-of-principle instrument rather than a working facility. Still, its plasma temperatures ranged from 5800 to 7000 K, which was well beyond the reach of a conventional hypersonic wind tunnel.55

At Avco, Kantrowitz paid attention when he heard the word “plasma.” He had been studying such ionized gases ever since he had tried to invent controlled fusion. His first arc tunnel was rated only at 130 kilowatts, a limited power level that restricted the simulated altitude to between 165,000 and 210,000 feet. Its hot plasma flowed from its nozzle at Mach 3.4, but when this flow came to a stop on striking samples of quartz, the temperature corresponded to flight velocities as high as 21,000 feet per second. Tests showed good agreement between theory and experiment, with measured surface temperatures of 2700 K falling within three percent of calculated values. The investigators concluded that opaque quartz “will effectively absorb about 4000 BTU per pound for ICBM and [intermediate-range] trajectories.”56

In Huntsville, Von Braun’s colleagues found their way as well to the arc tunnel. They also learned of the initial work in Germany. In addition, the small California firm of Plasmadyne acquired such a device and then performed experiments under contract to the Army. In 1958 Rolf Buhler, a company scientist, discovered that when he placed a blunt rod of graphite in the flow, the rod became pointed. Other investigators attributed this result to the presence of a cool core in the arc-heated jet, but Sutton succeeded in deriving this observed shape from theory.

This immediately raised the prospect of nose cones that after all might be sharply pointed rather than blunt. Such re-entry bodies would not slow down in the upper atmosphere, perhaps making themselves tempting targets for antiballistic missiles, but would continue to fall rapidly. Graphite still had the inconvenient features noted previously, but a new material, pyrolytic graphite, promised to ease the problem of its high thermal conductivity.

Pyrolytic graphite was made by chemical vapor deposition. One placed a temperature-resistant form in an atmosphere of gaseous hydrocarbons. The hot surface broke up the gas molecules, a process known as pyrolysis, and left carbon on the surface. The thermal conductivity then was considerably lower in a direction normal to the surface than when parallel to it. The low value of this conductivity, in the normal direction, made such graphite attractive.57

Having whetted their appetites with the 130-kilowatt facility, Avco went on to build one that was two orders of magnitude more powerful. It used a 15-megawatt power supply and obtained this from a bank of 2,000 twelve-volt truck batteries, with motor-generators to charge them. They provided direct current for run times of up to a minute and could be recharged in an hour.58
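The figures in this paragraph can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in the text, plus one labeled assumption: that every battery carries an equal share of the load at its nominal 12 volts, ignoring voltage sag and losses. The variable names are illustrative.

```python
# Figures quoted in the text.
SMALL_TUNNEL_WATTS = 130e3   # first Avco arc tunnel, 130 kilowatts
LARGE_TUNNEL_WATTS = 15e6    # later facility, 15 megawatts
BATTERY_COUNT = 2000
BATTERY_VOLTS = 12.0

# How much more powerful the second facility was.
power_ratio = LARGE_TUNNEL_WATTS / SMALL_TUNNEL_WATTS

# Equal share of the load carried by each battery.
watts_per_battery = LARGE_TUNNEL_WATTS / BATTERY_COUNT

# Assumption (not from the text): each battery delivers its share at a
# nominal 12 V, with no allowance for voltage sag or wiring losses.
amps_per_battery = watts_per_battery / BATTERY_VOLTS

print(f"Power ratio: about {power_ratio:.0f}x")
print(f"Per battery: {watts_per_battery:.0f} W, about {amps_per_battery:.0f} A")
```

The ratio of roughly 115 matches the "two orders of magnitude" in the text. The per-battery load of 7.5 kilowatts, or several hundred amperes, suggests why run times were limited to about a minute.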

With this, Avco added the high-power arc tunnel to the existing array of hypersonic flow facilities. These included aerodynamic wind tunnels such as Becker’s, along with plasma jets and shock tubes. And while the array of ground installations proliferated, the ICBM program was moving toward a different kind of test: full-scale flight.

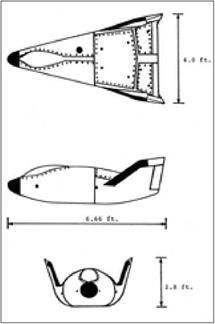

As early as August 1959, the Flight Dynamics Laboratory at Wright-Patterson Air Force Base launched an in-house study of a small recoverable boost-glide vehicle that was to test hot structures during re-entry. From the outset there was strong interest in problems of aerodynamic flutter. This was reflected in the concept name: ASSET or Aerothermodynamic/elastic Structural Systems Environmental Tests.
