Stellar Cataclysm

The formation of a black hole is just one half of the story when a massive star dies. As the star reaches the end of its life and all fusion energy sources are exhausted, it suffers a dramatic gravitational collapse. The crushing force of in-falling gas squeezes the core into a black hole, but much of the mass rebounds outward and the outflowing gas meets more in-falling gas and heats it momentarily to billions of degrees. Heavy elements like gold and silver and platinum are created in that thermonuclear blast and they surf into space to become part of future generations of stars.34 This is a supernova.

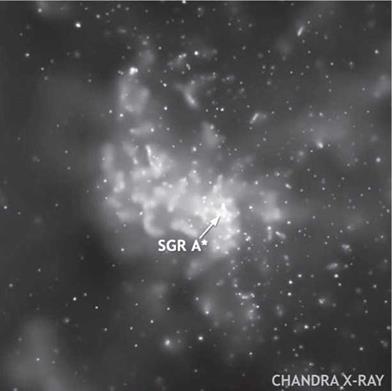

For centuries and millennia after the supernova event, outrushing gas interacts with the interstellar medium to form a delicate filigree of nebulosity and filaments of glowing gas. Supernova remnants are most clearly seen in X-rays, and with Chandra their structure can be studied in great detail. The spectrometers on Chandra have mapped the ejection of iron and silicon and oxygen and nitrogen, elements essential for planet-building and for life.35 Cosmic alchemy is part of our story since every carbon and oxygen atom in every person was once part of a previous generation of stars. The imagers on Chandra have observed the shock-heated filaments in enough detail to model the process that accelerates particles to within a fraction of a percent of the speed of light (plate 18).

Chandra has observed supernova remnants at different stages of their evolution and used them to piece together the expansion history of a typical dying star. This research has an intriguing connection to human history. Tycho’s supernova of 1572 in the constellation of Cassiopeia and the Crab Nebula supernova of 1054 in the constellation of Taurus were two of the brightest “guest” stars of the last millennium, visible to the naked eye and in the historical record of many cultures.36 Recently, Chandra data led to a revised age for the supernova remnant RCW 86, and the detonation date of AD 185 perfectly matches the notation of a guest star in records of the Chinese court astronomers, making this the first supernova in recorded human history.37

At the center of a supernova remnant is either a black hole or a neutron star. Neutron stars aren’t as exotic as black holes, but they are quite bizarre. The pressure of gravity forces protons to merge with electrons and create pure neutron material; without the electrical repulsion to keep the particles separate they are as close together as marbles in a jar. A neutron star is like a giant atomic nucleus with an atomic number of 10⁵⁷. Chandra can see neutron stars via the high-energy phenomena spawned by their intense magnetic fields. A neutron star has a magnetic field of about 10¹² gauss, equivalent to 10 billion refrigerator magnets. Neutron stars spin at typical speeds of 60 rpm and sometimes as quickly as 45,000 rpm. Imagine something a million times the mass of the Earth that’s the size of a city and spinning nearly a thousand times a second! Chandra images of dozens of neutron stars have revealed glowing clouds of magnetized particles blowing away from the neutron star like a wind.38
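
The figures quoted for neutron stars can be checked with a little arithmetic. The short Python sketch below is only a rough order-of-magnitude exercise: the neutron star mass of 1.4 times that of the Sun and the refrigerator magnet strength of 100 gauss are assumed round values that do not come from the text, and the function names are made up for illustration.

```python
# Order-of-magnitude checks on the neutron star figures quoted above.
# All constants are rounded, and two of them are assumptions (see comments).

SOLAR_MASS_KG = 1.989e30         # mass of the Sun
NEUTRON_MASS_KG = 1.675e-27      # mass of a single neutron
NEUTRON_STAR_SOLAR_MASSES = 1.4  # ASSUMED typical neutron star mass
FRIDGE_MAGNET_GAUSS = 100.0      # ASSUMED strength of a refrigerator magnet


def neutron_count(solar_masses=NEUTRON_STAR_SOLAR_MASSES):
    """Approximate number of neutrons in a star of the given mass."""
    return solar_masses * SOLAR_MASS_KG / NEUTRON_MASS_KG


def rpm_to_rotations_per_second(rpm):
    """Convert revolutions per minute to rotations per second."""
    return rpm / 60.0


def fridge_magnet_equivalent(field_gauss):
    """How many assumed refrigerator magnets add up to this field strength."""
    return field_gauss / FRIDGE_MAGNET_GAUSS


if __name__ == "__main__":
    print(f"neutrons in the star: {neutron_count():.1e}")
    print(f"slow spin, 60 rpm: {rpm_to_rotations_per_second(60):.0f} per second")
    print(f"fast spin, 45,000 rpm: {rpm_to_rotations_per_second(45_000):.0f} per second")
    print(f"magnet equivalents of 1e12 gauss: {fridge_magnet_equivalent(1e12):.0e}")
```

Under these assumptions the neutron count comes out at a little over 10⁵⁷, the fastest spin is 750 rotations per second (hence "nearly a thousand"), and the magnetic field matches 10 billion refrigerator magnets.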