We consider the estimated LACE-ACES performance very optimistic. In several cases complete failure of the project would result from any significant performance degradation from the present estimates…. Obviously the advantages claimed for the system will not be available unless air can be condensed and purified very rapidly during flight. The figures reported indicate that about 0.8 ton of air per second would have to be processed.

In conventional, i.e., ordinary commercial equipment, this would require a distillation column having a cross section on the order of 500 square feet…. It is proposed to increase the capacity of equipment of otherwise conventional design by using centrifugal force. This may be possible, but as far as the Committee knows this has never been accomplished.

On other propulsion systems:

When reduced to a common basis and compared with the best of current technology, all assumed large advances in the state-of-the-art…. On the basis of the best of current technology, none of the schemes could deliver useful payloads into orbits.

On vehicle design:

We are gravely concerned that too much emphasis may be placed on the more glamorous aspects of the Aerospace Plane resulting in neglect of what appear to be more conventional problems. The achievement of low structural weight is equally important… as is the development of a highly successful propulsion system.

Regarding scramjets, the panel was not impressed with claims that supersonic combustion had been achieved in existing experiments:

These engine ideas are based essentially upon the feasibility of diffusion deflagration flames in supersonic flows. Research should be immediately initiated using existing facilities… to substantiate the feasibility of this type of combustion.

The panelists nevertheless gave a thumbs-up to the Aerospaceplane effort as a continuing program of research. Their report urged a broadening of topics, placing greater emphasis on scramjets, structures and materials, and two-stage-to-orbit configurations. The array of proposed engines was “all sufficiently interesting so that research on all of them should be continued and emphasized.”65

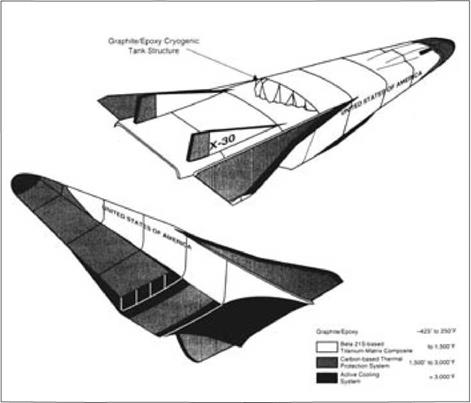

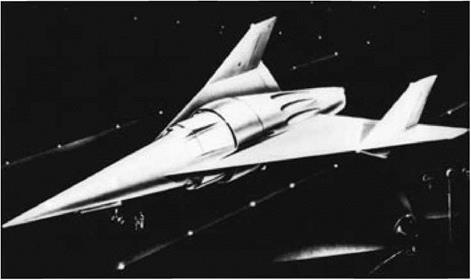

As the studies went forward in the wake of this review, new propulsion concepts continued to flourish. Lockheed was in the forefront. This firm had initiated company-funded work during the spring of 1959 and had a well-considered single-stage concept two years later. An artist’s rendering showed nine separate rocket nozzles at its tail. The vehicle also mounted four ramjets, set in pods beneath the wings.

Convair’s Space Plane had used separated nitrogen as a propellant, heating it in the LACE precooler and allowing it to expand through a nozzle to produce thrust. Lockheed’s Aerospace Plane turned this nitrogen into an important system element, with specialized nitrogen rockets delivering 125,000 pounds of thrust. This certainly did not overcome the drag produced by air collection, which would have turned the vehicle into a perpetual motion machine. However, the nitrogen rockets made a valuable contribution.66

Lockheed’s Aerospaceplane concept. The alternate hypersonic in-flight refueling system approach called for propellant transfer at Mach 6. (Art by Dennis Jenkins)


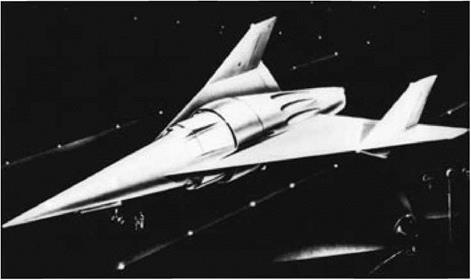

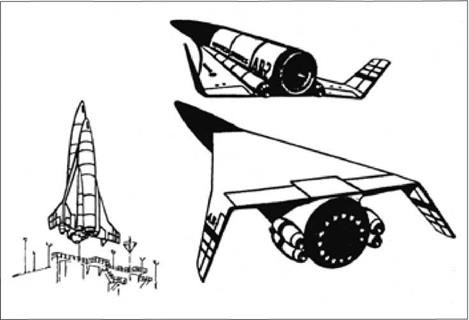

Republic’s Aerospaceplane concept showed extensive engine-airframe integration. (Republic Aviation)


For takeoff, Lockheed expected to use Turbo-LACE. This was a LACE variant that sought again to reduce the inherently hydrogen-rich operation of the basic system. Rather than cool the air until it was liquid, Turbo-LACE chilled it deeply but allowed it to remain gaseous. Being very dense, it could pass through a turbocompressor and reach pressures in the hundreds of psi. This saved hydrogen because less was needed to accomplish this cooling. The Turbo-LACE engines were to operate at chamber pressures of 200 to 250 psi, well below the internal pressure of standard rockets but high enough to produce 300,000 pounds of thrust by using turbocompressed oxygen.67

Republic Aviation continued to emphasize the scramjet. A new configuration broke with the practice of mounting these engines within pods, as if they were turbojets. Instead, this design introduced the important topic of engine-airframe integration by setting forth a concept that amounted to a single enormous scramjet fitted with wings and a tail. A conical forward fuselage served as an inlet spike. The inlets themselves formed a ring encircling much of the vehicle. Fuel tankage filled most of its capacious internal volume.

This design study took two views regarding the potential performance of its engines. One concept avoided the use of LACE or ACES, assuming again that this craft could scram all the way to orbit. Still, it needed engines for takeoff, so turboramjets were installed, with both Pratt & Whitney and General Electric providing candidate concepts. Republic thus was optimistic at high Mach but conservative at low speed.

The other design introduced LACE and ACES both for takeoff and for final ascent to orbit and made use of yet another approach to derichening the hydrogen. This was SuperLACE, a concept from Marquardt that placed slush hydrogen rather than standard liquid hydrogen in the main tank. The slush consisted of liquid that contained a considerable amount of solidified hydrogen. It therefore stood at the freezing point of hydrogen, 14 K, which was markedly lower than the 21 K of liquid hydrogen at the boiling point.68

SuperLACE reduced its use of hydrogen by shunting part of the flow, warmed in the LACE heat exchanger, into the tank. There it mixed with the slush, chilling again to liquid while melting some of the hydrogen ice. Careful control of this flow ensured that while the slush in the tank gradually turned to liquid and rose toward the 21 K boiling point, it did not get there until the air-collection phase of a flight was finished. As an added bonus, the slush was noticeably denser than the liquid, enabling the tank to hold more fuel.69

LACE and ACES remained in the forefront, but there also was much interest in conventional rocket engines. Within the Aerospaceplane effort, this approach took the name POBATO, Propellants On Board At Takeoff. These rocket-powered vehicles gave points of comparison for the more exotic types that used LACE and scramjets, but here too people used their imaginations. Some POBATO vehicles ascended vertically in a classic liftoff, but others rode rocket sleds along a track while angling sharply upward within a cradle.70

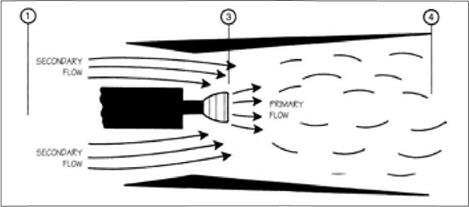

In Denver, the Martin Company took rocket-powered craft as its own, for this firm expected that a next-generation launch vehicle of this type could be ready far sooner than one based on advanced airbreathing engines. Its concepts used vertical liftoff, while giving an opening for the ejector rocket. Martin introduced a concept of its own called RENE (Rocket Engine Nozzle Ejector) and conducted experiments at the Arnold Engineering Development Center. These tests went forward during 1961, using a liquid rocket engine with a nozzle of 5-inch diameter set within a shroud of 17-inch width. Test conditions corresponded to flight at Mach 2 and 40,000 feet, with the shrouds or surrounding ducts having various lengths to achieve increasingly thorough mixing. The longest duct gave the best performance, increasing the rated 2,000-pound thrust of the rocket to as much as 3,100 pounds.71
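Ejector results are usually stated as a thrust augmentation ratio, the shrouded thrust divided by the bare rocket's thrust. The short sketch below applies that definition to the two figures quoted for the 1961 runs; the function name is illustrative, and the calculation assumes both thrusts refer to the same simulated flight condition.

```python
def augmentation_ratio(augmented_thrust_lbf: float, rocket_thrust_lbf: float) -> float:
    """Ratio of ejector (shrouded) thrust to the bare rocket's rated thrust."""
    return augmented_thrust_lbf / rocket_thrust_lbf


# Figures quoted for the 1961 AEDC RENE runs (simulated Mach 2, 40,000 ft).
rocket_thrust = 2_000.0      # rated thrust of the bare rocket, lbf
best_duct_thrust = 3_100.0   # best result, obtained with the longest duct, lbf

ratio = augmentation_ratio(best_duct_thrust, rocket_thrust)
gain_percent = (ratio - 1.0) * 100.0
print(f"augmentation ratio = {ratio:.2f}, thrust gain = {gain_percent:.0f}%")
```

This prints an augmentation ratio of 1.55, a gain of about 55 percent from entraining and mixing outside air in the duct.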

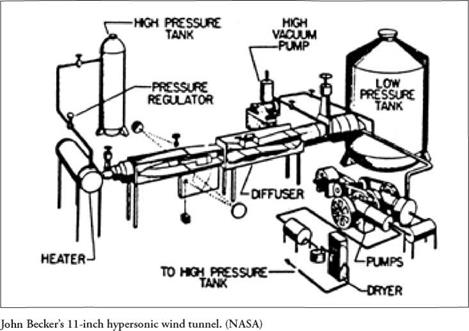

A complementary effort at Marquardt sought to demonstrate the feasibility of LACE. The work started with tests of heat exchangers built by Garrett AiResearch that used liquid hydrogen as the working fluid. A company-made film showed dark liquid air coming down in a torrent, as seen through a porthole. Further tests used this liquefied air in a small thrust chamber. The arrangement made no attempt to derichen the hydrogen flow; even though it ran very fuel-rich, it delivered up to 275 pounds of thrust. As a final touch, Marquardt crafted a thrust chamber of 18-inch diameter and simulated LACE operation by feeding it with liquid air and gaseous hydrogen from tanks. It showed stable combustion, delivering thrust as high as 5,700 pounds.72

Within the Air Force, the SAB’s Ad Hoc Committee on Aerospaceplane continued to provide guidance along with encouraging words. A review of July 1962 was less skeptical in tone than the one of 18 months earlier, citing “several attractive arguments for a continuation of this program at a significant level of funding”:

It will have the military advantages that accrue from rapid response times and considerable versatility in choice of landing area. It will have many of the advantages that have been demonstrated in the X-15 program, namely, a real pay-off in rapidly developing reliability and operational pace that comes from continuous re-use of the same hardware again and again. It may turn out in the long run to have a cost effectiveness attractiveness… the cost per pound may eventually be brought to low levels. Finally, the Aerospaceplane program will develop the capability for flights in the atmosphere at hypersonic speeds, a capability that may be of future use to the Defense Department and possibly to the airlines.73

Single-stage-to-orbit (SSTO) was on the agenda, a topic that merits separate comment. The space shuttle is a stage-and-a-half system; it uses solid boosters plus a main stage, with all engines burning at liftoff. It is a measure of progress, or its lack, in astronautics that the Soviet R-7 rocket that launched the first Sputniks was also stage-and-a-half.74 The concept of SSTO has tantalized designers for decades, with these specialists being highly ingenious and ready to show a can-do spirit in the face of challenges.

This approach certainly is elegant. It also avoids the need to launch two rockets to do the work of one, and if the Earth’s gravity field resembled that of Mars, SSTO would be the obvious way to proceed. Unfortunately, the Earth’s field is considerably stronger. No SSTO has ever reached orbit, either under rocket power or by using scramjets or other airbreathers. The technical requirements have been too severe.

The SAB panel members attended three days of contractor briefings and reached a firm conclusion: “It was quite evident to the Committee from the presentation of nearly all the contractors that a single stage to orbit Aerospaceplane remains a highly speculative effort.” Reaffirming a recommendation from its 1960 review, the group urged new emphasis on two-stage designs. It recommended attention to “development of hydrogen fueled turbo ramjet power plants capable of accelerating the first stage to Mach 6.0 to 10.0…. Research directed toward the second stage which will ultimately achieve orbit should be concentrated in the fields of high pressure hydrogen rockets and supersonic burning ramjets and air collection and enrichment systems.”75

Convair, home of Space Plane, had offered single-stage configurations as early as 1960. By 1962 its managers concluded that technical requirements placed such a vehicle out of reach for at least the next 20 years. The effort shifted toward a two-stage concept that took form as the 1964 Point Design Vehicle. With a gross takeoff weight of 700,000 pounds, the baseline approach used turboramjets to reach Mach 5. It cruised at that speed while using ACES to collect liquid oxygen, then accelerated anew using ramjets and rockets. Stage separation occurred at Mach 8.6 and 176,000 feet, with the second stage reaching orbit on rocket power. The payload was 23,000 pounds with turboramjets in the first stage, increasing to 35,000 pounds with the more speculative SuperLACE.

The documentation of this 1964 Point Design, filling 16 volumes, was issued during 1963. An important advantage of the two-stage approach proved to lie in the opportunity to optimize the design of each stage for its task. The first stage was a Mach 8 aircraft that did not have to fly to orbit and that carried its heavy wings, structure, and ACES equipment only to staging velocity. The second-stage design showed strong emphasis on re-entry; it had a blunted shape along with only modest requirements for aerodynamic performance. Even so, this Point Design pushed the state of the art in materials. The first stage specified superalloys for the hot underside along with titanium for the upper surface. The second stage called for coated refractory metals on its underside, with superalloys and titanium on its upper surfaces.76

Although more attainable than its single-stage predecessors, the Point Design still relied on untested technologies such as ACES, while anticipating use in aircraft structures of exotic metals that had been studied merely as turbine blades, if indeed they had gone beyond the status of laboratory samples. The opportunity nevertheless existed for still greater conservatism in an airbreathing design, and the man who pursued it was Ernst Steinhoff. He had been present at the creation, having worked with Wernher von Braun on Germany’s wartime V-2, where he headed up the development of that missile’s guidance. After 1960 he was at the Rand Corporation, where he examined Aerospaceplane concepts and became convinced that single-stage versions would never be built. He turned to two-stage configurations and came up with an outline of a new one: ROLS, the Recoverable Orbital Launch System. During 1963 he took the post of chief scientist at Holloman Air Force Base and proceeded to direct a formal set of studies.77

The name of ROLS had been seen as early as 1959, in one of the studies that had grown out of SR-89774, but this concept was new. Steinhoff considered that the staging velocity could be as low as Mach 3. At once this raised the prospect that the first stage might take shape as a modest technical extension of the XB-70, a large bomber designed for flight at that speed, which at the time was being readied for flight test. ROLS was to carry a second stage, dropping it from the belly like a bomb, with that stage flying on to orbit. An ACES installation would provide the liquid oxidizer prior to separation, but to reach from Mach 3 to orbital speed, the second stage had to be simple indeed. Steinhoff envisioned a long vehicle resembling a torpedo, powered by hydrogen-burning rockets but lacking wings and thermal protection. It was not reusable and would not reenter, but it would be piloted. A project report stated, “Crew recovery is accomplished by means of a reentry capsule of the Gemini-Apollo class. The capsule forms the nose section of the vehicle and serves as the crew compartment for the entire vehicle.”78

ROLS appears in retrospect as a mirror image of NASA’s eventual space shuttle, which adopted a technically simple booster (a pair of large solid-propellant rockets) while packaging the main engines and most other costly systems within a fully recoverable orbiter. By contrast, ROLS used a simple second stage and a highly intricate first stage, in the form of a large delta-wing airplane that mounted eight turbojet engines. Its length of 335 feet was more than twice that of a B-52. Weighing 825,000 pounds at takeoff, ROLS was to deliver a payload of 30,000 pounds to orbit.79

Such two-stage concepts continued to emphasize ACES, while still offering a role for LACE. Experimental test and development of these concepts therefore remained on the agenda, with Marquardt pursuing further work on LACE. The earlier tests, during 1960 and 1961, had featured an off-the-shelf thrust chamber that had seen use in previous projects. The new work involved a small LACE engine, the MA117, that was designed from the start as an integrated system.

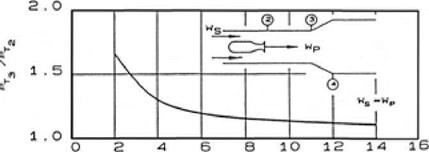

LACE had a strong suit in its potential for a very high specific impulse, Isp. This is the ratio of thrust to the weight flow rate of propellant and has dimensions of seconds. It is a key measure of performance, is proportional to exhaust velocity, and expresses the engine’s fuel economy. Pratt & Whitney’s RL10, for instance, burned hydrogen and oxygen to give thrust of 15,000 pounds with an Isp of 433 seconds.80 LACE was an airbreather, and its Isp could be enormously higher because it took its oxidizer from the atmosphere rather than carrying it in an onboard tank. The term “propellant flow rate” referred to tanked propellants, not to oxidizer taken from the air. For LACE this meant fuel only.
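A short sketch of the definition, assuming English units in which thrust is in pounds-force and propellant flow is a weight flow in pounds per second, so that Isp comes out in seconds. Multiplying Isp by standard gravity gives the effective exhaust velocity. The RL10 propellant flow printed below is derived from the two quoted figures, not taken from a published specification.

```python
G0_MPS2 = 9.80665  # standard gravity, m/s^2


def specific_impulse_s(thrust_lbf: float, propellant_weight_flow_lbf_per_s: float) -> float:
    """Isp in seconds: pounds of thrust per pound-per-second of tanked propellant."""
    return thrust_lbf / propellant_weight_flow_lbf_per_s


def effective_exhaust_velocity_mps(isp_s: float) -> float:
    """Effective exhaust velocity implied by a given Isp (c = Isp * g0)."""
    return isp_s * G0_MPS2


# RL10 figures from the text: 15,000 lbf at an Isp of 433 s.
rl10_thrust = 15_000.0
rl10_isp = 433.0
rl10_flow = rl10_thrust / rl10_isp  # propellant flow implied by those two numbers, lb/s

print(f"RL10 propellant flow ~ {rl10_flow:.1f} lb/s")
print(f"RL10 exhaust velocity ~ {effective_exhaust_velocity_mps(rl10_isp):.0f} m/s")
```

The RL10 works out to roughly 34.6 pounds of propellant per second and an exhaust velocity of about 4,246 m/s.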

The basic LACE concept produced a very fuel-rich exhaust, but approaches such as RENE and SuperLACE promised to reduce the hydrogen flow substantially. Indeed, such concepts raised the prospect that a LACE system might use an optimized mixture ratio of hydrogen and oxidizer, with this ratio being selected to give the highest Isp. The MA117 achieved this performance artificially by using a large flow of liquid hydrogen to liquefy air and a much smaller flow for the thrust chamber. Hot-fire tests took place during December 1962, and a company report stated that “the system produced 83% of the idealized theoretical air flow and 81% of the idealized thrust. These deviations are compatible with the simplifications of the idealized analysis.”81

The best performance run delivered 0.783 pounds per second of liquid air, which burned a flow of 0.0196 pounds per second of hydrogen. Thrust was 73 pounds; Isp reached 3,717 seconds, more than eight times that of the RL10. Tests of the MA117 continued during 1963, with the best measured values of Isp topping 4,500 seconds.82
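The airbreathing bookkeeping can be checked against the reported run. This sketch divides the quoted thrust by the hydrogen flow alone, then, for contrast, by hydrogen plus liquid air, which is how the figure would come out if the collected air were charged as propellant as in a rocket. The variable names are illustrative.

```python
RL10_ISP_S = 433.0  # from the text

# Best run of the small Marquardt LACE engine, as reported: flows in lb/s, thrust in lbf.
thrust_lbf = 73.0
hydrogen_flow = 0.0196   # tanked propellant
liquid_air_flow = 0.783  # oxidizer taken from the atmosphere

# Airbreathing convention: only the tanked hydrogen counts as propellant.
isp_airbreathing = thrust_lbf / hydrogen_flow
# For contrast: if the collected air were charged as propellant, as in a rocket.
isp_if_air_counted = thrust_lbf / (hydrogen_flow + liquid_air_flow)

print(f"Isp (hydrogen only)  ~ {isp_airbreathing:.0f} s")
print(f"Isp (air + hydrogen) ~ {isp_if_air_counted:.0f} s")
print(f"ratio to RL10        ~ {isp_airbreathing / RL10_ISP_S:.1f}")
print(f"air/fuel mass ratio  ~ {liquid_air_flow / hydrogen_flow:.0f}")
```

The hydrogen-only figure comes to about 3,724 seconds, close to the reported 3,717; the small difference presumably reflects rounding in the quoted thrust. Counting the air as propellant would drop the figure to about 91 seconds, which shows how much of LACE's advantage rests on not carrying its oxidizer.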

In a separate effort, the Marquardt manager Richard Knox directed the preliminary design of a much larger LACE unit, the MA116, with a planned thrust of 10,000 pounds. On paper, it achieved substantial derichening by liquefying only one-fifth of the airflow and using this liquid air in precooling, while deeply cooling the rest of the airflow without liquefaction. A turbocompressor then was to pump this chilled air into the thrust chamber. A flow of less than four pounds per second of liquid hydrogen was to serve both as fuel and as primary coolant, with the anticipated Isp exceeding 3,000 seconds.83
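Because Isp is thrust divided by fuel flow, a thrust target and a minimum Isp together cap the hydrogen flow. This sketch takes the planned thrust of the larger Marquardt unit as 10,000 pounds and the Isp as 3,000 seconds; the helper name is hypothetical.

```python
def max_fuel_flow_lb_per_s(thrust_lbf: float, isp_s: float) -> float:
    """Largest tanked-fuel flow consistent with a given thrust and a minimum Isp."""
    return thrust_lbf / isp_s


planned_thrust = 10_000.0   # planned thrust of the larger LACE unit, lbf
target_isp = 3_000.0        # "exceeding 3,000 seconds"

flow_ceiling = max_fuel_flow_lb_per_s(planned_thrust, target_isp)
print(f"hydrogen flow must stay below ~{flow_ceiling:.2f} lb/s")
```

The ceiling of about 3.33 pounds per second sits within the "less than four pounds per second" cited for the design, so the quoted thrust, flow, and Isp figures are mutually consistent.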

New work on RENE also flourished. The Air Force had a cooperative agreement with NASA’s Marshall Space Flight Center, where Fritz Pauli had developed a subscale rocket engine that burned kerosene with liquid oxygen for a thrust of 450 pounds. Twelve of these small units, mounted to form a ring, gave a basis for this new effort. The earlier work had placed the rocket motor squarely along the centerline of the duct. In the new design, the rocket units surrounded the duct, leaving it unobstructed and potentially capable of use as an ejector ramjet. The cluster was tested successfully at Marshall in September 1963 and then went to the Air Force’s AEDC. As in the RENE tests of 1961, the new configuration gave a thrust increase of as much as 52 percent.84
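To put the ring in absolute terms, this sketch multiplies out the rated thrust of the twelve units and then applies the best quoted gain. It assumes, which the text does not state, that the 52 percent applies to the full rated thrust of all twelve units at the test condition, so the augmented figure is an upper-bound illustration rather than a measured value.

```python
units = 12                 # small kerosene/LOX engines arranged in a ring
unit_thrust_lbf = 450.0    # rated thrust of each unit
max_gain = 0.52            # "as much as 52 percent"

# Assumption: the gain applies to the combined rated thrust of the whole ring.
ring_thrust = units * unit_thrust_lbf
augmented_thrust = ring_thrust * (1.0 + max_gain)
print(f"rocket ring alone: {ring_thrust:.0f} lbf; with ejector gain: ~{augmented_thrust:.0f} lbf")
```

Under that assumption the ring's 5,400 pounds would rise to roughly 8,200 pounds.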

While work on LACE and ejector rockets went forward, ACES stood as a particularly critical action item. Operable ACES systems were essential for the practical success of LACE. Moreover, ACES had importance distinctly its own, for it could provide oxidizer to conventional hydrogen-burning rocket engines, such as those of ROLS. As noted earlier, there were two techniques for air separation: by chemical methods and through use of a rotating fractional distillation apparatus. Both approaches went forward, each with its own contractor.

In Cambridge, Massachusetts, the small firm of Dynatech took up the challenge of chemical separation, launching its effort in May 1961. Several chemical reactions appeared plausible as candidates, with barium and cobalt offering particular promise:

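$$2\,\mathrm{BaO_2} \rightleftharpoons 2\,\mathrm{BaO} + \mathrm{O_2}$$

$$2\,\mathrm{Co_3O_4} \rightleftharpoons 6\,\mathrm{CoO} + \mathrm{O_2}$$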

The double arrows indicate reversibility. The oxidation reactions were exothermic, occurring at approximately 1,600°F for barium and 1,800°F for cobalt. The reduction reactions, which released the oxygen, were endothermic, allowing the oxides to cool as they yielded this gas.
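The reactions also fix how much oxygen a given mass of oxide can release in one full reduction cycle. This sketch computes that ideal yield from the stoichiometry with standard atomic masses, assuming complete conversion; a real pebble bed would cycle only part of its oxide, so practical yields would be lower.

```python
ATOMIC_MASS = {"Ba": 137.327, "Co": 58.933, "O": 15.999}  # g/mol


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass (g/mol) of a formula given as {element: count}."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


O2 = molar_mass({"O": 2})

# 2 BaO2 <=> 2 BaO + O2: one O2 per two formula units of peroxide.
barium_yield = O2 / (2 * molar_mass({"Ba": 1, "O": 2}))
# 2 Co3O4 <=> 6 CoO + O2: one O2 per two formula units of Co3O4.
cobalt_yield = O2 / (2 * molar_mass({"Co": 3, "O": 4}))

print(f"BaO2  -> {barium_yield:.1%} of its mass as O2 per full cycle")
print(f"Co3O4 -> {cobalt_yield:.1%} of its mass as O2 per full cycle")
```

Even in the ideal case, barium peroxide gives up only about 9.4 percent of its mass as oxygen and the cobalt oxide about 6.6 percent, so each pound of oxygen requires cycling many pounds of oxide. That helps explain why the design depended on a large, active reacting surface.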

Dynatech’s separator unit consisted of a long rotating drum with its interior divided into four zones using fixed partitions. A pebble bed of oxide-coated particles lined the drum interior; containment screens held the particles in place while allowing the drum to rotate past the partitions with minimal leakage. The zones exposed the oxide alternately to high-pressure ram air for oxidation and to low pressure for reduction. The separation was to take place in flight, at speeds of Mach 4 to Mach 5, but an inlet could slow the internal airflow to as little as 50 feet per second, increasing the residence time of air within a unit. The company proposed that an array of such separators weighing just under 10 tons could handle 2,000 pounds per second of airflow while producing liquid oxygen of 65 percent purity.85

Ten tons of equipment certainly counts within a launch vehicle, even though it included the weight of the oxygen liquefaction apparatus. Still, it was vastly lighter than the alternative: the rotating distillation system. The Linde Division of Union Carbide pursued this approach. Its design called for a cylindrical tank containing the distillation apparatus, measuring nine feet long by nine feet in diameter and rotating at 570 revolutions per minute. With a weight of 9,000 pounds, it was to process 100 pounds per second of liquefied air, which made it 10 times as heavy as the Dynatech system, per pound of product. The Linde concept promised liquid oxygen of 90 percent purity, substantially better than the chemical system could offer, but the cited 9,000-pound weight left out additional weight for the LACE equipment that provided this separator with its liquefied air.86
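Two numbers behind this comparison can be worked out directly. This sketch divides each separator's weight by the airflow it handles, taking "just under 10 tons" as 20,000 pounds (short tons), and computes the centrifugal acceleration at the wall of Linde's rotating tank from its speed and diameter. Both are rough illustrations: the Dynatech airflow is ram air while the Linde figure is liquefied air, and the apparatus inside the tank sits at radii smaller than the wall.

```python
import math

# Dynatech chemical ACES array ("just under 10 tons", taken here as 20,000 lb).
dynatech_weight_lb = 20_000.0
dynatech_air_lb_per_s = 2_000.0
# Linde rotating distillation unit (excludes the LACE equipment feeding it).
linde_weight_lb = 9_000.0
linde_air_lb_per_s = 100.0

dynatech_specific = dynatech_weight_lb / dynatech_air_lb_per_s   # lb per (lb/s)
linde_specific = linde_weight_lb / linde_air_lb_per_s
print(f"Dynatech: {dynatech_specific:.0f} lb per lb/s; Linde: {linde_specific:.0f} lb per lb/s")
print(f"Linde / Dynatech ~ {linde_specific / dynatech_specific:.1f}x")

# Centrifugal acceleration at the tank wall: a = omega^2 * r, expressed in g.
rpm = 570.0
radius_ft = 9.0 / 2.0
omega = rpm * 2.0 * math.pi / 60.0          # rad/s
g_at_wall = omega**2 * radius_ft / 32.174   # 32.174 ft/s^2 = 1 g
print(f"~{g_at_wall:.0f} g at a 4.5-ft radius")
```

On this basis the Linde unit is about nine times heavier per pound-per-second handled, in line with the roughly tenfold figure, and it would spin its contents at nearly 500 g. That is the sort of centrifugal loading the earlier SAB review doubted had ever been used to boost a distillation column's capacity.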

A study at Convair, released in October 1963, gave a clear preference to the Dynatech concept. Returning to the single-stage Space Plane of prior years, Convair engineers considered a version with a weight at takeoff of 600,000 pounds, using either the chemical or the distillation ACES. The effort concluded that the Dynatech separator offered a payload to orbit of 35,800 pounds using barium and 27,800 pounds with cobalt. The Linde separator reduced this payload to 9,500 pounds. Moreover, because it had less efficiency, it demanded an additional 31,000 pounds of hydrogen fuel, along with an increase in vehicle volume of 10,000 cubic feet.87
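Expressed as payload fractions of the 600,000-pound takeoff weight, the Convair figures show how strongly the choice of separator drove performance. A minimal sketch of that arithmetic, using only the numbers quoted in the study:

```python
takeoff_weight_lb = 600_000.0
payload_lb = {
    "Dynatech, barium": 35_800.0,
    "Dynatech, cobalt": 27_800.0,
    "Linde distillation": 9_500.0,
}

# Payload as a fraction of gross takeoff weight for each ACES option.
fractions = {option: payload / takeoff_weight_lb for option, payload in payload_lb.items()}
for option, fraction in fractions.items():
    print(f"{option:<20} payload fraction = {fraction:.2%}")
```

The barium system delivers about 6 percent of takeoff weight to orbit, the cobalt system under 5 percent, and the Linde system under 2 percent.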

The turn toward feasible concepts such as ROLS, along with the new emphasis on engineering design and test, promised a bright future for Aerospaceplane studies. However, a commitment to serious research and development was another matter. Advanced test facilities were critical to such an effort, but in August 1963 the Air Force canceled plans for a large Mach 14 wind tunnel at AEDC. This decision gave a clear indication of what lay ahead.88

A year earlier Aerospaceplane had received a favorable review from the SAB Ad Hoc Committee. The program nevertheless had its critics, who existed particularly within the SAB’s Aerospace Vehicles and Propulsion panels. In October 1963 they issued a report that dealt with proposed new bombers and vertical-takeoff-and-landing craft, as well as with Aerospaceplane, but their view was unmistakable on that topic:

The difficulties the Air Force has encountered over the past three years in identifying an Aerospaceplane program have sprung from the facts that the requirement for a fully recoverable space launcher is at present only vaguely defined, that today’s state-of-the-art is inadequate to support any real hardware development, and the cost of any such undertaking will be extremely large…. [T]he so-called Aerospaceplane program has had such an erratic history, has involved so many clearly infeasible factors, and has been subject to so much ridicule that from now on this name should be dropped. It is also recommended that the Air Force increase the vigilance that no new program achieves such a difficult position.89

Aerospaceplane lost still more of its rationale in December, as Defense Secretary Robert McNamara canceled Dyna-Soar. This program was building a mini-space shuttle that was to fly to orbit atop a Titan III launch vehicle. This craft was well along in development at Boeing, but program reviews within the Pentagon had failed to find a compelling purpose. McNamara thus disposed of it.90

Prior to this action, it had been possible to view Dyna-Soar as a prelude to operational vehicles of that general type, which might take shape as Aerospaceplanes. The cancellation of Dyna-Soar turned the Aerospaceplane concept into an orphan, a long-term effort with no clear relation to anything currently under way. In the wake of McNamara’s decision, Congress deleted funds for further Aerospaceplane studies, and Defense Department officials declined to press for its restoration within the FY 1964 budget, which was under consideration at that time. The Air Force carried forward with new conceptual studies of vehicles for both launch and hypersonic cruise, but these lacked the focus on advanced airbreathing propulsion that had characterized Aerospaceplane.91

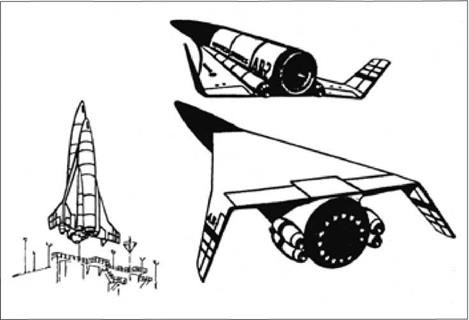

There nevertheless was real merit to some of the new work, for this more realistic and conservative direction pointed out a path that led in time toward NASA’s space shuttle. The Martin Company made a particular contribution. It had designed no Aerospaceplanes; rather, using company funding, its technical staff had examined concepts called Astrorockets, with the name indicating the propulsion mode. Scramjets and LACE won little attention at Martin, but all-rocket vehicles were another matter. A concept of 1964 had a planned liftoff weight of 1,250 tons, making it intermediate in size between the Saturn I-B and Saturn V. It was a two-stage fully reusable configuration, with both stages having delta wings and flat undersides. These undersides fitted together at liftoff, belly to belly.


Martin’s Astrorocket. (U. S. Air Force)


The design concepts of that era were meant to offer glimpses of possible futures, but for this Astrorocket, the future was only seven years off. It clearly foreshadowed a class of two-stage fully reusable space shuttles, fitted with delta wings, that came to the forefront in NASA-sponsored studies of 1971. The designers at Martin were not clairvoyant; they drew on the background of Dyna-Soar and on studies at NASA-Ames of winged re-entry vehicles. Still, this concept demonstrated that some design exercises were returning to the mainstream.92

Further work on ACES also proceeded, amid unfortunate results at Dynatech. That company’s chemical separation processes had depended for success on having a very large area of reacting surface within the pebble-bed air separators. This appeared achievable through such means as using finely divided oxide powders or porous particles impregnated with oxide. But the research of several years showed that the oxide tended to sinter at high temperatures, markedly diminishing the reacting surface area. This did not make chemical separation impossible, but it sharply increased the size and weight of the equipment, which robbed this approach of its initially strong advantage over the Linde distillation system. This led to abandonment of Dynatech’s approach.93

Linde’s system was heavy and drastically less elegant than Dynatech’s alternative, but it amounted largely to a new exercise in mechanical engineering and went forward to successful completion. A prototype operated in test during 1966, and while limits to the company’s installed power capacity prevented the device from processing the rated flow of 100 pounds of air per second, it handled 77 pounds per second, yielding a product stream of oxygen that was up to 94 percent pure. Studies of lighter-weight designs also proceeded. In 1969 Linde proposed to build a distillation air separator, rated again at 100 pounds per second, weighing 4,360 pounds. This was only half the weight allowance of the earlier configuration.94
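The Linde progress can be summarized in the same per-airflow terms used for the earlier comparison. This sketch uses only the quoted figures, with the 9,000-pound design taken as the "earlier configuration"; the variable names are illustrative.

```python
rated_flow = 100.0       # lb/s of air, rating of both designs
tested_flow = 77.0       # lb/s reached by the 1966 prototype
weight_earlier = 9_000.0 # lb, earlier Linde design
weight_1969 = 4_360.0    # lb, 1969 proposal at the same rating

print(f"prototype reached {tested_flow / rated_flow:.0%} of rated flow")
print(f"1969 weight is {weight_1969 / weight_earlier:.0%} of the earlier design")
print(f"specific weight: {weight_earlier / rated_flow:.1f} -> {weight_1969 / rated_flow:.1f} lb per lb/s")
```

The 1969 proposal comes to about 48 percent of the earlier weight, or roughly 44 pounds of separator per pound-per-second of air, still well above the 10 pounds estimated for Dynatech's array before sintering undercut it.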

In the end, though, Aerospaceplane failed to identify new propulsion concepts that held promise and that could be marked for mainstream development. The program’s initial burst of enthusiasm had drawn on a view that the means were in hand, or soon would be, to leap beyond the liquid-fuel rocket as the standard launch vehicle and to pursue access to orbit using methods that were far more advanced. The advent of the turbojet, which had swiftly eclipsed the piston engine, was on everyone’s mind. Yet for all the ingenuity behind the new engine concepts, they failed to deliver. What was worse, serious technical review gave no reason to believe that they could deliver.

In time it would become clear that hypersonics faced a technical wall. Only limited gains were achievable in airbreathing propulsion, with single-stage-to-orbit remaining out of reach and no easy way at hand to break through to the really advanced performance for which people hoped.

Whitehead’s point of departure lay in the fundamental equations of fluid flow: the Navier-Stokes equations, named for the nineteenth-century physicists Claude-Louis-Marie Navier and Sir George Stokes. They form a set of nonlinear partial differential equations that contain 60 partial derivative terms. Their physical content is simple, comprising the basic laws of conservation of mass, momentum, and energy, along with an equation of state. Yet their solutions, when available, cover the entire realm of fluid mechanics.2

Whiteheads point of departure lay in the fundamental equations of fluid flow: the Navier-Stokes equations, named for the nineteenth-century physicists Claude – Louis-Marie Navier and Sir George Stokes. They form a set of nonlinear partial differential equations that contain 60 partial derivative terms. Their physical content is simple, comprising the basic laws of conservation of mass, momentum, and energy, along with an equation of state. Yet their solutions, when available, cover the entire realm of fluid mechanics.2

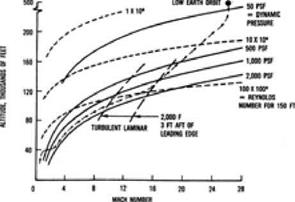

growth of a small disturbance as one followed the flow downstream. When it had grown by a factor of 22,000 ($e^{10}$, with $N = 10$), the analyst accepted that transition to turbulence had occurred.7
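The criterion described here is the $e^N$ method: take the natural logarithm of a disturbance's amplitude growth, and declare transition once that value N reaches a critical threshold. This sketch uses the threshold of 10 quoted above; in practice the critical N is calibrated against experiment, and the function names are illustrative.

```python
import math

N_CRITICAL = 10.0  # the threshold quoted in the text


def n_factor(amplitude_ratio: float) -> float:
    """N = ln(A / A0) for a disturbance that grew from A0 to A."""
    return math.log(amplitude_ratio)


def transition_predicted(amplitude_ratio: float, n_critical: float = N_CRITICAL) -> bool:
    """e^N criterion: declare transition once the growth factor reaches e^N_critical."""
    return n_factor(amplitude_ratio) >= n_critical


print(f"e^10 = {math.exp(N_CRITICAL):,.0f}")
print(transition_predicted(1_000.0), transition_predicted(30_000.0))
```

This prints `e^10 = 22,026`, the "factor of 22,000" in the text, followed by `False True`: a thousandfold growth (N of about 6.9) falls short, while a thirty-thousandfold growth (N of about 10.3) passes the threshold.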

growth of a small disturbance as one followed the flow downstream. When it had grown by a factor of 22,000—e10, with N = 10—the analyst accepted that transition to turbulence had occurred.7

The mechanical properties of metals depend on their fine-grained structure. An ingot of metal consists of a mass of interlaced grains or crystals, and small grains give higher strength. Quenching, plunging hot metal into water, yields small grains but often makes the metal brittle or hard to form. Alloying a metal, as by adding small quantities of

The mechanical properties of metals depend on their finegrained structure. An ingot of metal consists of a mass of interlaced grains or crystals, and small grains give higher strength. Quenching, plunging hot metal into water, yields small grains but often makes the metal brittle or hard to form. Alloying a metal, as by adding small quantities of