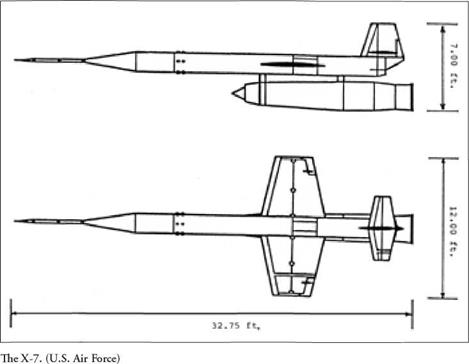

The airflow within a ramjet was subsonic. This resulted from its passage through one or more shocks, which slowed, compressed, and heated the flow. This was true even at high speed, with the Mach 4.31 flight of the X-7 also using a subsonic-combustion ramjet. Moreover, because shocks become stronger with increasing Mach, ramjets could achieve greater internal compression of the flow at higher speeds. This increase in compression improved the engine’s efficiency.
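
The claim that shocks strengthen with Mach can be illustrated with the textbook normal-shock relations. The sketch below assumes a single normal shock in air with a constant ratio of specific heats of 1.4; real ramjet inlets usually combine oblique and normal shocks, so the numbers are indicative only. The function name `normal_shock` is chosen here for illustration.

```python
GAMMA = 1.4  # ratio of specific heats for air, assumed constant


def normal_shock(mach_upstream, gamma=GAMMA):
    """Return (downstream Mach, p2/p1, T2/T1) across a normal shock
    in a calorically perfect gas."""
    if mach_upstream <= 1.0:
        raise ValueError("a normal shock requires supersonic upstream flow")
    m1_sq = mach_upstream ** 2
    # Downstream Mach number is always subsonic.
    m2_sq = (1.0 + 0.5 * (gamma - 1.0) * m1_sq) / (gamma * m1_sq - 0.5 * (gamma - 1.0))
    # Static pressure and density jumps from the Rankine-Hugoniot relations.
    pressure_ratio = 1.0 + 2.0 * gamma / (gamma + 1.0) * (m1_sq - 1.0)
    density_ratio = (gamma + 1.0) * m1_sq / ((gamma - 1.0) * m1_sq + 2.0)
    # Ideal-gas law gives the temperature jump.
    temperature_ratio = pressure_ratio / density_ratio
    return m2_sq ** 0.5, pressure_ratio, temperature_ratio


if __name__ == "__main__":
    for mach in (2.0, 3.0, 4.31):
        m2, p_ratio, t_ratio = normal_shock(mach)
        print(f"M1={mach:.2f}  M2={m2:.3f}  p2/p1={p_ratio:.2f}  T2/T1={t_ratio:.2f}")
```

Under these assumptions the downstream flow is subsonic at every flight speed, while the pressure and temperature jumps grow rapidly with upstream Mach.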

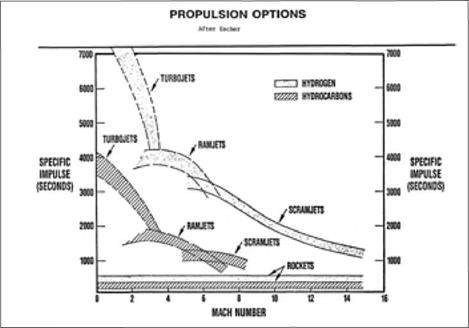

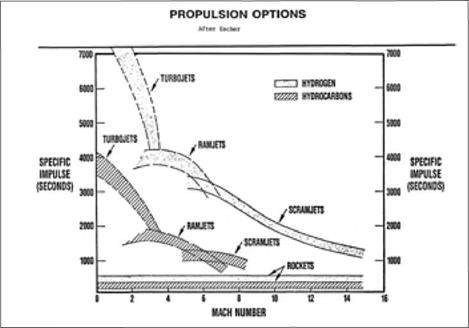

Comparative performance of scramjets and other engines. Airbreathers have very high performance because they are “energy machines,” which burn fuel to heat air. Rockets have much lower performance because they are “momentum machines,” which physically expel flows of mass.

(Courtesy of William Escher)

Still, there were limits to a ramjet’s effectiveness. Above Mach 5, designers faced increasingly difficult demands for thermal protection of an airframe and for cooling of the ramjet duct. With the internal flow being very hot, it became more difficult to add still more heat by burning fuel, without overtaxing the materials or the cooling arrangements. If the engine were to run lean to limit the temperature rise in the combustor, its thrust would fall off. At still higher Mach levels, the issue of heat addition through combustion threatened to become moot. With high internal temperatures promoting dissociation of molecules of air, combustion reactions would not go to completion and hence would cease to add heat.

A promising way around this problem involved doing away with a requirement for subsonic internal flow. Instead this airflow was to be supersonic and was to sustain combustion. Right at the outset, this approach reduced the need for internal cooling, for this airflow would not heat up excessively if it was fast enough. This relatively cool internal airflow also could continue to gain heat through combustion. It would avoid problems due to dissociation of air or failure of chemical reactions in combustion to go to completion. On paper, there now was no clear upper limit to speed. Such a vehicle might even fly to orbit.
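
A rough calculation shows why a supersonic internal flow stays cooler. The sketch below uses the adiabatic stagnation-temperature relation with a constant ratio of specific heats, which overstates temperatures once air becomes hot enough to dissociate. The ambient temperature of 220 K and the choice of internal Mach numbers (0.3 for a ramjet, one third of flight Mach for a scramjet) are illustrative assumptions, not figures from the text.

```python
GAMMA = 1.4          # assumed constant; real air departs from this when very hot
T_AMBIENT_K = 220.0  # assumed high-altitude static temperature, illustrative


def static_temperature(flight_mach, internal_mach, t_ambient=T_AMBIENT_K, gamma=GAMMA):
    """Static temperature (K) of air slowed adiabatically from flight_mach
    to internal_mach, with no heat added."""
    total = t_ambient * (1.0 + 0.5 * (gamma - 1.0) * flight_mach ** 2)
    return total / (1.0 + 0.5 * (gamma - 1.0) * internal_mach ** 2)


if __name__ == "__main__":
    for flight_mach in (3.0, 5.0, 8.0):
        subsonic = static_temperature(flight_mach, 0.3)
        supersonic = static_temperature(flight_mach, flight_mach / 3.0)
        print(f"flight Mach {flight_mach:.0f}: "
              f"subsonic duct {subsonic:.0f} K, supersonic duct {supersonic:.0f} K")
```

With these assumed values the subsonic duct approaches the full stagnation temperature, whereas the supersonic duct runs far cooler at the same flight speed, leaving room to add heat by combustion.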

Yet while a supersonic-combustion ramjet offered tantalizing possibilities, right at the start it posed a fundamental issue: was it feasible to burn fuel in the duct of such an engine without producing shock waves? Such shocks could produce severe internal heating, destroying the benefits of supersonic combustion by slowing the flow to subsonic speeds. Rather than seeking to achieve shock-free supersonic combustion in a duct, researchers initially bypassed this difficulty by addressing a simpler problem: demonstration of combustion in a supersonic free-stream flow.

The earliest pertinent research appears to have been performed at the Applied Physics Laboratory (APL), during or shortly after World War II. Machine gunners in aircraft were accustomed to making their streams of bullets visible by making every twentieth round a tracer, which used a pyrotechnic. They hoped that a gunner could walk his bullets into a target by watching the glow of the tracers, but experience showed that the pyrotechnic action gave these bullets trajectories of their own. The Navy then engaged two research centers to look into this. In Aberdeen, Maryland, Ballistic Research Laboratories studied the deflection of the tracer rounds themselves. Near Washington, DC, APL treated the issue as a new effect in aerodynamics and sought to make use of it.

Investigators conducted tests in a Mach 1.5 wind tunnel, burning hydrogen at the base of a shell. A round in flight experienced considerable drag at its base, but the experiments showed that this combustion set up a zone of higher pressure that canceled the drag. This work did not demonstrate supersonic combustion, for while the wind-tunnel flow was supersonic, the flow near the base was subsonic. Still, this work introduced APL to topics that later proved pertinent to supersonic-combustion ramjets (which became known as scramjets).17

NACA’s Lewis Flight Propulsion Laboratory, the agency’s center for studies of engines, emerged as an early nucleus of interest in this topic. Initial work involved theoretical studies of heat addition to a supersonic flow. As early as 1950, the Lewis investigators Irving Pinkel and John Serafini treated this problem in a two-dimensional case, as in flow over a wing or past an axisymmetric body. In 1952 they specifically treated heat addition under a supersonic wing. They suggested that this might produce more lift than could be obtained by burning the same amount of fuel in a turbojet to power an airplane.18

This conclusion immediately raised the question of whether it was possible to demonstrate supersonic combustion in a wind tunnel. Supersonic tunnels produced airflows having very low pressure, which added to the experimental difficulties. However, researchers at Lewis had shown that aluminum borohydride could promote the ignition of pentane fuel at air pressures as low as 0.03 atmosphere. In 1953 Robert Dorsch and Edward Fletcher launched a research program that sought to ignite pure borohydride within a supersonic flow. Two years later they declared that they had succeeded. Subsequent work showed that at Mach 3, combustion of this fuel under a wing more than doubled the lift.19

Also at Lewis, the aerodynamicists Richard Weber and John MacKay published the first important open-literature study of theoretical scramjet performance in 1958. Because they were working entirely with equations, they too bypassed the problem of attaining shock-free flow in a supersonic duct by simply positing that it was feasible. They treated the problem using one-dimensional gas dynamics, corresponding to flow in a duct with properties at any location being uniform across the diameter. They restricted their treatment to flow velocities from Mach 4 to 7.

They discussed the issue of maximizing the thrust and the overall engine efficiency. They also considered the merits of various types of inlet, showing that a suitable choice could give a scramjet an advantage over a conventional ramjet. Within the Mach 4 to 7 range they studied, supersonic combustion failed to give substantial performance improvements or to lead to an engine of lower weight. Even so, they wrote that “the trends developed herein indicate that the [scramjet] will offer superior performance at higher hypersonic flight speeds.”20

An independent effort proceeded along similar lines at Marquardt, where investigators again studied scramjet performance by treating the flow within an engine duct using one-dimensional gasdynamic theory. In addition, Marquardt researchers carried out their own successful demonstration of supersonic combustion in 1957. They injected hydrogen into a supersonic airflow, with the hydrogen and the air having the same velocity. This work overcame objections from skeptics, who had argued that the work at NACA-Lewis had not truly demonstrated supersonic combustion. The Marquardt experimental arrangement was simpler, and its results were less equivocal.21

The Navy’s Applied Physics Laboratory, home of Talos, also emerged as an early center of interest in scramjets. As had been true at NACA-Lewis and at Marquardt, this group came to the concept by way of external burning under a supersonic wing. William Avery, the leader, developed an initial interest in supersonic combustion around 1955, for he saw the conventional ramjet facing increasingly stiff competition from both liquid rockets and afterburning turbojets. (Two years later such competition killed Navaho.) Avery believed that he could use supersonic combustion to extend the performance of ramjets.

His initial opportunity came early in 1956, when the Navy’s Bureau of Ordnance set out to examine the technological prospects for the next 20 years. Avery took on the task of assembling APL’s contribution. He picked scramjets as a topic to study, but he was well aware of an objection. In addition to questioning the fundamental feasibility of shock-free supersonic combustion in a duct, skeptics considered that a hypersonic inlet might produce large pressure losses in the flow, with consequent negation of an engine’s thrust.

Avery sent this problem through Talos management to a young engineer, James Keirsey, who had helped with Talos engine tests. Keirsey knew that if a hypersonic ramjet was to produce useful thrust, it would appear as a small difference between two large quantities: gross thrust and total drag. In view of uncertainties in both these numbers, he was unable to state with confidence that such an engine would work. Still he did not rule it out, and his “maybe” gave Avery reason to pursue the topic further.
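
Keirsey’s difficulty is a general one: when a result is the small difference of two large numbers, modest errors in each can swamp it. The sketch below uses purely hypothetical values for gross thrust, drag, and their relative uncertainty to show the effect; none of these numbers come from the APL study.

```python
def net_thrust_bounds(gross_thrust, total_drag, relative_uncertainty):
    """Return (nominal, worst-case low, worst-case high) net thrust when
    both inputs may be off by the same relative uncertainty."""
    u = relative_uncertainty
    nominal = gross_thrust - total_drag
    low = gross_thrust * (1.0 - u) - total_drag * (1.0 + u)
    high = gross_thrust * (1.0 + u) - total_drag * (1.0 - u)
    return nominal, low, high


if __name__ == "__main__":
    # Hypothetical values in arbitrary force units.
    nominal, low, high = net_thrust_bounds(100.0, 90.0, 0.06)
    print(f"nominal {nominal:.1f}, range {low:.1f} to {high:.1f}")
```

With a 6 percent uncertainty on each quantity, the worst case for these inputs is a net thrust below zero, which is the kind of result that permits only a “maybe.”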

Avery decided to set up a scramjet group and to try to build an engine for test in a wind tunnel. He hired Gordon Dugger, who had worked at NACA-Lewis. Dugger’s first task was to decide which of several engine layouts, both ducted and unducted, was worth pursuing. He and Avery selected an external-burning configuration with the shape of a broad upside-down triangle. The forward slope, angled downward, was to compress the incoming airflow. Fuel could be injected at the apex, with the upward slope at the rear allowing the exhaust to expand. This approach again bypassed the problem of producing shock-free flow in a duct. The use of external burning meant that this concept could produce lift as well as thrust.

Dugger soon became concerned that this layout might be too simple to be effective. Keirsey suggested placing a very short cowl at the apex, thereby easing problems of ignition and combustion. This new design lent itself to incorporation within the wings of a large aircraft of reasonably conventional configuration. At low speeds the wide triangle could retract until it was flat and flush with the wing undersurface, leaving the cowl to extend into the free stream. Following acceleration to supersonic speed, the two shapes would extend and assume their triangular shape, then function as an engine for further acceleration.

Wind-tunnel work also proceeded at APL. During 1958 this center had a Mach 5 facility under construction, and Dugger brought in a young experimentalist named Frederick Billig to work with it. His first task was to show that he too could demonstrate supersonic combustion, which he tried to achieve using hydrogen as his fuel. He tried electric ignition; an APL history states that he “generated gigantic arcs,” but “to no avail.” Like the NACA-Lewis investigators, he turned to fuels that ignited particularly readily. His choice, triethyl aluminum, reacts spontaneously, and violently, on contact with air.

“The results of the tests on 5 March 1959 were dramatic,” the APL history continues. “A vigorous white flame erupted over the rear of [the wind-tunnel model] the instant the triethyl aluminum fuel entered the tunnel, jolting the model against its support. The pressures measured on the rear surface jumped upward.” The device produced less than a pound of thrust. But it generated considerable lift, supporting calculations that had shown that external burning could increase lift. Later tests showed that much of the combustion indeed occurred within supersonic regions of the flow.22

By the late 1950s small scramjet groups were active at NACA-Lewis, Marquardt, and APL. There also were individual investigators, such as James Nicholls of the University of Michigan. Still it is no small thing to invent a new engine, even as an extension of an existing type such as the ramjet. The scramjet needed a really high-level advocate, to draw attention within the larger realms of aerodynamics and propulsion. The man who took on this role was Antonio Ferri.

He had headed the supersonic wind tunnel in Guidonia, Italy. Then in 1943 the Nazis took control of that country and Ferri left his research to command a band of partisans who fought the Nazis with considerable effectiveness. This made him a marked man, and it was not only Germans who wanted him. An American agent, Moe Berg, was also on his trail. Berg found him and persuaded him to come to the States. The war was still on and immigration was nearly impossible, but Berg persuaded William Donovan, the head of his agency, to seek support from President Franklin Roosevelt himself. Berg had been famous as a baseball catcher in civilian life, and when Roosevelt learned that Ferri now was in the hands of his agent, he remarked, “I see Berg is still catching pretty well.”23

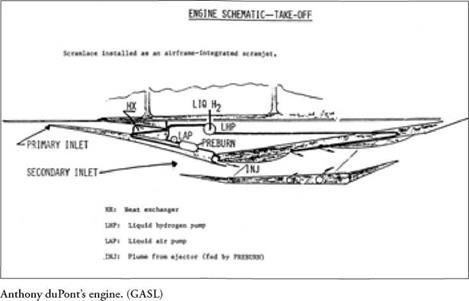

At NACA-Langley after the war, he rose in management and became director of the Gas Dynamics Branch in 1949. He wrote an important textbook, Elements of Aerodynamics of Supersonic Flows (Macmillan, 1949). Holding a strong fondness for the academic world, he took a professorship at Brooklyn Polytechnic Institute in 1951, where in time he became chairman of his department. He built up an aerodynamics laboratory at Brooklyn Poly and launched a new activity as a consultant. Soon he was working for major companies, drawing so many contracts that his graduate students could not keep up with them. He responded in 1956 by founding a company, General Applied Science Laboratories (GASL). With financial backing from the Rockefellers, GASL grew into a significant center for research in high-speed flight.24

He was a formidable man. Robert Sanator, a former student, recalls that “you had to really want to be in that course, to learn from him. He was very fast. His mind was constantly moving, redefining the problem, and you had to be fast to keep up with him. He expected people to perform quickly, rapidly.” John Erdos, another ex-student, adds that “if you had been a student of his and later worked for him, you could never separate the professor-student relationship from your normal working relationship.” He remained Dr. Ferri to these people, never Tony, even when they rose to leadership within their companies.25

He came early to the scramjet. Taking this engine as his own, he faced its technical difficulties squarely and asserted that they could be addressed, giving examples of approaches that held promise. He repeatedly emphasized that scramjets could offer performance far higher than that of rockets. He presented papers at international conferences, bringing these ideas to a wider audience. In turn, his strong professional reputation ensured that he was taken seriously. He also performed experiments as he sought to validate his claims. More than anyone else, Ferri turned the scramjet from an idea into an invention, which might be developed and made practical.

His path to the scramjet began during the 1950s, when his work as a consultant brought him into a friendship with Alexander Kartveli at Republic Aviation. Louis Nucci, Ferri’s longtime colleague, recalls that the two men “made good sparks. They were both Europeans and learned men; they liked opera and history.” They also complemented each other professionally, as Kartveli focused on airplane design while Ferri addressed difficult problems in aerodynamics and propulsion. The two men worked together on the XF-103 and fed off each other, each encouraging the other to think bolder thoughts. Among the boldest was a view that there were no natural limits to aircraft speed or performance. Ferri put forth this idea initially; Kartveli then supported it with more detailed studies.26

The key concept, again, was the scramjet. Holding a strong penchant for experimentation, Ferri conducted research at Brooklyn Poly. In September 1958, at a conference in Madrid, he declared that steady combustion, without strong shocks, had been accomplished in a supersonic airstream at Mach 3.0. This placed him midway in time between the supersonic-combustion demonstrations at Marquardt and at APL.27

Shock-free flow in a duct continued to loom as a major problem. The Lewis, Marquardt, and APL investigators had all bypassed this issue by treating external combustion in the supersonic flow past a wing, but Ferri did not flinch. He took the problem of shock-free flow as a point of departure, thereby turning the ducted scramjet from a wish into a serious topic for investigation.

In supersonic wind tunnels, shock-free flow was an everyday affair. However, the flow in such tunnels achieved its supersonic Mach values by expanding through a nozzle. By contrast, flow within a scramjet was to pass through a supersonic inlet and then be strongly heated within a combustor. The inlet actually had the purpose of producing a shock, an oblique one that was to slow and compress the flow while allowing it to remain supersonic. However, the combustion process was only too likely to produce unwanted shocks, which would limit an engine’s thrust and performance.

Nicholls, at Michigan, proposed to make a virtue of necessity by turning a combustor shock to advantage. Such a shock would produce very strong heating of the flow. If the fuel and air had been mixed upstream, then this combustor shock could produce ignition. Ferri would have none of this. He asserted that “by using a suitable design, formation of shocks in the burner can be avoided.”28

Specifically, he started with a statement by NACA’s Weber and MacKay on combustors. These researchers had already written that the combustor needed a diverging shape, like that of a rocket nozzle, to overcome potential limits on the airflow rate due to heat addition (“thermal choking”). Ferri proposed that within such a combustor, “fuel is injected parallel to the stream to eliminate formation of shocks…. The fuel gradually mixes with the air and burns…and the combustion process can take place without the formation of shocks.” Parallel injection might take place by building the combustor with a step or sudden widening. The flow could expand as it passed the step, thereby avoiding a shock, while the fuel could be injected at the step.29

Ferri also made an intriguing contribution in dealing with inlets, which are critical to the performance of scramjets. He did this by introducing a new concept called “thermal compression.” One approaches it by appreciating that a process of heat addition can play the role of a normal shock wave. When an airflow passes through such a shock, it slows in speed and therefore diminishes in Mach, while its temperature and pressure go up. The same consequences occur when a supersonic airflow is heated. It therefore follows that a process of heat addition can substitute for a normal shock.30
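
The effect of heating a supersonic stream can be sketched with the standard one-dimensional Rayleigh-flow relations. The example assumes a frictionless, constant-area duct and a constant ratio of specific heats, which is a simpler setting than the diverging combustors and two-dimensional heating that Ferri had in mind; the function names are illustrative. It also shows the thermal-choking limit: heat addition beyond a certain amount would drive the flow to Mach 1.

```python
GAMMA = 1.4  # assumed constant ratio of specific heats


def t0_ratio_to_choke(mach, gamma=GAMMA):
    """Rayleigh-flow ratio T0/T0*, which equals 1 at Mach 1."""
    m_sq = mach ** 2
    return (2.0 * (gamma + 1.0) * m_sq * (1.0 + 0.5 * (gamma - 1.0) * m_sq)
            / (1.0 + gamma * m_sq) ** 2)


def heat_supersonic_flow(mach_in, t0_out_over_t0_in, gamma=GAMMA):
    """Return (Mach out, p_out/p_in) after heating a supersonic stream in a
    constant-area, frictionless duct. Raises ValueError if the requested
    heating exceeds the thermal-choking limit."""
    if mach_in <= 1.0 or t0_out_over_t0_in < 1.0:
        raise ValueError("expects supersonic inflow and heat addition (ratio >= 1)")
    target = t0_ratio_to_choke(mach_in, gamma) * t0_out_over_t0_in
    if target > 1.0:
        raise ValueError("heat addition exceeds the thermal-choking limit")
    # On the supersonic branch T0/T0* falls as Mach rises, so bisect on [1, mach_in].
    lo, hi = 1.0, mach_in
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if t0_ratio_to_choke(mid, gamma) > target:
            lo = mid  # mid is too close to Mach 1; the answer lies at higher Mach
        else:
            hi = mid
    mach_out = 0.5 * (lo + hi)
    # Momentum balance in a constant-area duct: p * (1 + gamma * M^2) is conserved.
    pressure_ratio = (1.0 + gamma * mach_in ** 2) / (1.0 + gamma * mach_out ** 2)
    return mach_out, pressure_ratio


if __name__ == "__main__":
    mach_out, p_ratio = heat_supersonic_flow(3.0, 1.3)
    print(f"Mach 3.0 inflow heated 30%: Mach {mach_out:.2f}, pressure x{p_ratio:.2f}")
```

In this idealized case, raising the stagnation temperature lowers the Mach number and raises the static pressure, the same qualitative changes a shock would produce.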

Practical inlets use oblique shocks, which are two-dimensional. Such shocks afford good control of the aerodynamics of an inlet. If heat addition is to substitute for an oblique shock, it too must be two-dimensional. Heat addition in a duct is one-dimensional, but Ferri proposed that numerous small burners, set within a flow, could achieve the desired two-dimensionality. By turning individual burners on or off, and by regulating the strength of each one’s heating, he could produce the desired pattern of heating that in fact would accomplish the substitution of heating for shock action.31

Why would one want to do this? The nose of a hypersonic aircraft produces a strong bow shock, an oblique shock that accomplishes initial compression of the airflow. The inlet rides well behind the nose and features an enclosing cowl. The cowl, in turn, has a lip or outer rim. For best effectiveness, the inlet should sustain a “shock-on-lip” condition. The shock should not impinge within the inlet, for only the lip is cooled in the face of shock-impingement heating. But the shock also should not ride outside the inlet, or the inlet will fail to capture all of the shock-compressed airflow.

To maintain the shock-on-lip condition across a wide Mach range, an inlet requires variable geometry. This is accomplished mechanically, using sliding seals that must not allow leakage of very hot boundary-layer air. Ferri’s principle of thermal compression raised the prospect that an inlet could use fixed geometry, which was far simpler. It would do this by modulating its burners rather than by physically moving inlet hardware.
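
The reason a fixed inlet cannot hold the shock on the lip is that the angle of an oblique shock changes with Mach number. The sketch below solves the standard theta-beta-Mach relation for the weak attached shock on a simple wedge, assuming a constant ratio of specific heats. The 10-degree wedge and unit distance to the cowl lip are hypothetical values standing in for a real forebody.

```python
import math

GAMMA = 1.4  # assumed constant ratio of specific heats


def deflection_angle(mach, beta, gamma=GAMMA):
    """Flow turning angle (rad) produced by an oblique shock at angle beta (rad)."""
    m_sq = mach ** 2
    numerator = 2.0 * (m_sq * math.sin(beta) ** 2 - 1.0) / math.tan(beta)
    denominator = m_sq * (gamma + math.cos(2.0 * beta)) + 2.0
    return math.atan(numerator / denominator)


def weak_shock_angle(mach, theta, gamma=GAMMA, steps=20000):
    """Weak-solution shock angle (rad) for a wedge of half-angle theta (rad)."""
    beta_min = math.asin(1.0 / mach)  # Mach angle, where the deflection is zero
    step = (0.5 * math.pi - beta_min) / steps
    lo = beta_min
    # Scan upward from the Mach angle; the first crossing is the weak solution.
    for i in range(1, steps):
        hi = beta_min + i * step
        if deflection_angle(mach, hi, gamma) >= theta:
            for _ in range(60):  # refine the bracket by bisection
                mid = 0.5 * (lo + hi)
                if deflection_angle(mach, mid, gamma) < theta:
                    lo = mid
                else:
                    hi = mid
            return 0.5 * (lo + hi)
        lo = hi
    raise ValueError("wedge angle too large for an attached shock at this Mach number")


if __name__ == "__main__":
    wedge = math.radians(10.0)  # hypothetical forebody wedge angle
    lip_distance = 1.0          # hypothetical axial distance from nose to cowl lip
    for mach in (4.0, 6.0, 8.0):
        beta = weak_shock_angle(mach, wedge)
        lip_height = lip_distance * math.tan(beta)
        print(f"Mach {mach:.0f}: shock angle {math.degrees(beta):.1f} deg, "
              f"shock height at lip station {lip_height:.3f}")
```

As Mach rises the shock lies closer to the body, so a lip positioned for one speed is missed at another unless the geometry, or in Ferri’s proposal the heating pattern, is adjusted.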

Thermal compression brought an important prospect of flexibility. At a given value of Mach, there typically was only one arrangement of a variable-geometry inlet that would produce the desired shock that would compress the flow. By contrast, the thermal-compression process might be adjusted at will simply by controlling the heating. Ferri proposed to do this by controlling the velocity of injection of the fuel. He wrote that “the heat release is controlled by the mixing process, [which] depends on the difference of velocity of the air and of the injected gas.” Shock-free internal flow appeared feasible: “The fuel is injected parallel to the stream to eliminate formation of shocks [and] the combustion process can take place without the formation of shocks.” He added,

“The preliminary analysis of supersonic combustion ramjets…indicates that combustion can occur in a fixed-geometry burner-nozzle combination through a large range of Mach numbers of the air entering the combustion region. Because the Mach number entering the burner is permitted to vary with flight Mach number, the inlet and therefore the complete engine does not require variable geometry. Such an engine can operate over a large range of flight Mach numbers and, therefore, can be very attractive as an accelerating engine.”32

There was more. As noted, the inlet was to produce a bow shock of specified character, to slow and compress the incoming air. But if the inflow was too great, the inlet would disgorge its shock. This shock, now outside the inlet, would disrupt the flow within the inlet and hence in the engine, with the drag increasing and the thrust falling off sharply. This was known as an unstart.

Supersonic turbojets, such as the Pratt & Whitney J58 that powered the SR-71 to speeds beyond Mach 3, typically were fitted with an inlet that featured a conical spike at the front, a centerbody that was supposed to translate back and forth to adjust the shock to suit the flight Mach number. Early in the program, it often did not work.33 The test pilot James Eastham was one of the first to fly this spy plane, and he recalls what happened when one of his inlets unstarted.

“An unstart has your full and undivided attention, right then. The airplane gives a very pronounced yaw; then you are very preoccupied with getting the inlet started again. The speed falls off; you begin to lose altitude. You follow a procedure, putting the spikes forward and opening the bypass doors. Then you would go back to the automatic positioning of the spike, which many times would unstart it again. And when you unstarted on one side, sometimes the other side would also unstart. Then you really had to give it a good massage.”34

The SR-71 initially used a spike-positioning system from Hamilton Standard. It proved unreliable, and Eastham recalls that at one point, “unstarts were literally stopping the whole program.”35 This problem was eventually overcome through development of a more capable spike-positioning system, built by Honeywell.36 Still, throughout the development and subsequent flight career of the SR-71, the positioning of inlet spikes was always done mechanically. In turn, the movable spike represented a prime example of variable geometry.

Scramjets faced similar issues, particularly near Mach 4. Ferri’s thermal-compression principle applied here as well, and it raised the prospect of an inlet that might fight against unstarts by using thermal rather than mechanical arrangements. An inlet with thermal compression then might use fixed geometry all the way to orbit, while avoiding unstarts in the bargain.

Ferri presented his thoughts publicly as early as 1960. He went on to give a far more detailed discussion in May 1964, at the Royal Aeronautical Society in London. This was the first extensive presentation on hypersonic propulsion for many in the audience, and attendees responded effusively.

One man declared that “this lecture opened up enormous possibilities. Where they had, for lack of information, been thinking of how high in flight speed they could stretch conventional subsonic burning engines, it was now clear that they should be thinking of how far down they could stretch supersonic burning engines.” A. D. Baxter, a Fellow of the Society, added that Ferri “had given them an insight into the prospects and possibilities of extending the speed range of the airbreathing engine far beyond what most of them had dreamed of; in fact, assailing the field which until recently was regarded as the undisputed regime of the rocket.”37

Not everyone embraced thermal compression. “The analytical basis was rather weak,” Marquardt’s Arthur Thomas commented. “It was something that he had in his head, mostly. There were those who thought it was a lot of baloney.” Nor did Ferri help his cause in 1968, when he published a Mach 6 inlet that offered “much better performance” at lower Mach “because it can handle much higher flow.” His paper contained not a single equation.38

But Fred Billig was one who accepted the merits of thermal compression and gave his own analyses. He proposed that at Mach 5, thermal compression could increase an engine’s specific impulse, an important measure of its performance, by 61 percent. Years later he recalled Ferri’s “great capability for visualizing, a strong physical feel. He presented a full plate of ideas, not all of which have been realized.”39

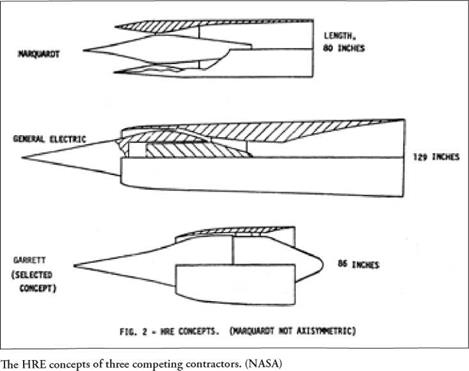

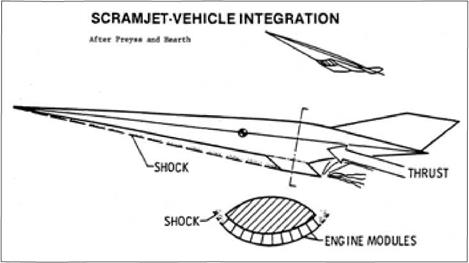

Podded engines like the HRE cannot do this. The axisymmetry of the HRE made it easy to study because it had a two-dimensional layout, but it was not suitable for an operational engine. The scramjet that indeed could capture and process most of the airflow is known as an airframe-integrated engine, in which much of the aircraft serves as part of the propulsion system. Its layout is three-dimensional and hence is more complex, but only an airframe-integrated concept has the additional power that can make it practical for propulsion. Paper studies of airframe-integrated concepts began at Langley in 1968, breaking completely with those of HRE. These investigations considered the

Within the Hypersonic Propulsion Branch, John Henry and Shimer Pinckney developed the initial concept. Their basic installation was a module, rectangular in shape, with a number of them set side by side to encircle the lower fuselage and achieve the required high capture of airflow. Their inlet had a swept opening that angled backward at 48 degrees.

Northam and Anderson wrote that it “was also tested at Mach 4 and demonstrated good performance without combustor-inlet interaction.”27

The name “Bomarc” derives from the contractors Boeing and the Michigan Aeronautical Research Center, which conducted early studies. It was a single-stage, ground-launched antiaircraft missile that could carry a nuclear warhead. A built-in liquid-propellant rocket provided boost; it was replaced by a solid rocket in a later version. Twin ramjets sustained cruise at Mach 2.6. Range of the initial operational model was 250 miles, later extended to 440 miles.9

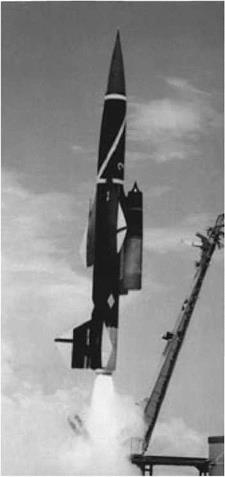

Paralleling Bomarc, the Navy pursued an independent effort that developed a ship-based antiaircraft missile named Talos, after a mythical defender of the island of Crete. It took shape at a major ramjet center, the Applied Physics Laboratory (APL) of Johns Hopkins University. Like Bomarc, Talos was nuclear-capable; Jane’s gave its speed as Mach 2.5 and its range as 65 miles. An initial version first flew in 1952, at New Mexico’s White Sands Missile Range. A prototype of a nuclear-capable version made its own first flight in December 1953. The Korean War had sparked development of this missile, but the war ended in mid-1953 and the urgency diminished. When the Navy selected the light cruiser USS Galveston for the first operational deployment of Talos, the conversion of this ship became a four-year task. Nevertheless, Talos finally joined the fleet in 1958, with other cruisers installing it as well. It remained in service until 1980.13

with launches of complete missiles taking place at Cape Canaveral. The first four were flops; none even got far enough to permit ignition of the ramjets. In mid-July of

The missile accelerated on rocket power and leveled off, the twin ramjet engines ignited, and it stabilized in cruise at 64,000 feet. It continued in this fashion for half an hour. Then, approaching the thousand-mile mark in range, its autopilot initiated a planned turnaround to enable this Navaho to fly back uprange. The turn was wide, and ground controllers responded by tightening it under radio control. This disturbed the airflow near the inlet of the right ramjet, which flamed out. The missile lost speed, its left engine also flamed out, and the vehicle fell into the Atlantic. It had been airborne for 42 minutes, covering 1,237 miles.15

Moreover, General Skantze was advancing into high-level realms of command, where he could make his voice heard. In August 1982 he went to Air Force Headquarters, where he took the post of Deputy Chief of Staff for Research, Development, and Acquisition. This gave him responsibility for all Air Force programs in these areas. In October 1983 he pinned on his fourth star as he took an appointment as Air Force Vice Chief of Staff. In August 1984 he became Commander of the Air Force Systems Command.36

the speed of a spaceplane. In literally a week and a half, the entire Air Force senior command was briefed.”

Rockwell International, McDonnell Douglas, General Dynamics, Boeing, Lockheed

vehicle was not to reach orbit entirely on slush-fueled scramjets but was to use a rocket for final ascent.

The model had “two knifelike, very thin, swept-back wings.” Mounted at its center of gravity, it “rotated at the slightest touch.” When the test began, a technician opened a valve to start the airflow. In Dornberger’s words,
