ESA, ‘Spacelab,’ and the Second Wave of American Imports

By 1972, European space programs were in the most severe of their many political crises. ELDO’s first Europa II launch had failed, and an international commission was investigating the organization’s many flaws. German and Italian leaders backed away from their commitments to ELDO. The United States offered the Europeans a part in the new shuttle program as a way to cut development costs and to discourage the European launcher program. In addition, the United States sent ambiguous signals to European governments on its willingness to launch European communications satellites. All European governments agreed on the criticality of satellite telecommunications, but ESRO’s charter did not allow commercial applications, and the telecommunications organization CETS had no satellite design capability. A sense of crisis pervaded negotiations among the European nations concerning their space programs.

Each of the member states of ELDO, ESRO, and CETS confronted the myriad problems and opportunities differently. The United Kingdom decisively turned its back on launch vehicles in favor of communications satellites. British leaders believed it wiser to use less expensive American launchers, so as to concentrate scarce resources on profitable communications satellites. Doubting that the United States would ever launch European satellites that would compete with the American satellite industry, French leaders insisted on developing a European launch vehicle. German leaders became disillusioned with launchers and were committed to cooperation with the United States. Because of their embarrassing failures on ELDO’s third stage, they wanted to acquire American managerial skills. Italy wanted to ensure a “just return” on its investments in the European programs by having a larger percentage of contracts issued to Italian firms. The smaller countries needed a European program in order to participate in space programs at all.

The result of these interests was the package deal of 1972 that created the ESA. Britain received its maritime communications satellite. France got its favorite program, a new launch vehicle called Ariane. Germany acquired its cooperative program with the United States, Spacelab, a scientific laboratory that fit in the shuttle orbiter payload bay. The Italians received a guarantee of higher returns on Italy’s investments. All member states had to fund the basic operations costs of the new ESA and participate in a mandatory science program. Beyond that, participation was voluntary, based upon an a-la-carte system where the countries could contribute as they saw fit on projects of their choice. European leaders liquidated ELDO and made ESRO the basis for ESA, which came into official existence in 1975. ESTEC Director Roy Gibson became ESRO’s new director-general and would become the first leader of ESA.70

Two factors drove the further Americanization of European space efforts: the hard lessons of ELDO’s failure, and cooperation with NASA on Spacelab. By 1973, both ELDO and ESRO had adopted numerous American techniques, leading to a convergence of their management methods. On the Europa III program, ELDO finally used direct contracting through work package management, augmented by phased planning to estimate costs. ESRO managers had done the same in the TD-1 program and in ESRO’s next major project, COS-B. ESRO’s two largest new projects, Ariane and Spacelab, inherited these lessons.

For Ariane, ESRO made CNES the prime contractor. CNES had responsibilities and authority that ELDO never had. ESRO required that CNES provide a master plan with a work breakdown structure, monthly reports with PERT charts, expenditures, contract status, and technical reports. CNES would deliver a report each month to ESRO and would submit to an ESRO review each quarter. CNES could transfer program funds if necessary within the project but had to report these transfers to ESRO. ESRO kept quality assurance functions in-house to independently monitor the program. Reviewers from ESRO required that CNES further develop its initial Work Breakdown Structure, which was “neither complete nor definitive,” and specify more clearly its interface responsibilities. The French took no chances after the disaster of ELDO and fully applied these techniques, as well as extending test programs developed for ELDO and for French national programs such as Diamant. French managers and engineers led the Ariane European consortium to spectacular success by the early 1980s, capturing a large share of the commercial space launch market.71

While Ariane used some American management methods,72 Spacelab brought another wave of American organizational imports to Europe. This suited Spacelab’s German sponsors, who wanted to learn more about American management. NASA required that the Europeans mimic its structure and methods. ESRO reorganized its headquarters structure to match that of NASA, with a Programme Directorate at ESRO headquarters, and a project office at ESTEC reporting to the center director. The NASA-ESRO agreement required that ESRO provide a single contact person for the program and that this person be responsible for schedules, budgets, and technical efforts. Contrary to its earlier practice, ESRO placed its Spacelab head of project coordination with the technical divisions such as power systems, and guidance and control. ESRO used phased project planning, eventually selecting German company ERNO as prime contractor. ERNO contracted with American aerospace company McDonnell Douglas as systems engineering consultant, while NASA gave ESRO the results of earlier American design studies and described its experience with Skylab.73

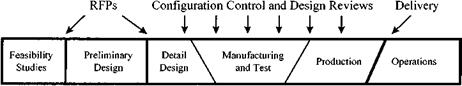

To ensure that the program could be monitored, NASA imposed a full slate of reviews and working groups on ESRO and its Spacelab contractors. NASA held the Preliminary Requirements Review in November 1974, the Subsystems Requirements Review in June 1975, the Preliminary Design Review in 1976, the Critical Design Review in 1978, and the Final Acceptance Reviews in 1981 and 1982. Just as in the earlier ESRO-I and ESRO-II projects, NASA and ESRO instituted a Joint Spacelab Working Group that met every other month to work out interfaces and other technical issues. Spacelab managers and engineers organized working sessions with their NASA counterparts, including the Spacelab Operations Working Group, the Software Coordination Group, and the Avionics Ad Hoc Group. European scientists coordinated with the Americans through groups such as the NASA/ESRO Joint Planning Group. The two organizations instituted joint annual reviews by the NASA administrator and the ESRO director-general and established liaison offices at each other’s facilities.74

ESRO did not easily adapt to NASA’s philosophy. From the American viewpoint, ESRO managers could not adequately observe contractors’ technical progress prior to hardware delivery, so NASA pressed ESRO to “penetrate the contractor.” NASA brought more than 100 people from the United States for some Spacelab reviews, whereas in the early years of Spacelab, ESRO’s entire team was 120 people. Heinz Stoewer, Spacelab’s first project manager, pressed negotiations all the way to the ESRO director-general to reduce NASA’s contingent. According to Stoewer, NASA’s presence was so overwhelming that “we could only survive those early years by having a close partnership between ourselves and Industry.”75

NASA engineers and managers strove to break this unstated partnership and force ESA76 managers to adopt NASA’s approach. During 1975, NASA and ESA engineers worked on the interface specifications between Spacelab and the shuttle orbiter. During the first Spacelab Preliminary Design Review in June 1976, NASA engineers and managers criticized their ESA counterparts for their lack of penetrating questions. To emphasize the point, NASA rejected ERNO’s preliminary design. ESA finally placed representatives at ERNO to monitor and direct the contractor more forcefully.77

One of the major problems uncovered during the Preliminary Design Reviews was a large underestimate of effort and costs for software development. These problems bubbled up to the ESA Council, leading to a directive to improve software development and cost estimation. ESTEC engineers investigated various software development processes and eventually decided to use the NASA Jet Propulsion Laboratory’s software standard as the basis for ESA software specifications. ESA arranged for twenty-one American software engineers and managers from TRW to assist. TRW programmers initially led the effort but gave responsibility to their European counterparts as they acquired the necessary skills. With this help, software became the first subsystem qualified for Spacelab.78

Another critical problem was Spacelab’s large backlog of changes, which resulted from a variety of causes. Organizational problems magnified the technical complexity of the project, which the Europeans had underestimated. One major problem was that ESA allowed ERNO to execute “make-work” changes without ESA approval. ESA, whether or not it agreed with a change, had to repay the contractor. Another problem was the relationship between ESA and NASA. The agreement between the two organizations specified that each would pay for changes to its hardware, regardless of where the change originated. For example, if NASA decided to make a change to the shuttle that required a change to Spacelab, ESA had to pay for the Spacelab modification. This situation occurred frequently at the program’s beginning, leading to protests by ESA executives.79

Spacelab managers solved their change control problems through several methods. First, they altered their lenient contractor change policy; ESA approval would be required for all modifications. Second, ESA management resisted NASA’s continuing changes. In negotiations by Director-General Roy Gibson, NASA executives finally realized the negative impact that changes to the shuttle had on Spacelab. As ESA’s costs mounted, NASA managers came to understand that they could not make casual changes that affected the European module. The sheer volume of changes to Spacelab reached the point where ERNO and ESA could not process individual changes quickly enough to meet the schedule. In a “commando-type operation,” ESA and ERNO negotiated an Omnibus Engineering Change Proposal that covered many of the changes in a single document. ERNO managers decided to begin engineering work on changes before they were approved, taking the risk that they might not recoup the costs through negotiations.80

Technical and managerial problems led also to personnel changes at ESA and ERNO. At ESA, Robert Pfeiffer replaced Heinz Stoewer as project manager in March 1977, while Michel Bignier took over as the program director in November 1976. At ERNO, Ants Kutzer replaced Hans Hoffman. Kutzer, who was Hoffman’s deputy at the time, was well known as the former ESRO-II project manager and a promoter of American management methods. After his stint at ESRO, Kutzer became manager of the German Azur and Helios spacecraft projects. For a time, ESA Director-General Roy Gibson acted as the de facto program manager because of Spacelab’s interactions with NASA, which required negotiations and communications at the highest level.81

ESA and ERNO eventually passed the second Preliminary Design Review in November 1976 by developing some 400 volumes of material. The program still had a number of hurdles to overcome, of which growing costs were the most difficult. Upon ESA’s creation, the British insisted that ESA, like ESRO, have strong cost controls. For a-la-carte programs such as Spacelab, when costs reached 120% of the original agreement, contributing countries could withdraw from the project. Spacelab was the first program to pass the 120% threshold. After serious negotiations and severe cost cutting, the member states agreed to continue with a new cap of 140%. Eventually, ESA delivered Spacelab to NASA and the module flew successfully on a number of shuttle missions in the 1980s.82

The transfer of American systems engineering and project management methods to ESA was effectively completed by 1979, with the creation of the Systems Engineering Division at ESTEC, headed by former Spacelab project manager Stoewer. According to Stoewer, one of the Systems Engineering Division’s main purposes was to ensure “more comprehensive technical support to mission feasibility and definition studies.” The Systems Engineering Division established an institutional home for systems analysis and systems engineering, which along with the Project Control Division ensured that ESA would use systems management for years to come.83

Conclusion

ESRO began as an organization dedicated to the ideals of science and European integration. The CERN model of an organization controlled by scientists, for scientists, greatly influenced early discussions about a new organization for space research. However, space was unlike high-energy physics in the diversity of its constituency, the greater involvement of the military and industry, and the predominance of engineers in building satellites. Engineering, not science, became ESRO’s dominant force.

From the start, ESRO personnel looked to the United States for management models. Leaders of ESRO’s early projects asked for and received American advice through joint NASA-ESRO working groups for ESRO-I and ESRO-II. The United States offered ESRO two free launches on its Scout rockets but would not allow the Europeans to launch until they met American concerns at working group meetings, project reviews, and a formal Flight Readiness Review. On HEOS, executives and engineers from the loose European industrial consortium led by Junkers traveled to California for advice, where Lockheed advised much closer cooperation, along with a detailed management section in its proposal. ESRO’s selection of the Junkers team by a large margin made other European companies take notice, leading to tightly knit industrial consortia that at least paid lip service to strong project management.

When ESRO’s member states refused to let ESRO carry forward its surplus in 1967, they immediately caused a financial crisis. Significant cost overruns on TD-1 and TD-2, along with strong pressure to develop telecommunications satellites by issuing a prime contract to industry, spurred ESRO to beef up its management methods. Drawing on NASA models, ESRO adopted phased planning, stronger configuration control, work package management, and an MIS at each ESRO facility.

American influence persisted through the 1970s. On Spacelab, NASA imposed its full slate of methods onto ESA. Some, such as contractor penetration, ESA initially resisted, before technical problems weakened the Europeans’ bargaining position. ESA managers and engineers eagerly adopted other methods, such as software engineering, once they realized the need for them. These became the standards for ESA projects thereafter.

Europeans created an international organization that developed a series of successful scientific and commercial satellites, captured the lion’s share of the world commercial space launch market, and pulled even with NASA in technical capability. To do so, they adapted NASA’s organizational methods to their own environment. In the process, ESRO changed from an organization supporting scientists into ESA, an engineering organization supporting national governments and industries. ESA’s organizational foundation, like that of NASA and the air force, was systems management.

EIGHT