With the advent of piloted orbital flight, NASA anticipated the potential effects of lightning upon launch vehicles in the Mercury, Gemini, and Apollo programs. Sitting atop immense boosters, the spacecraft were especially vulnerable on their launch pads and during liftoff. One NASA lecturer warned his audience in 1965 that explosive squibs, detonators, vapors, and dust were particularly vulnerable to detonation by static electricity; the amount of energy required to initiate detonation was "very small," and, as a consequence, such inadvertent triggering was "considerably more frequent than is generally recognized."[146]

As mentioned briefly, on November 14, 1969, at 11:22 a.m. EST, Apollo 12, crewed by astronauts Charles "Pete" Conrad, Richard F. Gordon, and Alan L. Bean, thundered aloft from Launch Complex 39A at the Kennedy Space Center. Launched amid a torrential downpour, it disappeared from sight almost immediately, swallowed up by dark, foreboding clouds that cloaked even its immense flaring exhaust. The rain clouds were electrically charged, and as the vehicle climbed through them it triggered two lightning discharges. As historian Roger Bilstein later wrote:

Within seconds, spectators on the ground were startled to see parallel streaks of lightning flash out of the cloud back to the launch pad. Inside the spacecraft, Conrad exclaimed "I don't know what happened here. We had everything in the world drop out." Astronauts Pete Conrad, Richard Gordon, and Alan Bean, inside the spacecraft, had seen a brilliant flash of light inside the spacecraft, and instantaneously, red and yellow warning lights all over the command module panels lit up like an electronic Christmas tree. Fuel cells stopped working, circuits went dead, and the electrically operated gyroscopic platform went tumbling out of control.

The spacecraft and rocket had experienced a massive power failure. Fortunately, the emergency lasted only seconds, as backup power systems took over and the instrument unit of the Saturn V launch vehicle kept the rocket operating.[147]

The electrical disturbance caused the loss of nine solid-state instrumentation sensors, none of which, fortunately, was essential to the safety or completion of the flight. It also caused a temporary loss of communications lasting between 30 seconds and 3 minutes, depending upon the particular system. Rapid engagement of backup systems permitted the mission to continue, though three fuel cells were automatically (and, as it later proved, unnecessarily) shut down. Afterward, NASA incident investigators concluded that the long combined length of the Saturn V rocket and its exhaust plume could trigger lightning, and they conceded that "the possibility that the Apollo vehicle might trigger lightning had not been considered previously."[148]

Apollo 12 constituted a dramatic wake-up call on the hazards of mixing large rockets and lightning. Afterward, the Agency devoted extensive efforts to assessing the nature of the lightning risk and seeking ways to mitigate it. The first fruit of this detailed study effort was the issuance, in August 1970, of revised electrodynamic design criteria for spacecraft. The criteria stipulated various means of spacecraft and launch facility protection, including:

1. Ensuring that all metallic sections are connected electrically (bonded) so that the current flow from a lightning stroke is conducted over the skin without any gaps where sparking would occur or current would be carried inside.

2. Protecting objects on the ground, such as buildings, by a system of lightning rods and wires over the outside to carry the lightning stroke to the ground.

3. Providing a cone of protection as part of the lightning protection plan for Saturn Launch Complex 39.

4. Providing protection devices in critical circuits.

5. Using systems that have no single failure mode; for example, the Saturn V launch vehicle uses triple-redundant circuitry on the auto-abort system, which requires two out of three of the signals to be correct before an abort is initiated.

6. Appropriate shielding of units sensitive to electromagnetic radiation.[149]
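The two-out-of-three rule in item 5 is a form of majority voting. The short Python sketch below illustrates only the idea; the function name `two_of_three_vote` is hypothetical, and treating each redundant channel as a simple abort/no-abort flag is a simplifying assumption, not a description of the actual Saturn V circuitry.

```python
from typing import Sequence


def two_of_three_vote(channel_signals: Sequence[bool]) -> bool:
    """Hypothetical 2-out-of-3 voter: return True (initiate abort) only
    when at least two of the three redundant channels call for an abort."""
    if len(channel_signals) != 3:
        raise ValueError("expected exactly three redundant channel signals")
    # Count the channels requesting an abort; a majority (two or more) is required.
    votes_for_abort = sum(1 for signal in channel_signals if signal)
    return votes_for_abort >= 2


# One channel corrupted by a transient cannot trigger an abort on its own...
print(two_of_three_vote([True, False, False]))  # False
# ...and one channel stuck "off" cannot block an abort the other two agree on.
print(two_of_three_vote([True, True, False]))   # True
```

Because a single faulty channel can neither initiate an abort by itself nor veto one the other two agree on, no single channel failure decides the outcome.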


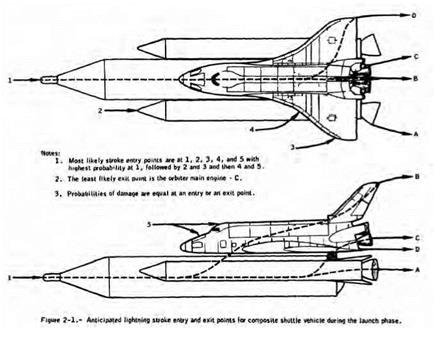

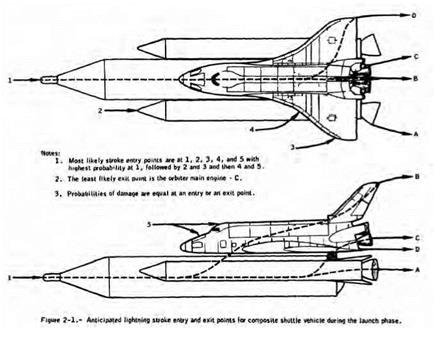

A 1973 NASA projection of likely paths taken by lightning striking a composite-structure Space Shuttle, showing attachment and exit points. NASA.


The stakes involved in lightning protection increased greatly with the advent of the Space Shuttle program. Officially named the Space Transportation System (STS), NASA's Space Shuttle was envisioned as a routine space logistical support vehicle and was touted by some as a "space age DC-3," a reference to the legendary Douglas airliner that had galvanized air transport on a global scale. Large, complex, and expensive, it required careful planning to avoid lightning damage, particularly surface burnthroughs that could constitute a flight hazard (as, alas, the loss of Columbia would tragically demonstrate three decades later). NASA predicated its studies of Shuttle lightning vulnerabilities on two major strokes: one having a peak current of 200 kA with a current rate of change of 100 kA per microsecond (100 kA/µs), and a second having a peak current of 100 kA with a rate of change of 50 kA/µs. Agency researchers also modeled various intermediate currents of lower energies. Analysis indicated that the Shuttle and its launch stack (consisting of the orbiter, mounted on a liquid-fuel tank flanked by two solid-fuel boosters) would most likely have lightning entry points at the tips of its tankage and boosters, the leading edges of its wings at mid-span and at the wingtips, its upper nose surface, and (least likely) the area above the cockpit. Likely exit points were the nozzles of the two solid-fuel boosters, the trailing-edge tip of the vertical fin, the trailing edge of the body flap, the trailing edges of the wingtips, and (least likely) the nozzles of its three liquid-fuel Space Shuttle main engines (SSMEs).[150] Because the Shuttle orbiter was, effectively, a large delta-wing aircraft, data and criteria assembled previously for conventional aircraft, including studies dating to the early 1940s, furnished a good reference base for Shuttle lightning prediction studies. In addition, Agency researchers undertook extensive tests to guard against inadvertent triggering of the Shuttle's solid rocket boosters (SRBs), because their premature ignition would be catastrophic.[151]
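The two design strokes can be made more concrete with a back-of-the-envelope calculation. Assuming, purely for illustration (the source does not describe the waveform), that the current ramps linearly from zero to its peak at the stated rate of change, the implied rise time is the peak current divided by that rate:

$$
t_{\text{rise}} \approx \frac{I_{\text{peak}}}{dI/dt}: \qquad
\frac{200\ \text{kA}}{100\ \text{kA}/\mu\text{s}} = 2\ \mu\text{s}, \qquad
\frac{100\ \text{kA}}{50\ \text{kA}/\mu\text{s}} = 2\ \mu\text{s}.
$$

Under this simplification both strokes peak in roughly 2 µs; the more severe case doubles both the peak current and the rate of change.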

Prudently, NASA ensured that the servicing structure on the Shuttle launch complex received an 80-foot lightning mast plus safety wires to guide strikes to the ground rather than through the launch vehicle. Dramatic proof of the system's effectiveness came in August 1983, when lightning struck the launch pad of the Shuttle Challenger before the launch of mission STS-8, commanded by Richard H. Truly. The mission, the first Shuttle night launch, subsequently proceeded as planned.

The hazards that lightning poses to a flight control system (FCS) were dramatically illustrated on March 26, 1987, when a bolt led to the loss of AC-67, an Atlas-Centaur mission carrying FLTSATCOM 6, a TRW, Inc., communications satellite developed for the Navy's Fleet Satellite Communications system. Approximately 48 seconds after launch, a cloud-to-ground lightning strike induced a spurious signal in the Centaur launch vehicle's digital flight control computer, which then sent a hard-over engine command. The resulting abrupt yaw overstressed the vehicle, causing its virtually immediate breakup. Coming after the weather-related loss of the Space Shuttle Challenger the previous year, the loss of AC-67 was particularly disturbing. In both cases, accident investigators found that the respective Kennedy launch teams had not taken adequate account of meteorological conditions at the time of launch.[152]

The accident led NASA to establish a Lightning Advisory Panel to provide parameters for determining whether a launch should proceed in the presence of electrical activity. It also, understandably, stimulated continuing research on the electrodynamic environment at the Kennedy Space Center and on the vulnerabilities of launch vehicles and facilities at the launch site. Vulnerability surveys extended to in-flight hardware, launch and ground support equipment, and ultimately almost any facility in areas of thunderstorm activity. The items identified as most vulnerable to lightning strikes were electronic systems, wiring and cables, and critical structures. The engineering challenge was to design methods of protecting those areas and systems without adversely affecting structural integrity or equipment performance.

To improve the fidelity of existing launch models and develop a better understanding of electrodynamic conditions around the Kennedy Space Center, NASA flew a modified single-seat, single-engine Schweizer powered sailplane, the Special Purpose Test Vehicle (SPTVAR), on 20 missions over the spaceport and its reservation between September 14 and November 4, 1988, measuring electrical fields. These trials took place in consultation with the Air Force (Detachment 11 of its 4th Weather Wing had responsibility for Cape lightning forecasting) and the New Mexico Institute of Mining and Technology, which selected candidate cloud forms for study and then monitored the real-time acquisition of field data. Flights ranged from 5,000 to 17,000 feet, averaged over an hour in duration, and took off from late morning to as late as 8 p.m. The SPTVAR aircraft dodged around electrified clouds towering as high as 35,000 feet while taking measurements of electrical fields, the net airplane charge, atmospheric liquid water content, ice particle concentrations, sky brightness, accelerations, air temperature and pressure, and basic aircraft parameters such as heading, roll and pitch angles, and spatial position.[153]

After the Challenger and AC-67 launch accidents, the ongoing Shuttle program remained a subject of particular Agency concern, especially the danger of lightning currents striking the Shuttle during rollout, on the pad, or upon liftoff. As the SPTVAR survey verified, large currents (greater than 100 kA) were extremely rare in the operating area. Researchers concluded that worst-case probabilities for an on-pad strike ranged from 0.0026 to 0.11953 percent. Trends evident in the data showed that specific operating procedures could further reduce the likelihood of a lightning strike. For instance, a study of lightning strike probabilities at Kennedy Space Center observed, "If the Shuttle rollout did not occur during the evening hours, but during the peak July afternoon hours, the resultant nominal probabilities for a >220 kA and >50 kA lightning strike are 0.04% and 0.21%, respectively. Thus, it does matter 'when' the Shuttle is rolled out."[154] Although estimates for a triggered strike on a Shuttle in ascent were not precisely determined, researchers concluded that a triggered strike (one caused by the moving vehicle itself) of any magnitude on an ascending launch vehicle is 140,000 times likelier than a direct hit on the pad. Because Cape Canaveral is America's premier space launch center, lightning at the Cape and its potential impact upon launch vehicles and facilities will remain a major NASA concern.

The intense U.S. research and development programs on high-angle-of-attack technology of the 1970s and 1980s ushered in a new era of carefree maneuvering for tactical aircraft. New options for close-in combat were now available to military pilots, and more importantly, departure/spin accidents were dramatically reduced. Design tools had been sharpened, and the widespread introduction of sophisticated digital flight control systems finally permitted the implementation of automatic departure and spin prevention systems. These advances did not go unnoticed by foreign designers, and emerging threat aircraft were rapidly developed and exhibited with comparable high-angle-of-attack capabilities.[1321] As the Air Force and Navy prepared for the next generation of fighters to replace the F-15 and F-14, the integration of superior maneuverability at high angles of attack and other performance- and signature-related capabilities became the new challenge.
