While Boeing and Douglas were reporting on early phases of their HSCT studies, the U.S. Congress approved an ambitious new program for High-Speed Research (HSR) in NASA’s budget for FY 1990. This effort envisioned Government and industry sharing the cost, with NASA taking the lead for the first several years and industry expanding its role as research progressed. (Because of the intermingling of sensitive and proprietary information, much of the work done during the HSR program was protected by a limited distribution system, and some has yet to enter the public domain.) Although the aircraft companies made some early progress on lower-boom concepts for the HSCT, they identified the need for more sonic boom research by NASA, especially on public acceptability and minimization techniques, before they could design a practical HSCT able to cruise over land.[470]

Because solving environmental issues would be a prerequisite to developing the HSCT, NASA structured the HSR program into two phases. Phase I (focusing on engine emissions, noise around airports, and sonic booms, as well as preliminary design work) was scheduled for 1990-1995. Among the objectives of Phase I were predicting HSCT sonic boom signatures, determining feasible reduction levels, and finding a scientific basis on which to set acceptability criteria. If sufficient progress had been made on the environmental problems, Phase II would begin in 1994. With more industry participation and greater funding, it would focus on economically realistic airframe and propulsion technologies and was expected to continue until 2001.[471]

When NASA convened its first workshop for the entire High-Speed Research program in Williamsburg, VA, on May 14-16, 1991, the headquarters status report on sonic boom technology warned that “the importance of reducing sonic boom cannot be overstated.” One of the Douglas studies had projected that even by 2010, overwater-only routes would account for only 28 percent of long-range air traffic, but with overland cruise, the proposed HSCT could capture up to 70 percent of all such traffic. Based on past experience, the study admitted that research on low-boom designs “is viewed with some skepticism as to its practical application. Therefore an early assessment is warranted.”[472]

NASA, its contractors, academic grantees, and the manufacturers were already busy conducting a wide range of sonic boom research projects. The main goals were to demonstrate a waveform shape that could be acceptable to the public, to prove that a viable airplane could be built to generate such a waveform, to determine that such a shape would not be too badly disrupted during its propagation through the atmosphere, and to estimate whether the economic benefit of overland supersonic flight would make up for any performance penalties imposed by a low-boom design.[473]

During the next 3 years, NASA and its partners went into a full-court press against the sonic boom. They began several dozen major experiments and studies, the results of which were published in reports and presented at conferences and workshops dealing solely with the sonic boom. These were held at the Langley Research Center in February 1992,[474] the Ames Research Center in May 1993,[475] the Langley Center in June 1994,[476] and again at Langley in September 1995.[477] The workshops, like the sonic boom effort itself, were organized into three major

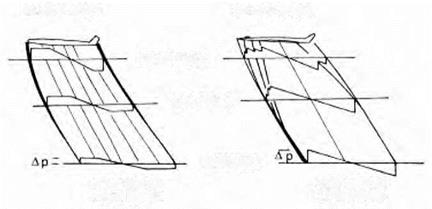

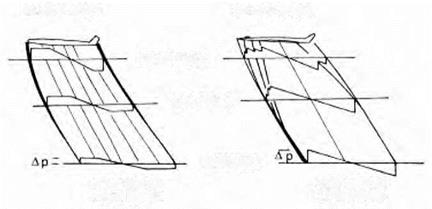

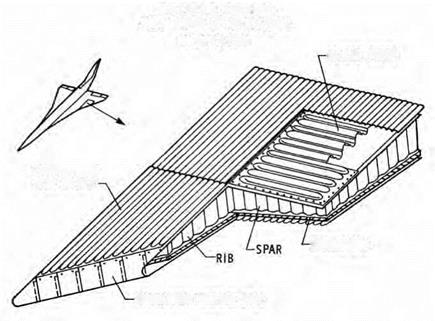

HIGH BOOM LOW DRAG

HIGH BOOM LOW DRAG

Figure 6. Low-boom/high-drag paradox. NASA.

areas of research: (1) configuration design and analysis (managed by Langley’s Advanced Vehicles Division), (2) atmospheric propagation, and (3) human acceptability (both managed by Langley’s Acoustics Division). The reports from these workshops were each well over 500 pages long and included dozens of papers on the progress or completion of various projects.[478]

The HSR program precipitated major advances in the design of supersonic configurations for reduced sonic boom signatures. Many of these were made possible by the new field of computational fluid dynamics (CFD). Researchers were now able to use complex computational algorithms processed by supercomputers to calculate the nonlinear aspects of near-field shock waves, even at high Mach numbers and angles of attack. Results could be graphically displayed in mesh and grid formats that emulated three dimensions. (In simple terms: before CFD, the nonlinear characteristics of shock waves generated by a realistic airframe had involved too many variables and permutations to calculate by conventional means.)

The Ames Research Center, with its location in the rapidly growing Silicon Valley area, was a pioneer in applying CFD capabilities to aerodynamics. At the 1991 HSR workshop, an Ames team led by Thomas Edwards and including modeling expert Samsun Cheung predicted that “in many ways, CFD paves the way to much more rapid progress in boom minimization. . . . Furthermore, CFD offers fast turnaround and low cost, so high-risk concepts and perturbations to existing geometries can be investigated quickly.”[479]

At the same time, Christine Darden and a team that included Robert Mack and Peter G. Coen, who had recently devised a computer program for predicting sonic booms, used very realistic 12-inch wind tunnel models (the largest yet used to measure sonic boom). Although these models were more realistic than previous ones and validated much about the designs, including such details as engine nacelles, signature measurements in Langley’s 4 by 4 Unitary Wind Tunnel and even Ames’s 9 by 7 Unitary Wind Tunnel still left much to be desired.[480] During subsequent workshops and at other venues, experts from Ames, Langley, and their local contractors reported optimistically on the potential of new CFD computer codes, with names like UPS3D, OVERFLOW, AIRPLANE, and TEAM, to help design configurations optimized for both constrained sonic booms and aerodynamic efficiency. In addition to promoting the use of CFD, former Langley employee Percy “Bud” Bobbitt of Eagle Aeronautics pointed out the potential of hybrid laminar flow control (HLFC) for both aerodynamic and low-boom purposes.[481] At the 1992 workshop, Darden and Mack admitted that recent experiments at Langley had revealed limitations in using near-field wind tunnel data for extrapolating sonic boom signatures.[482]

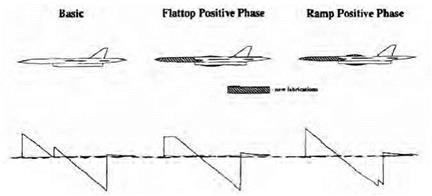

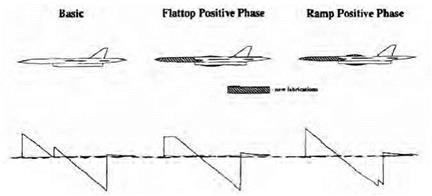

Even the number-crunching capability of supercomputers was not yet powerful enough for CFD codes and the grids they produced to accurately depict effects beyond the near field, but the use of parallel computing held the promise of eventually doing so. It was becoming apparent that, for most aerodynamic purposes, CFD was the design tool of the future, with wind tunnel models becoming more a means of verification. As just one example of the value of CFD methods, Ames researchers were able to design an airframe that generated a type of multishock signature that might reach the ground with a quieter sonic boom than either the ramp or flattop waveforms that were a goal of traditional minimization theories.[483] Although not part of the HSCT effort, Ames and its contractors also used CFD to continue exploring the possible advantages of oblique-wing aircraft, including sonic boom minimization.[484]

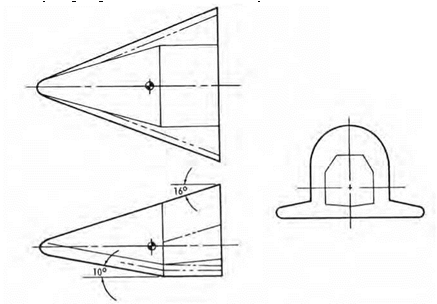

Since neither wind tunnels nor CFD could as yet prove the persistence of waveforms for more than a small fraction of the 200 to 300 body lengths needed to represent the distance from an HSCT to the surface, Domenic Maglieri of Eagle Aeronautics led a feasibility study in 1992 on the most cost-effective ways to verify design concepts with realistic testing. After exploring a wide range of alternatives, the team selected the Teledyne-Ryan BQM-34 Firebee II remotely piloted vehicle (RPV), which the Air Force and Navy had used as a supersonic target drone. Four of these 28-foot-long RPVs, which could sustain a speed of Mach 1.3 at 9,000 feet (300 body lengths from the surface), were still available as surplus. Modifying them with low-boom design features, such as specially configured 40-inch nose extensions (shown in Figure 7 with projected waveforms from 20,000 feet), could provide the far-field measurements needed to verify the waveform shaping projected by CFD and wind tunnel models.[485]

Meanwhile, a complementary plan at the Dryden Flight Research Center led to NASA’s first significant sonic boom testing there since the 1960s. SR-71 program manager David Lux, atmospheric specialist L. J. Ehernberger, aerodynamicist Timothy R. Moes, and principal investigator Edward A. Haering came up with a proposal to demonstrate CFD design concepts by having one of Dryden’s SR-71s modified with a low-boom configuration. As well as being much larger, faster, and higher-flying than the little Firebee, an SR-71 would also allow easier acquisition of near-field measurements for direct comparison with CFD predictions.[486] To lay the groundwork for this modification, the Dryden Center obtained baseline data from a standard SR-71 using one of its distinctive F-16XL aircraft (built by General Dynamics in the early 1980s for evaluation by the Air Force as a long-range strike version of the F-16 fighter).
In tests at Edwards during July 1993, the F-16XL flew as close as 40 feet below and behind an SR-71 cruising at Mach 1.8 to collect near-field pressure measurements. Both the Langley Center and McDonnell-Douglas analyzed these data, which had been gathered by a standard flight-test nose boom. Both reached generally favorable conclusions about the ability of CFD and McDonnell-Douglas’s proprietary MDBOOM program (derived from PCBoom) to serve as design tools. Based on these results, McDonnell-Douglas Aerospace West designed modifications to reduce the bow and middle shock waves of the SR-71 by reshaping the front of the airframe with a “nose glove” and adding to the midfuselage cross-section. An assessment of these modifications by Lockheed Engineering & Sciences found them feasible.[487] The next step would be to obtain the considerable funding needed for the modifications and testing.

In May 1994, the Dryden Center used two of its fleet of F-18 Hornets to measure how near-field shock waves merged, in order to assess the feasibility of a similar low-cost experiment in waveform shaping using two SR-71s. With the two aircraft flying at Mach 1.2, one below and slightly behind the other, the first experiment positioned the canopy of the lower F-18 in the tail shock extending down from the upper F-18 (called a tail-canopy formation). The second experiment had the lower F-18 fly with its canopy in the inlet shock of the upper F-18 (inlet-canopy). Ground sensor recordings revealed that the tail-canopy formation caused two separate N-wave signatures, but the inlet-canopy formation yielded a single modified signature, which two of the recorders measured as a flattop waveform. Even with the excellent visibility from the F-18’s bubble canopy (one pilot used the inlet shock wave as a visual cue for positioning his aircraft) and its responsive flight controls, maintaining such precise positions was still not easy, and the pilots recommended against trying to do the same with the SR-71, considering its larger size, slower response, and limited visibility.[488]

Figure 7. Proposed modifications to BQM-34 Firebee II. NASA.

Atmospheric effects had long posed many uncertainties in understanding sonic booms, but advances in acoustics and atmospheric science since the SCR program promised better results. Scientists needed a better understanding not only of the way air molecules absorb sound waves but also of the old issue of turbulence. In addition to using the Air Force’s Boomfile and other available material, Langley’s Acoustics Division had Eagle Aeronautics, in a project led by Domenic Maglieri, restore and digitize data from the irreplaceable XB-70 records.[489]

The division also took advantage of the NATO Joint Acoustic Propagation Experiment (JAPE) at the White Sands Missile Range in August 1991 to do some new testing. The researchers arranged for F-15, F-111, and T-38 aircraft and one of Dryden’s SR-71s to make 59 supersonic passes over an extensive array of BEAR devices, other recording systems, and meteorological sensors, both early in the morning (when the air was still) and during the afternoon (when there was usually more turbulence). Although meteorological data were incomplete, results later showed the effects of molecular relaxation and turbulence on both the rise time and overpressure of bow shocks.[490] Additional atmospheric information came from experiments on waveform freezing (persistence), measuring diffraction and distortion of sound waves, and trying to discover the actual relationship among molecular relaxation, turbulence, humidity, and other weather conditions.[491]

Panels: unmodified SR-71 configuration, M = 1.8, α = 3.5 deg; SR-71 configuration with McDonnell-Douglas-modified fuselage, M = 1.8, α = 3.9 deg.

Figure 8. Proposed SR-71 low-boom modification. NASA.

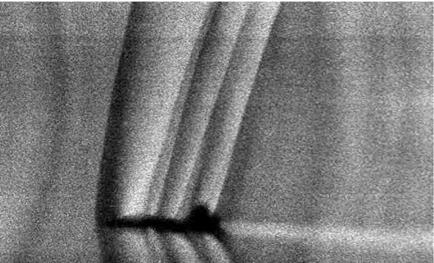

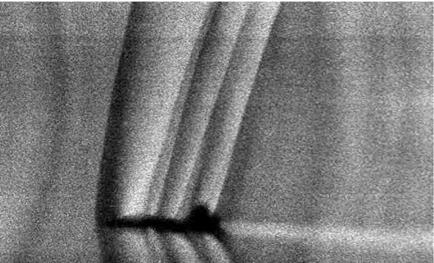

Leonard Weinstein of the Langley Center even developed a way to capture images of shock waves in the real atmosphere. He did this using a ground-based schlieren system (a specially masked and filtered tracking camera with the Sun providing backlighting). As shown in the accompanying photo, this was first demonstrated in December 1993 with a T-38 flying just over Mach 1 at Wallops Island.[492] Yet all of the research into the theoretical, aerodynamic, and atmospheric aspects of sonic boom, no matter how successful, could not by itself overcome the Achilles’ heel of previous programs: the subjective response of human beings.

As a result, the Langley Center had begun a systematic effort, led by Kevin Shepherd of the Acoustics Division, to measure human responses to different strengths and shapes of sonic booms and, it was hoped, to determine a tolerable level for community acceptance. As an early step, the division built an airtight, foam-lined sonic boom simulator booth (known as the “boom box”) based on one at the University of Toronto. Using the latest in computer-generated digital amplification and loudspeaker technology, it was capable of generating shaped waveforms up to 4 psf (140 decibels). Based on responses from subjects, researchers selected the perceived-level decibel (PLdB) as the preferred metric. For responses outside a laboratory setting, Langley planned several additional acceptance studies.[493]
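As a rough consistency check on the boom box figures, a peak overpressure can be converted to a peak sound pressure level with the standard decibel formula. This worked example is only a sketch: it assumes the 140-decibel figure is an unweighted peak level referenced to 20 micropascals, and it uses the conversion 1 psf ≈ 47.88 Pa.

$$
L_{\mathrm{peak}} = 20\log_{10}\frac{p}{p_{\mathrm{ref}}}
= 20\log_{10}\frac{4 \times 47.88\ \mathrm{Pa}}{20\times10^{-6}\ \mathrm{Pa}}
\approx 20\log_{10}\left(9.58\times10^{6}\right) \approx 139.6\ \mathrm{dB}
$$

The result rounds to the 140 decibels cited. The PLdB metric the researchers adopted is a different, frequency-weighted measure of perceived loudness, so it should not be expected to equal this peak level.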

By 1994, early results had become available from two of these projects. The Langley Center and Wyle Laboratories had developed mobile boom simulator equipment called the In-Home Noise Generation/Response System (IHONORS). It consisted of computerized sound systems installed in 33 houses for 8 weeks at a time, networked by modem to a monitor at Langley. From February to December 1993, these households were subjected to almost 58,500 randomly timed sonic booms of various signatures for 14 hours a day. Although definitive analyses were not available until the following year, the initial results confirmed that annoyance increased whenever subjects were startled or trying to rest.[494]

Preliminary results were also in from the first phase of the Western USA Sonic Boom Survey of civilians who had been exposed to such sounds for many years. This part of the survey took place in remote desert towns around the Air Force’s vast Nellis combat training range complex in Nevada. Unlike previous community surveys, it correlated citizen responses with accurately measured sonic boom signatures (using BEAR devices) in places where booms were a regular occurrence, yet where the subjects did not live on or near a military installation (i.e., where the economic benefits of the base for the local economy might influence their opinions). Although findings were not yet definitive, these 1,042 interviews proved more decisive than any of the many other research projects in determining the future direction of the HSCT effort. Measured by a metric called day-night average noise level, the booms proved much more annoying to the respondents than other types of aircraft noise had been in previous studies, even at the levels projected for low-boom designs. Their negative responses, in effect, dashed hopes that the HSR program might lead to an overland supersonic transport.[495]

Leonard Weinstein’s innovative schlieren photograph showing shock waves emanating from a T-38 flying Mach 1.1 at 13,000 feet, December 1993. NASA.

Well before the paper on this survey was presented at the 1994 Sonic Boom Workshop, its early findings had prompted NASA Headquarters to reorient High-Speed Research toward an HSCT design that would only fly supersonic over water. Just as with the AST program 20 years earlier, this became the goal of Phase II of the HSR program (which began using FY 1994 funding left over from the canceled NASP).[496]

At the end of the 1994 workshop, Christine Darden discussed lessons learned so far and future directions. While the design efforts had shown outstanding progress, dispersal of the work among two NASA Centers and two major aircraft manufacturers had resulted in communication problems as well as a certain amount of unhelpful competition. The milestone-driven HSR effort required concurrent progress in many different areas, which is inherently difficult to coordinate and manage. And even if low-boom airplane designs had been perfected to meet acoustic criteria, they would have been heavier and would have suffered from less acceptable low-speed performance than unconstrained designs. Under the new HSR strategy, any continued minimization research would now aim at lowering the sonic boom of the “baseline” overwater design, while propagation studies would concentrate on predicting boom carpets, focused booms, secondary booms, and ground disturbances. In view of the HSCT’s overwater mission, new environmental studies would devote more attention to the penetration of shock waves into water and the effect of sonic booms on marine mammals and birds.[497]

Although the preliminary results of the first phase of the Western USA Survey had already had a decisive impact, Wyle Laboratories completed Phase II with a similar polling of civilians in Mojave Desert communities exposed regularly to sonic booms, mostly from Edwards AFB. Surprisingly, this phase of the survey found the people there much more amenable to sonic booms than the desert dwellers in Nevada were, but they were still more annoyed by booms than by other aircraft noise of comparable perceived loudness.[498]

With the decision to end work on a low-boom HSCT, the proposed modifications of the Firebee RPVs and SR-71 had of course been canceled (postponing for another decade any full-scale demonstrations of boom shaping). Nevertheless, some testing continued that would prove of future value. From February through April 1995, the Dryden Center conducted more SR-71 and F-16XL sonic boom flight tests. Led by Ed Haering, this experiment included an instrumented YO-3A light aircraft from the Ames Center, an extensive array of various ground sensors, a network of new differential Global Positioning System (GPS) receivers accurate to within 12 inches, and installation of a sophisticated new nose boom with four pressure sensors on the F-16XL. On eight long missions, one of Dryden’s SR-71s flew at speeds between Mach 1.25 and Mach 1.6 at 31,000-48,000 feet, while the F-16XL (kept aloft by in-flight refuelings) made numerous near- and mid-field measurements at distances from 80 to 8,000 feet. Some of these showed that the canopy shock waves were still distinct from the bow shock after 4,000-6,000 feet. Comparisons of far-field measurements obtained by the YO-3A flying at 10,000 feet above ground level and the recording devices on the surface revealed effects of atmospheric turbulence. Analysis of the data validated two existing sonic boom propagation codes and clearly showed how variations in the SR-71’s gross weight, speed, and altitude caused differences in shock wave patterns and their coalescence into N-shaped waveforms.[499]

This successful experiment marked the end of dedicated sonic boom flight-testing during the HSR program.

By late 1998, a combination of economic, technological, political, and budgetary problems (including NASA’s cost overruns for the International Space Station) convinced Boeing to cut its support and the Administration of President Bill Clinton to terminate the HSR program at the end of FY 1999. Ironically, NASA’s success in helping the aircraft industry develop quieter subsonic aircraft, which had the effect of moving the goalpost for acceptable airport noise, was one of the factors convincing Boeing to drop plans for the HSCT. Nevertheless, the HSR program was responsible for significant advances in technologies, techniques, and scientific knowledge, including a better understanding of the sonic boom and ways to diminish it.[500]
