Another significant effort to emerge from NASA’s ACEE program was the Advanced Turboprop (ATP) project, which lasted from 1976 to 1987.

Like E Cubed, the ATP was largely focused on improving fuel efficiency. The project sought to move away from the turbofan and improve on the open-rotor (propeller) technology of the 1950s. Open rotors have high bypass ratios and therefore hold great potential to dramatically increase fuel efficiency. NASA believed an advanced turboprop could lead to a reduction in fuel consumption of 20 to 30 percent over existing turbofan engines with comparable performance and cabin comfort (acceptable noise and vibration) at Mach 0.8 and an altitude of 30,000 feet.[1432]

There were two major obstacles to returning to an open-rotor system, however. The most fundamental problem was that propellers typically lose efficiency as they turn more quickly at higher flight speeds. The challenge of the ATP was to find a way to ensure that propellers could operate efficiently at the same flight speeds as turbojet engines. This would require a design that allowed the fan to turn slowly to maximize efficiency while the turbine turned fast enough to achieve adequate thrust. The other major obstacle was that turboprop engines tend to be very noisy, making them less than ideal for commercial airline use. NASA’s ATP sought to overcome the noise problem and increase fuel efficiency by adopting the concept of swept propeller blades.
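The speed problem described above can be illustrated with a rough calculation. The sketch below is not drawn from ATP data: the tip speed, the sweep angle, and the function names are illustrative assumptions, and the sweep correction is the textbook simple-sweep approximation rather than the method NASA used. It combines flight speed and rotational speed at right angles to estimate the Mach number at the blade tip, then estimates how sweeping the blade reduces the flow component normal to the leading edge.

```python
import math


def helical_tip_mach(flight_mach: float, tip_speed: float, speed_of_sound: float) -> float:
    """Mach number at the blade tip.

    The tip moves forward with the aircraft and around the hub at the same
    time; the two velocities are perpendicular, so they add as a hypotenuse.
    """
    rotational_mach = tip_speed / speed_of_sound
    return math.hypot(flight_mach, rotational_mach)


def normal_mach(tip_mach: float, sweep_deg: float) -> float:
    """Simple-sweep approximation: count only the flow component normal
    to the blade leading edge."""
    return tip_mach * math.cos(math.radians(sweep_deg))


# Illustrative values only (assumptions, not ATP measurements).
SPEED_OF_SOUND = 303.0  # m/s, roughly the standard-atmosphere value at 30,000 ft
TIP_SPEED = 240.0       # m/s, hypothetical rotational tip speed
SWEEP_DEG = 40.0        # hypothetical blade sweep angle

for flight_mach in (0.5, 0.6, 0.7, 0.8):
    tip = helical_tip_mach(flight_mach, TIP_SPEED, SPEED_OF_SOUND)
    print(f"flight Mach {flight_mach:.1f}: tip Mach {tip:.2f}, "
          f"normal component with sweep {normal_mach(tip, SWEEP_DEG):.2f}")
```

With these assumed numbers, the blade tip is supersonic at Mach 0.8 flight even though the aircraft is not, while the swept blade sees a subsonic normal component.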

The ATP generated considerable interest from the aeronautics research community, growing from a NASA contract with the Nation’s last major propeller manufacturer, Hamilton Standard, to a project that involved 40 industrial contracts, 15 university grants, and work at 4 NASA research Centers—Lewis, Langley, Dryden, and Ames. NASA engineers, along with a large industry team, won the Collier Trophy for developing a new fuel-efficient turboprop in 1987.[1433]

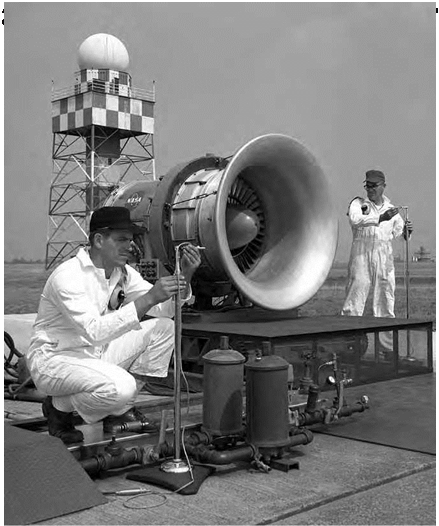

NASA initially contracted with Allison, P&W, and Hamilton Standard to develop a propeller for the ATP that rotated in one direction. This was called a "single rotation tractor system” and included a gearbox, which enabled the propeller and turbines to operate at different speeds. The NASA/industry team first conducted preliminary ground-testing. It combined the Hamilton Standard SR-7A prop fan with the Allison turbo shaft engine and a gearbox and performed 50 hours of success-

AFT NACELLE

AFT NACELLE

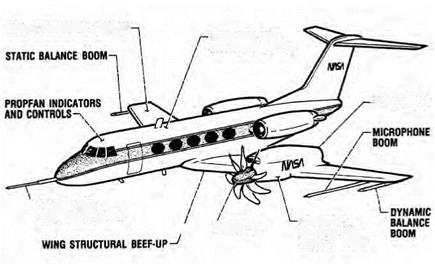

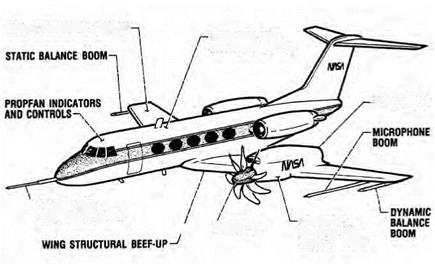

Schematic drawing of the NASA propfan testbed, showing modifications and features proposed for the basic Grumman Gulfstream airframe. NASA.

ful stationary tests in May and June 1986.[1434] Next, the engine parts were shipped to Savannah, GA, and reassembled on a modified Gulfstream II with a single-blade turboprop on its left wing. Flight-testing took place in 1987, validating NASA’s predictions of a 20 to 30 percent fuel savings.[1435]

Meanwhile, P&W’s main rival, GE, was quietly developing its own approach to the ATP, known as the “unducted fan.” GE released the design to NASA in 1983, and NASA Headquarters instructed NASA Lewis to cooperate with GE on development and testing.[1436] Citing concerns about weight and durability, GE decided not to use a gearbox to allow the propellers and the turbines to turn at different speeds.[1437] Instead, the company developed a counter-rotating pusher system. It mounted two counter-rotating propellers on the rear of the plane, which pushed it into flight, and put counter-rotating blade rows in the turbine. The counter-rotating turbine blades turned relatively slowly to accommodate the fan, but because they turned in opposite directions, their relative speed was high, and the turbine was therefore highly efficient.[1438]
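The point about relative speed can be checked with simple arithmetic. The following is a minimal sketch with made-up numbers: the shaft speed, the blade radius, and the function name are assumptions for illustration, not figures from the unducted fan program. It compares a blade row turning past a stationary row with two rows turning at the same speed in opposite directions.

```python
import math


def relative_blade_speed(rpm_a: float, rpm_b: float, radius_m: float) -> float:
    """Speed of one blade row relative to the other at a given radius.

    RPM values are signed: opposite signs mean the rows counter-rotate.
    """
    omega_a = rpm_a * 2.0 * math.pi / 60.0  # rad/s
    omega_b = rpm_b * 2.0 * math.pi / 60.0  # rad/s
    return abs(omega_a - omega_b) * radius_m


# Hypothetical values chosen only to show the effect.
RADIUS_M = 0.5    # mean blade radius in meters
ROW_RPM = 1300.0  # shaft speed of each row

against_stationary = relative_blade_speed(ROW_RPM, 0.0, RADIUS_M)
counter_rotating = relative_blade_speed(ROW_RPM, -ROW_RPM, RADIUS_M)

print(f"rotor against a stationary row: {against_stationary:.1f} m/s")
print(f"two counter-rotating rows:      {counter_rotating:.1f} m/s")
```

At equal and opposite shaft speeds the relative speed between rows doubles, so each row can turn slowly enough to suit the fan while the turbine still sees a high relative blade speed.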

GE performed ground tests of the unducted fan in 1985 that showed fuel savings of 20 percent.[1439] Then, in 1986, a year before the NASA/industry team flight test, GE mounted the unducted fan (the propellers and the fan mounted behind an F404 engine) on a Boeing 727 airplane and conducted a successful flight test.[1440]

Mark Bowles and Virginia Dawson have noted in their analysis of the ATP that the competition between the two ATP concepts and industry’s willingness to invest in the open-rotor technology fostered public acceptance of the turboprop concept.[1441] But despite the growing momentum and the technical success of the ATP project, the open rotor was never adopted for widespread use on commercial aircraft. P&W’s Crow said that the main reason was that it was just too noisy.[1442] “This was clearly more fuel-efficient technology, but it was not customer friendly at all,” said Crow. Another problem was that the rising fuel prices that had spurred NASA to work on energy-efficient technology were now going back down. There was no longer a favorable ratio of cost to develop turboprop technology versus savings in fuel burn.[1443] “In one sense of the word it was a failure,” said Crow. “Neither GE nor Pratt nor Boeing nor anyone else wanted us to commercialize those things.”

Nevertheless, the ATP yielded important technological breakthroughs that fed into later engine technology developments at both GE and P&W. Crow said the ATP set the stage for the development of P&W’s latest engine, the geared turbofan.[1444] That engine is not an open-rotor system, but it does use a gearbox to allow the fan to turn more slowly than the turbines. The fan moves a large amount of air past the engine core without changing the velocity of the air very much. This enables a high bypass ratio, thereby increasing fuel efficiency; the bypass ratio is 8 to 1 in the 14,000- to 17,000-pound thrust class and 12 to 1 in the 17,000- to 23,000-pound thrust class.[1445]
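Why moving a large amount of air with only a small change in velocity saves fuel can be seen from the ideal (Froude) propulsive efficiency, a standard textbook relation that is not taken from the ATP or geared turbofan documentation. The sketch below uses hypothetical flight and exhaust speeds, and the function name is likewise an assumption.

```python
def propulsive_efficiency(flight_speed: float, exhaust_speed: float) -> float:
    """Ideal (Froude) propulsive efficiency: 2 / (1 + V_exhaust / V_flight).

    Efficiency approaches 1 as the exhaust speed approaches the flight
    speed, that is, as the engine changes the air's velocity less.
    """
    if flight_speed <= 0 or exhaust_speed < flight_speed:
        raise ValueError("expects 0 < flight_speed <= exhaust_speed")
    return 2.0 / (1.0 + exhaust_speed / flight_speed)


# Hypothetical speeds in m/s, chosen only to show the trend.
FLIGHT_SPEED = 240.0

for exhaust_speed in (600.0, 400.0, 300.0):
    eta = propulsive_efficiency(FLIGHT_SPEED, exhaust_speed)
    print(f"exhaust {exhaust_speed:.0f} m/s -> ideal propulsive efficiency {eta:.2f}")
```

A slower exhaust produces less thrust per unit of air, so the engine must move more air to compensate, which is what a larger, slower-turning fan and a higher bypass ratio provide.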

GE renewed its ATP research to compete with P&W’s geared turbofan, announcing in 2008 that it would consider both open-rotor and encased engine concepts for its new engine core development program, known as E Core. The company announced an agreement with NASA in the fall of 2008 to conduct a joint study on the feasibility of an open-rotor engine design. In 2009, GE plans to revisit its original open-rotor fan designs to serve as a baseline. GE and NASA will then conduct wind tunnel tests using the same rig that was used for the ATP.[1446] Snecma, GE’s 50/50 partner in the engine manufacturing partnership CFM International, will participate in fan blade design testing. GE says the new E Core design, whether or not it adopts an open rotor, aims to increase fuel efficiency 16 percent above the baseline (a conventional turbofan configuration) in narrow-body and regional aircraft.[1447]

Another major breakthrough resulting from the ATP was the development of computational fluid dynamics (CFD), which allowed engineers to predict the efficiency of new propulsion systems more accurately. “What computational fluid dynamics allowed us to do was to design a new airfoil based on what the flow field needed rather than prescribing a fixed airfoil before you even get started with a design process,” said Dennis Huff, NASA’s Deputy Chief of the Aeropropulsion Division. “It was the difference between two- and three-dimensional analysis; you could take into account how the fan interacted with nacelle and certain aerodynamic losses that would occur. You could model numerically, whereas the correlations before were more empirically based.”[1448] Initially, companies were reluctant to embrace NASA’s new approach because they distrusted computational codes and wanted to rely on existing design methods, according to Huff. However, NASA continued to verify and validate the design methods until the companies began to accept them as standard practice. “I would say by the time we came out of the Advanced Turboprop project, we had a lot of these aerodynamic CFD tools in place that were proven on the turboprop, and we saw the companies developing codes for the turbo engine,” Huff said.[1449]
