The Space Shuttle represented a rocket-lofted approach to hypersonic space access. But rockets were not the only means of propulsion contemplated for hypersonic vehicles. One of the most important aspects of hypersonic evolution since the 1950s has been the development of the supersonic combustion ramjet, popularly known as a scramjet. The ramjet in its simplest form is a tube and nozzle, into which air is introduced, mixed with fuel, and ignited, the combustion products passing through a classic nozzle and propelling the engine forward. Unlike a conventional gas turbine, the ramjet does not have a compressor wheel or staged compressor blades, cannot typically function at speeds less than Mach 0.5, and does not come into its own until the inlet velocity is near or greater than the speed of sound. Then it functions remarkably well as an accelerator, to speeds well in excess of Mach 3.

Conventional subsonic-combustion ramjets, as employed by the Mach 4.31 X-7, held promise as hypersonic accelerators for a time, but they could not approach higher hypersonic speeds because their subsonic internal airflow heated excessively at high Mach. If a ramjet could be designed that had a supersonic internal flow, it would run much cooler and at the same time be able to accelerate a vehicle to double-digit hypersonic Mach numbers, perhaps reaching the magic Mach 25, signifying orbital velocity. Such an engine would be a scramjet. Such engines have only recently made their first flights, but they nevertheless are important in hypersonics and point the way toward future practical air-breathing hypersonics.
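The heating penalty of slowing hypersonic air to subsonic speed can be illustrated with the standard stagnation-temperature relation for a perfect gas. What follows is a simplified sketch, not a calculation drawn from the studies described here: it assumes a constant ratio of specific heats $\gamma = 1.4$ and an illustrative ambient temperature of 220 K, and it ignores the real-gas effects that become significant at such temperatures.

$$
\frac{T_0}{T} = 1 + \frac{\gamma - 1}{2} M^2
$$

Under those assumptions, at $M = 6$ the ratio is $1 + 0.2 \times 36 = 8.2$, so air brought nearly to rest inside the engine would approach $8.2 \times 220\ \text{K} \approx 1{,}800\ \text{K}$ before any fuel is burned. A flow that remains supersonic inside the duct recovers only part of that temperature rise, which is the sense in which a scramjet runs cooler.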

An important concern explored at the NACA’s Lewis Flight Propulsion Laboratory during the 1950s was whether it was possible to achieve supersonic combustion without producing attendant shock waves that slow internal flow and heat it. Investigators Irving Pinkel and John Serafini proposed experiments in supersonic combustion under a supersonic wing, postulating that this might afford a means of furnishing additional lift. Lewis researchers also studied supersonic combustion testing in wind tunnels. Supersonic tunnels produced very low air pressure, but it was known that aluminum borohydride could promote the ignition of pentane fuel even at pressures as low as 0.03 atmospheres. In 1955, Robert Dorsch and Edward Fletcher successfully demonstrated such tunnel combustion, and subsequent research indicated that combustion more than doubled lift at Mach 3.

Though encouraging, this work involved flow near a wing, not in a ramjet-like duct. Even so, NACA aerodynamicists Richard Weber and John MacKay posited that shock-free flow in a supersonic duct could be attained, publishing the first open-literature discussion of theoretical scramjet performance in 1958, which concluded: “the trends developed herein indicate that the [scramjet] will provide superior performance at higher hypersonic flight speeds.”[644] The Weber-MacKay study came a year after Marquardt researchers had demonstrated supersonic combustion of a hydrogen and air mix. Other investigators working contemporaneously were the manager William Avery and the experimentalist Frederick Billig, who independently achieved supersonic combustion at the Johns Hopkins University Applied Physics Laboratory (APL), and J. Arthur Nicholls at the University of Michigan.[645]

The most influential of all scramjet advocates was the colorful Italian aerodynamicist, partisan leader, and wartime émigré, Antonio Ferri. Before the war, as a young military engineer, he had directed supersonic wind tunnel studies at Guidonia, Benito Mussolini’s showcase aeronautical research establishment outside Rome. In 1943, after the collapse of the Fascist regime and the Nazi assumption of power, he left Guidonia, leading a notably successful band of anti-Nazi, anti-Fascist partisans. Brought to America by Moe Berg, a baseball player turned intelligence agent, Ferri joined NACA Langley, becoming Director of its Gas Dynamics Branch. Turning to the academic world, he secured a professorship at Brooklyn Polytechnic Institute. He formed a close association with Alexander Kartveli, chief designer at Republic Aviation, and designer of the P-47, F-84, XF-103, and F-105. Indeed, Kartveli’s XF-103 (which, alas, never was completed or flown) employed a Ferri engine concept. In 1956, Ferri established General Applied Science Laboratories (GASL), with financial backing from the Rockefellers.[646]

Ferri emphasized that scramjets could offer sustained performance far higher than rockets could, and his strong reputation ensured that people listened to him. At a time when shock-free flow in a duct still loomed as a major problem, Ferri did not flinch from it but instead took it as a point of departure. He declared in September 1958 that he had achieved it, thus taking a position midway between the demonstrations at Marquardt and APL. Because he was well known, his claim turned the scramjet from a wish into an invention that might be made practical.

He presented his thoughts publicly at a technical colloquium in Milan in 1960 ("Many of the older men present,” John Becker wrote subsequently, "were politely skeptical”) and went on to give a far more detailed discussion in May 1964, at the Royal Aeronautical Society in London. This was the first extensive public presentation on hypersonic propulsion, and the attendees responded with enthusiasm. One declared that whereas investigators "had been thinking of how high in flight speed they could stretch conventional subsonic burning engines, it was now clear that they should be thinking of how far down they could stretch supersonic burning engines,” and another added that Ferri now was "assailing the field which until recently was regarded as the undisputed regime of the rocket.”[647]

Scramjet advocates were offered their first opportunity to actually build such propulsion systems with the Air Force’s abortive Aerospaceplane program of the late 1950s to mid-1960s. A contemporary of Dyna-Soar but far less practical, Aerospaceplane was a bold yet premature effort to produce a logistical transatmospheric vehicle and possible orbital strike system. Conceived in 1957 and initially known as the Recoverable Orbital Launch System (ROLS), Aerospaceplane attracted surprising interest from industry. Seventeen aerospace companies submitted contract proposals and related studies; Convair, Lockheed, and Republic submitted detailed designs. The Republic concept had the greatest degree of engine-airframe integration, a legacy of Ferri’s partnership with Kartveli.

By the early 1960s, Aerospaceplane not surprisingly was beset with numerous developmental problems, along with a continued debate over whether it should be a single- or two-stage system, and what proportion of its propulsion should be turbine, scramjet, and pure rocket. Though it briefly outlasted Dyna-Soar, it met the same harsh fate. In the fall of 1963, the Air Force Scientific Advisory Board damned the program in no uncertain terms, noting: “Aerospaceplane has had such an erratic history, has involved so many clearly infeasible factors, and has been subjected to so much ridicule that from now on this name should be dropped. It is recommended that the Air Force increase [its] vigilance [so] that no new program achieves such a difficult position.”[648] The next year, Congress slashed its remaining funding, and Aerospaceplane was at last consigned to a merciful oblivion.

In the wake of Aerospaceplane’s cancellation, both the Air Force and NASA maintained an interest in advancing scramjet propulsion for transatmospheric aircraft. The Navy’s scramjet interest, though great, was primarily in smaller engines for missile applications. But Air Force and NASA partisans formed an Ad-Hoc Working Group on Hypersonic Scramjet Aircraft Technology.

Both agencies pursued development programs that sought to build and test small scramjet modules. The Air Force Aero-Propulsion Laboratory sponsored development of an Incremental Scramjet flight-test program at Marquardt. This proposed test vehicle underwent extensive analysis and study, though without actually flying as a functioning scramjet testbed. The first manifestation of Langley work was the so-called Hypersonic Research Engine (HRE), an axisymmetric scramjet of circular cross section with a simple Oswatitsch spike inlet, designed by Anthony duPont. Garrett AiResearch built this engine, planned for a derivative of the X-15. The HRE never actually flew as a “hot” functioning engine, though the X-15A-2 flew repeatedly with a boilerplate test article mounted on the stub ventral fin (during its record flight to Mach 6.70 on October 3, 1967, searing hypersonic shock interactions melted it off the plane). Subsequent tunnel tests revealed that the HRE was, unfortunately, the wrong design. A podded and axisymmetric design, like an airliner’s fanjet, it could only capture a small fraction of the air that flowed past a vehicle, resulting in greatly reduced thrust. Integrating the scramjet with the airframe, so that it used the forebody to assist inlet performance and the afterbody as a nozzle enhancement, would more than double its thrust.[649]

Investigation of such concepts began at Langley in 1968, with pioneering studies by researchers John Henry, Shimer Pinckney, and others. Their work expanded upon a largely Ferri-inspired base, defining what emerged as common basic elements of subsequent Langley scramjet research. It included a strong emphasis upon airframe integration, use of fixed geometry, a swept inlet that could readily spill excess airflow, and the use of struts for fuel injection. Early observations, published in 1970, showed that struts were practical for a large supersonic combustor at Mach 8. The program went on to construct test scramjets and conducted almost 1,000 wind tunnel test runs of engines at Mach 4 and Mach 7. Inlets at Mach 4 proved sensitive to “unstarts,” a condition where the shock wave is displaced, disrupting airflow and essentially starving the engine of its oxygen. Flight at Mach 7 raised the question of whether fuel could mix and burn in the short available combustor length.[650]

Langley test engines, like engines at GASL, Marquardt, and other scramjet research organizations, encountered numerous difficulties. Large disparities existed between predicted performance and that actually achieved in the laboratory. Indeed, the scramjet, advanced so boldly in the mid-1950s, would not be ready for serious pursuit as a propulsive element until 1986. Then, on the eve of the National Aero-Space Plane development program, Langley researchers Burton Northam and Griffin Anderson announced that NASA had succeeded at last in developing a practical scramjet. They proclaimed triumphantly: “At both Mach 4 and Mach 7 flight conditions, there is ample thrust both for acceleration and cruise.”[651]

Out of such optimism sprang the National Aero-Space Plane program, which became a central feature of the presidency of Ronald Reagan. It was linked to other Reagan-era defense initiatives, particularly his Strategic Defense Initiative, a ballistic missile shield intended to reduce the threat of nuclear war, which critics caustically belittled as "Star Wars.” SDI called for the large-scale deployment of defensive arms in space, and it became clear that the Space Shuttle would not be their carrier. Experience since the Shuttle’s first launch in April 1981 had shown that it was costly and took a long time to prepare for relaunch. The Air Force was unwilling to place the national eggs in such a basket. In February 1984, Defense Secretary Caspar Weinberger approved a document stating that total reliance upon the Shuttle represented an unacceptable risk.

An Air Force initiative was under way at the time that looked toward an alternative. Gen. Lawrence A. Skantze, Chief of Air Force Systems Command (AFSC), had sponsored studies of Trans Atmospheric Vehicles (TAVs) by Air Force Aeronautical Systems Division (ASD). These reflected concepts advanced by ASD’s chief planner, Stanley A. Tremaine, as well as interest from Air Force Space Division (SD), the Defense Advanced Research Projects Agency (DARPA), and Boeing and other companies. TAVs were single-stage-to-orbit (SSTO) craft intended to use the Space Shuttle Main Engine (SSME) and possibly to be air-launched from derivatives of the Boeing 747 or Lockheed C-5. In August 1982, ASD had hosted a 3-day conference on TAVs, attended by representatives from AFSC’s Space Division and DARPA. In December 1984, ASD went further. It established a TAV Program Office to “streamline activities related to long-term, preconceptual design studies.”[652]

DARPA’s participation was not surprising, for Robert Cooper, head of this research agency, had elected to put new money into ramjet research. His decision opened a timely opportunity for Anthony duPont, who had designed the HRE for NASA. DuPont held a strong interest in “combined-cycle engines” that might function as a turbine air breather, transition to ram/scram, and then perhaps use some sophisticated air collection and liquefaction process to enable them to boost as rockets into orbit. There are several types of these engines, and duPont had patented such a design as early as 1972. A decade later, he still believed in it, and he learned that Anthony Tether was the DARPA representative who had been attending TAV meetings.

Tether sent him to Cooper, who introduced him to DARPA aerody – namicist Robert Williams, who brought in Arthur Thomas, who had been studying scramjet-powered spaceplanes as early as Sputnik. Out of this climate of growing interest came a $5.5 million DARPA study program, Copper Canyon. Its results were so encouraging that DARPA took the notion of an air-breathing single-stage-to-orbit vehicle to Presidential science adviser George Keyworth and other senior officials, including Air Force Systems Command’s Gen. Skantze. As Thomas recalled: "The people were amazed at the component efficiencies that had been

|

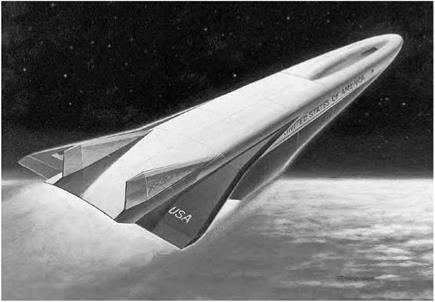

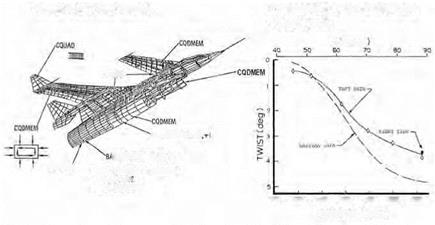

The National Aero-Space Plane concept in final form, showing its modified lifting body design approach. NASA.

|

assumed in the study. They got me aside and asked if I really believed it. Were these things achievable? Tony [duPont] was optimistic everywhere: on mass fraction, on drag of the vehicle, on inlet performance, on nozzle performance, on combustor performance. The whole thing, across the board. But what salved our consciences was that even if these things weren’t all achieved, we still could have something worthwhile. Whatever we got would still be exciting.”[653]

Gen. Skantze realized that SDI needed something better than the Shuttle, and Copper Canyon could possibly be it. Briefings were encouraging, but he needed to see technical proof. That evidence came when he visited GASL and witnessed a subscale duPont engine in operation. Afterward, as DARPA’s Bob Williams recalled: “the Air Force system began to move with the speed of a spaceplane.”[654] Secretary of Defense Caspar Weinberger received a briefing and put his support behind the effort. In January 1986, the Air Force established a joint-service Air Force-Navy-NASA National Aero-Space Plane Joint Program Office at Aeronautical Systems Division, transferring into it all the personnel previously assigned to the TAV Program Office. (The program soon received an X-series designation, as the X-30.) Then came the clincher. President Ronald Reagan announced his support for what he now called the “Orient Express” in his State of the Union Address to the Nation on February 4, 1986. President Reagan’s support was not the product of some casual whim: the previous spring, he had ordered a joint Department of Defense-NASA space launch study of future space needs and, additionally, established a national space commission. Both strongly endorsed “aerospace plane development,” the space commission recommending it be given “the highest national priority.”[655]

Though advocates of NASP attempted to sharply differentiate their effort from that of the discredited Aerospaceplane of the 1960s, the NASP effort shared some distressing commonality with its predecessor, particularly an exuberant and increasingly unwarranted optimism that afforded ample opportunity for the program to run into difficulties. In 1984, with optimism at its height, DARPA’s Cooper declared that the X-30 could be ready in 3 years. DuPont, closer to the technology, estimated that the Government could build a 50,000-pound fighter-size vehicle in 5 years for $5 billion. Such predictions proved wildly off the mark. As early as 1986, the "Government baseline” estimate of the aircraft rose to 80,000 pounds. Six years later, in 1992, its gross weight had risen eightfold, to 400,000 pounds. It also had a "velocity deficit” of 3,000 feet per second, meaning that it could not possibly attain orbit. By the next year, NASP "lay on life support.”[656]

It had evolved from a small, seductively streamlined speedster to a fatter and far less appealing shape more akin to a wooden shoe, entering a death spiral along the way. It lacked performance, so it needed greater power and fuel, which made it bigger, which meant it lacked performance so that it needed greater power and fuel, which made it bigger. . . and bigger. . . and bigger. X-30 could never attain the “design closure” permitting it to reach orbit. NASP’s support continuously softened, particularly as technical challenges rose, performance estimates fell, and other national issues grew in prominence. It finally withered in the mid-1990s, leaving unresolved what, if anything, scramjets might achieve.[657]

Comparison Ground Test Data

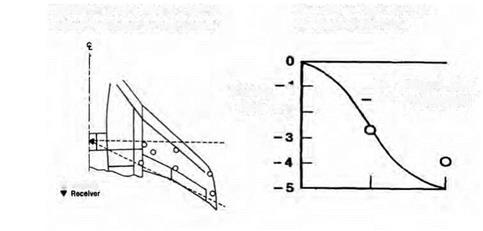

HiMAT Wing with Electro-Optical Deflection Measurement System
