Early in the 1960s, researchers at Lockheed introduced an entirely different approach to thermal protection, which in time became the standard. Ablatives were unrivalled for once-only use, but during that decade the hot structure continued to stand out as the preferred approach for reusable craft such as Dyna-Soar. As noted, it used an insulated primary or load-bearing structure with a skin of outer panels. These emitted heat by radiation, maintaining a temperature that was high but steady.


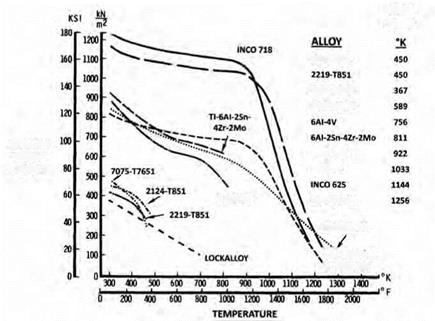

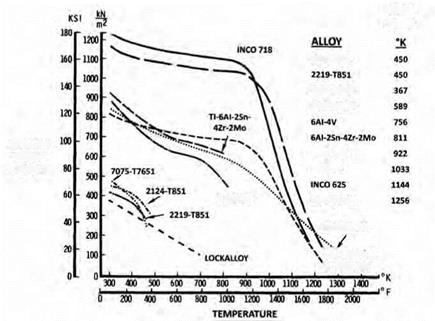

Strength versus temperature for various superalloys, including Rene 41, the primary structural material used on the X-20 Dyna-Soar. NASA.

Metal fittings supported these panels, and while the insulation could be high in quality, these fittings unavoidably leaked heat to the underlying structure. This made it difficult to build that structure of aluminum, or even of titanium, which had greater heat resistance. On Dyna-Soar, only Rene 41 would do.[1062]

Ablatives avoided such heat leaks while being sufficiently capable as insulators to permit the use of aluminum. In principle, a third approach combined the best features of hot structures and ablatives. It called for the use of temperature-resistant tiles, made perhaps of ceramic, which could cover the vehicle skin. Like hot-structure panels, they would radiate heat, while remaining cool enough to avoid thermal damage. In addition, they were to be reusable. They also were to offer the excellent insulating properties of good ablators, preventing heat from reaching the underlying structure—which once more might be of aluminum. This concept became known as reusable surface insulation (RSI). In time, it gave rise to the thermal protection of the Shuttle.

RSI grew out of ongoing work with ceramics for thermal protection. Ceramics had excellent temperature resistance, light weight, and good insulating properties. But they were brittle and cracked rather than stretched in response to the flexing under load of an underlying metal primary structure. Ceramics also were sensitive to thermal shock, as when heated glass breaks when plunged into cold water. In flight, such thermal shock resulted from rapid temperature changes during reentry.[1063]

Monolithic blocks of the ceramic zirconia had been specified for the nose cap of Dyna-Soar, but a different point of departure used mats of solid fiber in lieu of the solid blocks. The background to the Shuttle’s tiles lay in work with such mats that took place early in the 1960s at Lockheed Missiles and Space Company. Key people included R. M. Beasley, Ronald Banas, Douglas Izu, and Wilson Schramm. A Lockheed patent disclosure of December 1960 gave the first presentation of a reusable insulation made of ceramic fibers for use as a heat shield. Initial research dealt with casting fibrous layers from a slurry and bonding the fibers together.

Related work involved filament-wound structures that used long continuous strands. Silica fibers showed promise and led to an early success: a conical radome of 32-inch diameter built for Apollo in 1962. Designed for reentry, it had a filament-wound external shell and a lightweight layer of internal insulation cast from short fibers of silica. The two sections were densified with a colloid of silica particles and sintered into a composite. This gave a nonablative structure of silica composite reinforced with fiber. It never flew, as design requirements changed during the development of Apollo. Even so, it introduced silica fiber into the realm of reentry design.

Another early research effort, Lockheat, fabricated test versions of fibrous mats that had controlled porosity and microstructure. These were impregnated with organic fillers such as Plexiglas (polymethyl methacrylate). These composites resembled ablative materials, though the filler did not char. Instead it evaporated or volatilized, producing an outward flow of cool gas that protected the heat shield at high heat-transfer rates. The Lockheat studies investigated a range of fibers that included silica, alumina, and boria. Researchers constructed multilayer composite structures of filament-wound and short-fiber materials that resembled the Apollo radome. Impregnated densities were 40 to 60 lb/ft3, the higher density being close to that of water. Thicknesses of no more than an inch gave acceptably low back-face temperatures during simulations of reentry.

This work with silica-fiber ceramics was well underway during 1962. Three years later, a specific formulation of bonded silica fibers was ready for further development. Known as LI-1500, it was 89 percent porous and had a density of 15 lb/ft3, one-fourth that of water. Its external surface was impregnated with filler to a predetermined depth, again to provide additional protection during the most severe reentry heating. By the time this filler was depleted, the heat shield was to have entered a zone of more moderate heating, where the fibrous insulation alone could provide protection.

Initial versions of LI-1500, with impregnant, were intended for use with small space vehicles similar to Dyna-Soar that had high heating rates. Space Shuttle concepts were already attracting attention—the January 1964 issue of Astronautics & Aeronautics, the journal of the American Institute of Aeronautics and Astronautics, presents the thinking of the day—and in 1965 a Lockheed specialist, Maxwell Hunter, introduced an influential configuration called Star Clipper. His design employed LI-1500 for thermal protection.

Like other Shuttle concepts, Star Clipper was to fly repeatedly, but the need for an impregnant in LI-1500 compromised its reusability. Unlike earlier entry vehicle concepts, however, Star Clipper was large, offering exposed surfaces that were sufficiently blunt to benefit from H. Julian Allen’s blunt-body principle. These surfaces had lower temperatures and heating rates, which made it possible to dispense with the impregnant. An unfilled version of LI-1500, which was inherently reusable, now could serve.

Here was the first concept of a flight vehicle with reusable insulation, bonded to the skin, which could reradiate heat in the fashion of a hot structure. However, the matted silica by itself was white and had low thermal emissivity, making it a poor radiator of heat. This brought excessive surface temperatures that called for thick layers of the silica insulation, adding weight. To reduce the temperatures and the thickness, the silica needed a coating that could turn it black for high emissivity. It then would radiate well and remain cooler.
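The link between emissivity and surface temperature can be sketched with the radiative-equilibrium relation. Under this relation, a surface that reradiates all the heat it receives satisfies q = εσT⁴. The sketch below is illustrative only. The heat flux of 200 kW/m² and the emissivities of 0.3 ("white") and 0.85 ("black") are hypothetical values chosen for comparison, not figures from the Lockheed program. The model also ignores conduction into the insulation.

```python
"""Radiative-equilibrium surface temperature versus emissivity (illustrative sketch)."""

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def equilibrium_temperature_k(heat_flux_w_m2: float, emissivity: float) -> float:
    """Temperature at which a surface reradiates all incoming heat: q = eps * sigma * T^4.

    Simplifying assumption: no heat is conducted inward and none is reflected,
    so this is a pure radiative-equilibrium estimate.
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must be in (0, 1]")
    return (heat_flux_w_m2 / (emissivity * SIGMA)) ** 0.25


def kelvin_to_fahrenheit(t_k: float) -> float:
    """Convert a temperature from kelvins to degrees Fahrenheit."""
    return (t_k - 273.15) * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    q = 200_000.0  # hypothetical heat flux, W/m^2 (assumption, not from the source)
    for eps in (0.3, 0.85):  # hypothetical emissivities for uncoated vs coated silica
        t_k = equilibrium_temperature_k(q, eps)
        print(f"emissivity {eps:.2f}: {t_k:7.0f} K ({kelvin_to_fahrenheit(t_k):6.0f} °F)")
```

Because T varies as ε to the minus one-quarter power, raising the emissivity from 0.3 to 0.85 lowers the equilibrium temperature by a factor of about 1.3 at any fixed heat flux.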

The selected coating was a borosilicate glass, initially with an admixture of Cr₂O₃ and later with silicon carbide, which further raised the emissivity. The glass coating and the silica substrate were both largely silicon dioxide. This assured a match of their coefficients of thermal expansion, which kept the coating from cracking under the temperature changes of reentry. The glass coating could soften at very high temperatures to heal minor nicks or scratches. It also offered true reusability, surviving repeated cycles to 2,500 °F. A flight test came in 1968, when NASA Langley investigators mounted a panel of LI-1500 to a Pacemaker reentry test vehicle along with several candidate ablators. This vehicle carried instruments and was recovered. Its trajectory reproduced the peak heating rates and temperatures of a reentering Star Clipper. The LI-1500 test panel reached 2,300 °F and did not crack, melt, or shrink. This proof-of-concept test gave further support to the concept of high-emittance reradiative tiles of coated silica for thermal protection.[1064]
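Matched expansion coefficients matter because of a standard thin-coating estimate. This is a textbook relation, not one given in the source. For a thin coating on a much thicker substrate, the in-plane stress in the coating is approximately the expression below. It applies when the temperature changes from a stress-free reference T₀ to T.

```latex
\sigma_c \approx \frac{E_c}{1-\nu_c}\,\left(\alpha_s - \alpha_c\right)\left(T - T_0\right)
```

Here E_c and ν_c are the coating's Young's modulus and Poisson's ratio, and α_s and α_c are the expansion coefficients of substrate and coating. When α_c = α_s, the stress vanishes whatever the temperature swing. Suppose instead the coating expanded more than the substrate (α_c > α_s). Cooling (T < T₀) would then make σ_c positive, that is, tensile, and tension is the condition that opens cracks in a brittle glass.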

Lockheed conducted further studies at its Palo Alto Research Center. Investigators cut the weight of RSI by raising its porosity from the 89 percent of LI-1500 to 93 percent. The material that resulted, LI-900, weighed only 9 pounds per cubic foot, one-seventh the density of water.[1065] There also was much fundamental work on materials. Silica exists in three crystalline forms: quartz, cristobalite, and tridymite. These not only have high coefficients of thermal expansion but also show sudden expansion or contraction with temperature because of solid-state phase changes. Cristobalite is particularly noteworthy; above 400 °F, it expands by more than 1 percent as it transforms from one phase to another. Silica fibers for RSI were to be glass, an amorphous rather than a crystalline state with a very low coefficient of thermal expansion and an absence of phase changes. The glassy form thus offered superb resistance to thermal stress and thermal shock, which would recur with each return from orbit.[1066]
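The porosity and density figures quoted for LI-1500 and LI-900 agree with simple mixture arithmetic. If the pores are treated as empty, bulk density is the solid fraction times the density of the solid. The sketch below assumes a fused-silica density of about 137 lb/ft3 (roughly 2.2 g/cm³) and water at 62.4 lb/ft3. Neither value appears in the source.

```python
"""Bulk density of a porous silica insulation from its porosity (illustrative sketch)."""

SILICA_LB_FT3 = 137.0  # assumed density of fused (amorphous) silica, about 2.2 g/cm^3
WATER_LB_FT3 = 62.4    # density of water, lb/ft^3


def bulk_density(porosity: float, solid_density: float = SILICA_LB_FT3) -> float:
    """Density of a solid with the given void fraction, treating the pores as empty."""
    if not 0.0 <= porosity < 1.0:
        raise ValueError("porosity must be in [0, 1)")
    return (1.0 - porosity) * solid_density


if __name__ == "__main__":
    # Porosities as quoted in the text for the two Lockheed materials.
    for name, porosity in (("LI-1500", 0.89), ("LI-900", 0.93)):
        rho = bulk_density(porosity)
        print(f"{name}: {rho:5.1f} lb/ft3, about 1/{WATER_LB_FT3 / rho:.1f} the density of water")
```

With these assumptions, the estimates come out near 15.1 and 9.6 lb/ft3, close to the quoted 15 and 9 lb/ft3. The gap for LI-900 reflects the rounded porosity and the approximate solid density.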

The raw silica fiber came from Johns Manville, which produced it from high-purity sand. At elevated temperatures, it tended to undergo “devitrification,” transforming from a glass into a crystalline state. Then, when cooling, it passed through phase-change temperatures and the fiber suddenly shrank, producing large internal tensile stresses. Some fibers broke, giving rise to internal cracking within the RSI and degradation of its properties. These problems threatened to grow worse during subsequent cycles of reentry heating.

To prevent devitrification, Lockheed worked to remove impurities from the raw fiber. Company specialists raised the purity of the silica to 99.9 percent while reducing contaminating alkalis to as low as 6 parts per million. Lockheed carried out this purification not only in the laboratory but also in a pilot plant. This plant took the silica from raw material to finished tile, applying 140 process controls along the way. Established in 1970, the pilot plant was expanded in 1971 to attain a true manufacturing capability. Within this facility, Lockheed produced tiles of LI-1500 and LI-900 for use in extensive programs of test and evaluation. In turn, the increasing availability of these tiles encouraged their selection for Shuttle protection in lieu of a hot-structure approach.[1067]

General Electric (GE) also became actively involved, studying types of RSI made from zirconia and from mullite, 3Al₂O₃·2SiO₂, as well as from silica. The raw fibers were commercial grade, with the zirconia coming from Union Carbide and the mullite from Babcock and Wilcox. Devitrification was a problem, but whereas Lockheed had addressed it by purifying its fiber, GE took the raw silica from Johns Manville and tried to use it with little change. The basic fiber, the Q-felt of Dyna-Soar, also had served as insulation on the X-15. It contained 19 different elements as impurities. Some were present at a few parts per million, but others (aluminum, calcium, copper, lead, magnesium, potassium, and sodium) ran from 100 to 1,000 parts per million. In total, up to 0.3 percent was impurity.

General Electric treated this fiber with a silicone resin that served as a binder, then pyrolyzed the resin, breaking it down at high temperatures. This transformed the fiber into a composite, sheathing each strand with a layer of amorphous silica that had a purity of 99.98 percent or more. This high purity resulted from that of the resin. The amorphous silica bound the fibers together while inhibiting their devitrification. General Electric’s RSI had a density of 11.5 lb/ft3, midway between that of LI-900 and LI-1500.[1068]

Many Shuttle managers had supported hot structures, but by mid-1971 hot structures were in trouble. In Washington, the Office of Management and Budget (OMB) now was making it clear that it expected to impose stringent limits on funding for the Shuttle, which brought a demand for new configurations that could cut the cost of development. Within weeks, the contractors did a major turnabout. They abandoned hot structures and embraced RSI. Managers were aware that RSI might take time to develop for operational use, but they were prepared to use ablatives for interim thermal protection and to switch to RSI once it was ready.[1069]

What brought this dramatic change? The advent of RSI production at Lockheed was critical. This drew attention from Max Faget, a longtime NACA-NASA leader who had kept his hand in the field of Shuttle design, offering a succession of conceptual design configurations that had helped to guide the work of the contractors. His most important concept, designated MSC-040, came out in September 1971 and served as a point of reference. It used RSI and proposed to build the Shuttle of aluminum rather than Rene 41 or anything similar.[1070]

Why aluminum? “My history has always been to take the most conservative approach,” Faget explained subsequently. Everyone knew how to work with aluminum, for it was the most familiar of materials, but everything else carried large question marks. Titanium, for one, was something of a black art. Much of the relevant shop-floor experience had been gained within the SR-71 program and was classified. Few machine shops had pertinent background, for only Lockheed had constructed an airplane, the SR-71, that used titanium hot structure. The situation was worse for columbium and the superalloys, for these metals had been used mostly in turbine blades. Lockheed had encountered serious difficulties as its machinists and metallurgists wrestled with titanium. With the Shuttle facing the OMB’s cost constraints, no one cared to risk an overrun while machinists struggled with the problems of other new materials.[1071]

NASA Langley had worked to build a columbium heat shield for the Shuttle and had gained a particularly clear view of its difficulties. It was heavier than RSI but offered no advantage in temperature resistance.

In addition, coatings posed serious problems. Silicides showed promise of reusability and long life, but they were fragile and easily damaged. A localized loss of coating could result in rapid oxygen embrittlement at high temperatures. Unprotected columbium oxidized readily, and above the melting point of its oxide, 2,730 °F, it could burst into flame.[1072] “The least little scratch in the coating, the shingle would be destroyed during reentry,” Faget said. Charles Donlan, the Shuttle Program Manager at NASA Headquarters, placed this in a broader perspective in 1983:

Phase B was the first really extensive effort to put together studies related to the completely reusable vehicle. As we went along, it became increasingly evident that there were some problems. And then as we looked at the development problems, they became pretty expensive. We learned also that the metallic heat shield, of which the wings were to be made, was by no means ready for use. The slightest scratch and you are in trouble.[1073]

Other refractory metals offered alternatives to columbium, but even if they were used, the complexity of a hot structure militated against its selection. As a mechanical installation, it called for large numbers of clips, brackets, standoffs, frames, beams, and fasteners. Structural analysis loomed as a formidable task. Each of many panel geometries needed its own analysis, to show with confidence that the panels would not fail through creep, buckling, flutter, or stress under load. Yet this confidence might be fragile, for hot structures had limited ability to resist over-temperatures. They also faced the continuing issue of sealing panel edges against ingestion of hot gas during reentry.[1074]

In this fashion, having taken a long look at hot structures, NASA did an about-face as it turned toward the RSI that Lockheed’s Max Hunter had recommended as early as 1965. Then, in January 1972, President Richard Nixon gave his approval to the Space Shuttle program, thereby raising it to the level of a Presidential initiative. Within days, NASA’s Dale Myers spoke to a conference in Houston and stated that the Agency had made the basic decision to use RSI. Requests for proposal soon went out, inviting leading aerospace corporations to bid for the prime contract on the Shuttle orbiter, and North American won this $2.6-billion prize in July. However, the RSI it proposed wasn’t Lockheed’s. North American’s proposal specified mullite RSI for the undersurface and forward fuselage, a design feature held over from the company’s studies of a fully reusable orbiter during the previous year.[1075]

Still, was mullite RSI truly the one to choose? It came from General Electric and had lower emissivity than the silica RSI of Lockheed but could withstand higher temperatures. Yet the true basis for selection lay in the ability to withstand 100 reentries as simulated in ground test. NASA conducted these tests during the last 5 months of 1972, using facilities at its Ames, Johnson, and Kennedy Centers, with support from Battelle Memorial Institute.

The main series of tests ran from August to November and gave a clear advantage to Lockheed. That firm’s LI-900 and LI-1500 went through 100 cycles to 2,300 °F and met specified requirements for maintenance of low back-face temperature and minimal thermal conductivity. The mullite showed excessive back-face temperatures and higher thermal conductivity, particularly at elevated temperatures. As test conditions increased in severity, the mullite also developed coating cracks and gave indications of substrate failure.

The tests then introduced acoustic loads, with each cycle of the simulation now subjecting the RSI to loud roars of rocket flight along with the heating of reentry. LI-1500 continued to show promise. By mid-November, it demonstrated the equivalent of 20 cycles to 160 decibels, the acoustic level of a large launch vehicle, and 2,300 °F. A month later, NASA conducted what Lockheed describes as a “sudden death shootout”: a new series of thermal-acoustic tests, in which the contending materials went into a single large 24-tile array at NASA Johnson. After 20 cycles, only Lockheed’s LI-900 and LI-1500 remained intact. In separate tests, LI-1500 withstood 100 cycles to 2,500 °F and survived a thermal overshoot to 3,000 °F, as well as an acoustic overshoot to 174 dB. Clearly, this was the material NASA wanted.[1076]
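Standard decibel definitions show how much harsher the 174 dB acoustic overshoot was than the 160 dB test level. These definitions are not from the source. The sketch assumes the levels are sound pressure levels referenced to the usual 20 micropascals in air. It converts them to rms pressure and compares intensities.

```python
"""Compare a 174 dB acoustic overshoot with a 160 dB test level (illustrative sketch)."""

P_REF_PA = 20e-6  # assumed reference pressure for sound pressure level in air, 20 micropascals


def spl_to_pressure_pa(spl_db: float) -> float:
    """RMS sound pressure for a sound pressure level, from L = 20 * log10(p / p_ref)."""
    return P_REF_PA * 10 ** (spl_db / 20.0)


def intensity_ratio(delta_db: float) -> float:
    """Ratio of acoustic intensities (power) for a level difference in decibels."""
    return 10 ** (delta_db / 10.0)


if __name__ == "__main__":
    base, overshoot = 160.0, 174.0  # levels quoted in the text
    p_base = spl_to_pressure_pa(base)
    p_over = spl_to_pressure_pa(overshoot)
    print(f"{base:.0f} dB -> {p_base:,.0f} Pa rms")
    print(f"{overshoot:.0f} dB -> {p_over:,.0f} Pa rms")
    print(f"pressure ratio {p_over / p_base:.1f}, "
          f"intensity ratio {intensity_ratio(overshoot - base):.1f}")
```

Each 20 dB step is a tenfold increase in pressure. The 14 dB overshoot therefore corresponds to roughly five times the rms pressure and about 25 times the acoustic intensity of the 160 dB level.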


As insulation, the tiles were astonishing. A researcher could heat one in a furnace until it was white hot, remove it, allow its surface to cool for a couple of minutes, and pick it up at its edges using his or her fingers, with its interior still at white heat. Lockheed won the thermal-protection subcontract in 1973, with NASA specifying LI-900 as the baseline RSI. The firm responded with preparations for a full-scale production facility in Sunnyvale, CA. With this, tiles entered the mainstream of thermal protection.
