Laboratory Experiments and Sonic Boom Theory

The rapid progress made in understanding the nature and significance of sonic booms during the 1960s resulted from the synergy among flight testing, wind tunnel experiments, psychoacoustical studies, theoretical refinements, and new computing capabilities. Vital to this process was the largely free exchange of information among NASA, the FAA, the USAF, the airplane manufacturers, academia, and professional organizations such as the American Institute of Aeronautics and Astronautics (AIAA) and the Acoustical Society of America (ASA). The sharing of information even extended to potential rivals in Europe, where the Anglo-French Concorde supersonic airliner had gotten a head start on the more ambitious American program.

Designing commercial aircraft has always required tradeoffs among speed, range, capacity, weight, durability, safety, and, of course, cost (of both manufacturing and operations). Balancing such factors was especially challenging with an aircraft as revolutionary as the SST. Unlike the designers of supersonic military aircraft in the 1950s, NASA’s scientists and engineers and their partners in industry increasingly had to consider the environmental impacts of their designs. At the Agency’s aeronautical Centers, especially Langley, this meant that aerodynamicists incorporated the growing knowledge about sonic booms into their equations, models, and wind tunnel experiments.

Harry Carlson of the Langley Center conducted the first wind tunnel experiment on sonic boom generation in 1959. As he reported that December, he tested seven models of various geometrical and airplane-like shapes at differing angles of attack in Langley’s original 4 by 4 supersonic wind tunnel at a speed of Mach 2.01. The tunnel’s limited interior space mandated the use of very small models to obtain sonic boom signatures: about 2 inches long for measuring shock waves at a distance of 8 body lengths, and only about three-quarters of an inch for trying to measure them at 32 body lengths (as close as possible to the “far field,” the distance at which multiple shock waves coalesce into the typical N-wave signature). Although the far-field data were problematic, the overall results correlated with existing theory, such as Whitham’s formulas for volume-induced overpressures and Walkden’s for those caused by lift.[387] Carlson’s attempt to design one of the models to alleviate the strength of the bow shock was unsuccessful, but it might be considered NASA’s first attempt at boom minimization.

The small size and extreme precision needed for the models, the disruptive effects of the assemblies needed to hold them, and the extra sensitivity required of pressure-sensing devices all limited a wind tunnel’s ability to measure the type of shock waves that would reach the ground from a full-sized aircraft. Even so, substantial progress continued, and the data served as a useful cross-check on flight test data and mathematical formulas.[388] For example, in 1962 Carlson used a 1-inch model of a B-58 to make the first correlation of flight test data with wind tunnel data and sonic boom theory. The results showed that wind tunnel readings, with appropriate extrapolations, could be used with some confidence to estimate sonic boom signatures.[389]

Exactly 5 years after publishing the results of the first wind tunnel sonic boom experiment, Harry Carlson was able to report, “In recent years, intensive research efforts treating all phases of the problem have served to provide a basic understanding of this phenomenon. The theoretical studies [of Whitham and Walkden] have resulted in the correlations with the wind tunnel data . . . and with the flight data.”[390] As for minimization, wind tunnel tests of SCAT models had revealed that some configurations (e.g., the “arrow wing”) produced lower overpressures.[391] Such possibilities were soon being explored by aerodynamicists in industry, academia, and NASA. They included Langley’s long-time supersonic specialist, F. Edward McLean, who had discovered extended near-field effects that might permit designing airframes for lower overpressures.[392] Of major significance (and with even greater potential for the future), improved data reduction methods and numerical evaluations of sonic boom theory were being adapted for high-speed processing with new computer codes and hardware, such as Langley’s massive IBM 704. Using these new capabilities, Carlson, McLean, and others eventually designed the SCAT-15F, an improved SST concept optimized for highly efficient cruise.[393]

In addition to reports and articles, NASA researchers presented findings from the growing knowledge about sonic booms at various meetings and professional symposia. One of the earliest took place on September 17-19, 1963, when NASA Headquarters sponsored an SST feasibility studies review at the Langley Center. Attended by Government, contractor, and airline personnel, it examined every aspect of the planned airplane. In a session on noise, Harry Carlson warned that “sonic boom considerations alone may dictate allowable minimum altitudes along most of the flight path and have indicated that in many cases the airframe sizing and engine selection depend directly on sonic boom.”[394] In addition, Harvey Hubbard and Domenic Maglieri discussed how atmospheric effects and community response to building vibrations might pose problems for the current SST sonic boom objectives (2 psf during acceleration and 1.5 psf during cruise).[395]

The conferees discussed various other technological challenges for the planned American SST, some indirectly related to the sonic boom issue. For example, because of frictional heating, an airframe covered largely with stainless steel (such as the XB-70) or titanium (such as the then-top-secret A-12/YF-12) would be needed to cruise at Mach 2.7+ and above 60,000 feet, an altitude that many hoped would allow the sonic boom to weaken before it reached the surface. Manufacturing such a plane, however, would be much more expensive than building a Mach 2.2 SST with aluminum skin, such as the Concorde.

Despite such concerns, the FAA had already released the SST request for proposals (RFP) on August 15, 1963. Thereafter, as explained by Ed McLean, "NASA’s role changed from one of having its own concepts evaluated by the airplane industry to one of evaluating the SST concepts of the airplane industry.”[396] By January 1964, Boeing, Lockheed, North American, and their jet engine partners had submitted initial proposals. In retrospect, advocates of the SST were obviously hoping that technology would catch up with requirements before it went into production.

Although the SST program was now well underway, growing awareness of the public response to booms became one of many factors that tri-agency (FAA-NASA-DOD) groups in the mid-1960s, including the PAC chaired by Robert McNamara, considered in evaluating the proposed SST designs. The sonic boom issue also became the focus of a special committee of the National Academy of Sciences and attracted increasing attention from the academic and scientific community at large.

The Acoustical Society of America, made up of professionals of all fields involving sound (ranging from music to noise to vibrations), sponsored the first Sonic Boom Symposium on November 3, 1965, during its 70th meeting in—appropriately enough—St. Louis. McLean, Hubbard, Carlson, Maglieri, and other Langley experts presented papers on the background of sonic boom research and their latest findings.[397] The paper by McLean and Barrett L. Shrout included details on a breakthrough in using near-field shock waves to evaluate wind tunnel models for boom minimization, in this case a reduction in maximum overpressure in a climb profile from 2.2 to 1.1 psf. This technique also allowed the use of 4-inch models, which were easier to fabricate to the close tolerances required for accurate measurements.[398]

In addition to the scientists and engineers employed by the aircraft manufacturers, eminent researchers in academia took on the challenge of discovering ways to minimize the sonic boom, usually with support from NASA. These included the team of Albert George and A. Richard Seebass of Cornell University, which had one of the Nation’s premier aeronautical laboratories. Seebass edited the proceedings of NASA’s first sonic boom research conference, held on April 12, 1967. The meeting was chaired by another pioneer of minimization, Wallace D. Hayes of Princeton University, and attended by more than 60 other Government, industry, and university experts. Boeing had been selected as the SST contractor less than 4 months earlier, but the sonic boom was becoming widely recognized as a possibly fatal flaw for its future production, or at least for allowing it to fly supersonically over land.[399] The two most obvious theoretical ways to reduce sonic booms during supersonic cruise, flying much higher with no increase in weight or building an airframe 50 percent longer at half the weight, were not considered practical.[400] Furthermore, as a presentation by Domenic Maglieri on flight test findings made apparent, even such an airplane would still have to deal with booms caused by maneuvering and accelerating and with the effects of atmospheric conditions.[401]

The stated purpose of this conference was “to determine whether or not all possible aerodynamic means of reducing sonic boom overpressure were being explored.”[402] In that regard, Harry Carlson showed how various computer programs then being used at Langley for aerodynamic analyses (e.g., lift and drag) were also proving to be useful tools for bow wave predictions, complementing improved wind tunnel experiments for examining boom minimization concepts.[403] After presentations by representatives from NASA, Boeing, and Princeton, and follow-on discussions by other experts, some of the attendees thought more avenues of research could be explored. But many still doubted whether low enough sonic booms were possible using contemporary technologies. Accordingly, NASA’s Office of Advanced Research and Technology, which hosted the conference, established specialized research programs on seven aspects of sonic boom theory and applications at five American universities and the Aeronautical Research Institute of Sweden.[404] This mobilization of aeronautical brainpower almost immediately began to pay dividends.

Seebass and Hayes cochaired NASA’s second sonic boom conference on May 9-10, 1968. It included 19 papers on the latest boom-related testing, research, experimentation, and theory by specialists from NASA and the universities. The advances made in one year were impressive. In the area of theory, for example, the straightforward linear technique for predicting the propagation of sonic booms from slender airplanes such as the SST had proven reliable, even for calculating some nonlinear (mathematically complex and highly erratic) aspects of their signatures.

Additional field testing had improved understanding of the geometrical acoustics caused by atmospheric conditions. Computational capabilities needed to deal with such complexities continued to accelerate. Aeronautical Research Associates of Princeton (ARAP), under a NASA contract, had developed a computer program to calculate overpressure signatures for supersonic aircraft in a horizontally stratified atmosphere. Offering another preview of the digital future, researchers at Ames had begun using a computer with graphic displays to perform flow-field analyses and to experiment with a dozen diverse aircraft configurations for lower boom signatures. Several other papers by academic experts, such as Antonio Ferri of New York University (a notable prewar Italian aerodynamicist who had worked at the NACA’s Langley Laboratory after escaping to the United States in 1944), dealt with progress in the aerodynamic techniques to reduce sonic booms.[405]

Nevertheless, several important theoretical problems remained, such as the prediction of sonic boom signatures near a caustic (an objective of the previously described Jackass Flats testing in 1970), the diffraction of shock waves into "shadow zones” beyond the primary sonic boom carpet, nonlinear shock wave behavior near an aircraft, and the still mystifying effects of turbulence. Ira R. Schwartz of NASA’s Office of Advanced Research and Technology summed up the state of sonic boom minimization as follows: "It is yet too early to predict whether any of these design techniques will lead the way to development of a domestic SST that will be allowed to fly supersonic over land as well as over water.”[406]

Rather than conduct another meeting the following year, NASA deferred to a conference held by NATO’s Advisory Group for Aerospace Research & Development (AGARD) on aircraft engine noise and sonic boom in Paris in May 1969. Experts from the United States and five other nations attended this forum, which consisted of seven sessions. Three of the sessions, plus a roundtable, dealt with the status of boom research and the challenges ahead.[407] As these conferences reflected, the three-way partnership among NASA, Boeing, and the academic aeronautical community during the late 1960s continued to yield new knowledge about sonic booms as well as technological advances in exploring ways to deal with them. In addition to more flight test data and improved theoretical constructs, much of this progress was the result of various experimental apparatuses.

The use of wind tunnels (especially Langley’s 4 by 4 supersonic wind tunnels and the 9 by 7 and 8 by 7 supersonic sections of Ames’s Unitary Wind Tunnel complex) continued to advance the understanding of shock wave generation and aircraft configurations that could minimize the sonic boom.[408] As two of Langley’s sonic boom experts reported in 1970, the many challenges caused by nonuniform tunnel conditions, model and probe vibrations, boundary layer effects, and the precision needed for small models "have been met with general success.”[409]

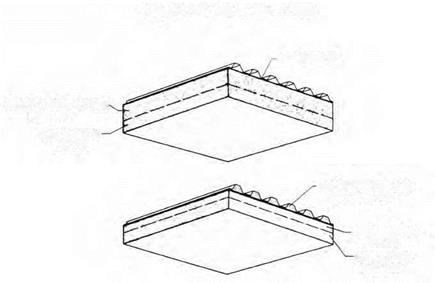

Also during the latter half of the 1960s, NASA and its contractors developed several new types of simulators that proved useful in studying the physical and psychoacoustic effects of sonic booms. The smallest (and least expensive) was a spark discharge system. The Langley Center and other laboratories used these “bench-type” devices for basic research into the physics of pressure waves. Langley’s system created miniature sonic booms by discharging high-voltage bolts of electricity between tungsten electrodes and using parabolic or two-dimensional mirrors to focus the resulting shock waves toward precisely placed microphones. Such experiments were used to verify laws of geometrical acoustics. The system’s ability to produce shock waves that spread out spherically proved useful for investigating how the cone-shaped waves generated by aircraft interact with buildings.[410]

For studying the effect of temperature gradients on boom propagation, Langley used a ballistic range consisting of a helium gas launcher that shot miniature projectiles at constant Mach numbers through a partially enclosed chamber. The inside could be heated to ensure a stable atmosphere for accuracy in boom measurements. Innovative NASA-sponsored simulators included Ling-Temco-Vought’s shock-expansion tube, basically a mobile 13-foot-diameter conical horn mounted on a trailer, and General American Research Division’s explosive gas-filled envelopes suspended above sensors at Langley’s sonic boom simulation range.[411] NASA also contracted with Stanford Research Institute for simulator experiments that showed how sonic booms could interfere with sleep, especially for older people.[412]

Other simulators were devised to handle both human and structural response to sonic booms. (The need to better understand effects on people was called for in a report released in June 1968 by the National Academy of Sciences.)[413] Unlike the previously described studies using actual sonic booms created by aircraft, these devices had the advantages of a controlled laboratory environment. They allowed researchers to produce multiple boom signatures of varying shapes, pressures, and durations as often as needed at a relatively low cost.[414] The Langley Center’s Low-Frequency Noise Facility—built earlier in the 1960s to generate the intense chest-pounding sounds of giant Saturn boosters during Apollo launches—also performed informative sonic boom simulation experiments. Consisting of a cylindrical test chamber 24 feet in diameter and 21 feet long, it could accommodate people, small structures, and materials for testing. Its electrohydraulically operated 14-foot piston was capable of producing sound waves from 1-50 hertz (sort of a super subwoofer) and sonic boom N-waves from 0.5 to 20 psf at durations from 100 to 500 milliseconds.[415]

To provide an even more versatile system designed specifically for sonic boom research, NASA contracted with General Applied Science Laboratories (GASL) of Long Island, NY, to develop an ideal simulator using a quick-action valve and shock tube design. (Antonio Ferri was the president of GASL, which he had cofounded with the illustrious aeronautical scientist Theodore von Karman in 1956.) Completed in 1969, this new simulator consisted of a high-speed flow valve that sent pressure wave bursts through a heavily reinforced 100-foot-long conical duct that expanded into an 8 by 8 test section with an instrumentation and model room. It could generate overpressures up to 10 psf with durations from 50 to 500 milliseconds. Able to operate with less than 1 minute between bursts, it produced sonic boom signatures that proved very accurate and easy to control.[416] In the opinion of Ira Schwartz, “the GASL/NASA facility represents the most advanced state of the art in sonic boom simulation.”[417]

While NASA and its partners were learning more about the nature of sonic booms, the SST was becoming mired in controversy. Many in the public, the press, and the political arena were concerned about the noise SSTs would create, with a growing number expressing hostility to the entire SST program. As one of the more reputable critics wrote in 1966, with a map showing a dense network of future boom carpets crossing the United States, "the introduction of supersonic flight, as it is at present conceived, would mean that hundreds of millions of people would not only be seriously disturbed by the sonic booms. . . they would also have to pay out of their own pockets (through subsidies) to keep the noise-creating activity alive.”[418]

Opposition to the SST grew rapidly in the late 1960s, and the program became a cause célèbre for the burgeoning environmental movement as well as a target for small-Government conservatives opposed to Federal subsidies.[419] Typical of the growing trend among opinion makers, the New York Times published its first strongly anti-sonic-boom editorial in June 1968, linking the SST’s potential sounds with an embarrassing incident the week before, when an F-105 flyover shattered 200 windows at the Air Force Academy, injuring a dozen people.[420] The next 2 years brought a growing crescendo of complaints about the supersonic transport, both for its expense and for the problems it could cause, even as research on controlling sonic booms began to bear some fruit.

By the time 150 scientists and engineers gathered in Washington, DC, for NASA’s third sonic boom research conference on October 29-30, 1970, the American supersonic transport program was less than 6 months away from cancellation. Thus the 29 papers presented at the conference and others at the ASA’s second sonic boom symposium in Houston the following month might be considered, in their entirety, as a final status report on sonic boom research during the SST decade.[421] Of future if not near-term significance, considerable progress was being made in understanding how to design airplanes that could fly faster than the speed of sound while leaving behind a gentler sonic footprint.

As summarized by Ira Schwartz: "In the area of boom minimization, the NASA program has utilized the combined talents of Messrs. E. McLean, H. L. Runyan, and H. R. Henderson at NASA Langley Research Center, Dr. W. D. Hayes at Princeton University, Drs. R. Seebass and A. R. George at Cornell University, and Dr. A. Ferri at New York University to determine the optimum equivalent bodies of rotation [a technique for relating airframe shapes to standard aerodynamic rules governing simple projectiles with round cross sections] that minimize the overpressure, shock pressure rise, and impulse for given aircraft weight, length, Mach number, and altitude of operation. Simultaneously, research efforts of NASA and those of Dr. A. Ferri at New York University have provided indications of how real aircraft can be designed to provide values approaching these optimums. . . . This research must be continued or even expanded if practical supersonic transports with minimum and acceptable sonic boom characteristics are to be built.”[422]

Any consensus among the attendees about the progress they were making was no doubt tempered by their awareness of the financial problems now plaguing the Boeing Company and the political difficulties facing the administration of President Richard Nixon in continuing to subsidize the American SST. From a technological standpoint, many of them also seemed resigned that Boeing’s final 2707-300 design (despite its 306-foot length and 64,000-foot cruising altitude) would not pass the overland sonic boom test. Richard Seebass, who was in the vanguard of minimization research, admitted that "the first few generations of supersonic transport (SST) aircraft, if they are built at all, will be limited to supersonic flight over oceanic and polar regions.”[423] In view of such concerns, some of the attendees were even looking toward hypersonic aerospace vehicles, in case they might cruise high enough to leave an acceptable boom carpet.

As for the more immediate prospects of a domestic supersonic transport, Lynn Hunton of the Ames Research Center warned that "with regard to experimental problems in sonic boom research, it is essential that the techniques and assumptions used be continuously questioned as a requisite for assuring the maximum in reliability.”[424] Harry Carlson probably expressed the general opinion of Langley’s aerodynamicists when he cautioned that "the problem of sonic boom minimization through airplane shaping is inseparable from the problems of optimization of aerodynamic efficiency, propulsion efficiency, and structural weight. . . . In fact, if great care is not taken in the application of sonic boom design principles, the whole purpose can be defeated by performance degradation, weight penalties, and a myriad of other practical considerations.”[425]

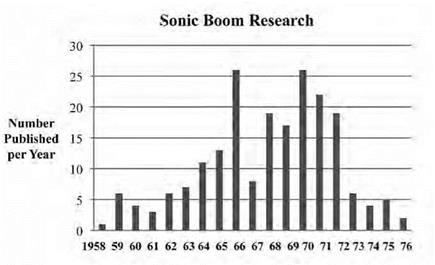

After both the House and Senate voted in March 1971 to eliminate SST funding, a joint conference committee confirmed its termination in May.[426] This and related cuts in supersonic research inevitably slowed momentum in dealing with sonic booms. Even so, researchers in NASA, as well as in academia and the aerospace industry, would keep alive the possibility of civilian supersonic flight in a more constrained and less technologically ambitious era. Fortunately for them, the ill-fated SST program left behind a wealth of data and discoveries about sonic booms. As evidence, the Langley Research Center produced or sponsored more than 200 technical publications on the subject over 19 years, most related to the SST program. (Many of those published in the early 1970s were based on previous research and testing.) This literature, depicted in Figure 4, would be a legacy of enduring value in the future.[427]

Keeping Hopes Alive: Supersonic Cruise Research

"The number one technological tragedy of our time.” That was how President Nixon characterized the votes by the Congress to stop funding an American supersonic transport.[428] Despite its cancellation, the White House, the Department of Transportation (DOT), and NASA—as well as some in Congress—did not allow the progress in supersonic technologies the SST had engendered to completely dissipate. During 1971 and 1972, the DOT and NASA allocated funds for completing some of the tests and experiments that were underway when the program was terminated. The administration then added line-item funding to NASA’s fiscal year (FY) 1973 budget for scaled-down supersonic research, especially as related to environmental problems. In response, NASA established the Advanced Supersonic Technology (AST) program in July 1972.

To more clearly indicate the exploratory nature of this effort and allay fears that it might be a potential follow-on to the SST, the AST program was renamed Supersonic Cruise Aircraft Research (SCAR) in 1974. When the term aircraft in its title continued to raise suspicion in some quarters that the goal might be some sort of prototype, NASA shortened the program’s name to Supersonic Cruise Research (SCR) in 1979.[429] For the sake of simplicity, the latter name is often applied to all 9 years of the program’s existence. For NASA, the principal purpose of AST, SCAR, and SCR was to conduct and support focused research into the problems of supersonic flight while advancing related technologies. NASA’s aeronautical Centers, most of the major airframe manufacturers, and many research organizations and universities participated. From Washington, NASA’s Office of Aeronautics and Space Technology (OAST) provided overall supervision but delegated day-to-day management to the Langley Research Center, which established an AST Project Office in its Directorate of Aeronautics, soon placed under a new Aeronautical Systems Division. The AST program was organized into four major elements (propulsion, structure and materials, stability and control, and aerodynamic performance) plus airframe-propulsion integration. (NASA spun off the propulsion work on a variable cycle engine [VCE] as a separate program in 1976.) Sonic boom research was one of 16 subelements.[430]

At the Aeronautical Systems Division, Cornelius "Neil” Driver, who headed the Vehicle Integration Branch, and Ed McLean, as chief of the AST Project Office, were key officials in planning and managing the AST/SCAR effort. After McLean retired in 1978, the AST Project Office passed to a fellow aerodynamicist, Vincent R. Mascitti, while Driver took over the Aeronautical Systems Division. One year later, Domenic Maglieri replaced Mascitti in the AST Project Office.[431] Despite Maglieri’s sonic boom expertise, the goal of minimizing the AST’s sonic boom for overland cruise had long since ceased being an SCR objective. As later explained by McLean: "The basic approach of the SCR program. . . was to search for the solution of supersonic problems through disciplinary research. Most of these problems were well known, but no satisfactory solution had been found. When the new SCR research suggested a potential solution. . . the applicability of the suggested solution was assessed by determining if it could be integrated into a practical commercial supersonic airplane and mission. . . . If the potential solution could not be integrated, it was discarded.”[432]

To meet the practicality standard for integration into a supersonic airplane, solving the sonic boom problem had to clear a new and almost insurmountable hurdle. In April 1973, responding to years of political pressure, the FAA announced a new rule that banned commercial or civil aircraft from supersonic flight over the land mass or territorial waters of the United States if measurable overpressure would reach the surface.[433] One of the initial objectives of the AST’s sonic boom research had been to establish a metric for the public acceptability of sonic boom signatures for use in the aerodynamic design process. The FAA’s stringent new regulation seemed to rule out any such flexibility.

Figure 4. Reports produced or sponsored by NASA Langley, 1958-1976. NASA.

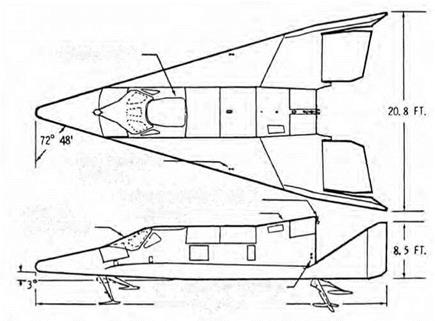

As a result, when Congress cut FY 1974 funding for the AST program from $40 million to about $10 million, the subelement for sonic boom research went on NASA’s chopping block. The design criteria for the SCAR/SCR program became a 300-foot-long, 270-passenger airplane that could fly as effectively as possible over land at subsonic speeds yet still cruise efficiently at 60,000 feet and Mach 2.2 over water. To meet these criteria, Langley aerodynamicists modified their SCAT-15F design from the late 1960s into a notional concept with better low-speed performance (but higher sonic boom potential) called the ATF-100. This served as a baseline for three industry teams in coming up with their own designs.[434]

When the AST program began, however, a significant quieting of the supersonic transport’s sonic footprint had still appeared possible. Sonic boom theory had advanced significantly during the 1960s, and some promising if not yet practical ideas for reducing boom signatures had begun to emerge. As indicated by Figure 4, some findings based on that research continued to appear in print during the early 1970s.

As far back as 1965, NASA’s Ed McLean had discovered that the sonic boom signature from a very long supersonic aircraft flying at the proper altitude could be nonasymptotic (i.e., not reach the ground in the form of an N-wave). This confirmed the possibility of tailoring an airplane’s shape to produce a more acceptable signature.[435] Some subsequent theoretical suggestions, such as various ways of projecting heat fields to create a longer “phantom” fuselage, are still decidedly futuristic, while others, such as adding a long spike to the nose of an SST to slow the rise of the bow shock wave, would (as described later) eventually prove more realistic.[436] Meanwhile, researchers under contract to NASA kept advancing the state of the art in more conventional directions. For example, Antonio Ferri of New York University, in partnership with Hans Sorensen of the Aeronautical Research Institute of Sweden, used new 3-D measuring techniques in Sweden’s trisonic wind tunnel to correlate near-field effects with linear theory more accurately. Tests of NYU’s model of a 300-foot-long SST cruising at Mach 2.7 at 60,000 feet showed the potential for sonic booms of less than 1.0 psf.[437] Ferri’s early death in 1975 left a void in supersonic aerodynamics, not least in sonic boom research.[438]

By the end of the SST program, Albert George and Richard Seebass had formulated a mathematical foundation for many of the previous theories. They also devised a near-field boom-minimization theory, valid in an isothermal atmosphere, for reducing the overpressures of flattop and ramp-type signatures. The theory applied to both front and rear shock waves, along with their parametric correlation to airframe lift and area. In a number of seminal papers and articles in the early 1970s, they explained the theory along with some ideas on possible aerodynamic shaping (e.g., slightly blunting an aircraft’s nose) and the optimum cruise altitude (lower than previously thought) for reducing boom signatures.[439]

Theoretical refinements and new computer modeling techniques continued to appear in the early 1970s. For example, in June 1972, Charles Thomas of the Ames Research Center explained a mathematical procedure using new algorithms for waveform parameters to extrapolate the formation of far-field N-waves. This was an alternative to using F-function effects (the pattern of near-field shock waves emanating from an airframe), which were the basis of the previously discussed program developed by Wallace Hayes and colleagues at ARAP. Although both methods accounted for acoustical ray tracing and could arrive at similar results, Thomas’s program allowed easier input of flight information (speed, altitude, atmospheric conditions, etc.) for automated data processing.[440]

In June 1973, at the end of the AST program’s first year, NASA Langley’s Harry Carlson, Raymond Barger, and Robert Mack published a study on the applicability of sonic boom minimization concepts for overland supersonic transport designs. They examined four reduced-boom concepts for a commercially viable Mach 2.7 SST with a range of 2,500 nautical miles (i.e., coast to coast in the United States). Using experimentally verified minimization concepts of George, Seebass, Hayes, Ferri, Barger, and the English researcher L. B. Jones, along with computational techniques developed at Langley during the SST program, Carlson’s team examined ways to manipulate the F-function to project a flatter far-field sonic boom signature. In doing this, the team was handicapped by the continuing lack of established signature characteristics (the combinations of initial peak overpressure, maximum shock strength, rise time, and duration) that people would best tolerate, both outdoors and especially indoors. Also, the complexity of aft aircraft geometry made measuring effects on tail shocks difficult.[441]

Even so, their study confirmed the advantages of designs with highly swept wings toward the rear of the fuselage, with twist and camber for sonic boom shaping. It also found that canards (small airfoils used as horizontal stabilizers near the nose of rear-winged aircraft) could optimize lift distribution for sonic boom benefits. Although two designs showed bow shocks of less than 1.0 psf, their report noted “that there can be no assurance at this time that [their] shock-strength values. . . if attainable, would permit unrestricted overland operations of supersonic transports.”[442] Ironically, these words were written just before the new FAA rule rendered them largely irrelevant.

In October 1973, Edward J. Kane of Boeing, who had been a key sonic boom expert during the SST program, released the results of a similar NASA-sponsored study on the feasibility of a commercially viable low-boom transport using technologies projected to be available in 1985. Based on the latest theories, Boeing explored two longer-range concepts: a high-speed (Mach 2.7) design that would produce a sonic boom of 1.0 psf or less, and a medium-speed (Mach 1.5) design with a signature of 0.5 psf or less.[443] In retrospect, this study, which reported mixed results, represented industry’s perspective on the prospects for boom minimization just as the AST program dropped plans for supersonic cruise over land.

Obviously, the virtual ban on civilian supersonic flight in the United States dampened private industry’s enthusiasm for investing much more capital in sonic boom research. Within NASA, some researchers with sonic boom experience also redirected their efforts into other areas of expertise. Of the approximately 1,000 technical reports, conference papers, and articles by NASA and its contractors listed in bibliographies of the SCR program from 1972 to 1980, only 8 dealt directly with the sonic boom.[444]

Even so, progress in understanding sonic booms did not come to a complete standstill. In 1972, Christine M. Darden, a Langley mathematician in an engineering position, had developed a computer code to adapt Seebass and George’s minimization theory, which was based on an isothermal (uniform) atmosphere, into a program that applied to a standard (stratified) atmosphere. It also allowed more design flexibility than previous low-boom configuration theory did, for example by permitting better aerodynamics in the nose area.[445]

Using this new computer program, Darden and Robert Mack followed up on the previously described study by Carlson’s team by designing wing-body models with low-boom characteristics: one for cruise at Mach 1.5 and two for cruise at Mach 2.7. At 6 inches in length, these were the largest yet tested for sonic boom propagation in a 4 by 4 supersonic wind tunnel, an improvement made possible by continued progress in measuring and extrapolating near-field effects to signatures in the far field. The specially shaped models (all arrow-wing configurations, which distributed lift effects to the rear) showed significantly lower overpressures and flatter signatures than standard designs did, especially at Mach 1.5, at which both the bow and tail shocks were softened. Because of funding limitations, this promising research could not be sustained long enough to develop definitive boom minimization techniques.[446] It was apparently the last significant experimentation on sonic boom minimization for more than a decade.

While this work was underway, Darden and Mack presented a paper on current sonic boom research at the first SCAR conference, held at Langley on November 9–12, 1976 (the only paper on that subject among the 47 presentations). “Contrary to earlier beliefs,” they explained, “it has been found that improved efficiency and lower sonic boom characteristics do not always go hand in hand.” As for the acceptability of sonic booms, they reported that the only research in North America was being done at the University of Toronto.[447] Another NASA contribution to understanding sonic booms came in early 1978 with Harry Carlson’s publication of “Simplified Sonic-Boom Prediction,” a how-to guide to a relatively quick and easy method for determining sonic boom characteristics. It could be applied to a wide variety of supersonic aircraft configurations as well as to spacecraft at altitudes up to 76 kilometers. Although his clever series of graphs and equations would not provide the accuracy needed for predicting booms from maneuvering aircraft or for designing airframe configurations, Carlson explained that “for many purposes (including the conduct of preliminary engineering studies or environmental impact statements), sonic-boom predictions of sufficient accuracy can be obtained by using a simplified method that does not require a wind tunnel or elaborate computing equipment. Computational requirements can in fact be met by hand-held scientific calculators, or even slide rules.”[448]
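To give a sense of the kind of hand calculation Carlson had in mind, the sketch below evaluates a peak-overpressure expression of the form commonly cited for his simplified method, Δp = K_p K_R √(p_v p_g) (M² − 1)^(1/8) h_e^(−3/4) l^(3/4) K_s. Here p_v and p_g are the ambient pressures at the aircraft and at the ground, h_e is the effective altitude, l is the vehicle length, K_s is a shape factor, K_p is a pressure-amplification factor, and K_R is a ground-reflection factor. This is a minimal sketch under that assumed form: the chart-derived factors are simply passed in as inputs, and the function name and example values are hypothetical, not taken from Carlson’s report.

```python
import math


def simplified_peak_overpressure(mach, p_vehicle, p_ground, h_eff, length,
                                 k_shape, k_pressure, k_reflection=2.0):
    """Hypothetical helper: peak far-field overpressure from a Carlson-style
    simplified equation (assumed form, see the lead-in paragraph).

    Pressures may be in any consistent unit (e.g., psf); h_eff and length
    must share a length unit, since only their ratio enters the result.
    k_shape and k_pressure stand in for values read from charts.
    """
    if mach <= 1.0:
        raise ValueError("simplified boom prediction requires supersonic flight (M > 1)")
    if min(p_vehicle, p_ground, h_eff, length) <= 0:
        raise ValueError("pressures, altitude, and length must be positive")
    # (M^2 - 1)^(1/8): weak dependence on Mach number
    mach_term = (mach * mach - 1.0) ** 0.125
    # h_e^(-3/4) * l^(3/4) == (l / h_e)^(3/4): far-field decay with distance
    geometry_term = (length / h_eff) ** 0.75
    return (k_pressure * k_reflection * math.sqrt(p_vehicle * p_ground)
            * mach_term * geometry_term * k_shape)


if __name__ == "__main__":
    # Illustrative placeholder inputs only (not values from Carlson's report).
    dp = simplified_peak_overpressure(mach=2.7, p_vehicle=150.0, p_ground=2116.0,
                                      h_eff=60000.0, length=300.0,
                                      k_shape=0.05, k_pressure=1.0)
    print(f"estimated peak overpressure: {dp:.2f} psf")
```

Because only the ratio l/h_e enters the geometry term, the sketch makes the far-field scaling explicit: holding the other inputs fixed, doubling the effective altitude lowers the predicted peak by a factor of 2^(3/4), or about 1.68.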

The month after publication of this study, NASA released its final environmental impact statement (EIS) for the Space Shuttle program, which benefited greatly from the Agency’s previous research on sonic booms, including that with the X-15 and Apollo missions, and from adaptations of Charles Thomas’s waveform-based computer program.[449] For the ascent, the EIS estimated maximum overpressures of 6 psf (possibly up to 30 psf with focusing effects) about 40 miles downrange over open water, caused by both the Shuttle’s long exhaust plume and its curving flight profile while accelerating toward orbit. During reentry of the manned vehicle, the sonic boom was estimated at a more modest 2.1 psf, which would affect about 500,000 people as it crossed the Florida peninsula, or 50,000 when landing at Edwards.[450] In following decades, as populations in those areas boomed, millions more would hear the sonic signatures of returning Shuttles, more than 120 of which would be monitored for their sonic booms.[451]

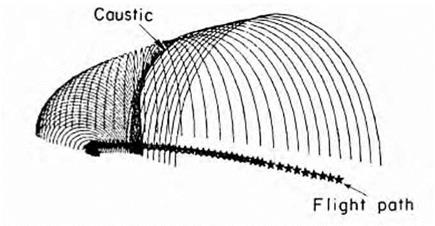

Some other limited experimental and theoretical work on sonic booms continued in the late 1970s. Richard Seebass at Cornell and Kenneth Plotkin of Wyle Research, for example, delved deeper into the challenging phenomena of caustics and focused booms.[452] At the end of the decade, Langley’s Raymond Barger published a study on the relationship of caustics to the shape and curvature of acoustical wave fronts caused by actual aircraft maneuvers. To graphically display these effects, he programmed a computer to draw simulated three-dimensional line plots of the acoustical rays in the wave fronts. Figure 5 shows how even a simple decelerating turn, in this case from Mach 2.4 to Mach 1.5 in a radius of 23 kilometers (14.3 miles), can focus the kind of caustic that might result in a super boom.[453]

Unlike in the 1960s, there was little if any NASA sonic boom flight testing during the 1970s. As a case in point, NASA’s YF-12 Blackbirds at Edwards (where the Flight Research Center was renamed the Dryden Flight Research Center in 1976) flew numerous supersonic missions in support of the AST/SCAR/SCR program, but none of them were dedicated to sonic boom issues.[454] On the other hand, operations of the Concorde began providing a good deal of empirical data on sonic booms.

One discovery about secondary booms came after British Airways and Air France began Concorde service to the United States in May 1976. Although the Concordes slowed to subsonic speeds while well offshore, residents along the Atlantic seaboard began hearing what were called the “East Coast Mystery Booms.” These were detected all the way from Nova Scotia to South Carolina, and some were measurable on seismographs.[455] Although a significant number of the sounds defied explanation, studies by the Naval Research Laboratory, the Federation of American Scientists, a committee of the Jason DOD scientific advisory group, and the FAA eventually determined that most of the low rumbles heard in Nova Scotia and New England were secondary booms from the Concorde. They originated from Concordes still about 75 to 150 miles offshore and reached land after being bent or reflected by temperature variations high up in the thermosphere. In July 1978, the FAA issued new rules prohibiting the Concorde from creating sonic booms that could be heard in the United States. The new FAA rules did not address the issue of secondary booms because of their low intensity; nevertheless, after Concorde secondary booms were heard by coastal communities, the Agency became even more sensitive to the sonic boom potential inherent in AST designs.[456]

Figure 5. Acoustic wave front above a maneuvering aircraft. NASA.

The second conference on Supersonic Cruise Research, held at NASA Langley in November 1979, was the first and last under its new name. More than 140 people from NASA, other Government agencies, and the aerospace industry attended. This time there were no presentations on the sonic boom, but a representative from North American Rockwell did describe the concept of a Mach 2.7 business jet for 8–10 passengers that would generate a sonic boom of only 0.5 psf.[457] It would take another 20 years for ideas about low-boom supersonic business jets to result in more than just paper studies.

Despite SCR’s relatively modest cost and significant technological accomplishments, the program suffered a premature death in 1981. Reasons for this included the Concorde’s economic woes, opposition to civilian R&D spending by key officials in the new administration of President Ronald Reagan, and a growing federal deficit. These factors, combined with cost overruns for the Space Shuttle, forced NASA to cancel Supersonic Cruise Research abruptly, without even funding the completion of many final reports.[458] In sonic boom research, one exception was a compilation of charts for estimating minimum sonic boom levels, published by Christine Darden in May 1981. She and Robert Mack also published results of their previous experimentation that would be influential when efforts to soften the sonic boom resumed.[459]
