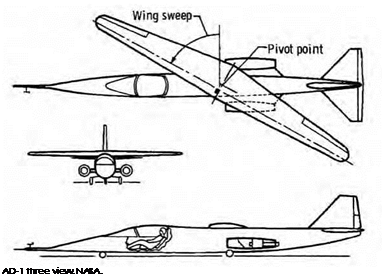

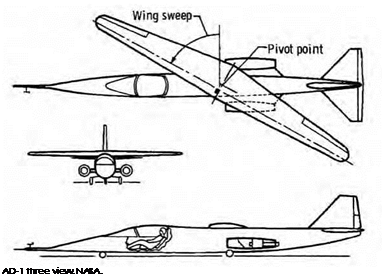

The AD-1 was a small and inexpensive demonstrator aircraft intended to investigate some of the issues of an oblique wing. It flew between 1979 and 1982. It had a maximum takeoff weight of 2,100 pounds and a maximum speed of 175 knots. It is an interesting case because (1) NASA had an unusually large role in its design and integration (it was essentially a NASA aircraft) and (2) it provides a neat illustration of the prosecution of a particular objective through design, analysis, wind tunnel test, flight test, and planned follow-on development.[953]

The oblique wing was conceived by German aerodynamicists in the midst of the Second World War. But it was only afterward, through the brilliance and determination of NASA aerodynamicist Robert T. Jones, that it advanced to actual flight. Indeed, Jones, father of the American swept wing, became one of the most persistent proponents of the oblique wing concept.[954] The principal advantage of the oblique wing is that it spreads both the lift and volume distributions of the wing over a greater length than that of a simple symmetrically swept wing. This has the effect of reducing both the wave drag due to lift and the wave drag due to volume, two important components of supersonic drag. With this theoretical advantage come practical challenges. The challenges fall into two broad categories: the effects of asymmetry on the flight characteristics (stability and handling qualities) of the vehicle, and the aeroelastic stability of the forward-swept wing. The research objectives of the AD-1 were primarily oriented toward flying qualities. The AD-1 was not intended to explore structural dynamics or divergence in depth, other than establishing safety of flight. Mike Rutkowski analyzed the wing for flutter and divergence using NASTRAN and other methods.[955]

However, the project did make a significant accomplishment in the use of static aeroelastic tailoring. The fiberglass wing design by Ron Smith was tailored to bend just enough, with increasing g, to cancel out an aerodynamically induced rolling moment. Pure bending of the oblique wing increases the incidence (and therefore the lift) of the forward-swept tip and decreases the incidence (and lift) of the aft-swept tip. In a pullup maneuver, which increases lift coefficient (CL) and load factor at a given flight condition, this would cause a roll away from the forward-swept tip. At the same time, induced aerodynamic effects (the downwash/upwash distribution) increase the lift at the tip of an aft-swept wing. On an aircraft with only one aft-swept tip, this would cause a roll toward the forward-swept side. The design intent for the AD-1 was to have these two effects cancel each other as nearly as possible, so that the net change in rolling moment due to increasing g at a given flight condition would be zero. The design condition was CL = 0.3 for 1-g flight at 170 knots, 12,500-foot altitude, and a weight of 1,850 pounds, with the wing at 60-degree sweep.[956]
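The quoted design condition can be sanity-checked with the level-flight relation CL = nW / (qS). The sketch below is only illustrative and rests on assumptions that are not in the text: a reference wing area of 93 square feet (hypothetical here; the source gives no area), the 170 knots taken as true airspeed, and International Standard Atmosphere density at 12,500 feet. Under those assumptions the result comes out close to the stated 0.3.

```python
# ISA troposphere constants (SI units)
T0 = 288.15       # sea-level temperature, K
RHO0 = 1.225      # sea-level density, kg/m^3
LAPSE = 0.0065    # temperature lapse rate, K/m
G = 9.80665       # gravitational acceleration, m/s^2
R_AIR = 287.053   # specific gas constant for air, J/(kg K)

# Unit conversions
FT_TO_M = 0.3048
KT_TO_MS = 0.514444
LBF_TO_N = 4.4482216
FT2_TO_M2 = FT_TO_M ** 2


def isa_density(alt_m):
    """Air density in the ISA troposphere (valid below about 11 km)."""
    theta = 1.0 - LAPSE * alt_m / T0
    return RHO0 * theta ** (G / (R_AIR * LAPSE) - 1.0)


def lift_coefficient(weight_lbf, speed_kt, alt_ft, wing_area_ft2, load_factor=1.0):
    """CL = n * W / (q * S), with q = 0.5 * rho * V^2 and V as true airspeed."""
    rho = isa_density(alt_ft * FT_TO_M)
    v = speed_kt * KT_TO_MS
    q = 0.5 * rho * v * v
    lift_n = load_factor * weight_lbf * LBF_TO_N
    return lift_n / (q * wing_area_ft2 * FT2_TO_M2)


# ASSUMPTION: the reference wing area is not stated in the text.
ASSUMED_WING_AREA_FT2 = 93.0

cl_design = lift_coefficient(1850.0, 170.0, 12500.0, ASSUMED_WING_AREA_FT2)
print(f"CL at the quoted design condition: {cl_design:.2f}")
```

Passing a `load_factor` greater than 1 shows how CL rises in a pullup at the same speed and altitude, which is the situation the tailored wing bending was meant to compensate for.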

An aeroelastically scaled one-sixth model was tested at full-scale Reynolds number in the Ames 12-Foot Pressure Wind Tunnel. A stiff aluminum wing was used for preliminary tests, then two fiberglass wings. The two fiberglass wings had zero sweep at the 25- and 30-percent chord lines, respectively, bracketing the full-scale AD-1 wing, which had zero sweep at 27-percent chord. The wings were tested at the design scaled dynamic pressure and at two lower values, so that wing load could be varied independently through angle of attack and through dynamic pressure at a constant angle of attack. Forces and moments were measured, and deflection was determined from photographs of the wing at test conditions.[957]

Subsequently, ". . . the actual wing deflection in bending and twist was verified before flight through static ground loading tests." Finally, in-flight measurements were made of total force and moment coefficients and of aeroelastic effects. Level-flight decelerations provided angle-of-attack sweeps at constant load, and windup turns provided angle-of-attack sweeps at constant "q" (dynamic pressure). Results were interpreted and compared with predictions. The simulator model, with aeroelastic effects included, realistically represented the dynamic responses of the flight vehicle.[958]

Provision had been made for mechanical linkage between the pitch and roll controls, to compensate for any pitch-roll coupling observed in flight. However, the intent of the aeroelastic wing was achieved closely enough that the mechanical interconnect was never used.[959] Roll trim was not needed at the design condition (60-degree sweep) nor at zero sweep, where the aircraft was symmetric. At intermediate sweep angles, roll trim was required. The correction of this characteristic was not pursued because it was not a central objective of the project. Also, the airplane experienced fairly large changes in rolling moment with angle of attack beyond the linear range. Vortex lift, other local flow separations, and ultimately full stall of the aft-swept wing occurred in rapid succession as angle of attack was increased from 8 to approximately 12 degrees. Therefore, it would be a severe oversimplification to say that the AD-1 had normal handling qualities.[960]

The AD-1 flew at speeds of 170 knots or less. On a large, high-speed aircraft, divergence of the forward-swept wing would also be a consideration. This would be addressed by a combination of inherent stiffness, aeroelastic tailoring to introduce a favorable bend-twist coupling, and, potentially, active load alleviation. The AD-1 project provided initial correlation of measured versus predicted wing bending and its effects on the vehicle’s flight characteristics. NASA planned to take the next step with a supersonic oblique wing aircraft, using the same F-8 airframe that had been used for earlier supercritical wing tests. These studies delved deeper into the aeroelastic issues: "Preliminary studies have been performed to identify critical DOF [Degree of Freedom] for flutter model tests of oblique configurations. An ‘oblique’ mode has been identified with a 5 DOF model which still retains its characteristics with the three rotational DOF’s. An interdisciplinary analysis code (STARS), which is capable of performing flutter and aeroservoelastic analyses, has been developed. The structures module has a large library of elements and in conjunction with numerical analysis routines, is capable of efficiently performing statics, vibration, buckling, and dynamic response analysis of structures. . . ." The STARS code also included supersonic (potential gradient method) and subsonic (doublet lattice) unsteady aerodynamics calculations. ". . . Linear flutter models are developed and transformed to the body axis coordinate system and are subsequently augmented with the control law. Stability analysis is performed using hybrid techniques. The major research benefit of the OWRA [Oblique Wing Research Aircraft] program will be validation of design and analysis tools. As such, the structural model will be validated and updated based on ground vibration test (GVT) results. The unsteady aero codes will be correlated with experimentally measured unsteady pressures."[961] While the OWRA program never reached flight (NASA was ready to begin wing fabrication in 1987, expecting first flight in 1991), these comments illustrate the typical interaction of flight programs with analytical methods development and the progressive validation process that takes place. Such methods development is often driven by unconventional problems (such as the oblique wing example here) and eventually finds its way into routine practice in more conventional applications. For example, in the design of large passenger aircraft today, the loads process is typically iterated to include the effects of static aeroelastic deflections on the aerodynamic load distribution.[962]

X-29

The Grumman X-29 aircraft was an extraordinarily ambitious and productive flight-test program run between 1984 and 1992. It demonstrated a large (approximately 35 percent) unstable static margin in the pitch axis, a digital active flight control system utilizing three-surface pitch control (all-moving canards, wing flaps, and aft-mounted strake flaps), and a thin supercritical forward-swept wing, aeroelastically tailored to prevent structural divergence. The X-29 was funded by the Defense Advanced Research Projects Agency (DARPA) through the USAF Aeronautical Systems Division (ASD). Grumman was responsible for aircraft design and fabrication, including the primary structural analyses, although there was extensive involvement of NASA and the USAF in addressing the entire realm of unique technical issues on the project. NASA Ames Research Center/Dryden Flight Research Facility was the responsible test organization.[963]

Careful treatment of aeroelastic stability was necessary for the thin forward-swept wing (FSW) to be used on a supersonic, highly maneuverable aircraft. According to Grumman, "Automated design and analysis procedures played a major role in the development of the X-29 demonstrator aircraft." Grumman used one of its programs, called FASTOP, to optimize the X-29’s structure to avoid aeroelastic divergence while minimizing the weight impact.[964]

In contrast to the AD-1, which allowed the forward-swept wing to bend along its axis, thereby increasing the lift at the forward tip, the X-29’s forward-swept wings were designed to twist when bending, in a manner that relieved the load. This was accomplished by orienting the primary spanwise fibers in the composite skins at a forward "kick angle" relative to the nominal structural axis of the wing. The optimum angle was found in a 1977 Grumman feasibility study: "Both beam and coarse-grid, finite-element models were employed to study various materials and laminate configurations with regard to their effect on divergence and flutter characteristics and to identify the weight increments required to avoid divergence."[965] While a pure strength design was optimum at zero kick angle, an angle of approximately 10 degrees was found to best satisfy the combined strength and divergence requirements.

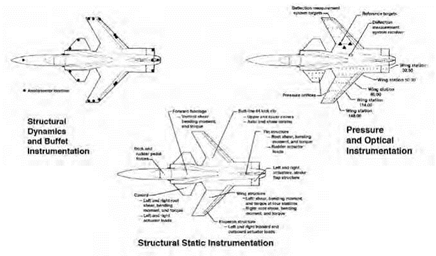

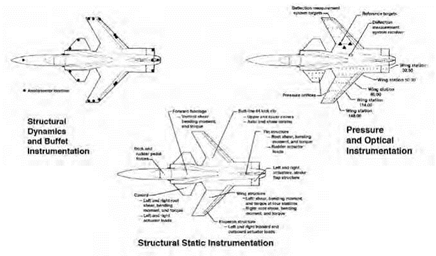

When the program reached the flight-test phase, hardware-in-the-loop simulation was integral to the flight program. During the functional and envelope expansion phases, every mission was flown on the simulator before it was flown in the airplane.[966] In flight, the X-29 No. 1 aircraft (of two that were built) carried extensive and somewhat unique instrumentation to measure the loads and deflections of the airframe, and particularly of the wing. This consisted of pressure taps on the left wing and canard, an optical deflection measurement system on the right wing, strain gages for static structural load measurement, and accelerometers for structural dynamic and buffet measurement.

The most unusual element of this suite was the optical system, which had been developed and used previously on the HiMAT demonstrator (see preceding description). Optical deflection data were sampled at a rate of 13 samples per channel per second. Data quality was reported to be very good, and initial results showed a good match to predictions. In addition, pressure data from the 156 wing and 17 canard pressure taps were collected at a rate of 25 samples per channel per second. One hundred six strain gages provided static loads measurement as shown. Structural dynamic data from the 21 accelerometers were measured at 400 samples per channel per second. All data were transmitted to a ground station and, during the limited-envelope phase, to Grumman in Calverton, NY, for analysis.[967] "Careful analyses of the instrumentation requirements, flight test points, and maneuvers are conducted to ensure that data of sufficient quality and quantity are acquired to validate the design, fabrication, and test process."[968] The detailed analysis and measurements provided extensive opportunities to validate predictive methods.
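To give a feel for the data volumes involved, the short sketch below totals the sample rates for the two sensor groups whose channel count and per-channel rate are both given above. The `ChannelGroup` type and its field names are hypothetical, introduced only for this illustration. The optical system and the strain gages are left out because the text gives only one of the two numbers for each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelGroup:
    """A set of identical sensor channels (hypothetical helper type)."""
    name: str
    channels: int
    rate_hz: float  # samples per channel per second

    @property
    def samples_per_second(self) -> float:
        return self.channels * self.rate_hz


# Only groups with both a channel count and a rate stated in the text.
groups = [
    ChannelGroup("pressure taps (wing + canard)", 156 + 17, 25.0),
    ChannelGroup("accelerometers", 21, 400.0),
]

for group in groups:
    print(f"{group.name}: {group.samples_per_second:.0f} samples/s")

total = sum(group.samples_per_second for group in groups)
print(f"combined: {total:.0f} samples/s")
```

On these figures the 21 accelerometers generate roughly twice as many samples per second as the 173 pressure taps, which reflects the much higher rate needed for structural dynamic measurement.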

The X-29 was used as a test case for NASA’s STARS structural analysis computer program, which had been upgraded with aeroservoelastic analysis capability. In spite of the exhaustive analysis done ahead of time, there were, as is often the case, several "discoveries" made during flight test. Handling qualities at high alpha were considerably better than predicted, leading to an expanded high-alpha control and maneuverability investigation in the later phases of the project. The X-29 No. 1 was initially limited to 21-degree angle of attack, but, during subsequent Phase II envelope expansion testing, its test pilots concluded it had "excellent control response to 45 deg. angle of attack and still had limited controllability at 67 deg. angle of attack."[969]

There were also at least two distinct types of aeroservoelastic phenomena encountered: buffet-induced modes and a coupling between the canard position feedback and the aircraft’s longitudinal aerodynamic and structural modes.[970] The modes mentioned involved frequencies between 11 and 27 hertz (Hz). Any aircraft with an automatic control system may experience interactions between the aircraft’s structural and aerodynamic modes and the control system. Typically, the aeroelastic frequencies are much higher than the characteristic frequencies of the motion of the aircraft as a whole. However, the 35-percent negative static margin of the X-29A was much larger than any unstable margin designed into an aircraft before or since. As a consequence, its divergence timescale was much more rapid, making it particularly challenging to tune the flight control system to respond quickly enough to aircraft motions without being excited by structural dynamic modes. Simply stated, the X-29A provided ample opportunity for aeroservoelastic phenomena to occur, and such were indeed observed, a contribution of the aircraft that went far beyond simply demonstrating the aerodynamic and maneuver qualities of an unstable forward-swept canard planform.[971]
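As a rough illustration of why the accelerometer channels were sampled so much faster than the others, the sketch below applies the Nyquist criterion (a signal can be resolved without aliasing only if its frequency is below half the sample rate) to the 11 to 27 Hz band, using the per-channel rates quoted earlier for the X-29 instrumentation. This is plain arithmetic on those figures. The source does not say which sensors actually captured the modes, and the function names are hypothetical.

```python
MODE_BAND_HZ = (11.0, 27.0)  # frequency range of the observed modes

# Per-channel sample rates quoted for the X-29 instrumentation.
SAMPLE_RATES_HZ = {
    "optical deflection": 13.0,
    "pressure taps": 25.0,
    "accelerometers": 400.0,
}


def nyquist_hz(sample_rate_hz):
    """Highest frequency representable without aliasing."""
    return sample_rate_hz / 2.0


def resolves(sample_rate_hz, freq_hz):
    """True if freq_hz lies strictly below the Nyquist frequency."""
    return freq_hz < nyquist_hz(sample_rate_hz)


def samples_per_cycle(sample_rate_hz, freq_hz):
    """Number of samples taken during one period of the signal."""
    return sample_rate_hz / freq_hz


low, high = MODE_BAND_HZ
for name, rate in SAMPLE_RATES_HZ.items():
    covered = resolves(rate, high)
    print(f"{name}: Nyquist {nyquist_hz(rate):.1f} Hz, "
          f"covers full band: {covered}")

accel_rate = SAMPLE_RATES_HZ["accelerometers"]
print(f"accelerometer samples per cycle at {high:.0f} Hz: "
      f"{samples_per_cycle(accel_rate, high):.1f}")
```

Of the three rates, only the 400-sample-per-second accelerometer channels have a Nyquist frequency above the whole band, with more than a dozen samples per cycle even at 27 Hz.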

In sum, each of these five advanced flight projects provides important lessons learned across many disciplines, particularly the validation of computer methods in structural design and/or analysis. The YF-12 project provided important correlation of analysis, ground-test data, and flight data for an aircraft under complex aerothermodynamic loading. The Rotor Aerodynamic Limits survey collected important data on helicopter rotors, a class of system often taken for granted yet one that represents an incredibly complex interaction of aerodynamic, aeroelastic, and inertial phenomena. The HiMAT, AD-1, and X-29 programs each advanced the state of the art in aeroelastic design as applied to nontraditional, indeed exotic, planforms featuring unstable design, composite structures, and advanced flight control concepts. Finally, the data required to validate structural analysis and design methods do not automatically come from the testing normally performed by aircraft developers and users. Special instrumentation and testing techniques are required. NASA has developed the facilities and the knowledge base needed for many kinds of special testing and is able to assign the required priority to such testing. As these cases show, NASA therefore plays a key role in this process of gaining knowledge about the behavior of aircraft in flight, evaluating predictive capabilities, and flowing that experience back to the people who design the aircraft.

John D. Anderson, Jr.

The history of the development of computational fluid dynamics is an exciting and provocative story. In the whole spectrum of the history of technology, CFD is still very young, but its importance today and in the future is of the first magnitude. This essay offers a capsule history of the development of theoretical fluid dynamics, tracing how the Navier-Stokes equations came about, discussing just what they are and what they mean, and examining their importance and what they have to do with the evolution of computational fluid dynamics. It then discusses what CFD means to NASA—and what NASA means to CFD. Of course, many other players have been active in CFD, in universities, other Government laboratories, and in industry, and some of their work will be noted here. But NASA has been the major engine that powered the rise of CFD for the solution of what were otherwise unsolvable problems in the fields of fluid dynamics and aerodynamics.