Why did flight 90 crash? At a technical level (and as we will see the technical never is purely technical) the NTSB concluded that the answer was twofold: not enough thrust and contaminated wings. Easily said, less easily demonstrated. The crash team mounted three basic arguments. First, from the cockpit voice recorder, investigators could extract and frequency analyze the background noise, noise that was demonstrably dominated by the rotation of the low-pressure compressor. This frequency, which corresponds to the number of blades passing per second (BPF), is closely related to the instrument panel gauge N1 (percentage of maximum rpm for the low pressure compressor) by the following formula:

BPF (blades per second) = (rotations per minute (rpm) x number of blades)/60

or

Percent max rpm (N1) = (60 x BPF x 100)/(maximum rpm x number of blades)

Applying this formula, the frequency analyzer showed that until 1600:55 – about six seconds before the crash – N1 remained between 80 and 84 percent of maximum. Normal N1 during standard takeoff thrust was about 90 percent. It appeared that only during these last seconds was the power pushed all the way. So why was N1 so low, so discordant with the relatively high setting of the EPR at 2.04? After all, we heard a moment ago on the CVR that the engines had been set at 2.04, maximum takeoff thrust. How could this be? The report then takes us back to the gauges.
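The conversion from a measured blade-passing frequency to an N1 percentage can be sketched in a few lines of Python. This is only an illustration of the two formulas above: the blade count and maximum rpm used here are hypothetical placeholders, not JT8D specifications, and the function names are my own.

```python
def blade_passing_frequency(rpm: float, blades: int) -> float:
    """Blades passing a fixed point per second: BPF = rpm * blades / 60."""
    return rpm * blades / 60.0


def n1_percent(bpf: float, blades: int, max_rpm: float) -> float:
    """Percent of maximum low-pressure compressor rpm implied by a measured BPF."""
    rpm = bpf * 60.0 / blades  # invert the BPF formula to recover rpm
    return 100.0 * rpm / max_rpm


# Hypothetical values for illustration only (NOT taken from the NTSB report).
BLADES = 30
MAX_RPM = 8000.0

# A compressor turning at 82 percent of maximum, then recovered from its BPF.
bpf = blade_passing_frequency(0.82 * MAX_RPM, BLADES)
print(round(bpf), round(n1_percent(bpf, BLADES, MAX_RPM), 1))
```

The two functions are inverses of one another, which is all the sound-spectrum argument requires: a peak frequency on the cockpit tape fixes the compressor speed once the blade count and maximum rpm are known.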

The primary instrument for takeoff thrust was the Engine Pressure Ratio gauge, the EPR. In the 737 this gauge was read off of an electronically divided signal in which the engine exhaust pressure given by probe Pt7 was divided by the inlet engine nose probe pressure given by Pt2. Normally the Pt2 probe was deiced by the anti-ice bleed air from the engine’s eighth stage compressor. If, however, ice were allowed to form in and block the probe Pt2, the EPR gauge would become completely unreliable. For with Pt2 frozen, pressure measurement took place at the vent (see figure 2) – and the pressure at that vent was significantly lower than the compressed air in the midst of the compressor, making

apparent EPR = Pt7/(Pt2-vent) > real EPR = Pt7/Pt2.

Since takeoff procedure was governed by throttling up to a fixed EPR reading of 2.04, a falsely high reading of the EPR meant that the “real” EPR could have been much less, and that meant less engine power.
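The mechanism can be made concrete with a small Python sketch. It assumes only what the text states: the gauge divides Pt7 by whatever pressure the Pt2 line senses, and a blocked probe senses a lower pressure than the true inlet value. The pressures below are in arbitrary units and are hypothetical, chosen to show the direction of the error rather than to reproduce any measurement.

```python
def epr(pt7: float, pt2: float) -> float:
    """Engine pressure ratio: exhaust pressure divided by inlet pressure."""
    return pt7 / pt2


def real_epr_at_indicated(indicated: float, sensed_over_true: float) -> float:
    """True EPR when the gauge shows `indicated` but the inlet line senses
    only `sensed_over_true` times the true inlet pressure.

    indicated = pt7 / (k * pt2)  implies  real = pt7 / pt2 = indicated * k
    """
    return indicated * sensed_over_true


# Hypothetical pressures in arbitrary units (illustration only).
PT7 = 170.0          # exhaust pressure
PT2_TRUE = 100.0     # true inlet pressure
PT2_VENT = 85.0      # lower pressure sensed when the probe is iced over

print(epr(PT7, PT2_TRUE))   # what an unblocked gauge would show
print(epr(PT7, PT2_VENT))   # what the blocked gauge shows: a higher number
print(real_epr_at_indicated(2.0, PT2_VENT / PT2_TRUE))
```

A crew that throttles up until the gauge reaches its target therefore stops short: the smaller the sensed inlet pressure, the further the real ratio lags behind the indicated one.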

To test the hypothesis of a frozen low pressure probe, the Boeing Company engineers took a similarly configured 737-200 aircraft with JT8D engines resembling those on the accident flight, and blocked with tape the Pt2 probe on the number one engine (simulating the probe being frozen shut). They left the number two engine probe unblocked (normal). The testers then set the Engine Pressure Ratio indicator for both engines at takeoff power (2.04), and observed the resulting readings on the other instruments for both “frozen” and “normal” cases. This experiment made it clear that the EPR reading for the blocked engine was deceptive – as soon as the tape was removed from Pt2, the EPR revealed not the 2.04 to which it had been set, but a mere 1.70. Strikingly, all the other number one engine gauges – N1, N2, EGT, and Fuel Flow – remained at the level expected for an EPR of 1.70. One thing was now clear: instead of two engines operating at an EPR of 2.04 or 14,500 lbs of thrust each, flight 90 had taken off, hobbled into a stall, and begun falling towards the 14th Street Bridge with two engines delivering an EPR of 1.70, a mere 10,750 lbs of thrust apiece. At that power, the plane was only marginally able to climb under perfect conditions. And with wings covered with ice and snow, flight 90 was not, on January 13, flying under otherwise perfect conditions.

Figure 2. Pt2 and Pt7. Source: NTSB-90, p. 25, figure 5.

Figure 3. Instruments for Normal/Blocked Pt2. Source: NTSB-90, p. 26, figure 6.
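The size of the shortfall follows from the figures just quoted. The Python sketch below does nothing more than arithmetic on those four numbers (2.04, 1.70, 14,500 lbs, 10,750 lbs); the variable names are mine, and the "implied pressure ratio" is an inference from the EPR definition rather than a value stated in the report.

```python
# Figures as quoted in the text from the Boeing engine test.
INDICATED_EPR = 2.04
ACTUAL_EPR = 1.70
THRUST_AT_INDICATED_LB = 14_500   # per engine, at a true EPR of 2.04
THRUST_AT_ACTUAL_LB = 10_750      # per engine, at a true EPR of 1.70
ENGINES = 2

shortfall_per_engine = THRUST_AT_INDICATED_LB - THRUST_AT_ACTUAL_LB
total_shortfall = ENGINES * shortfall_per_engine
fraction_available = THRUST_AT_ACTUAL_LB / THRUST_AT_INDICATED_LB

# Inference (not from the report): if the gauge divides Pt7 by the sensed
# inlet pressure, the sensed pressure was this fraction of the true Pt2.
implied_pressure_ratio = ACTUAL_EPR / INDICATED_EPR

print(f"shortfall per engine: {shortfall_per_engine} lb")
print(f"total shortfall:      {total_shortfall} lb")
print(f"thrust available:     {fraction_available:.1%} of expected")
print(f"implied sensed/true inlet pressure: {implied_pressure_ratio:.3f}")
```

On these numbers the aircraft had roughly three quarters of the thrust its crew believed they had set.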

Finally, in Boeing’s Flight Simulator Center in Renton, Washington, staff unfolded a third stage of inquiry into the power problem. With some custom programming the computer center designed visuals to reproduce the runway at Washington National Airport, the 14th Street Bridge and the railroad bridge. Pilots flying the simulator under “normal” (no-ice configuration) concurred that the simulation resembled the 737s they flew. With normalcy defined by this consensus, the simulator was then set to replicate the 737-200 with wing surface contamination – specifically the coefficient of lift was degraded and that of drag augmented. Now using the results of the engine test and noise spectrum analysis, engineers set the EPR at 1.70 instead of the usual takeoff value of 2.04. While alone the low power was not “fatal” and alone the altered lift and drag were not catastrophic, together the two delivered five flights that did reproduce the flight profile, timing and position of impact of the ill-starred flight 90. Under these flight conditions the last possible time in which recovery appeared possible by application of full power (full EPR = 2.23) was about 15 seconds after takeoff. Beyond that point, no addition of power rescued the plane.4

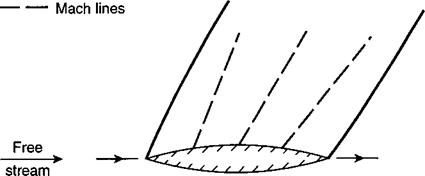

Up to now the story is as logically straightforward as it is humanly tragic: wing contamination and low thrust resulting from a power setting fixed on the basis of a frozen, malfunctioning gauge drove the 737 into a low-altitude stall. But from this point on in the story that limpid quality clouds. Causal lines radiated every which way like the wires of an old, discarded computer – some terminated, some crossed, some led to regulations, others to hardware; some to training, and others to individual or group psychology. At the same time, this report, like others, began to focus the causal inquiry upon an individual element, or even on an individual person. This dilemma between causal diffusion and causal localization lay at the heart of this and other inquiries. But let us return to the specifics.

The NTSB followed, inter alia, the deicing trucks. Why, the NTSB asked, was the left side of the plane treated without a final overspray of glycol while the right side received it? Why was the glycol mixture wrongly reckoned for the temperature? Why were the engine inlets not properly covered during the spraying? Typical of the ramified causal paths was the one that led to a non-regulation nozzle used by one of the trucks, such that its miscalibration left less glycol in the mixture (18%) than there should have been (30%).5 What does one conclude? That the replacement nozzle killed these men, women and children? That the purchase order clerk who bought it was responsible? That the absence of a “mix monitor” directly registering the glycol-to-water ratio was the seed of destruction?6 And the list of circumstances without which the accident would not have occurred goes on – including the possibility that wing de-icing could have been used on the ground, that better gate holding procedures would have kept flight 90 from waiting so long between deicing and takeoff, to name but two others.7

There is within the accident report’s expanding net of counterfactual conditionals a fundamental instability that, I believe, registers in the very conception of these accident investigations. For these reports in general – and this one in particular – systematically turn in two conflicting directions. On one side the reports identify a wide net of necessary causes of the crash, and there are arbitrarily many of these – after all the number of ways in which the accident might not have happened is legion. Human responsibility in such an account disperses over many individuals. On the other side, the reports zero in on sufficient, localizable causes, often the actions of one or two people, a bad part or faulty procedure. Out of the complex net of interactions considered in this particular accident, the condensation was dramatic: the report lodged immediate, local responsibility squarely with the captain.

Fundamentally, there were two charges: that the captain did not reject the takeoff when the first officer pointed out the instrument anomalies, and that, once in the air, the captain did not demand a full-throttle response to the impending stall. Consider the “rejection” issue first. Here it is worth distinguishing between dispersed and individuated causal agency (causal instability), and individual and multiple responsibility (agency instability). There is also a third instability that enters, this one rooted between the view that flight competence stems from craft knowledge and the view that it comes from procedural knowledge (protocol instability).

The NTSB began its discussion of the captain’s decision not to reject by citing the Air Florida Training and Operations Manual:

Under adverse conditions on takeoff, recognition of an engine failure may be difficult. Therefore, close reliable crew coordination is necessary for early recognition.

The captain ALONE makes the decision to “REJECT.”

On the B-737, the engine instruments must be closely monitored by the pilot not flying. The pilot flying should also monitor the engine instruments within his capabilities. Any crewmember will call out any indication of engine problems affecting flight safety. The callout will be the malfunction, e. g., “ENGINE FAILURE,” “ENGINE FIRE,” and appropriate engine number.

The decision is still the captain’s, but he must rely heavily on the first officer.

The initial portion of each takeoff should be performed as if an engine failure were to occur.8

The NTSB report used this training manual excerpt to show that despite the fact that the co-pilot was the “pilot flying,” responsibility for rejection lay squarely and unambiguously with the captain. But intriguingly, this document also pointed in a different direction: that rejection was discussed in the training procedure uniquely in terms of the failure of a single engine. Since engine failure typically made itself known through differences between the two engines’ performance instruments, protocol directed the pilot’s attention to a comparison (cross-check) between the number one and number two engines, and here the two were reading exactly the same way. Now it is true that the NTSB investigators later noted that the reliance on differences could have been part of the problem.9 In the context of training procedures that stressed the cross-check, the absence of a difference between the left and right engines strikes me not as incidental, but rather as central. In particular it may help explain why the first officer saw something as wrong – but not something that fell into the class of expectations. He did not see a set of instruments that protocol suggested would reflect the alternatives “ENGINE FAILURE” or “ENGINE FIRE.”

But even if the first officer or captain unambiguously knew that, say, N1 was low for a thrust setting of the EPR readout of 2.04, the rejection process itself was riddled with problems. Principally, it makes no sense. The airspeed V1 functioned as the speed below which it was supposed to be safe to decelerate to a stop and above which it was safe to proceed to takeoff even with an engine failure. But this speed was so racked with confusion that it is worth discussing. Neil Van Sickle gives a typical definition of V1 in his Modern Airmanship, where he writes that V1 is “The speed at which… should one engine fail, the distance required to complete the takeoff exactly equals the distance required to stop.”10 So before V1, if the engine failed, you could stop in less distance than you could get off the ground. Other sources defined V1 as the speed at which air would pass the rudder rapidly enough for rudder authority to keep a plane with a dead engine from spinning. Whatever its basis, as the Air Florida Flight Operations Manual for the Boeing 737 made clear, pilots were to reject a takeoff if the engine failed before V1; afterwards, presumably, the takeoff ought to be continued. The problem is that, by its use, the speed V1 had come to serve as a marker for the crucial spatial point where the speed of the plane and distance to go made it possible to stop (barely) before overrunning the runway. In the supporting documents of the NTSB report (called the Docket) one finds in the Operations Group “factual report” the following hybrid definition of V1:

[V1 is] the speed at which, if an engine failure occurs, the distance to continue the takeoff to a height of 35 feet will not exceed the usable takeoff distance; or the distance to bring the airplane to a full stop will not exceed the acceleration-stop distance available. V1 must not be greater than the rotation speed, Vr [rejecting after rotation would be enormously dangerous], or less than the ground minimum control speed Vmcg [rejecting before the plane achieves sufficient rudder authority to become controllable would be suicidal].11

Obviously, V1 cannot possibly do the work demanded of it: it is the wrong parameter to be measuring. Suppose the plane accelerated at a slow, constant rate from the threshold to the overrun area, achieving V1 as it began to cross the far end of the runway. That would, by the book, mean it could safely take off where in reality it would be within a couple of seconds of collapsing into a fuel-soaked fire. The question should be whether V1 has been reached by a certain point on the runway where a maximum stop effort will halt the plane before it runs out of space (a point known elsewhere in the lore as the acceleration-stop distance). If one is going to combine the acceleration-stop distance with the demand that the plane have rudder authority and that it be possible to continue in the space left to an engine-out takeoff, then one way or another, the speed V1 must be achieved at or before a fixed point on the runway. No such procedure existed.
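The point that a speed alone cannot settle the go/no-go question can be illustrated with elementary kinematics. The Python sketch below is a deliberately crude model of my own, not anything found in the NTSB report or the airline manuals: it assumes constant acceleration and constant braking deceleration, ignores reaction time, wind, and reverse thrust, and uses hypothetical numbers throughout.

```python
def distance_to_reach(speed: float, accel: float) -> float:
    """Runway used (m) to accelerate from rest to `speed` (m/s) at constant `accel`."""
    return speed ** 2 / (2.0 * accel)


def distance_to_stop(speed: float, decel: float) -> float:
    """Runway used (m) to brake from `speed` (m/s) to rest at constant `decel`."""
    return speed ** 2 / (2.0 * decel)


def can_reject_at_v1(v1: float, accel: float, decel: float, runway: float) -> bool:
    """True if a reject begun exactly at V1 still stops on the runway."""
    return distance_to_reach(v1, accel) + distance_to_stop(v1, decel) <= runway


# Hypothetical values (illustration only; not flight 90 data).
V1 = 70.0        # m/s
DECEL = 3.0      # m/s^2 braking
RUNWAY = 2100.0  # m

for accel in (2.0, 1.5):   # normal versus degraded acceleration, m/s^2
    used = distance_to_reach(V1, accel)
    print(accel, round(used), can_reject_at_v1(V1, accel, DECEL, RUNWAY))
```

In this toy model the same V1 is safe to reject from at the higher acceleration and unsafe at the lower one, because the slower airplane has consumed more runway before reaching it. The check that matters is on position at V1, not on V1 itself.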

Sadly, as the NTSB admitted, it was technically unfeasible to marry the very precise inertial navigation system (fixing distance) to a simple measurement of time elapsed since the start of acceleration. And planting distance-to-go markers on the runway was dismissed because of the “fear of increasing exposure to unnecessary high-speed aborts and subsequent overruns… [that might cause] more accidents than they might prevent.”12 With such signs the rolling protocol would presumably demand that the pilots reject any takeoff where V1 was reached after a certain point on the runway. But given the combination of technical limitations and cost-benefit decisions about markers, it was, in fact, impossible to know in a protocol-following way whether V1 had been achieved in time for a safe rejection. This meant that the procedure of rejection by V1 turns out to be completely unreliable in just that case where the airplane is accelerating at a less than normal rate. And it is exactly such a low-acceleration case that we are considering in flight 90. What is demanded of a pilot – a pilot on any flight using V1 as a go-no-go speed – is a judgment, a protocol-defying judgment, that V1 has been reached “early enough” (determined without an instrument or exterior marking) in the takeoff roll and without a significant anomaly. (Given the manifest and recognized dangers of aborting a high-speed roll, “significant” here obviously carries much weight; Air Florida, for example, forbids its pilots from rejecting a takeoff solely on the basis of the illumination of the Master Caution light.)13

The NTSB report “knows” that there is a problem with the V1 rejection criterion, though it knows it in an unstable way:

It is not necessary that a crew completely analyze a problem before rejecting a takeoff on the takeoff roll. An observation that something is not right is sufficient reason to reject a takeoff without further analysis… The Safety Board concludes that there was sufficient doubt about instrument readings early in the takeoff roll to cause the captain to reject the takeoff while the aircraft was still at relatively low speeds; that the doubt was clearly expressed by the first officer; and that the failure of the captain to respond and reject the takeoff was a direct cause of the accident.14

Indeed, after a careful engineering analysis involving speed, reverse thrust, the runway surface, and braking power, the NTSB determined the pilot could have aborted even with a frictional coefficient of 0.1 (sheet ice) – the flight 90 crew should not have had trouble braking to a stop from a speed of 120 knots on the takeoff roll. “Therefore, the Safety Board believes that the runway condition should not have been a factor in any decision to reject the takeoff when the instrument anomaly was noted.”15

What does this mean? What is this concept of agency that takes the theoretical engineering result computed months later and uses it to say “therefore… should not have been a factor”? Is it that the decision that runway condition “should not have been a factor” would have been apparent to a Laplacian computer, an ideal pilot able to compute friction coefficients by sight and from it deceleration distance using weight, wind, braking power, and available reverse thrust? Robert Buck, a highly experienced pilot – a 747 captain, who was given the Air Medal by President Truman – wrote about the NTSB report on flight 90: “How was a pilot to know that [he could have stopped]? No way from training, no way was there any runway coefficient information given the pilot; a typical NTSB after-the-fact, pedantic, unrealistic piece of laboratory-developed information.”16

Once the flight was airborne with the stickshaker vibrating and the stall warning alarm blaring, the NTSB had a different criticism: the pilot did not ram the throttles into a full open position. Here the report has an interesting comment. “The Board believes that the flightcrew hesitated in adding thrust because of the concern about exceeding normal engine limitations which is ingrained through flightcrew training programs.” If power is raised to make the exhaust temperature rise even momentarily above a certain level, then, at bare minimum, the engine has to be completely disassembled and parts replaced. Damage can easily cost hundreds of thousands of dollars, and it is no surprise that firewalling a throttle is an action no trained pilot executes easily. But this line of reasoning can be combined with arguments elsewhere in the report. If the captain believed (as the NTSB argues) that the power delivered was normal takeoff thrust, he might well have seen the stall warning as the result of an over-rotation curable by no more than some forward pressure on the yoke. By the time it became clear that the fast rate of pitch and high angle of attack were not easily controllable (737s notoriously pitch up with contaminated wings), he did apply full power – but given the delay in jet engines between power command and delivery, it was too late. The NTSB recommended changes in “indoctrination” to allow for modification if loss of aircraft is the alternative.17

In the end, the NTSB concluded their analysis with the following statement of probable cause, the bottom line:

The National Transportation Safety Board determines that the probable cause of this accident was the flightcrew’s failure to use engine anti-ice during ground operation and takeoff, their decision to take off with snow/ice on the airfoil surfaces of the aircraft, and the captain’s failure to reject the takeoff during the early stage when his attention was called to anomalous engine instrument readings.18

But there was one more implied step to the account. From an erroneous gauge reading and icy wing surfaces, the Board had driven their “probable cause” back to a localizable faulty human decision. Now they began, tentatively, to put that human decision itself under the microscope. Causal diffusion shifted to causal localization.