Trans-Atlantic influences have always shaped American technology, beginning with the earliest European voyages to the New World and the first permanent European settlements in America during the seventeenth century. Traditional symbols of the American frontier, like the ax, the log cabin, and the Kentucky rifle, all had European origins. Many American engineers learned their trade as apprentices to immigrants such as the Englishman Benjamin Latrobe (1764-1820). Born near Leeds, he was educated in Britain and Germany before emigrating to Virginia in 1796. Over the next quarter century, he engineered numerous major buildings and public waterworks and influenced the design of the Capitol Building of the U. S. Congress in Washington, D. C. Latrobe’s career touched the lives of innumerable designers, engineers, and construction companies, and similar instances of European influence continued through the twentieth century.

Americans may be vaguely aware of this scientific and technological legacy, but they also tend to regard certain modern technological phenomena as distinctively American: Henry Ford invented the automobile, the Wright brothers invented the airplane, and so on. While many aerospace engineers and historians have been aware of international influences in specific instances, the collective impact seems to have been far more pervasive than generally assumed.1 That international impact on the history of American flight is the focus of this paper. Three major themes of international influence stand out: the influence of topical literature, the immigration of aerospace professionals, and the globalization of the aerospace industry since 1945. In this essay, I focus on the first two: topical literature and aviation professionals.

In the United States, the Wright brothers’ powered flight on 17 December 1903 is generally regarded as a uniquely American triumph, a demonstration of traditional Yankee ingenuity. True, the Wrights made several significant contributions in control systems, airfoil theory, and propeller design. But they started by writing to the Smithsonian Institution, in Washington, D. C., for available literature on human flight. Their wind tunnel, for instance, could be traced to work published by the Englishman Francis Wenham in 1871. Their aerodynamic theories came from a variety of sources, including the well-known British engineer John Smeaton. The Wrights learned a great deal from the published results of Otto Lilienthal’s gliding experiments in Germany, and they studied data from other pioneering fliers like Percy Pilcher in Britain. Although the Wrights developed their own engine in 1903, the internal combustion engine itself was largely the result of late nineteenth-century refinements by Gottlieb Daimler and Karl Benz in Germany. The Wrights’ aerial achievement above a remote beach at Kitty Hawk, North Carolina, certainly owed much to non-American sources.2

P. Galison and A. Roland (eds.), Atmospheric Flight in the Twentieth Century, 207-222 © 2000 Kluwer Academic Publishers.

In the years before World War I, aerial meets across the country and demonstrations at annual state and county fairs featured daring pilots from Europe as well as the U. S. These events helped the nation become “air-minded” and often created a market for airplanes. For several years, one of the best-selling aircraft in America was the French Bleriot, offered either as a finished airplane or in kit form. The Bleriot planes started dozens of Americans on aeronautical careers and became a recognized symbol of aviation progress on both sides of the Atlantic. By the time World War I broke out in Europe, America had an active aviation community, although it was recognized that the U. S. had fallen behind the Europeans in aeronautical research. The Europeans took a different view of aviation as a technological phenomenon, and their governments, as well as industrial firms, tended to be more supportive of what might be called “applied research.” As early as 1909, the internationally known British physicist Lord Rayleigh was appointed head of the British Advisory Committee for Aeronautics; in Germany, Ludwig Prandtl and others were beginning the sort of investigations that soon made the University of Gottingen a center of theoretical aerodynamics. Additional programs were soon under way in France and elsewhere on the Continent. Comparable progress in the United States remained slow. In fact, until 1915, most American-designed aircraft used airfoil sections from standardized tables issued by Britain’s Royal Aircraft Factory and by the French engineer Alexander Eiffel, who pursued a second career as an aerodynamicist in the years after completing his engineering masterpiece in Paris, the Eiffel Tower.

Proponents of an American research organization not only turned consistently to the example of the British Advisory Committee but also borrowed some of the language of its charter in framing a similar document for the United States. This early example of the impact of European literature on American efforts had grown out of first-hand observation and comparison on the eve of World War I. In 1914, acutely aware of European progress, Charles D. Walcott, secretary of the Smithsonian Institution, found funds to send two Americans on a fact-finding tour overseas. Dr. Albert F. Zahm taught physics and experimented in aeronautics at Catholic University in Washington, D. C.; Dr. Jerome C. Hunsaker, a graduate of the Massachusetts Institute of Technology, was developing a curriculum in aeronautical engineering at MIT. Their report, issued in 1914, emphasized the galling disparity between European progress and American inertia. The visit also established European contacts that later proved valuable to the NACA.3

The outbreak of war in Europe in 1914 served as a catalyst for the creation of an American agency. The use of German dirigibles for long-range bombing of British cities and the rapid evolution of airplanes for reconnaissance and pursuit underscored the shortcomings of American aviation. Against this background, Charles D. Walcott pushed for legislation providing for aeronautical research that would allow the United States to match progress overseas. Walcott received support from Progressive-era leaders, who viewed government research agencies as consistent with Progressive ideals such as scientific inquiry and technological progress. By the spring of 1915, the drive for an aeronautical research organization had succeeded, and the National Advisory Committee for Aeronautics (NACA) was formally authorized.

The tour of Europe by Zahm and Hunsaker not only hastened the origins of the NACA but also influenced the teaching of aeronautics in America, since both men learned much from their visits to European research centers. In the process, Hunsaker became familiar with the aerodynamic experiments of Alexander Eiffel, the renowned designer and builder of the Eiffel Tower in Paris (1889). In the early twentieth century, Eiffel immersed himself in aeronautical experiments, including the construction of a wind tunnel of advanced design. Because reliable sources on aerodynamics were so scarce, Hunsaker and his wife translated Eiffel’s book on the subject, and it became a key text for Hunsaker’s pioneering aeronautics courses at MIT.

After America entered World War I in 1917, a lack of adequate fighter planes forced combat pilots such as Eddie Rickenbacker to rely on French and British equipment. The only U. S.-built plane to see extensive combat in the war, the de Havilland DH-4, was a direct copy of the British design. The DH-4 also served as the backbone of the pioneering U. S. Air Mail service during the 1920s.4

European influences also shaped other prewar trends in America. At the invitation of Glenn Curtiss, Douglas Thomas left England in 1914 to join the young Curtiss airplane company. Thomas, an experienced engineer with the famed British firm of Sopwith, became a leading designer at Curtiss, where he played a central role in laying out the flying boat America and a trainer that evolved into the famous JN-4 “Jenny” series of World War I. Like the DH-4, the Jennies emerged as a major symbol of American aviation development in the postwar era. Thomas subsequently joined another company, Thomas-Morse, and helped design the Thomas-Morse Scout, a significant biplane fighter. The Thomas-Morse organization had begun with two British brothers, William and Oliver Thomas (no relation to Douglas), who graduated from London’s Central Technical College in the early 1900s. They migrated to America, worked for Curtiss and various other engineering firms, and finally established themselves as the aeronautical engineering firm of Thomas Brothers in Bath, New York. World War I brought a flood of orders for planes and engines. Needing capital and room for expansion, Thomas Brothers merged with the Morse Chain Company in Ithaca, New York. As the Thomas-Morse Aircraft Corporation, the company employed more than 1,200 workers and became one of the leading aviation manufacturers of the World War I era. In the 1920s, Thomas-Morse became part of Consolidated Aircraft, which eventually evolved into the aerospace giant General Dynamics. About the time of the merger, Oliver Thomas emigrated to Argentina to become a rancher. William remained in America and became fascinated with the pastime of building model airplanes. During the 1930s, he became prominent in promoting the hobby across the nation and was eventually named president of the Academy of Model Aeronautics. In the decades since, the Academy has offered the creative framework that launched the careers of thousands of aeronautical engineers.5

In the immediate postwar era, America again drew on European expertise to develop the young National Advisory Committee for Aeronautics. Lacking firsthand experience, NACA planners built a conventional, open-circuit tunnel based on a design proven at the British National Physical Laboratory. At the University of Gottingen in Germany, the famous physicist Ludwig Prandtl and his staff had already built a closed-circuit, return-flow tunnel in 1908. Among other advantages, the closed-circuit design required less power, provided a more uniform airflow, and permitted pressurization as well as humidity control. The NACA engineers at Langley knew how to scale up data from the small models tested in their sea-level, open-circuit tunnels, but they soon realized that their estimates were often wide of the mark. For significant research, the NACA experimenters needed facilities like the tunnels at Gottingen. They also needed someone with experience in designing and operating these more exotic tunnels. Both requirements were met in the person of Max Munk.

Munk had been one of Prandtl’s brightest lights at Gottingen. During World War I, many of Munk’s experiments in Germany were immediately classified as military secrets (though they usually appeared in England, fully translated, within days of his completing them). After the war, Prandtl contacted his prewar acquaintance Jerome Hunsaker with the news that Munk wanted to settle in America. Officially, the United States was still at war with Germany, so for Munk to enter the country in 1920, President Woodrow Wilson had to sign two special orders: one countermanding Munk’s status as an enemy alien, and another permitting him to hold a government job. In the spring of 1921, construction of a pressurized, or variable-density, tunnel began at Langley. Under Munk’s supervision, the tunnel began operating in 1922 and proved highly successful in airfoil testing, contributing to the NACA’s growing reputation as a world center for airfoil research. Munk’s tenure at the NACA was a stormy one. He was brilliant, erratic, and autocratic. After many confrontations with various bureaucrats and Langley engineers, Munk resigned from the NACA in 1929. But his style of imaginative research and sophisticated wind tunnel experimentation was a significant legacy to the young agency.6

The American aviation community continued to keep a close eye on European developments. While serving as chief physicist at NACA Langley, Edward Pearson Warner was sent to Europe in 1920 on an extensive tour designed to gain insight into research and development trends at Europe’s leading aviation centers. Soon after, the NACA established a permanent observation post in Paris. Headed by John J. Ide, this Continental office maintained a steady flow of information to American civil and military authorities. It remained an important operation until World War II forced its closure.

Back in the U. S., the NACA continued to be influenced both by Europeans on its staff and by European theory imported for its research projects. One of the principal figures to emerge in this era was Theodore Theodorsen, a Norwegian emigre who became chief physicist at Langley in 1929. After graduating from the Technological Institute of Norway in 1921, he taught there and came to the U. S. three years later. He was an instructor at Johns Hopkins from 1924 to 1929, and he received his Ph. D. there. Steeped in mathematical research, he was a strong proponent of studying airfoils through theoretical analysis. In this respect, he proved a useful counterpart to experimental investigators like the American Eastman Jacobs, who was pushing for a new variable-density tunnel in the 1930s. Their exchanges helped shape the research that led to laminar flow wings. While the NACA deserves credit for its eventual breakthrough in laminar flow wings, the resolution of the issue illustrates a fascinating degree of universality in aeronautical research. The NACA, born in response to European progress in aeronautics, benefited from employing Europeans like Munk and Theodorsen and profited from continuous interaction with the European community, or at least from its attempts to stay abreast of that community.

In 1935, Jacobs traveled to Rome as the NACA representative to the Fifth Volta Congress on High-Speed Aeronautics. During the trip, he visited several European research facilities, comparing equipment and discussing the newest theoretical concepts. The United States, he concluded, held a leading position, but he warned that “we certainly cannot keep it long if we rest on our laurels.” On his way home, Jacobs stopped at Cambridge University in Great Britain for long visits with colleagues who were investigating the peculiarities of high-speed flow, including statistical theories of turbulence. These informal exchanges strongly influenced Jacobs’ approach to the theory of laminar flow, leading him to focus on the pressure distribution over the airfoil. Working out the details of the idea took three years and engaged the energies of many individuals, including several on Theodorsen’s staff, even though Theodorsen himself remained skeptical.

Once the theory appeared sound, Jacobs had a wind tunnel model of the wing rushed through the Langley shop and tested it in a new icing tunnel that could also be used for some low-turbulence testing. The new airfoil showed a fifty percent decrease in drag. Jacobs was elated, not only because the project incorporated complex theoretical analysis, but also because the empirical tests justified a new variable-density tunnel. The NACA’s role in laminar flow research should not be diminished, but the British influence was an essential catalyst in the story.7

Advances in aeronautical theory represented only one dimension of aeronautical progress in America; the European legacy embraced a variety of practical domains with a lasting influence on the American scene. During World War I, the Dutch designer Anthony Fokker gave his name to a series of German fighters that built a formidable reputation. He re-established his firm in Holland after the war, then moved to America in 1922, first as a consultant and then as head of his own manufacturing company. The cachet of the Fokker name helped make his big tri-motor airliners successful and materially promoted airline travel in the United States. The welded, tubular-steel fuselage framework and cantilevered wings of Fokker transports provided a valuable example of design and construction during the pre-World War II era. Subsequent progress in modern metal aircraft reflected a marked heritage from Germany in the person of Adolf Rohrbach, a pioneer in the art of stressed-skin construction. Rohrbach delivered some highly publicized lectures in the United States during 1926 and published an influential article on the subject in the Society of Automotive Engineers Journal in 1927. Then there was Samuel Heron of Britain. Before settling in the United States in 1921, he had worked for Rolls-Royce and other leading British engine manufacturers. In addition to his work at the U. S. Air Corps technical center at McCook Field, Heron worked for Wright Aeronautical, Ethyl Corporation, and other American companies. Heron proposed the sodium-cooled valve, a key component of high-powered radial engines that helped pave the way for the use of potent, high-octane fuels in modern aircraft powerplants. Charles Lindbergh’s nonstop flight across the Atlantic in 1927, in a plane powered by a Wright Aeronautical engine, owed a debt to several areas of Heron’s work on aircraft engines and fuels.8

A variety of additional practical issues needed resolution, and Europeans played a key role here as well. A catalyst in this respect was the Daniel Guggenheim Fund for the Promotion of Aeronautics. America lacked an aeronautical infrastructure: commercial aviation in particular needed reliable daily weather forecasts, a foundation of legal guidelines, and a nationwide educational system for training aeronautical engineers and scientists. The Guggenheim Fund helped bridge these gaps, relying heavily on imported know-how and experts from overseas. Between 1926 and 1930, this private philanthropy supported a variety of programs that profoundly influenced the growth of American aviation. Because airlines needed accurate weather forecasts along their routes, the Guggenheim Fund sponsored several research efforts in meteorology and founded a department of meteorology at MIT. The expert who directed these Guggenheim efforts was Carl-Gustaf Rossby, born in Stockholm and educated in Sweden, Norway, and Germany. After building the meteorology department at MIT, he went on to Chicago in 1941. Through his own research and his influence on a new generation of students, Rossby laid the foundations for aviation weather forecasting in the United States. The Guggenheims also promoted professional studies in aviation law, establishing the Air Law Institute at Northwestern University. The American institute benefited greatly from an exchange of professors with the Air Law Institute of Konigsberg in Germany.9

As aviation in the U. S. progressed after World War I, the need for larger numbers of trained engineers became evident. Two of the pioneering American universities with major aeronautical curricula had emigres as principal professors. At the University of Michigan it was Felix Pawlowski, trained in Germany and France before the war. In 1913, he began offering some of the first aeronautical engineering courses in America; he also worked for the U. S. Army War Department and became head of Michigan’s Aeronautical Engineering Department in the postwar era. Pawlowski maintained close contacts with the aeronautical community overseas, and Michigan’s curriculum was continually enlivened by visiting European experts who fascinated students with discussions of advanced theoretical studies and research problems. Moreover, aeronautical engineering curricula at universities across America relied heavily on British textbooks in advanced aerodynamics, structures, and related aviation topics. Other schools besides Michigan also employed European professors.

At New York University, it was Alexander Klemin, who graduated from the University of London in 1909 and came to America in 1914. He took an MS degree at MIT and succeeded Hunsaker as director of MIT’s Aeronautics Department. In 1925, he became Guggenheim Professor of Aeronautics at New York University, where he enjoyed a long and distinguished career. In the 1930s, his interest in rotary-wing flight made NYU a center of research on helicopters and autogiros. Klemin’s success in acquiring sophisticated wind tunnel facilities also made NYU a productive testing center for major northeastern manufacturers like Grumman, Seversky, Vought, and Sikorsky. Moreover, Klemin became a leading figure in the institutionalization of aeronautics in America. He helped create one of the early industry periodicals, Aviation, which gained strength through successive decades and eventually became Aviation Week and Space Technology. In 1933, Klemin joined Jerome Hunsaker, Edward P. Warner, and others who wanted a professional engineering organization separate from the Society of Automotive Engineers, the professional home of most of aviation’s practicing engineers. Like the founders of the NACA, the founders of the new American organization looked to Europe for precedents, using the Royal Aeronautical Society as the model for the Institute of the Aeronautical Sciences (IAS). In due time, the IAS evolved into the American Institute of Aeronautics and Astronautics, the premier aviation and aerospace organization in the United States.

These foreign influences received little or no acknowledgment in aviation circles, although the NACA cowling was one notable exception. Details of this important component, which enclosed radial engines in a way that notably reduced drag and enhanced cooling, appeared in an NACA technical note in 1928. The NACA configuration unquestionably resolved many aerodynamic and practical problems. Nonetheless, the agency never took out a patent on the cowling, ostensibly because it was unwilling to joust with British experts over the relative merits of the “Townend ring” (named for British researcher Hubert Townend), which predated the NACA design. As one veteran engineer, H. J. E. Reid, observed in 1931, “It is regrettable that the [Langley] Laboratory, in its report on cowlings, did not mention the work of Townend and give him credit.” 10

But America still lagged in theoretical aerodynamics. In 1929, the Guggenheim Fund played a crucial role in luring the brilliant Gottingen-trained scientist Theodore von Karman to the United States. Von Karman joined the faculty of the California Institute of Technology and helped transform the science of aeronautics, especially in high-speed research. Within the decade, not only did the Institute’s research projects enrich aerodynamic theory, but its graduates also began to dominate the discipline in colleges and universities across the nation. During and after World War II, von Karman became a central figure in American jet propulsion and rocket research.11

The largest foreign group in American aeronautics was Russian: emigres who left their country in the wake of the Revolution of 1917 and the end of the Romanov dynasty. They held a variety of positions in academia and industry and left an enduring legacy of progress. For example, Boris Alexander Bakhmeteff became Professor of Civil Engineering at Columbia University, where his work in hydraulics made him a recognized aviation consultant. He was born in Tbilisi in 1880, educated in Russia and Switzerland, and taught hydraulics and theoretical mechanics at the Polytechnic Institute at St. Petersburg before coming to America in 1917. Alexander Nikolsky was born in Kursk in 1902 and was educated at the Russian Naval Academy, 1919-1921. He did advanced studies in Paris in the mid-1920s and came to the U. S. in 1928. After further graduate study at MIT, he became a design chief at the Vought-Sikorsky Division of the United Aircraft Corporation. Nicholas Alexander, born in Russia in 1886, became a professor of aeronautical engineering at Rhode Island State College after World War I. Many others contributed to American progress as engineers and educators.12

Two Russian emigres became major figures in the American aviation manufacturing industry. Alexander Prokofieff de Seversky was born in Tbilisi in 1894. After graduating from the Imperial Naval Academy in 1914, he had begun post-graduate studies at the Military School of Aeronautics when World War I broke out. By the time of the Revolution in 1917, he had been shot down and had lost a leg, but he had returned to duty and shot down 13 German planes. He came to the United States in 1918 as part of a Russian air mission but decided to remain, becoming a test pilot for the U. S. Army Air Service. His training eventually led to a post as consulting engineer, and he spent several years perfecting an improved bombsight with automatic adjustments. His bombsight patents earned the money to start the Seversky Aero Corporation (later the Republic Aviation Corporation). Over the years, de Seversky invented several items: an improved wing flap, improved procedures for structural fabrication, and turbo-superchargers for air-cooled engines. Seversky’s company designed and built the P-35 fighter in the 1930s, a plane with retractable landing gear and other features that represented an important transition to modern fighters in the U. S. Army Air Corps. The chief engineer for the P-35, Alexander Kartveli, a fellow emigre from Russia, played a critical role in the P-35 project as well as in its more famous successor, the legendary Republic P-47 Thunderbolt of World War II. Finally, de Seversky’s books and articles on aviation and aerial warfare were widely read in America and helped the country come to terms with the realities of the new air age ushered in by World War II.13

Without a doubt, the best-known Russian figure was Igor Sikorsky, born in Kiev in 1889. After his education at the Naval Academy of St. Petersburg, he took courses at the Polytechnic Institute of Kiev in 1907-1908. During those years, he began designing and building aircraft, work that led to the first four-engined airplanes to fly.

After immigrating to the U. S. in 1919, Sikorsky developed a number of successful planes, and his company became a division of United Aircraft Corporation in 1929. A series of Sikorsky flying boats during the 1930s established important structural advances, set records, and helped the U. S. to establish pioneering overwater routes to Latin America and to the Orient. Sikorsky also spent considerable effort in perfecting helicopters, and his 1939 machine set the pattern for subsequent helicopter progress in America. As one knowledgeable engineer-historian wrote later, “Few men in aviation can match the span of personal participation and contribution that typify Igor Sikorsky’s active professional life.”14

Less well known, but significant nevertheless, were the contributions of another Russian on Sikorsky’s staff, who started the United States on the road toward swept-wing aircraft in the postwar era. Emigres from other European countries also helped shape America’s research in high-speed aerodynamics and transonic analysis. Considerable influence emanated from Germany, a traditional leader in theoretical studies in the 1930s and through World War II. In many instances, personnel at the NACA’s Langley laboratory had taken preliminary steps toward advanced work, but data gleaned later from captured German documents often served as catalysts in achieving postwar results. By the end of the war, American analysts were already unnerved by the success of Germany’s jet combat aircraft and missile technology, as well as by variable-sweep aircraft prototypes and seemingly bizarre advanced studies. Recalling these “shocking developments,” NACA veteran John Becker also noted that the NACA’s prestige with industry, Congress, and the scientific community had sunk to a new low.

Like several other chapters in the history of high-speed flight, this one began in Europe, where an international conference on high-speed flight, the Volta Congress, met in Rome in October 1935. Among the participants was Adolf Busemann, a young German aeronautical engineer from Lubeck, who proposed an airplane with swept wings. In the paper he presented at the Rome conference, Busemann predicted that his “arrow wing” would have less drag than straight wings exposed to shock waves at supersonic speeds. There was polite discussion of Busemann’s paper, but little else, since the propeller-driven aircraft of the 1930s lacked the performance to merit serious consideration of such a radical design. Within a decade, the evolution of the turbojet dramatically changed the picture. In 1942, designers at the Messerschmitt firm, builders of the remarkable ME-262 jet fighter, realized the potential of swept-wing aircraft and studied Busemann’s paper more intently. Following promising wind tunnel tests, Messerschmitt had a swept-wing research plane under development as the war ended.

The American chapter of the swept-wing story originated with Michael Gluhareff, a graduate of the Imperial Military Engineering College in Russia during World War I. He fled the Russian Revolution and gained aeronautical engineering experience in Scandinavia. Gluhareff arrived in the United States in 1924 and joined the company of his compatriot Igor Sikorsky. By 1935, he was chief of design for Sikorsky Aircraft, and he eventually became a major figure in developing the first practical helicopter.

In the meantime, Gluhareff became fascinated by the possibilities of low-aspect-ratio tailless aircraft and built a series of flying models in the late 1930s. In a 1941 memo to Sikorsky, he described a possible pursuit-interceptor with a delta-shaped wing. Eventually a wind tunnel model was built, and initial tests were encouraging. Wartime exigencies sidelined Gluhareff’s “Dart” configuration until 1944, when a balsa model of the Dart, along with some data, wound up on the desk of Robert T. Jones, a Langley aerodynamicist. Studying Gluhareff’s model, Jones soon realized that the lift and drag figures for the Dart were based on outmoded calculations for wings of high aspect ratio. Using more recent theory for low-aspect-ratio shapes, backed by some theoretical work published earlier by Max Munk, Jones suddenly had a breakthrough. He made his initial reports to NACA directors in early March 1945. Within weeks, advancing American armies captured German scientists and test data that corroborated Jones’ conclusions. These collective legacies, together with wartime studies of supersonic wind tunnels by Antonio Ferri of Italy, all leavened successful postwar progress in high-speed research and aviation technology.15

World War II brought additional forms of international influence on American progress in aviation and air power. One example involved the famous Norden bombsight. Although it was touted before and after the war as a top-secret, crucial American weapon, its originator and namesake, Carl Norden, was a Dutchman born in the Dutch East Indies (1880), educated in Germany and Switzerland, an emigre to America in 1904, an entrepreneur during the 1920s and 1930s, and a well-to-do retiree in Zurich, Switzerland, where he died in 1965 as a non-U. S. citizen who still proudly held his Dutch citizenship. During the war, thousands of state-of-the-art, high-precision aeronautical instruments in American aircraft came from the production facilities of the Kollsman Instrument Company. Paul Wilhelm Kollsman, born in Germany, was educated in Munich and Stuttgart before immigrating to America in 1923; he founded the instrument company five years later. One of the most curious international episodes involved the celluloid femme fatale Hedy Lamarr, the glamorous film star born in Vienna, Austria, and George Antheil, the American-born composer. Through her earlier marriage to an Austrian arms dealer and manufacturer, Lamarr had picked up a workable understanding of electronic signals. Lamarr and the eclectic Antheil, encouraged by Charles Kettering, the research director of General Motors, patented a control device in 1942. Regrettably, their jam-proof radio control system for air-launched torpedoes was not fielded during the war. However, the principles in the Lamarr-Antheil patent became the basis for the jam-resistant communication systems that evolved in the 1960s. In a different context, chemical engineering research by the German-American firm of Rohm and Haas gave the United States significant wartime advantages. Plexiglas, the transparent plastic used almost exclusively for canopies and windows in U. S. military aircraft of World War II, was developed largely by the German branch of Rohm and Haas in the late 1930s. Politically and legally separated from its German counterpart during the war, the American branch of the firm perfected the product and turned out prodigious quantities of Plexiglas for the American war effort. The U. S. branch also produced military-grade hydraulic fluid that retained its functional properties at both high and low temperature extremes, making it an invaluable part of Allied air combat operations.

This international dimension of the U. S. aviation industry was even more evident in aircraft production. Between 1938 and 1940, British and French orders totaled several hundred million dollars and over 20,000 aircraft, at a time when Congress had authorized a U. S. Air Corps strength of only 5,500 planes. Official U. S. Air Force histories later noted that the prewar European orders had effectively advanced the American aircraft industry by a full year.16 Further overseas legacies appeared in the development of the P-51 fighter and the evolution of jet engines.

The P-51 developed a reputation as one of the best fighters of World War II. Ironically, its introduction into U. S. service occurred almost as an afterthought. The design originated in the dark days of 1940, when the RAF placed an emergency order with North American Aviation in California. In a series of around-the-clock design conferences, North American’s engineers finalized a configuration and hand-built the first airplane in just 102 days. The principal project engineer for the P-51 was Ed Schmued. Born and educated in Germany, Schmued worked with aviation firms in Europe and South America before arriving in the United States in 1930, when one of the companies that employed him became part of the North American Corporation. During the gestation of the P-51 design, the NACA’s Eastman Jacobs happened by one day, and the North American design team pressed him for details of the NACA wing to be used on the airplane. Its reliance on laminar flow made this feature yet another element of the European legacy to American aeronautics. The P-51 Mustang emerged from the drawing boards as a lean, lithe airplane. After flying an early export version powered by an Allison engine, a canny Rolls-Royce test pilot, Ronald W. Harker, realized that the more powerful Rolls-Royce Merlin engine might give the Mustang a stunning increase in performance. He was right. With a top speed surpassing 440 MPH, the Mustang could outspeed and outmaneuver any comparable German fighter. Rolls-Royce licensed the Merlin engine for manufacture in the United States, and the hybrid P-51D Mustang went into production for the U. S. Army Air Forces in 1943. From beginning to end, the P-51 reflected a consistent European heritage.17

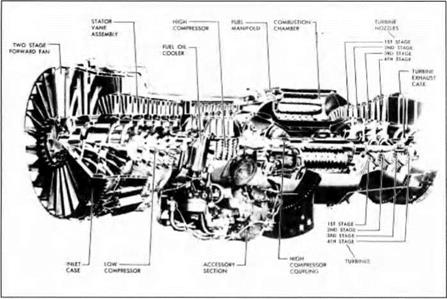

In America, the idea of jet propulsion had surfaced as early as 1923, when an engineer at the Bureau of Standards wrote a paper on the subject that the NACA published. The paper reached a negative conclusion: fuel consumption would be excessive, compressor machinery would be too heavy, and high temperatures and pressures posed major barriers. Subsequent studies and preliminary investigations seemed to substantiate these assumptions well into the 1930s. By the late 1930s, the Langley staff had become interested in some form of jet propulsion to augment the power of military planes during takeoff and combat. In 1940, Eastman Jacobs and a small staff came up with a jet propulsion test bed they called the “Jeep.” By that summer, however, the Jeep had grown into something else – a research aircraft for transonic flight. Again working with Eastman Jacobs, a small team made design studies of a jet plane. Although work on the Jeep and the jet plane design continued into 1943, these projects had already been overtaken by European developments.

Frank Whittle, in England, had bench-tested a jet engine in 1937, and four years later an airplane was ready to demonstrate it in flight. During a tour of Britain in April 1941, General H. H. “Hap” Arnold, Chief of the U. S. Army Air Forces, was dumbfounded to learn about a British turbojet plane, the Gloster E.28/39. The aircraft had already entered its final test phase and, in fact, made its first flight the following month. Fearing a German invasion, the British were willing to share turbojet technology with America. That September, a U. S. Army Air Forces major, with a set of drawings manacled to his wrist, flew from London to Massachusetts, where General Electric went to work on an American copy of Whittle’s turbojet. An engine, along with Whittle himself, eventually followed. A special contract went to Bell Aircraft to design a suitable plane, designated the XP-59A. Development of the engine and design of the Bell XP-59A were so cloaked in secrecy that the NACA learned nothing about them until the summer of 1943.

The XP-59A, equipped with Whittle engines, became the first American jet plane to fly, taking to the air on October 1, 1942. Subsequent prototypes used General Electric engines that had evolved from the original Whittle powerplant. Many of America’s first-generation military jet planes likewise began their operational lives with British engines. The USAF’s first operational jet fighter, the Lockheed P-80 Shooting Star, was designed around the de Havilland Goblin jet engine. British influence remained strong through the mid-1950s. The Republic F-84F Thunderstreak had a Wright Aeronautical J-65 engine, a license-built version of the Armstrong Siddeley Sapphire. Grumman’s U. S. Navy jet fighter, the F9F Panther, also relied on versions of British jet engines: the F9F-2 had a Pratt & Whitney J-42 (licensed from the Rolls-Royce Nene design), and the F9F-5 used a Pratt & Whitney J-48 (licensed from the Rolls-Royce Tay engine series).

Clearly, American jet engines in the early postwar era owed much to this British bequest, as well as to a catalog of technological legacies from German sources. “Project Paperclip” brought some 260 scientists and engineers to work in America at United States Air Force research and development centers. Along with leading aerodynamicists came gas turbine specialists like Hans von Ohain and Ernst Eckert. The first jet plane to fly (in 1939) used an engine designed by von Ohain, who spent his postwar career in development laboratories at Wright-Patterson Air Force Base. An expert in heat transfer, Eckert soon found himself at the NACA’s Lewis Laboratory, where he helped lay the foundations for film cooling of turbine blades – a fundamental advance in gas turbine technology. Eckert’s work at Lewis sparked a continuing process of successful research in this field. He wrote basic reference works on the subject, and his tenure at the University of Minnesota established heat transfer as an accepted field of study that subsequently occupied researchers at America’s leading aeronautical engineering schools.18

The European legacy was also evident in postwar flight research, such as the rocket-powered X-15 research planes of the late 1950s. The X-15s were thoroughbreds, capable of speeds up to Mach 6.72 (4534 MPH) and altitudes up to 354,200 feet (67 miles). There was a familiar European thread in the design’s genesis. In the late 1930s and during World War II, German scientists Eugen Sänger and Irene Bredt developed studies for a rocket plane that could be boosted to an Earth orbit and then glide back to land. The idea reshaped American thinking about hypersonic vehicles. “Professor Sänger’s pioneering studies of long-range rocket-propelled aircraft had a strong influence on the thinking which led to initiation of the X-15 program,” wrote NACA researcher John Becker. “Until the Sänger and Bredt paper became available to us after the war we had thought of hypersonic flight only as a domain for missiles….” A series of subsequent studies in America “provided the background from which the X-15 proposal emerged.”19

During the Cold War era, when America and the Soviet Union began their ideological and technological race to land a man on the moon, the American space effort continued to draw from assorted international sources. As a group, the most significant “catch” of Operation Paperclip may have been Wernher von Braun and the German research team responsible for the remarkable V-2 missile technology. The von Braun team assisted American counterparts in developing a family of postwar military rockets and related space technology, built the booster for America’s first artificial satellite, Explorer I (January 31, 1958), and played a central role in developing the Saturn launch vehicles used in America’s successful manned lunar landing in 1969. Nor were the German emigres of the von Braun contingent the only foreign team to influence the American space effort. In the early 1960s, following Canada’s cancellation of an advanced jet fighter/interceptor designed by the Canadian firm AVRO, the National Aeronautics and Space Administration immediately sought out the project’s key engineers to work on the early phases of the Apollo project. Over two dozen AVRO veterans signed on, becoming key players in research and development of Apollo systems and operational technology.20