PHILOSOPHY OF DATA AND MODELING

Philosophers of science traditionally construe observation as an unanalyzed and epistemologically unproblematic primitive used to test hypotheses. They have been largely oblivious to the facts that data are the point of observation, that collecting data involves instrumentation and modeling that can be very problematic, and that much experimental data collection has nothing to do with hypothesis testing or explanation. Precisely because they rarely concern hypothesis testing or explanation, flight and ground tests are ideal, nearly pure case studies for the epistemology of instrumentation and data.

Examination of scientific practice suggests the following:

First Law of Scientific Data: No matter how much data you have, it is never enough.

Second Law of Scientific Data: The data you have is never the data you really want or need.51

The Second Law reflects the fact that the parameters of interest often are removed from what probes and transducers feasibly can measure. You must calculate derived measures from these direct measures via recourse to models.
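As a hedged illustration of a derived measure, consider Mach number, which a probe does not sense directly. The sketch below assumes the standard isentropic-flow relation for subsonic flow and a ratio of specific heats of 1.4 for air; the function name `mach_from_pressures` and the pressure values are hypothetical and are not taken from any flight record discussed here.

```python
import math

def mach_from_pressures(total_pressure, static_pressure, gamma=1.4):
    """Subsonic Mach number derived from two directly measured pressures.

    The model (an assumption of this sketch) is the isentropic relation
    p_total / p_static = (1 + (gamma - 1) / 2 * M**2) ** (gamma / (gamma - 1)).
    It holds only below Mach 1.
    """
    if static_pressure <= 0 or total_pressure < static_pressure:
        raise ValueError("need total_pressure >= static_pressure > 0")
    ratio = total_pressure / static_pressure
    exponent = (gamma - 1.0) / gamma
    return math.sqrt((2.0 / (gamma - 1.0)) * (ratio ** exponent - 1.0))

# Hypothetical readings in consistent units (illustrative values only).
mach = mach_from_pressures(total_pressure=120.0, static_pressure=101.3)
```

The parameter of interest never appears in the instrument output; it exists only once the model is applied to the direct measures.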

Complicating such calculation are systematic errors – “black noise”, not Gaussian or “white noise” – associated with instrumentation. For example, mechanical pressure gauges are unreliable in the upper and lower quarters of their ranges. Pressure transducers exhibit lag phenomena that distort readings differently when pressure is rising than when it is falling. Wind tunnels are confined spaces in which boundary effects from turbulence at the walls alter the directly measured values. All such effects must be corrected for in the data reduction process.52

These corrections are accomplished by applying models that predict the contaminating influence of each boundary effect. Calibration curves based on measured variations in tunnel or instrument performance are another species of model used to make corrections. We see here how very model-dependent ground and flight test data are. In each case the raw data are enhanced by the addition of a model that brings into relief the actual measured effects.
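A calibration curve used as a correcting model can be sketched as follows. This is a minimal sketch under stated assumptions: the table `CALIBRATION` is hypothetical, and piecewise-linear interpolation between calibration points is itself an assumption added to the data.

```python
from bisect import bisect_right

# Hypothetical calibration table: (indicated reading, reference value),
# sorted by indicated reading. The numbers are invented for illustration.
CALIBRATION = [(0.0, 0.0), (25.0, 27.0), (50.0, 51.0), (75.0, 74.5), (100.0, 97.0)]

def correct_reading(indicated, table=CALIBRATION):
    """Map an indicated reading to a corrected value.

    Interpolates linearly between the two calibration points that
    bracket the reading; refuses to extrapolate beyond the table.
    """
    xs = [x for x, _ in table]
    ys = [y for _, y in table]
    if not xs[0] <= indicated <= xs[-1]:
        raise ValueError("reading outside calibrated range")
    # Index of the upper bracketing point, clamped to the last entry.
    i = min(bisect_right(xs, indicated), len(xs) - 1)
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (indicated - x0) / (x1 - x0)

corrected = correct_reading(37.5)
```

Even this small correction embeds two modeling choices: which reference values to trust, and how to fill the gaps between them.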

Here we encounter the First Law: The available data usually are insufficient for reliable interpretation. Data usually yield intelligible observations only when assumed models are added to the “raw” data. Models range from the simple choice of a French curve for interpolating data to sophisticated mathematical structures. Sometimes the additions are empirically substantiated – as when there are systematic errors in the data due to instrument distortions or known chamber effects.

Frequently the additions are not substantiated, so we exploit the fact that at a certain level data and assumptions are interchangeable and make up for insufficient data by adding assumptions in lieu of more data.52. This is a general practice known

|

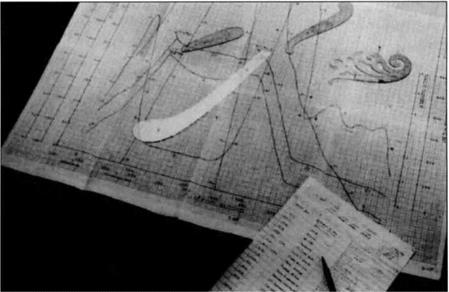

Figure 31. X-15 time-history plot of velocity, altitude, mach number, and dynamic pressure from launch to touchdown, September 14, 1966. Lying on top of the plot are the French curves uses to interpolate between measured data points and correct for measurement error. Fench curves manifest mathematical functions, and so choice of curve amounts to the addition of a structural-escalating model to the data. Lower-right is the flight event log. [Reworked NASA EC93 42307-8.] |

as structural escalation where the addition of, perhaps soft or unsubstantiated, assumptions to data produces a far more robust and stable structure that enables clear interpretation of the data.54 Here is where actual scientific practice parts company with standard philosophical wisdom asserting that assumptions or auxiliary hypotheses must be known or established before they can contribute to scientific knowledge.

When scientists model data by adding unsubstantiated assumptions in lieu of additional data, the relevant epistemological question concerns not epistemic pedigree but robustness: The significant question is whether intelligible structures revealed by the addition of assumptions are real effects in the data or artifacts of the assumptions added to the data. Computer modeling allows one to investigate whether the observed structures are real effects in the data or artifacts of the structurally escalating assumptions.55

The basic strategy is to do variant or end-member modeling to see how robust the effects are: What happens to our structures if we vary the assumptions we add to the data? A robust effect in the data is one that is insensitive to the specific assumptions added to the data over the plausible range of assumptions. If an effect is robust, then it almost certainly is not an artifact of the added assumptions.
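The variant-modeling strategy can be sketched in a few lines. This is a minimal sketch under stated assumptions: the added assumption is stood in for by a smoothing-window width, the effect of interest is the location of a peak, and the data are synthetic. None of the names or numbers come from an actual test program.

```python
import math
import random

def moving_average(values, window):
    """Smooth with a centered moving average; the window is the added assumption."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out

def peak_index(values):
    """Index of the largest value: the 'effect' whose stability we examine."""
    return max(range(len(values)), key=values.__getitem__)

def is_robust(values, windows, tolerance):
    """Vary the assumption over a plausible range and compare the effect.

    The effect counts as robust if the peak moves by no more than
    `tolerance` samples across all assumed window widths.
    """
    peaks = [peak_index(moving_average(values, w)) for w in windows]
    return max(peaks) - min(peaks) <= tolerance, peaks

# Synthetic data: a bump centered at sample 60 plus small bounded noise.
rng = random.Random(0)
data = [math.exp(-((i - 60) / 12.0) ** 2) + rng.uniform(-0.02, 0.02)
        for i in range(120)]

robust, peaks = is_robust(data, windows=[5, 9, 13, 17], tolerance=8)
```

Here the peak stays in roughly the same place whichever window is assumed, so it is unlikely to be an artifact of the smoothing choice. What counts as the plausible range of assumptions and an acceptable tolerance remains a judgment the analyst must supply.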

But flight test reveals that assessment of real vs. artifactual effects cannot be reduced to mere robustness. For example, many engine variables such as fuel-flow are collected via analog devices subject to considerable noise. A central part of data reduction (and a main GE EDP unit function) is filtering out noise to obtain real signal. Originally this was done simply by inserting various “plug-in” bandpass filters into the data transmission process. Such techniques work only when the signal-to-noise ratio is favorable.56 If it is not, but the signals are suitably regular, one can add together (“integrate”) various returns until one accumulates enough that the sum of their peaks rises above the noise level. While such techniques work well for radar astronomy, their applicability to flight test with its wildly varying test protocols is problematic.57
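The integration technique described above can be illustrated with a small simulation. This is a sketch only, assuming a perfectly repeatable signal and independent Gaussian noise on each return; the amplitudes, the noise level, and the count of 64 returns are arbitrary choices made for illustration.

```python
import math
import random

def make_return(signal, noise_sigma, rng):
    """One noisy return: the same underlying signal plus fresh Gaussian noise."""
    return [s + rng.gauss(0.0, noise_sigma) for s in signal]

def stack(returns):
    """Integrate the returns by averaging them sample by sample."""
    n = len(returns)
    return [sum(samples) / n for samples in zip(*returns)]

def rms_error(trace, signal):
    """Root-mean-square departure of a trace from the true signal."""
    return math.sqrt(sum((t - s) ** 2 for t, s in zip(trace, signal)) / len(signal))

rng = random.Random(1)
signal = [math.sin(2 * math.pi * i / 50) for i in range(200)]  # amplitude 1
returns = [make_return(signal, 3.0, rng) for _ in range(64)]    # noise 3x signal

single_error = rms_error(returns[0], signal)
stacked_error = rms_error(stack(returns), signal)
```

Under these assumptions the noise in the stack falls roughly as the square root of the number of returns, which is why the approach depends on the signal repeating. When each test run follows a different protocol there is nothing regular to accumulate.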

A more promising technique feeds noisy data into one input and a specified wave function into another input and performs a cross-correlational analysis. If the added wave function is a reasonable approximation to the true signal underlying the noise, there will be “considerable improvement in detection” of the true signal due to “the fact that we are putting more information into the system, and thus may expect to get more out.”58 The decisions as to which signals to add are based more on operator expertise than they are on established fact.

The correctness of such additive filtering cannot be assessed using robustness considerations. Indeed, the lack of robustness (the true signal quickly disappears if the added wave function is not close to the correct one) indicates that the structurally escalated effects are real.
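The cross-correlation technique and its sensitivity to the added wave function can be sketched as follows. This is an illustrative sketch, not a reconstruction of any historical data-processing unit: the signal is an assumed sinusoid with a period of 40 samples, the mismatched template has an assumed period of 47, and the noise level is arbitrary.

```python
import math
import random

def cross_correlation(data, template, max_lag):
    """Mean product of data and template at each lag from 0 to max_lag."""
    n = len(data) - max_lag
    return [sum(data[i + lag] * template[i] for i in range(n)) / n
            for lag in range(max_lag + 1)]

def detection_score(data, template, max_lag=40):
    """Largest correlation magnitude over the lags examined."""
    return max(abs(c) for c in cross_correlation(data, template, max_lag))

def sinusoid(period, length):
    return [math.sin(2 * math.pi * i / period) for i in range(length)]

rng = random.Random(2)
length = 4000
true_signal = sinusoid(40, length)
# Noise standard deviation is twice the signal amplitude.
noisy = [s + rng.gauss(0.0, 2.0) for s in true_signal]

matched = detection_score(noisy, sinusoid(40, length))     # close to the true signal
mismatched = detection_score(noisy, sinusoid(47, length))  # wrong assumed waveform
```

The matched template recovers a clear correlation even though the signal is buried in noise, while the mismatched template yields a score near the noise floor. The detection is sharply sensitive to the assumption added, and here that sensitivity is what one expects if the signal is real.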

Whether robustness or sensitivity to parameter assumptions testifies to real vs. artifactual effects in the augmented data depends on the available theories governing the addition of assumptions to data. Good calibration data and corrections may settle things. For filtering, there is a well-established theory of noisy analog data underlying the integration filtering techniques used in radar astronomy. (Our addition of a possible waveform is a one-shot simulation of such integration techniques.) Given that established theory, if the recovered signal is sensitive to the specific waveform added, the result is reliable.

These are theoretically driven evaluations of real effects. What if we have no theory – if the assumptions have no pedigree? Then we have real effects where robust parameter variation establishes stable effects. Effects that are robust across realistic parameter-spanning spaces generally are real effects in the data. But not all real effects are robust. Non-robust effects also can be real effects, but established knowledge or theory is required to make the case.

Data typically mix real effects with artifacts. We often throw away data to discover real effects. Thus noisy data are a mix of signal and noise, and we use filtering to throw away the noise, bringing the signal into relief. Knowledge about the measured parameter can reduce the amount of noise we collect. In flight test, most parameters are wave phenomena. Sampling at rates higher than the base frequencies yields mostly noise as data. That is why oscillographs don’t work in wind tunnels. Good instrumentation design reduces the amount of noise or artifact introduced into the raw data.

The notion of “raw data” used above is only heuristic. Every instrument design implements a model of the interaction of various physical parameters with instruments, of the systematic distortions such instruments undergo, and of the corrections applied to them, all before we encounter the augmenting modeling assumptions involved in data reduction and analysis.

The points are: (i) all data are model-dependent; (ii) all data reduction and data analysis involve further modeling; (iii) thus there are no “raw data”; (iv) whether assumptions are previously established or unsubstantiated, standard techniques exist for evaluating whether effects revealed by the augmentation of data by assumptions are real effects in the data or artifacts of the added assumptions; (v) both instrumentation and added assumptions can introduce artifacts into data; and (vi) the final assessment of artifacts vs. real effects requires recourse to theory or knowledge when effects are not robust.