I

All the papers in this collection are highly accomplished exercises in the history of technology but, of course, they represent history of somewhat different kinds. For example, they assume different positions along the so-called “internal-external” dimension. Profs. Smith and Mindell’s admirably detailed history of the turbo fan engine largely, but not wholly, falls into the “internal” category, as do the authoritative accounts by Prof. Vincenti of work done in supersonic wind tunnels, and Prof. Suppe on the instrumentation used in test flying. Of a more “external” character are papers dealing with the economic, institutional, and legal setting of aeronautical activity, such as Profs. Crouch and Roland’s discussion of patents or Prof. Douglas’ account of the different professional inputs to airport design. Again on the “externalist” side we have Prof. Jakab’s account of the hypocrisy and profiteering surrounding the emergence of McCook Field.

Apart from the “internal – external” divide we also have other kinds of variation. Some papers are clearly more descriptive, while some are more explanatory. I think it is true to say, however, that most contributors have chosen to keep a low profile when it comes to questions of methodology or theory. This is a wise strategy. Concrete examples often speak louder than explicit theorizing. Readers can put their own gloss on the material and see for themselves points of contact with other ideas. Nevertheless it is in the area of theory and method where I may have a role to play. I am not an historian of technology, indeed I am not an historian at all, and I have no expertise in aerodynamics. My comments will all be from the standpoint of sociology. I shall focus on what I take to be the more significant sociological themes that are explicit, or implicit, in what has been said. I shall try to suggest ways in which sociological considerations might be brought into the discussion or where they might help in taking the analysis further. Adopting a sociological approach biases me towards what I have called the “external” end of the scale, but there are some points of contact with the more technical, “internal” discussions, and I shall do my best to indicate these. Here I shall be struggling somewhat, but for me these points of contact between the social and the technical represent the greatest challenge, and the greatest interest, of the whole exercise.

II

Let me begin with Prof. Galison’s paper. His claim, and I think he makes it convincingly, is that aircraft accident reports are beset by an unavoidable indeterminacy. Investigators confront an enormously complicated web of circumstances and actions. How is this manifest complexity to be handled? There are two possible directions in which to go. One direction is to narrow down the explanation of the accident on to one or more particulars and highlight these as the cause. We will then hear about the Captain who chose to take off in bad weather, or the co-pilot who was not assertive enough to over-rule him, or the quality control inspector who, years before, let pass a minute metallurgical flaw. The other direction leads to a broader view, embracing a wide range of facts which can all be found to have played a contributory role in the accident. Had the de-icing been done properly? Was the company code of practice wrong in discouraging the use of full throttle? What is it that sets the style and tone of group interaction in the cockpit? Who designed the three hydraulic systems that could be disabled by one improbable but logically possible event? Accident reports, he argues, tend to oscillate between specific causes and the broad range of other relevant particulars.

P. Galison and A. Roland (eds.), Atmospheric Flight in the Twentieth Century, 349-360 © 2000 Kluwer Academic Publishers.

Prof. Galison has put his finger on a logical point similar in structure to that made famous by the physicist Pierre Duhem in his Aim and Structure of Physical Theory (1914). No hypothesis can be tested in isolation, said Duhem. Every test depends on numerous background assumptions or auxiliary hypotheses, e.g. about how the test apparatus works, about the purity of materials used, and about the screening of the experiment. When a prediction goes wrong we therefore have a choice about where to lay the blame. We can, logically, either blame the hypothesis under test, or some feature, specified or unspecified, of the background knowledge. Prof. Galison is saying the same thing in a different context. No causal explanations can be given in isolation. Whether we realize it or not, the holistic character of understanding always gives us a choice.

How are those in search of causes to make such choices? Prof. Galison tells us not to think in terms of absolute rights or wrongs in the exercise of this logical freedom. The unavoidable fact is that there are different “scales” on which to work. This “undermines any attempt to fix a single scale as the single ‘right’ position from which to understand the history of these occurrences” (p.38). What, then, in practice inclines the accident report writer to settle on one or another strategy for sifting the facts and identifying a cause? Legal, economic and moral pressure, we are told, has tended to favour the sharp focus. This has the advantage that it offers a clear way to allocate responsibility: this person or that act was responsible; this defect, or that mechanical system, was the cause. The utility of the strategy is clear. Individuals can be shamed or punished, and a component can be replaced. Now, we may be encouraged to feel, the matter is closed and all is safe again.

Is this a universal strategy rooted in some common feature of human understanding, or near universal social need? Do accident reports from, say, India and China, have the same character as those from France and America? Or are some cultures more holistic and fatalistic and others more individualistic and litigious? It would be a fascinating, though enormous, undertaking to answer a comparative question of this kind. Prof. Galison gives us some intriguing hints to be going on with. In his experience there is not much difference between, say, an Indian and an American report. What stand out strongly are not broad cultural differences but sharp conflicts of a more local kind. While the National Transportation Safety Board blames a dead pilot, authoritative and experienced pilots dismiss the report as pedantic and unrealistic. While official American investigators point to the questionable behavior of the control system of a French built aircraft under icy conditions, the equally official French investigators point the finger at the crew who ventured into the bad weather. Prof. Galison speaks here of the “desires” of industry, of the “stake” of pilots, and of the “investment” of government regulators, as well as economic “pressures”. All of these locutions can be brought under the one simple rubric of ‘interests’. The phenomenon, as it has been described, is structured, strongly and in detail, by social interests. Let me state the underlying methodology of Prof. Galison’s paper clearly and boldly. It is relativist, because it denies any absolute right, and depends on what is sometimes called an ‘interest model’. As we shall see, these challenging themes weave their way through the rest of the book as well, and inform much that is said by the other contributors.

III

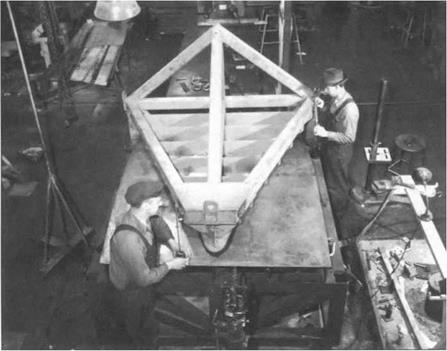

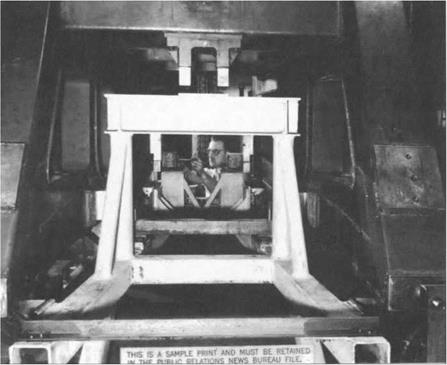

A number of papers take up a comparative stance – perhaps the most explicit being Prof. Crouch’s discussion of the differential enthusiasm with which governments encouraged aviation in Europe as compared to the United States. It wasn’t the Wright brothers’ insistence on taking out patents that held back American aviation, it was lack of government awareness and involvement. Prof. Roland takes up the story of patents to show how the earlier shortcomings were later dramatically repaired. Once the government had got the message, expedient ways were found for circumventing legal issues. One of the themes of Prof. Roland’s paper is that of a culture of design, craft-skill and engineering expertise. His point is that if anything inhibited the flow of information and expertise it wasn’t patents, it was the localized character of engineering practices and the desire to keep successful techniques away from the gaze of competitors. So significant was this local character of engineering knowledge that when different firms came to produce aircraft or components according to the same specifications, they still turned out subtly different objects. Prof. Ferguson gives a stunning and well-documented example of this. He looks at the wartime collaboration of Boeing, Douglas and Vega in producing B-17 bombers. Despite the intelligent anticipation of such problems, and a determined attempt to overcome them, these three companies could not build identical aircraft. The ‘same’ tail sections, for example, proved not to be interchangeable. The lesson, and Prof. Ferguson draws it out clearly, is that practical knowledge does not reside in abstract specifications (not even in the engineer’s blueprints). Knowledge is the possession of groups of people at all levels in the production process. It resides locally in their shared practices. The problem of producing the same aircraft remained insurmountable as long as the three local cultures survived intact and in separation from one another. It was only overcome by removing, or significantly weakening, the group boundaries. This was done by deliberately making it possible for people to meet and interact. In effect, it needed the three groups to be made into one group.

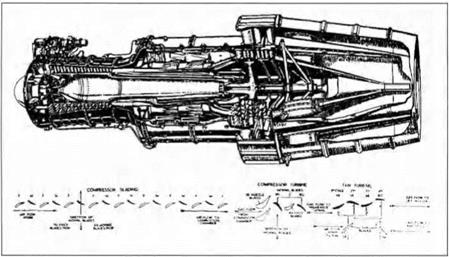

Others tell us of similar phenomena. Profs. Smith and Mindell speak of the different engineering cultures of Pratt and Whitney, on the one hand, and General Electric, on the other. General Electric’s rapid and innovative development of the CJ 805 by-pass turbofan engine was helped by their recruitment of a number of key engineers from NACA. Again, we see that knowledge and ideas move when people move. Smith and Mindell characterize the culture of Pratt and Whitney as “conservative” – and explain how, nevertheless, contingencies conspired to ensure that its incrementally developed JT8D finally triumphed over the product of the more radical group at General Electric. Their history of the triumph of the Pratt and Whitney engine shows how different groups produce different solutions to the same problem. Add to this their discussion of the Rolls Royce Conway, and the debatable question of which was the ‘first’ by-pass engine, and we have another demonstration of the complexity of historical phenomena and the diversity of legitimate descriptions that are possible. Finally, they address the general question of why turbo-fan engines emerged when they did, some twenty-five years after Whittle and Griffith had suggested the idea. The concept became interesting, they argue, because of a decline in commitment to speed and speed alone as the arbiter of commercial success in civil aviation. Turbo-fans could succeed economically in the high sub-sonic realm in a way that jets could not. We are dealing with new measures of success, “measures that embody social assumptions in machinery” (p.47). This pregnant sentence once again gives an interest-bound and relativist cast to the argument.

The theme of the local engineering culture, whether it be Pratt and Whitney versus General Electric, or Boeing versus Douglas, strikes me as sociologically fascinating. And it is, of course, something calling out for comparative analysis. How many kinds of local engineering culture are there? Are there as many cultures as there are firms? Ferguson’s work suggests that there are, and that we are dealing with a very localized and sharply differentiated effect. Nevertheless, even if local cultures are all different from one another, they might still fall into classes and kinds, perhaps even a small number of kinds. If they were to fall into a small number of kinds then comparison should enable us to see the same patterns repeating themselves over and over again. This might allow us to identify the underlying causes of such cultural styles. Calling Pratt and Whitney “conservative” might tempt us to think in terms of a dichotomy, such as conservative versus radical. Other dichotomies, such as open societies versus closed societies, or Gemeinschaft versus Gesellschaft, the organic versus the mechanical, or markets versus traditions, will then suggest themselves as models. But there are other options. Why work with a dichotomy? If we follow the suggestions of the eminent anthropologist Mary Douglas we arrive at the idea that there might be, not two, but four basic kinds of engineering culture (cf. Douglas, 1973, 1982).

Let me explain how this rather striking conclusion can be reached. The first step is to notice that the basic options open to anyone in organising their social affairs are limited. They can attend to the boundaries around their group and find them, or try to make them, either strong or weak. They can then structure the social space within the group in a hierarchical or an egalitarian manner. If we assume that extreme, or pure cases, are more stable, or self-reinforcing, than eclectic or mixed structures, this gives us essentially a two-by-two matrix yielding four ideal types.

The same conclusion can be arrived at by another route. Think of the strategies available to us for dealing with strangers. We can embrace strangers, we can exclude them, we can ignore them, or we can assimilate them to existing statuses or roles. To embrace strangers means sustaining a weak boundary around the group, while to exclude them requires a strong inside-outside boundary. Having a pre-existing slot according to which they can be understood and their role defined means having a complex social structure already in place which is sufficiently stable to stand elaboration and growth. Responses to strangers thus etch out the pattern of boundaries around and within the group and the options, of embracing, excluding, ignoring or assimilating, generate four basic structures – arguably the same four as identified above.

The second step in Mary Douglas’s argument is to suggest that our treatment of things is, at least in part, structured by their utility for responding to people. We have seen the principle at work on the large scale in the case of the by-pass engine as a response to market conditions. But the idea can be both deepened and generalized. Perhaps our use of objects, at whatever level of detail, will always be monitored for implications about the treatment of people. Do they leave existing patterns of interaction and deference intact, or do they subvert them? Do they provide opportunities to control others, or opportunities to evade control? Take some simple examples. A place may be deemed “dangerous” if we don’t want people to go there. Or a thing is not to be moved or changed, because it embodies the rights of some person or group, say the right of ownership or use. Or the introduction of a new practice may render existing expertise irrelevant, and hence existing experts redundant. Put these two ideas together, social interaction as the necessary vehicle and unavoidable medium for the use of things, and the limited patterns of such interactions, and we may have a basis for a simple typology of cultures or styles in the employment of natural things and processes. We may even have a basis for a typology of styles of engineering.

Whatever your reaction to this idea, I hope you will agree that we badly need intellectual resources with which to think about such difficult themes. That is why I should like to hear a lot more about the sociology of Pratt and Whitney and General Electric, and a lot more from Prof. Suppe and Prof. Vincenti about the groups with whom they worked. How strong were the boundaries between the inside and the outside of the group? Did members of the group readily work with those outside it, trading ideas and information? How hierarchical or internally structured was the inside of the group? Did people swap roles or was the division of labor clear cut? Did they eat and relax together as well as work together? Who and how many were the isolates or outcasts (I mean those who were considered unreliable, incompetent or downright dangerous)? These are the questions that Mary Douglas wants us to ask. They may shed light on why some parts of a design are held stable while others are changed, or why a problem may be deemed solved by one group while another treats that solution as an unsatisfying expedient.

I say that these ideas may provide the basis of a typology. There are many ways of criticizing the line of thought I have just sketched. But I would ask you not to be too critical too soon. Give the idea time to settle. It may start generating connections that are not at first obvious to you. For example, Prof. Bilstein’s account of the international heritage of American aerospace technology connects with what I have just said about responses to strangers. He is telling us about a segment of culture that was eager to embrace strangers, where ‘stranger’ here means experts and outsiders from Europe. The European input is sometimes retrospectively edited out, but in practice and despite difficulties, it was seen as a source of opportunity rather than threat.

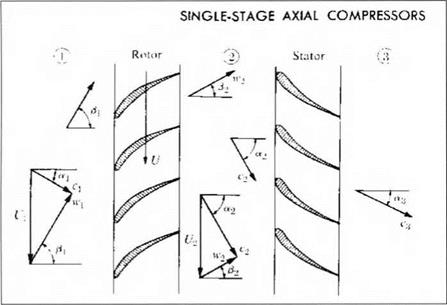

Or consider the “ways of thinking” alluded to by Prof. Vincenti. Here too we are dealing with questions of group culture and group boundaries. His first-hand account describes the problems of understanding an aerofoil in the transonic region. Two different groups of people had reason to address this issue: those designing wings, and those who worked on propellers. Those who work on wings routinely use the categories of ‘lift’, ‘center of lift’ and ‘drag’, but this is not exactly how the designers of axial turbines and compressors think: “because the airfoil-like blades of their machines operate in close proximity to one another, [they] think of the forces on them rather differently” (p.23). If NACA had structured its research sections differently and with different boundaries from those which actually obtained, might Prof. Vincenti’s group have had a different composition, and could that have enabled them to think about the forces on their wings “rather differently”? Or, to put the point another way, what would have happened if propeller and wing theorists had switched roles? Would the same understanding have emerged, or would they, like the teams at Boeing, Douglas and Vega, have made subtly different artifacts? These are enormously difficult questions that we may never be able to answer in a fully satisfactory way. The need to think counterfactually may defeat even the most well-informed analyst, but these, surely, are the ultimate questions that we cannot avoid addressing.

There is one misunderstanding that I should warn you against. Theories such as Douglas’s tend to be seen, by critics, in terms of stereotypes. The stereotype has them saying that “everything is social” or that “the only causes of belief are social.” Douglas’s approach is not of this kind at all. Society is not the only cause, just as it was not the only cause at work in the episode when the tail-sections from different factories would not fit together. We must also remember that certain large-scale pieces of equipment such as wind tunnels (or great radio telescopes or particle accelerators) have a way of so imposing themselves on their users that they actually generate a characteristic social structure. Given certain background conditions, the apparatus itself may be a cause of the social arrangements. This is compatible with what Douglas is saying. Her ideas would still come into play. The point would be this: suppose people have to queue up to use such things as wind tunnels, radio telescopes and accelerators. If so, there has to be a decision about who comes first, and how long they can have for their experiment. Things have to be planned in advance, timetables have to be drawn up and respected. These are all matters which presuppose a clear structure of authority. Someone must have the power to do this. These are not, perhaps, easy environments for the individualist. Having said that the physical form of the apparatus can be a cause, it would still be interesting to know what leeway there is here. To what extent is it possible to operate such things as wind tunnels, or huge telescopes, in different ways? And if it is possible, how many different ways are there?

IV

Two papers that I have not yet mentioned seem to me to provide a particularly good opportunity for opening up themes bearing on local cultures in engineering or science. The first of them is Prof. Schatzberg’s account of the manufacture of wooden aeroplanes during World War II. The British and Canadians were successful in their continuation of this technology; the Americans had ‘forgotten’ how to do it. Although not presented in these terms, this episode clearly bears on the question of local engineering culture and style. There must have been something about de Havilland which explains why they could and did build in wood and which differentiates them from the American manufacturers, such as Curtiss-Wright, who did not or could not. And presumably there was something equally special about the Canadian firms who were especially keen to get in on the act. Prof. Schatzberg grounds these facts about the culture of firms in the wider culture. He suspects that we need to go to the symbolic meaning of the material under discussion, namely wood. Wood for the Americans meant outmoded tradition; metal meant modernity. Wood for the Canadians meant the mythical heart of their nation, with its great forests. Wood for the British was more neutral, having neither strongly positive nor negative connotations. In that matter-of-fact spirit they built the formidable Mosquito – made of ply and balsa.

Symbolic meanings are not easy to deal with, so it is worth seeing if the argument can be recast in simpler terms. Would it suffice to appeal to, say, vested interests? Canada’s wood was a major economic resource – what better stance to take than to advocate a use and a market for it? Self-interest would dictate nothing less. The American manufacturers had moved on from wood, they had gone all out for metal so as not to be left behind in a competitive market. Who wants to go backwards in a manufacturing technique? It is expensive to re-tool and re-skill. What about de Havilland? They were still building the famous Tiger Moth trainer out of wood, and supplying it in great numbers to the RAF. Even more to the point, as Prof. Schatzberg points out, they could cite their experience with wooden airliners which had important similarities with the Mosquito (p.20). Perhaps economic contingencies such as these are basic, and explain the phenomenon attributed to symbolic meanings. But as Prof. Schatzberg argues, such contingencies often balance out. Canada may be rich in forests, but so was the United States. Canada was no more short of aluminum than the United States. Britain was short of both aluminum and timber and had to import both. As for the market, why did it lead manufacturers to metal in America but not in Britain? Why was demand differently structured in the two cases? That points to a difference of underlying disposition. Perhaps the presence and operation of such dispositions can be illuminated by the idea of symbolic meaning. But whatever our thoughts about the relation of symbolic meanings to interests, Prof. Schatzberg’s intriguing study raises important questions. How local are local engineering cultures, and to what extent do they depend on the wider society?

V

I now want to focus on Prof. Hashimoto’s paper. You will recall that this deals with the work of Leonard Bairstow and his team at the National Physical Laboratory in Teddington during and after World War I. We are told of their problematic relation both to Prandtl’s school in Göttingen and the (mostly) Cambridge scientists who worked at the Royal Aircraft Establishment in Farnborough. Bairstow worked with models in a wind tunnel. He had made his reputation with important studies of the stability of aircraft. Divergences then began to appear between the results of the wind tunnel work and those of the Farnborough people testing the performance of real aircraft in the air. Bairstow took the view that his wind tunnel models gave the correct result and that the full scale testing was producing flawed and erroneous claims.

Prof. Hashimoto’s account is a clear and convincing example of an interest explanation of the kind I have spoken about. Bairstow and his team had invested enormous effort into the wind tunnel work. Reputations depended on it. Is it any wonder, when it comes to the subtle matter of exercising judgement and assessing probabilities, that Bairstow should be influenced by the time and effort put into his particular approach? Notice I say “influenced” rather than “biased”. I don’t think that there is any evidence in the paper that Bairstow was operating on anything other than the rational plane. He seems to have been a forceful controversialist, but those arguing on the other side had their vested interests as well. Time, energy, skill, and not a little courage, had gone into their results too. Rational persons acting in good faith can and do differ, sometimes radically. To my mind the only questionable note in Prof. Hashimoto’s analysis is when a negative evaluation of Bairstow creeps into the historical description. I suspect that the analysis goes through as well without it.

Bairstow did not just argue in defense of his wind tunnel work on models. He also held out against Prandtl’s “boundary layer” concept. This stance seems to me even more intriguing than his opposition to scale effects. If I understand the matter rightly, Prandtl’s idea of a boundary layer functioned as a justification for certain processes of approximation and simplification which made the treatment of the flow round an aerofoil mathematically tractable. For example, it allowed the problem to be divided into two parts. Bairstow’s opposition seems to have centered on his suspicion of these mathematical expedients. His obituarist, in the Biographical Memoirs of Fellows of the Royal Society (Temple, 1965), suggested that Bairstow didn’t want a simplified or approximate solution to the Navier-Stokes equation for viscous flow, but a full and rigorous solution for the whole mass of fluid. He tried to produce such a treatment, but with only limited success (p.25). So again we are dealing with a matter of scientific judgement on Bairstow’s part. His response to Prandtl was not foolish or dogmatic, but a rational expression of a particular set of priorities and intellectual values. I should like to know where these priorities came from, and if they can be explained.

At first sight it seems odd that a person such as Bairstow, who placed so much weight on the experimental observation of models in wind tunnels, should incline to a mathematically rigorous and complete solution to the equations of flow. We might expect an experimentalist to embrace any clever expedient that would get a plausible answer, even at the cost of a certain eclecticism. So there is a puzzle here. Of course, it may be that we are dealing with nothing but a psychological idiosyncrasy of Bairstow as an individual, but we should at least explore other possibilities. If his strategy commanded the respect of some portion of the scientific community then we are certainly dealing with more than idiosyncrasy. I have already suggested that large installations, like wind tunnels, may demand or encourage clear-cut social organisation and hierarchy. Could it be that living with the demand for rigorous and extensive social accountability lends credibility to the demand for a correspondingly rigorous and general form of scientific accountability? Or is the issue one of insiders and outsiders, of the boundary round the group? If there were a body of existing mathematical techniques which had always sufficed in the past then why import potentially disruptive novelty from the outside? Here we see the relevance of Mary Douglas’s observations about strategies for dealing with strangers. What she says about ways of dealing with novel persons applies on the cognitive plane too. Typically, dealing with new ideas means dealing with new people. A disinclination to do the latter might explain a disinclination to do the former. Clearly all this is speculation on my part. It is no more than a stab in the dark. But however wrong the suggestions might be, I think that this is the area where the argument can be developed.

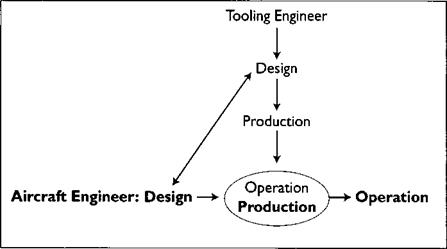

Interest explanations, it seems, are ubiquitous, and beginning with Prof. Galison and ending with Prof. Hashimoto, we have seen how naturally the different contributors have had recourse to them here. Nevertheless, there is a great deal that we do not yet understand about the operation of interests. For example, the trade-off between long term and short term interests is little understood, though they often incline us to quite different courses of action. Prof. Deborah Douglas’s account of the relation between engineers and architects suggests that at first these two groups felt they were in competition with one another. In the event they reached a working relationship, seeing the advantages of co-operation rather than conflict. Prof. Jakab describes how the drying up of government contracts, in 1919, led to a change in the relation between the Engineering Division at McCook Field and the private manufacturers of aircraft. After a period of plenty, a divergence of interests came to the fore and set the stage for a decade of bickering. In offering an interest analysis, then, we are not dealing with a static and unchanging set of oppositions, but a potentially flexible and evolving system of self-understanding. Critics of interest explanations, and there are many of them, often assume they are static and forget that change and flexibility are actually inherent to this manner of explanation.

VI

A further theme I could have pursued is brought out with great clarity in Prof. Anderson’s paper (a paper which, for non-specialists such as myself, was invaluable for introducing some of the basic ideas in aerodynamics). Prof. Anderson’s theme was the coming together of academic or ‘pure’ science with the needs of the practical engineer, and the synthesis of a new form of knowledge that might be called ‘engineering science’. There is a temptation, amongst intellectuals, to treat theory as higher, or more profound, than practice. Practice is the application of theory so (we might be tempted to conclude) behind every practice there must lurk a theory. In opposition to this, Prof. Anderson reminds us of the post hoc character of the circulation theory of lift. Similarly, with regard to drag, the theoretical explanation of just why engine cowling was so effective came well after the practical innovation itself. There is a whole epistemology to be built by insisting that practice can, and frequently does, have priority over theory. That epistemology will be the one needed to understand the history of aviation and the science of aeronautics. The theme of the priority of the empirical is also made explicit at the end of Prof. Suppe’s paper which tells us about the growth of instrumentation in the history of flight testing. He argues for the significance of this site as a source of new ideas to revivify the philosophy of science. That discipline has, arguably, been too closely linked with the academic laboratory, or at least, with the image of scientific work as theory testing. Prof. Suppe takes us out of the laboratory into the hangar, where the price of failure is far greater than the disappointment of a refuted theory. The aim is to discern useful patterns in the mass of data produced by probes and gauges, to root out systematic error, and to find the trick of making signals stand out from noise. Again, there are no rules and no absolute guarantees. Even, or perhaps especially, in these practical fields, reality cannot speak directly to those who want and need to act with the maximum of realism.

If we were looking for a philosophical standpoint that does justice to the practical character of knowledge, for an epistemology for the engineer or aviator, where might we look? I can think of two sources that would be worth exploring, though both suggestions may seem a little surprising. First we could follow the lead of Hyman Levy. Levy was professor of mathematics at Imperial College, London, and had written one of the early textbooks in aeronautics, Cowley and Levy (1918). His theoretical work in fluid mechanics linked him with Bairstow, and he had worked in this field at the National Physical Laboratory. Levy wanted to understand his science philosophically and to develop a philosophy appropriate for “modern man”. He was also a Marxist and belonged to that impressive band of left-wing scientists in Britain in the inter-war years which included J. D. Bernal, Lancelot Hogben, J. B. S. Haldane, and Joseph Needham [see Werskey (1988)]. They did much to spread the scientific attitude and to educate the public about the potential and importance of science. As a Marxist, Levy accepted the laws of dialectical materialism, one of which is the law of the unity of theory and practice. In his Modern Science: A Study of Physical Science in the World Today, published in 1939, Levy devoted a chapter to ‘The Unity of Theory and Experiment.’ He did not here talk in the language of philosophical Marxism, but about wind tunnels and the measurement of lift and drag. His exemplar of the unity of theory and practice was aeronautical research. This might commend itself to Prof. Anderson and Prof. Suppe. But I can also think of another source of philosophical inspiration, one coming from what, at first sight, appears to be the opposite ideological direction. For a philosophy which grounds theory in practice, and meaning in use, we could hardly do better than to consult Wittgenstein’s Philosophical Investigations (1953) and his Remarks on the Foundations of Mathematics (1956).
The formalistic concern with theory testing that, as Prof. Suppe observes, limits so much academic philosophy, is wholly absent from Wittgenstein’s work. Perhaps it is no coincidence that Wittgenstein was trained as an engineer and did research on propeller blades. The young Wittgenstein once asked Bertrand Russell if he (Wittgenstein) was any good at philosophy. Wittgenstein’s reason for asking was that if he was no good he intended to become an aviator.1

… 1909 they set up the Advisory Committee for Aeronautics under the eminent Cambridge physicist Lord Rayleigh, and dedicated a section of the National Physical Laboratory to aeronautical questions. Prof. Schatzberg reminds us that such facts stand in opposition to many a political lament about Britain’s national decline and lack of industrial spirit. But can this rapid response really have come from a culture that was (as the stereotype has it) dominated by an anti-technological elite steeped in the classics?2

As the papers in this collection make amply clear, the history of aviation as a field of study is rich and many-faceted. There is material enough here to prompt major, and exciting, revisions in the history of culture – as well as in the theory of scientific and technical knowledge.

[1]

P. Galison and A. Roland (eds.), Atmospheric Flight in the Twentieth Century; vii-xvi © 2000 Kluwer Academic Publishers.

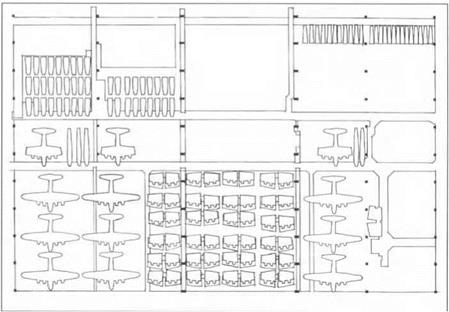

The result was three different ways of producing the B-17, and an indeterminacy about the best way to mass produce aircraft. An engineer writing on the Douglas system was careful to avoid making comparisons between different production ideologies, arguing that, “Among the many abilities of Americans… is the ingenuity by which different groups achieve similar or identical results, but with widely varying, sometimes seemingly opposite methods.” Unfortunately, the resulting aircraft from these methods were merely similar, and never identical. In fact, in October of 1943 General “Hap” Arnold specifically contacted the National Aircraft War Production Council (NAWPC) about the “non-interchangeability” of assemblies from different companies. He complained that “the different processes employed by the various manufacturers preclude the replacement of a Vega tail assembly by a Boeing or a Douglas tail assembly.” Despite rigid adherence to a single set of master gages and templates supplied by Boeing, differing production ideologies and traditions of practice brought about three different products.27
