Colour TV from a black-and-white world

During Apollo, colour television was still in its infancy and was widely regarded as notoriously complicated technology. Conventional colour TV cameras of the time used at least three imaging tubes to generate simultaneous images in red, blue and green. The cameras were therefore large and heavy, and required constant attention to keep the three images aligned in the final camera output. A simpler system was required, and designers turned to a derivative of one of the earliest methods of generating colour TV, the colour wheel.

CBS, one of the United States’ three major TV companies at the time, initially developed the colour wheel camera in the days before a rival system was adopted for general use. The colour wheel camera had one great advantage that lent itself to use in space. Since the colour scans were expressed sequentially instead of simultaneously, only a single imaging tube was required and the camera could be made much smaller than conventional colour cameras of the time.

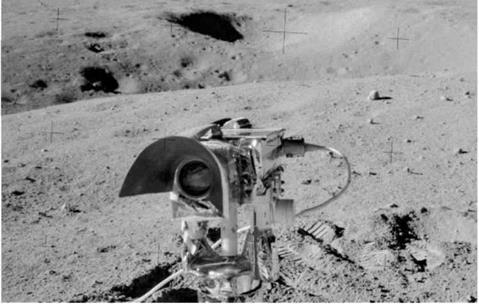

Apollo 12’s troublesome colour TV camera on its tripod on the Moon. (NASA)

With only a single tube, the Apollo colour camera produced what was essentially a standard black-and-white signal at 60 fields per second, 262.5 lines per field, with about 200 useful lines per field. Directly in front of the imaging tube, between it and the lens, was the colour wheel. This had six filters as two sets each of red, blue and green. It was spun at 10 revolutions per second such that each field from the camera was an analysis of the image in red, then blue, then green, over and over. If viewed on a black-and-white monitor, the image would display a pronounced 20-Hz flicker because the field that represented green was brighter than the other two, but would only come around 20 times per second. The bandwidth given over to this television signal was increased to 2 MHz which overlapped other components in the Apollo S-band radio signal. Consequently, careful filtering was required to remove these from the TV image.
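The figures quoted above fit together arithmetically, and a short sketch makes the relationships explicit. This is only an illustration built from the numbers in the text; the function names are invented for the example, and the assumption that field 0 is red is arbitrary.

```python
from fractions import Fraction

# Figures quoted in the text
FIELDS_PER_SECOND = 60
LINES_PER_FIELD = 262.5
FILTERS_ON_WHEEL = 6            # two sets of red, blue and green
WHEEL_REVS_PER_SECOND = 10
FILTER_ORDER = ["red", "blue", "green"]  # order as given in the text


def filters_per_second():
    """Number of filters passing in front of the tube each second."""
    return FILTERS_ON_WHEEL * WHEEL_REVS_PER_SECOND


def colour_of_field(field_index):
    """Colour analysed by a given field (assumes field 0 is red)."""
    return FILTER_ORDER[field_index % len(FILTER_ORDER)]


def colour_repeat_rate_hz():
    """How often any one colour, such as the bright green field, recurs."""
    return Fraction(FIELDS_PER_SECOND, len(FILTER_ORDER))


def line_rate_hz():
    """Scanning lines produced per second."""
    return LINES_PER_FIELD * FIELDS_PER_SECOND


if __name__ == "__main__":
    print(filters_per_second())        # one filter per field
    print(colour_repeat_rate_hz())     # source of the flicker on a monochrome monitor
    print([colour_of_field(i) for i in range(6)])
```

Six filters at 10 revolutions per second give exactly one filter per field, and each colour recurs at a third of the field rate, which is where the 20-Hz flicker comes from.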

The flickering black-and-white signal received from the spacecraft, or from the Moon’s surface, had to undergo extensive processing at Houston. In television studios of the Apollo era, it was crucial that the timing of the TV signal was extremely accurate and stable. In other words, the pulses within the signal that define the start of a line or field should occur with extreme regularity and precision. In addition, all equipment dealing with the signal had to agree when the lines and fields began – that is, they all had to be synchronised. This was a problem for Apollo because there was no provision to send synchronising pulses to the camera. In fact, there would have been no point incorporating such pulses because the Doppler shift caused by the changing velocity of the spacecraft or of the landing site with respect to

|

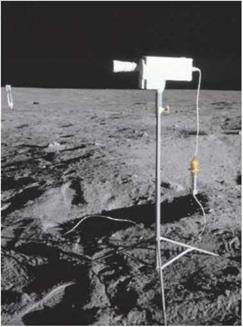

Apollo 16’s remotely-controlled colour TV camera mounted on the rover. Note the sunshade mounted over the lens. (NASA) |

|

The changing image from the Moon. Still frames from the TV coverage on Apollo 11 (left) and Apollo 17 (right). (NASA) |

the receiver on the turning Earth constantly altered the timing of the received TV signal anyway.

This was in the days before mass digital storage made the task of synchronising video simple. The engineers’ solution used two large videotape recorders to correct the signal’s timing. The first machine recorded the pictures coming from space and synchronised itself with the pulses that were built into the incoming television signal. However, instead of the tape going onto a take-up reel, it was passed directly to a second videotape machine which replayed its contents. This second machine took its timing reference from the local electronics so that when it reproduced the signal, it did so with the timing pulses synchronised with the TV station.
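The two-recorder arrangement behaves like a buffer that is filled at the incoming signal's irregular pace and emptied on the station's steady clock. The sketch below is a loose software analogy, not a model of the actual videotape hardware; the function name, the time units and the idea of treating each scanning line as a discrete item are all assumptions made for illustration.

```python
def retime_lines(arrival_times, local_period, start_delay):
    """Release each buffered line on a steady local clock tick.

    arrival_times: when each line arrived from space (irregular timing).
    local_period:  spacing of the local reference ticks.
    start_delay:   head start given to the buffer, playing the role of the
                   length of tape between the two machines (an assumption).
    Returns the output time of each line, which is perfectly regular.
    """
    output_times = []
    for index, arrived in enumerate(arrival_times):
        tick = start_delay + index * local_period
        if arrived > tick:
            # The buffer ran dry: the line was needed before it arrived.
            raise ValueError(f"line {index} had not arrived by local tick {tick}")
        output_times.append(tick)
    return output_times


if __name__ == "__main__":
    jittery = [0.0, 1.2, 1.9, 3.1]   # made-up arrival times
    print(retime_lines(jittery, local_period=1.0, start_delay=0.5))
```

The price of regular output is delay: the buffer has to hold enough material to absorb the worst timing error in the incoming signal.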

Once the timing had been sorted, a colour signal had to be derived from the three separate, sequential fields that represented red, blue and green. To achieve this, a magnetic disk recorder spinning at 3,600 rpm (once every 60th of a second) recorded the red, blue and green fields separately onto six tracks. From this disk, the appropriate fields could be read out simultaneously using multiple heads and combined conventionally to produce a standard colour television signal. Overall, the time required to undertake the processing put the image about 10 seconds behind the associated audio.
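The conversion from sequential to simultaneous colour can be sketched in software: keep the most recent field of each colour and, as every new field arrives, read all three out together. This is a simplified analogy to the disk recorder, not a description of how its six tracks were organised; the data layout (each field as a list of rows of brightness values), the function name and the assumption that the first field is red are all hypothetical. Interlace is ignored.

```python
FILTER_ORDER = ("red", "blue", "green")  # wheel order as given in the text


def sequential_to_simultaneous(fields):
    """Combine colour-sequential fields into simultaneous RGB pictures.

    fields: iterable of monochrome fields in wheel order, each a list of
            rows of brightness values (the first field is assumed red).
    Yields one picture per incoming field, as rows of (r, g, b) tuples,
    once a field of every colour has been stored.
    """
    latest = {}  # most recent field seen for each colour
    for index, field in enumerate(fields):
        latest[FILTER_ORDER[index % len(FILTER_ORDER)]] = field
        if len(latest) == len(FILTER_ORDER):
            yield [
                list(zip(red_row, green_row, blue_row))
                for red_row, green_row, blue_row in zip(
                    latest["red"], latest["green"], latest["blue"]
                )
            ]


if __name__ == "__main__":
    # Four tiny one-pixel fields: red, blue, green, then red again
    fields = [[[10]], [[20]], [[30]], [[40]]]
    for picture in sequential_to_simultaneous(fields):
        print(picture)
```

Because the three components of each output picture were captured at slightly different moments, anything moving quickly through the scene would show coloured fringes, an inherent property of any field-sequential scheme.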

Apollo 10 proved that a colour camera worked within the overall Apollo system, though Tom Stafford had to battle against a conservative NASA bureaucracy to get it there. On Apollo 12, colour TV was transmitted from the lunar surface for the first time – or at least it was until the camera was inadvertently aimed at the Sun. This destroyed part of the sensitive imaging tube and gave the commercial TV networks a headache while they scrambled for something to show the viewers!

The cameras for Apollos 11, 12 and 14 were merely placed on stands near the LM, which was acceptable as long as activity was centred around the lander, but when the Apollo 14 crew set off for their geological traverse they walked out of shot and left the audience watching an unchanging scene for several hours. It was clear that when lunar exploration stepped up a gear for the J-missions, the TV camera would have to be mounted on the lunar rover.