Matching the Tunnel to the Supercomputer

The use of sophisticated wind tunnels and their accompanying complex mathematical equations led observers early on to call aerodynamics the “science” of flight. There were three major methods of evaluating an aircraft or spacecraft: theoretical analysis, the wind tunnel, and full-flight testing. The order in which researchers used them varied. Ideally, researchers began with a theoretical goal, tested it in a wind tunnel, and confirmed the results in full-flight testing. At Langley, however, researchers sometimes reversed that sequence. They studied a problem first in flight, then moved to the wind tunnel for more extreme conditions, such as dangerous and unpredictable high speeds, and only then developed a theoretical framework. Uncertainty about the effects of Reynolds number lay at the root of the distrust of wind tunnel data, because a small-scale model in a tunnel often could not match the Reynolds number of a full-size aircraft in flight. Moreover, tunnel structures such as walls, struts, and supports affected the performance of a model in ways that were hard to quantify.[602]

A model of the X-43A and the Pegasus Launch Vehicle in the Langley 31-Inch Mach 10 Tunnel. NASA.

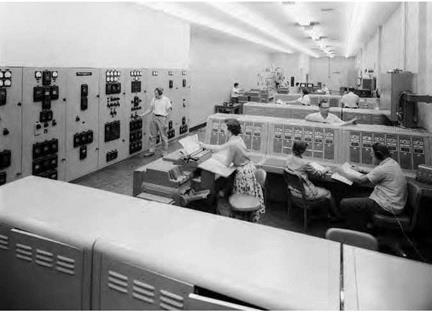

From the early days of the NACA and other aeronautical research facilities, an essential component of the science was the “computer.” Human computers, primarily women, worked laboriously through the myriad calculations needed to interpret the data generated in wind tunnel tests. Data acquisition became increasingly sophisticated as the NACA grew in the 1940s. The Langley Unitary Plan Wind Tunnel could collect pressure, force, and temperature data remotely and automatically from 85 locations at 64 measurements per second, far faster than manual collection. Computers processed the data and delivered them to researchers on monitors or automated plotters while the test was still under way. This near-instantaneous availability of test data was a leap beyond the manual (and visual) reading of industrial scales during testing.[603]

Beginning in the 1970s, computers could calculate fluid flows quickly and cheaply, which gave rise to what Baals and Corliss called the “electronic wind tunnel.”[604] Computers were no longer merely a tool for collecting and interpreting data faster. Able to perform billions of calculations in seconds to simulate test conditions mathematically, the new supercomputers could potentially do the job of the wind tunnel. In 1970, the Royal Aeronautical Society published The Future of Flight, which included an article on computers in aerodynamic design by Bryan Thwaites, a professor of theoretical aerodynamics at the University of London. His essay would be a clarion call for the rise of computational fluid dynamics (CFD) in the late 20th century.[605] Meanwhile, improvements in computers and algorithms drove down the operating time and cost of computational experiments, while the time and cost of operating wind tunnels had increased dramatically by 1980. The fundamental limitations of wind tunnels centered on age-old problems: model size and Reynolds number, temperature, wall interference, model support (“sting”) interference, unrealistic aeroelastic distortion of models under load, stream nonuniformity, and unrealistic turbulence levels. The use of test gases also produced problematic results, a concern in designing vehicles to fly in the atmospheres of other planets.[606]

The control panels of the Langley Unitary Wind Tunnel in 1956. NASA.

The work of researchers at NASA Ames influenced Thwaites’s assertions about the potential of CFD to benefit aeronautical research. Ames researcher Dean Chapman highlighted the new capabilities of supercomputers in his Dryden Lecture in Research for 1979 at the American Institute of Aeronautics and Astronautics Aerospace Sciences Meeting in New Orleans, LA, in January 1979. To Chapman, innovations in computer speed and memory had led to an “extraordinary cost reduction trend in computational aerodynamics,” while the cost of wind tunnel experiments had been “increasing with time.” He pointed out that a mere $1,000 and 30 minutes of computer time now sufficed to simulate numerically the flow over an airfoil. The same task in 1959 would have cost $10 million and taken 30 years to complete. Chapman made it clear that computers could cure the “many ills of wind-tunnel and turbomachinery experiments” while providing “important new technical capabilities for the aerospace industry.”[607]

The crowning achievement of the Ames work was the establishment of the Numerical Aerodynamic Simulation (NAS) Facility, which began operations in 1987. The facility’s Cray-2 supercomputer was capable of 250 million computations a second, and of 1.72 billion per second for short periods, with the possibility of expanding its sustained capacity to 1 billion computations per second. That capability reduced the time and cost of developing aircraft designs and enabled engineers to experiment with new designs without the expense of building a model and testing it in a wind tunnel. Ames researcher Victor L. Peterson said the new facility, and those like it, would allow engineers “to explore more combinations of the design variables than would be practical in the wind tunnel.”[608]

The impetus for the NAS program arose from several factors. First, its creation recognized that computational aerodynamics offered new capabilities in aeronautical research and development. Primarily, that meant using computers to complement wind tunnel testing, a use that placed heavy demands on those computer systems because the discipline was still young. Second, the NAS Facility reflected the Federal Government’s long-standing commitment to developing and using large-scale scientific computing systems, a commitment dating back to the use of the ENIAC for hydrogen bomb and ballistic missile calculations in the late 1940s.[609]

By the late 1980s, it was clear to NASA that supercomputers were part of the Agency’s future. Futuristic projects that involved NASA supercomputers included the National Aero-Space Plane (NASP), which had an anticipated speed of Mach 25; new main engines and a crew escape system for the Space Shuttle; and refined rotors for helicopters. Most importantly from the perspective of supplanting the wind tunnel, a supercomputer generated data and converted them into pictures that captured flow phenomena that could not previously be simulated.[610] In other words, the “mind’s eye” of the wind tunnel engineer could be captured on film.

Nevertheless, computer simulations were not about to replace the wind tunnel. At a meeting on the Integration of Computers and Wind Testing sponsored by the Advisory Group for Aerospace Research & Development (AGARD) in September 1980, Joseph G. Marvin, the chief of the Experimental Fluid Dynamics Branch at Ames, asserted that CFD was an “attractive means of providing that necessary bridge between wind-tunnel simulation and flight.” Before that could happen, however, a careful and critical program of comparison with wind tunnel experiments had to take place. In other words, the wind tunnel was the tool for verifying the accuracy of CFD.[611] Dr. Seymour M. Bogdonoff of Princeton University commented in 1988 that “computers can’t do anything unless you know what data to put in them.” The aerospace community still had to discover and document the key phenomena needed to realize the “future of flight” in the hypersonic and interplanetary regimes. Only then could those data be fed into the supercomputers.[612]

Researchers Victor L. Peterson and William F. Ballhaus, Jr., who worked in the NAS Facility, recognized the “complementary nature of computation and wind tunnel testing,” in which the “combined use” of the two captured the “strengths of each tool.” The wind tunnel was best for providing detailed performance data once a final configuration had been selected, especially in investigations involving complex aerodynamic phenomena. Computers helped researchers arrive at and analyze that final configuration in several ways. They aided the development of design concepts such as the forward-swept wing and the jet flap for lift augmentation, and they offered a more efficient way to choose the most promising designs for evaluation in the wind tunnel. Computers also made the instrumentation of test models easier and were used to correct wind tunnel data for scaling and interference errors.[613]