The largest and most densely populated habitat on Earth is the ocean realm. As Claire Nouvian observes, “The deep sea, which has been immersed in total darkness since the dawn of time. . . forms the planet’s largest habitat.” Zoologists and oceanographers are still far from documenting the full welter of sea life. “Current estimates about the number of species yet to be discovered vary between 10 and 30 million,” writes Nouvian. “By comparison, the number of known species populating the planet today, whether terrestrial, aerial, or marine, is estimated at about 1.4 million.”39 In the upper reaches of the ocean’s water column, down-welling light, whether sunlight or moonlight, can reveal an animal’s location or hideout. To avoid predators, marine animals largely forage for food at night, under cover of darkness. As a result, the ocean’s water column is the site of the largest daily animal migration known, with marine animals traveling vertically anywhere from tens of meters to a kilometer. Harbor Branch Oceanographic Institute scientist Marsh Youngbluth explains:

Each evening and morning all the oceans and even the lakes of the world are a theater to mass movements involving billions and billions of creatures swimming from deep waters to the surface and then back again to a colder, darker world. . . . Sixty years ago, when active sonar was first available, captains of fishing vessels thought that the bottom was rising under the boat. This phenomenon, called the “vertical migration,” is the largest synchronized animal movement on Earth. . . . Like nomads in deserts, they move to and from the surface waters in search of an oasis: the first 100m of the sea, where the sunlight still penetrates, the “photic” zone, abounding with food. . . . The signal that triggers and resets this ritual is the daily waning and waxing of sunlight, so travel frequently starts at dusk and ends at dawn.40

Although the deep sea is one place sunlight cannot reach, light and vision are no less critical there than for creatures on land. The Spookfish, for instance, lives at 1,000 meters’ depth in cold, dark Pacific Ocean waters and has reflective telescopes for eyes.41 Spookfish weren’t exactly the first astronomers, but unlike those of any other vertebrate, their eyes include crystal mirrors that focus light onto the retina. And some marine animals have evolved the equivalent of wearing Polaroid sunglasses: they can see polarized light, which helps them discern jellyfish and other nearly transparent species moving through the water column.

Deep below the coral reefs, ocean animals produce their own light to see and lure prey, signal distress or otherwise communicate, attract a mate, repel and stun predators, and countershade themselves.42 Nouvian contends that bioluminescence is the most common mode of communication on the planet. Approximately 90 percent of all ocean animals produce or deploy bioluminescence in some capacity. This living light is also referred to as cold body radiation or “cold light”: nearly 98 percent of the energy used to generate it goes into light, with only a minimal release of heat, presumably to protect the luminescent organism from detection by predators that sense infrared.

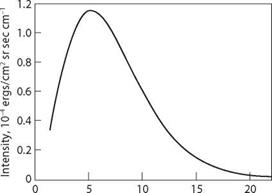

Bioluminescence has evolved independently some forty to fifty times, a testament to its clear survival advantages. While fireflies provide the most familiar example, only a handful of land-based organisms luminesce, including some fly larvae, earthworms, snails, mushrooms, and centipedes. In the ocean realm, bioluminescence is the rule rather than the exception. Ranking among the bioluminaries are bacteria, snails, krill, squid, eels, jellies, sponges, corals, clams, sea worms, Green Lanternsharks, Megamouth Sharks, and an array of fish. Inhabitants of mid-water habitats luminesce at varying intensities to countershade themselves, blending in with the down-welling light that is so informative in the ecology of the water column. Lanternfishes, of which there are at least two hundred species, can illuminate photophores along the entire length of their bodies for countershading. “Photophores are often highly evolved structures, consisting not only of a light-generating cell, but also a reflector, lens, and color filter,” explains Tony Koslow, an oceanographer at the Scripps Institution of Oceanography. “Most photophores emit light in the blue end of the spectrum to match the wavelength of down-welling light.”43

Viperfish and deep-sea anglerfishes have attached to their bodies a kind of fishing rod with a lighted lure that draws sizable prey directly into their toothy mouths. Marine biologist Edith Widder notes other deployments of bioluminescence in the ocean depths:

There are many fishes, shrimps, and squids that use headlights to search for prey and to signal to mates. . . . And as with some cars, some headlights can be rolled down and out of sight when they are not in use—a handy way of hiding that reflective surface and allowing the fish to better blend into the darkness. Most headlights in the ocean are blue, which is the color that travels furthest through seawater and the only color that most deep-sea animals can see. But there are some very interesting exceptions like dragonfish with red headlights that are invisible to most other animals but that the dragonfish can see and use like a sniper scope to sneak up on unsuspecting, unseeing prey.44

Deep-sea prawns and some squid emit bioluminescent fluids to startle predators and evade being eaten. Koslow reports, “Some jellyfishes jettison bioluminescing tentacles before making their escape, much as a lizard may let go its still-writhing tail to occupy its predator while effecting its escape.”45 Other jellyfish can spray their predators with a luminescent substance that makes the

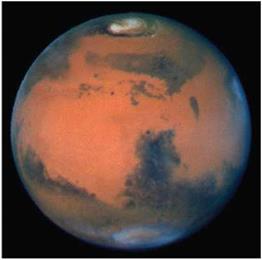

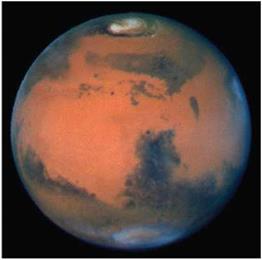

Plate 1. More than a century after Per – cival Lowell mapped Mars, the Hubble Space Telescope turned its best camera onto the red planet, producing this true color image in March 1997, just after the last day of Martian spring in the northern hemisphere. Mars was near its closest approach to Earth, 60 million kilometers away (NASA/David Crisp and the WFPC2 Camera Team).

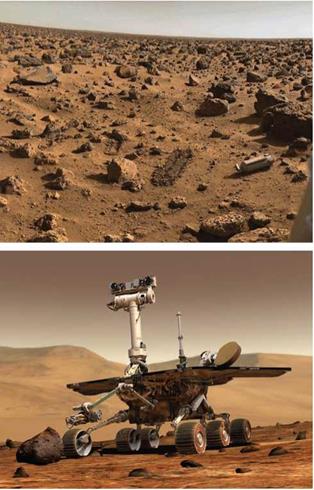

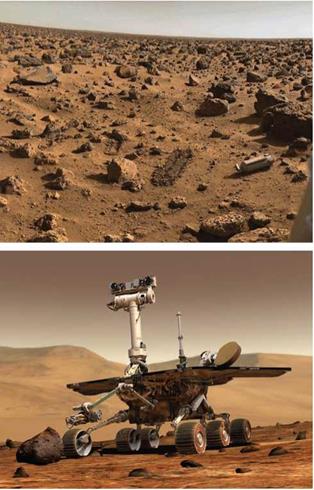

Plate 2. Mars as seen by the Viking 2 lander. Images like this dashed the more fevered speculation of Mars as a living planet stemming from Percival Lowell and subsequent science fiction; it was revealed as a frigid and arid desert with a tenuous atmosphere, where water cannot exist for more than a moment on the surface before evaporating (NASA/Mary Dale – Bannister, Washington University in St. Louis).

Plate 2. Mars as seen by the Viking 2 lander. Images like this dashed the more fevered speculation of Mars as a living planet stemming from Percival Lowell and subsequent science fiction; it was revealed as a frigid and arid desert with a tenuous atmosphere, where water cannot exist for more than a moment on the surface before evaporating (NASA/Mary Dale – Bannister, Washington University in St. Louis).

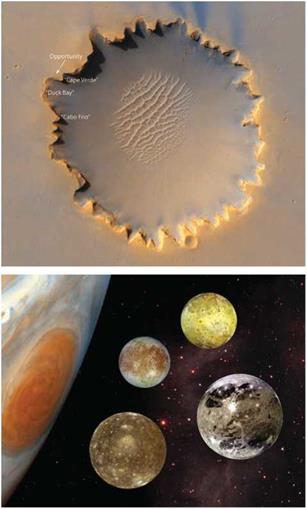

Plate 3. Artist’s conception of one of the Mars Exploration Rovers on Mars. Spirit and Opportunity each far exceeded their life expectancy and have performed at a very high level in the unforgiving conditions on the surface of Mars. The rovers were identical as pieces of hardware, yet had quite different experiences and adventures. Opportunity has exceeded its design lifetime by a factor of more than 35 (NASA/Jet Propulsion Laboratory).

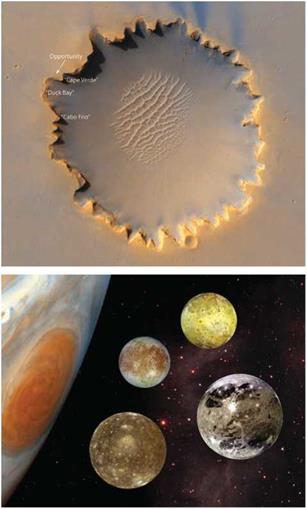

Plate 4. Victoria Crater at Meridiani Planum on Mars, which is about 730 meters in diameter. Opportunity spent over two years exploring the rim of the crater, occasionally venturing inside, and by mid-2011 had traveled over 20 miles across the Martian terrain. Opportunity had a lucky bounce on landing and extricated itself from the kind of sand dune that disabled Spirit (NASA/Jet Propulsion Laboratory).

Plate 5. The four Galilean moons of Jupiter, visited by the Voyager spacecraft in 1979. In decreasing size order, the moons are Ganymede, Callisto, Io, and Europa; Jupiter is not shown at the same scale. Ganymede is 5300 km in diameter. The spacecraft made many discoveries in the Jovian moon system, including volcanism on Io and a subsurface ocean on Europa (NASA Planetary Photojournal).

Plate 5. The four Galilean moons of Jupiter, visited by the Voyager spacecraft in 1979. In decreasing size order, the moons are Ganymede, Callisto, Io, and Europa; Jupiter is not shown at the same scale. Ganymede is 5300 km in diameter. The spacecraft made many discoveries in the Jovian moon system, including volcanism on Io and a subsurface ocean on Europa (NASA Planetary Photojournal).

Plate 6. Attached to the body of each Voyager spacecraft is a gold-plated, copper phonograph record. The record contains musical selections, images, and audio greetings in many world languages, along with instructions on how to retrieve the information. The analog technology will be very durable in the far reaches of space (NASA/Jet Propulsion Laboratory).

Plate 6. Attached to the body of each Voyager spacecraft is a gold-plated, copper phonograph record. The record contains musical selections, images, and audio greetings in many world languages, along with instructions on how to retrieve the information. The analog technology will be very durable in the far reaches of space (NASA/Jet Propulsion Laboratory).

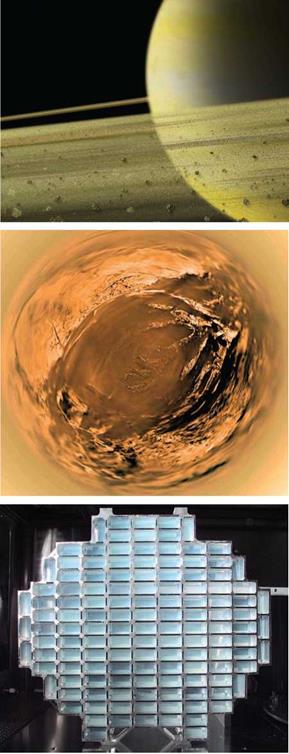

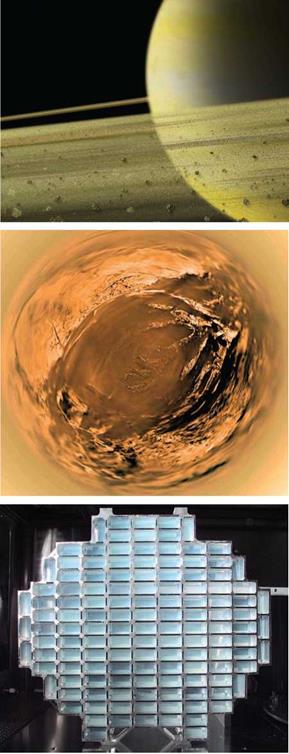

Plate 7. An artist’s impression of a close-up view of Saturn’s rings.

The rings are thought to be made of material unable to form a moon because of Saturn’s tidal forces, or to be debris from a moon that broke up due to tidal forces. The particles are made of ice and rock and range in size from less than a millimeter to tens of meters across (NASA/ Marshall Image Exchange).

The rings are thought to be made of material unable to form a moon because of Saturn’s tidal forces, or to be debris from a moon that broke up due to tidal forces. The particles are made of ice and rock and range in size from less than a millimeter to tens of meters across (NASA/ Marshall Image Exchange).

Plate 8. A panoramic view of Saturn’s moon Titan, from the Huygens lander during its descent to the surface in late 2005. In this fish-eye view from an altitude of three miles, a dark, sandy basin is surrounded by pale colored hills and a surface laced with stream beds and shallow bodies of liquid composed of methane and ethane (NASA/ESA/Descent Imager Team).

Plate 9. Stardust collected samples of a comet and interstellar dust samples using a particle collector with cells containing aerogel, which is an amorphous, silica-based material that is strong yet exceptionally light. Particles entered this solid foam at high speed and were decelerated and trapped. The spacecraft returned its samples to Earth in 2006 (NASA/Jet Propulsion Laboratory).

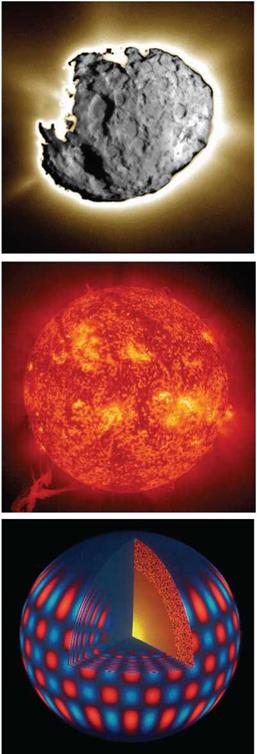

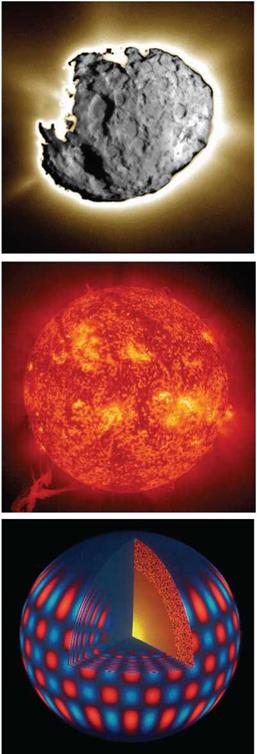

Plate 10. This composite image was taken of comet Wild 2 by Stardust during its close approach in early 2004. The comet is about 3 miles in diameter. Its surface is intensely active, with jets of gas and dust spewing millions of miles into space. This image is a hybrid: a short exposure captures the jet while a long exposure captures the surface features (NASA/Jet Propulsion Laboratory).

Plate 11. SOHO took this image of the Sun in January 2000. The relatively placid optical appearance belies the intense activity seen in this ultraviolet image, where a huge, twisting prominence has escaped the Sun’s surface. When these events are pointed at the Earth, telecommunications and power grids can be affected (NASA/SOHO).

Plate 11. SOHO took this image of the Sun in January 2000. The relatively placid optical appearance belies the intense activity seen in this ultraviolet image, where a huge, twisting prominence has escaped the Sun’s surface. When these events are pointed at the Earth, telecommunications and power grids can be affected (NASA/SOHO).

Plate 12. This computer representation shows one of millions of modes of sound wave oscillations of the Sun, where receding regions are colored red and approaching regions are colored blue. The Sun “rings” like a bell, with many complex harmonics, and study of the surface motions can be used to diagnose the interior regions (NSO/AURA/NSF).

Plate 13. People have used the sky as a map, a clock, a calendar, and as a cultural and spiritual backdrop since antiquity. This celestial map was produced in the seventeenth century by the Dutch cartographer Frederick de Wit. Constellations and star patterns are unchanging from generation to generation, so the stars were seen as being eternal (Wikimedia Commons/ Frederick de Wit).

Plate 14. We live in a city of stars, seen here in a full-sky panorama of the Milky Way photographed from Death Valley in California. The ragged band of light represents a view through the disk of the spiral galaxy we inhabit, and Hipparcos has mapped out the nearby regions of the disk and parts of the extended halo (U. S. National Park Service/Dan Duriscoe).

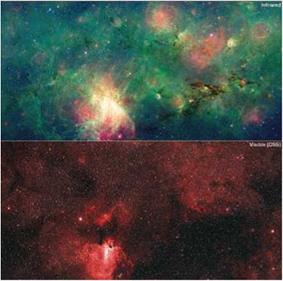

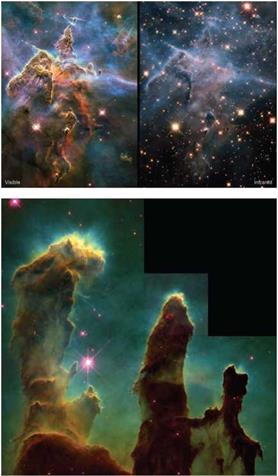

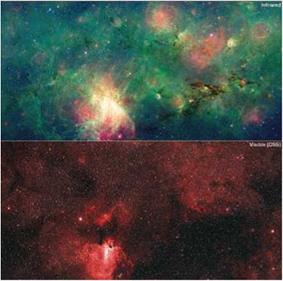

Plate 15. This Spitzer Space Telescope image shows star formation around the Omega Nebula, M17. This Messier object is a nebulosity around an open cluster of three dozen hot, young stars, about 5,000 light-years away. Spitzer records information at invisibly long wavelengths, and the difference between the view in the infrared (top) and the optical (bottom) can be dramatic. Colors in the infrared view represent different temperature regimes, with red coolest and blue hottest (NASA/JPL – Caltech/M. Povich).

Plate 15. This Spitzer Space Telescope image shows star formation around the Omega Nebula, M17. This Messier object is a nebulosity around an open cluster of three dozen hot, young stars, about 5,000 light-years away. Spitzer records information at invisibly long wavelengths, and the difference between the view in the infrared (top) and the optical (bottom) can be dramatic. Colors in the infrared view represent different temperature regimes, with red coolest and blue hottest (NASA/JPL – Caltech/M. Povich).

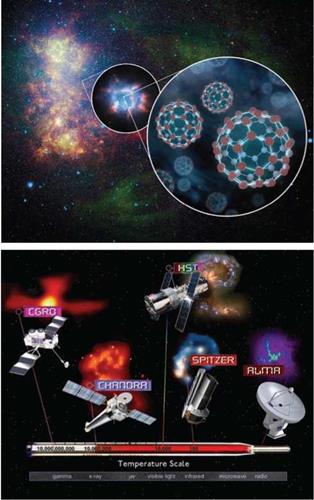

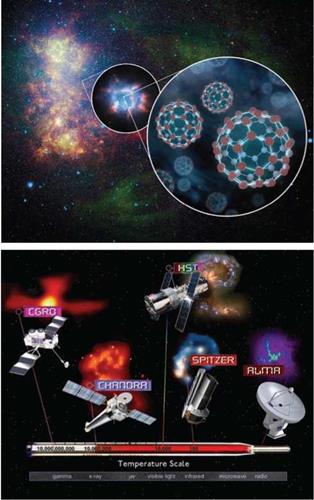

Plate 16. The Spitzer Space Telescope detected molecules of buckminsterfullerene, or “buckyballs,” in a nearby galaxy, the Small Magellanic Cloud. The first zoom shows the type of planetary nebula where the molecules were found, and the second shows the molecule structure, where sixty carbon atoms are arranged like a tiny soccer ball (NASA/SSC/Kris Sellgren).

Plate 17. NASA’s Great Observatories are multi-billion – dollar missions with complex instrument suites, designed to answer fundamental questions in all areas of astrophysics. Including the ground-based Atacama Large Millimeter Array (ALMA), they can diagnose the universe at temperatures ranging from tens to tens of billions of Kelvin (NASA/ CXC/M. Weiss).

Plate 17. NASA’s Great Observatories are multi-billion – dollar missions with complex instrument suites, designed to answer fundamental questions in all areas of astrophysics. Including the ground-based Atacama Large Millimeter Array (ALMA), they can diagnose the universe at temperatures ranging from tens to tens of billions of Kelvin (NASA/ CXC/M. Weiss).

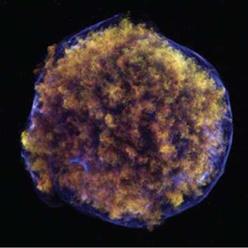

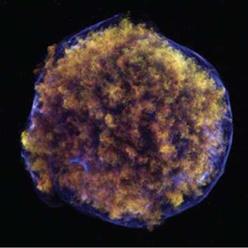

Plate 18. The dying star that produced this great bubble of hot glowing gas was first noted by Tycho Brahe in 1572. A white dwarf detonated as a supernova when mass falling in from a companion triggered its collapse; the shock wave from the subsequent explosion led to the blue arc. The surrounding material is iron-rich and highly excited iron atoms create spectral lines detectable at X-ray wavelengths (NASA/ CXC/Chinese Academy of Sciences/F. Lu).

Plate 18. The dying star that produced this great bubble of hot glowing gas was first noted by Tycho Brahe in 1572. A white dwarf detonated as a supernova when mass falling in from a companion triggered its collapse; the shock wave from the subsequent explosion led to the blue arc. The surrounding material is iron-rich and highly excited iron atoms create spectral lines detectable at X-ray wavelengths (NASA/ CXC/Chinese Academy of Sciences/F. Lu).

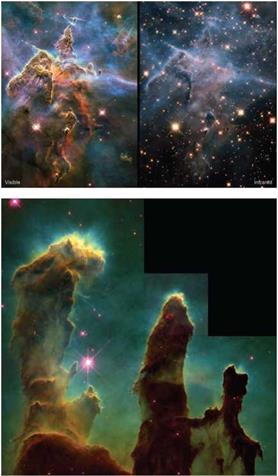

Plate 19. Two images of a pillar of star birth, three light-years high, in the Carina nebula, about 7,500 light-years away. Images taken through different filters select different wavelength ranges, which are combined into “true color” composites, where the colors convey astrophysical information in either visible light or infrared waves. Images like this have turned nebulae, galaxies, and clusters into “places” that resonate in the popular imagination (NASA/ESA/ STScI/M. Livio).

Plate 20. This image of the towering gas columns and bright knots of young stars seen in the Eagle Nebula (M16) was probably the first Hubble Space Telescope image to achieve widespread public recognition. It was part of the inspiration for the Hubble Heritage project, which showcases a different high-impact color image on the web each month. New worlds are being born at the tips of these fingers of hot gas (NASA/ESA/STScI/J. Hester/P. Scowen).

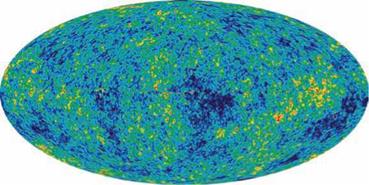

Plate 21. This exquisitely accurate map of the microwave sky, a projection of the celestial sphere onto a plane, shows the universe when it was a tiny fraction of its present age. The temperature variation between red and blue “speckles” is about a hundredth of a percent. The tiny variations, on angular scales of about a degree, represent the seeds for galaxy formation. It took a hundred million years or so for gravity to form the first galaxies (NASA/WMAP Science Team).

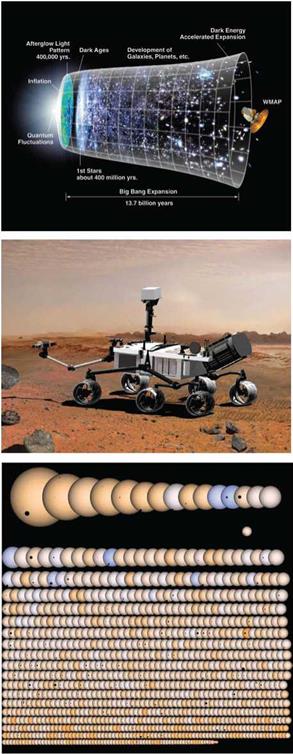

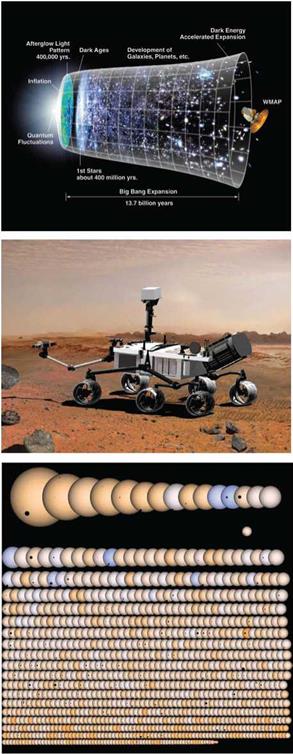

Plate 22. WMAP has played a major role in pushing the big bang model to the limit. The current model of the expanding universe posits an early epoch of inflation or exponential expansion, and subsequent expansion governed in turn by dark matter, causing deceleration, and more recently, dark energy, which is causing acceleration. WMAP has ushered in an era of “precision” cosmology (NASA/WMAP Science Team).

Plate 23. The Mars Science Laboratory, named Curiosity, will be exploring Mars for at least two years, starting with its landing in August 2012. The rover is the size of an SUV, compared to the Mars Exploration Rovers, which are the size of a golf cart, and the earlier Pathfinder, which is the size of a go – kart. Curiosity will study the past and present habitability of Mars by a detailed geochemical analysis of its rocks and atmosphere (NASA/ JPL-Caltech).

Plate 23. The Mars Science Laboratory, named Curiosity, will be exploring Mars for at least two years, starting with its landing in August 2012. The rover is the size of an SUV, compared to the Mars Exploration Rovers, which are the size of a golf cart, and the earlier Pathfinder, which is the size of a go – kart. Curiosity will study the past and present habitability of Mars by a detailed geochemical analysis of its rocks and atmosphere (NASA/ JPL-Caltech).

Plate 24. This montage of 1,235 exoplanet candidates from Kepler shows the planets projected against their parent stars, giving an idea of how they are detected by the slight dimming of the star’s light. By the end of its mission, Kepler will have collected enough data to be sensitive to Earth-like planets in Earth-like orbits of their stars, many of which are expected to be habitable (NASA/ Kepler Science Team).

predator in turn visible to fish looking for a quick meal. Medical researchers are only beginning to realize what we might learn from deep-sea bioluminaries. Off Puget Sound in the Pacific Northwest live the Aequorea victoria jellyfish, from which Green Fluorescent Protein (GFP) was first derived and used to generate other fluorescing marker proteins crucial in cancer and brain research and invaluable to cell biology and genetic engineering.46 What zoologists discover about the variety of species deploying bioluminescence in the deep ocean can help astrobiologists anticipate the life – forms that might illuminate the icy oceans of Europa, or alien seas on exoplanets orbiting other stars. To that end, Spitzer and other telescopes nightly scour the skies in search of other worlds.
