On TARGIT: Civil Aviation Crash Testing in the Desert

On December 1, 1984, a Boeing 720B airliner crashed near the east shore of Rogers Dry Lake. Although none of the 73 passengers walked away from the flaming wreckage, there were no fatalities. The occupants were plastic, anthropomorphic dummies, some of them instrumented to collect research data. There was no flight crew on board; the pilot was seated in a ground-based cockpit 6 miles away at NASA Dryden.

As early as 1980, Federal Aviation Administration (FAA) and NASA officials had been planning a full-scale transport aircraft crash demonstration to study impact dynamics and new safety technologies to improve aircraft crashworthiness. Initially dubbed the Transport Crash Test, the project was later renamed Transport Aircraft Remotely Piloted Ground Impact Test (TARGIT). In August 1983, planners settled on the name Controlled Impact Demonstration (CID). Some wags immediately twisted the acronym to stand for "Crash in the Desert” or "Cremating Innocent Dummies.”[954] In point of fact, no fireball was expected. One of the primary test objectives included demonstration of anti-misting kerosene (AMK) fuel, which was designed to prevent formation of a postimpact fireball. While many airplane crashes are surviv – able, most victims perish in postcrash fire resulting from the release of fuel from shattered tanks in the wings and fuselage. In 1977, FAA officials looked into the possibility of using an additive called Avgard FM-9 to reduce the volatility of kerosene fuel released during catastrophic crash events. Ground-impact studies using surplus Lockheed SP-2H airplanes

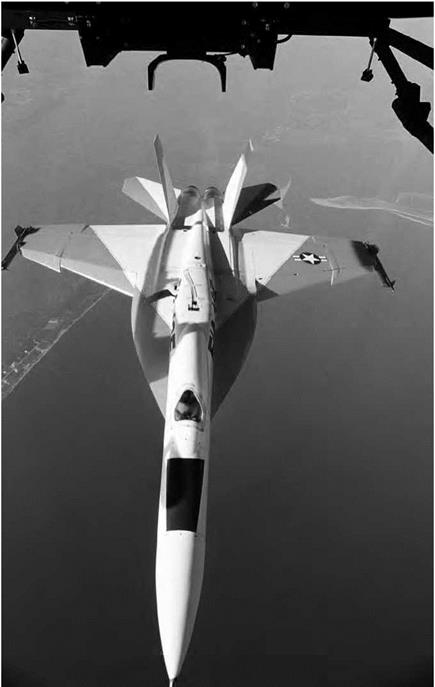

showed great promise, because the FM-9 prevented the kerosene from forming a highly volatile mist as the airframe broke apart.[955] As a result of these early successes, the FAA planned to implement the requirement that airlines add FM-9 to their fuel. Estimates made calculated that the impact of adopting AMK would have included a one-time cost to airlines of $25,000-$35,000 for retrofitting each high-bypass turbine engine and a 3- to 6-percent increase in fuel costs, which would drive ticket prices up by $2-$4 each. In order to definitively prove the effectiveness of AMK, officials from the FAA and NASA signed a Memorandum of Agreement in 1980 for a full-scale impact demonstration. The FAA was responsible for program management and providing a test aircraft, while NASA scientists designed the experiments, provided instrumentation, arranged for data retrieval, and integrated systems.[956] The FAA supplied the Boeing 720B, a typical intermediate-range passenger transport that entered airline service in the mid-1960s. It was selected for the test because its construction and design features were common to most contemporary U. S. and foreign airliners. It was powered by four Pratt & Whitney JT3C-7 turbine engines and carried 12,000 gallons of fuel. With a length of 136 feet, a 130-foot wingspan, and maximum takeoff weight of 202,000 pounds, it was the world’s largest RPRV. FAA Program Manager John Reed headed overall CID project development and coordination with all participating researchers and support organizations.

Researchers at NASA Langley were responsible for characterizing airframe structural loads during impact and developing a data-acquisition system for the entire aircraft. For planning purposes, impact forces during the demonstration were assumed to be survivable, with postimpact fire expected to pose the primary danger. Data to be gathered included measurements of structural, seat, and occupant response to impact loads, both to corroborate analytical models developed at Langley and to support development of a crashworthy seat and restraint system. Robert J. Hayduk managed NASA crashworthiness and cabin-instrumentation requirements.[957] Dryden personnel, under the direction of Marvin R. "Russ" Barber, were responsible for overall flight research management, systems integration, and flight operations. These included RPRV control and simulation, aircraft/ground interface, test and systems hardware integration, impact-site preparation, and flight-test operations.

The Boeing 720B was equipped to receive uplinked commands from the ground cockpit. Commands providing direct flight-path control were routed through the autopilot, while other functions were fed directly to the appropriate systems. Information on engine performance, navigation, attitude, altitude, and airspeed was downlinked to the ground pilot.[958] Commands from the ground cockpit were conditioned in control-law computers, encoded, and transmitted to the aircraft from either a primary or a backup antenna. Two antennas, one on the top of the Boeing 720B and one on the bottom, provided omnidirectional telemetry coverage, each feeding a separate receiver. The outputs of the two receivers were then combined into a single input to a decoder that processed uplink data and generated commands to the controls. Additionally, the flight engineer could select redundant uplink transmission antennas at the ground station. Three pulse-code modulation systems handled downlink telemetry: two for experimental data and one for aircraft control and performance data.

The airplane was equipped with two forward-facing television cameras (a primary color system and a black-and-white backup) to give the ground pilot sufficient visibility for situational awareness. Ten high-speed motion picture cameras photographed the interior of the passenger cabin to provide researchers with footage of seat and occupant motion during the impact sequence.[959] Prior to the final CID mission, 14 test flights were made with a safety crew on board. During these flights, 10 remote takeoffs, 13 remote landings (the initial landing was made by the safety pilot), and 69 CID approaches were accomplished. All remote takeoffs were flown from the Edwards Air Force Base main runway. Remote landings took place on the emergency recovery runway (lakebed Runway 25).

Research pilots for the project included Edward T. Schneider, Fitzhugh L. Fulton, Thomas C. McMurtry, and Donald L. Mallick.

William R. "Ray" Young, Victor W. Horton, and Dale Dennis served as flight engineers. The first flight, a functional checkout, took place on March 7, 1984. Schneider served as ground pilot for the first three flights, while two of the other pilots and one or two engineers acted as safety crew. These missions allowed researchers to test the uplink/downlink systems and autopilot, as well as to conduct airspeed calibration and collect ground-effect data. Fulton took over as ground pilot for the remaining flight tests, practicing the CID flight profile while researchers qualified the AMK system (the fire-retardant AMK had to pass through a degrader to convert it into a form the engines could burn) and tested data-acquisition equipment. The final pre-CID flight was completed on November 26. The stage was set for the controlled impact test.[960]

The CID crash scenario called for a symmetric impact before the airplane encountered any obstructions, as if the airliner were making a gear-up landing short of the runway or had aborted a takeoff. The remote pilot was to slide the airplane through a corridor of heavy steel structures designed to slice open the wings, spilling fuel at a rate of 20 to 100 gallons per second. A specially prepared surface, consisting of a rectangular grid of crushed rock peppered with powered electric landing lights, provided ignition sources on the ground, while two jet-fueled flame generators in the airplane's tail cone provided onboard ignition sources.

On December 1, 1984, the Boeing 720B was prepared for its final flight. The airplane had a gross takeoff weight of 200,455 pounds, including 76,058 pounds of AMK fuel. Fitz Fulton initiated takeoff from the remote cockpit and guided the Boeing 720B into the sky for the last time.[961] At an altitude of 200 feet, Fulton lined up on final approach to the impact site. He noticed that the airplane had begun to drift to the right of centerline, but not enough to warrant a missed approach. At 150 feet, he was fully committed to touchdown because the limited-duration photographic and data-collection systems had already been activated. He attempted to center the flight path with a left aileron input, which set up a lateral oscillation.

The Boeing 720B struck the ground 285 feet short of the planned impact point, with the left outboard engine contacting the ground first.
This caused the airplane to yaw during the slide, bringing the right inboard engine into contact with one of the wing openers, releasing large quantities of degraded (i.e., highly flammable) AMK, and exposing it to a high-temperature ignition source. Other obstructions sliced into the fuselage, allowing fuel to enter beneath the passenger cabin. The resulting fireball was spectacular.[962]

To casual observers, this might have made the CID project appear a failure, but such was not the case. The conditions prescribed for the AMK test were very narrow and failed to account for many variables, some of which the flight test illustrated. The results were sufficient to cause FAA officials to abandon the idea of requiring U.S. airlines to use AMK, but the CID provided researchers with a wide range of data for improving transport-aircraft crash survivability.

The experiment also provided significant information for improving RPV technology. The 14 test flights leading up to the final demonstration gave researchers an opportunity to verify analytical models, simulation techniques, RPV control laws, support software, and hardware. The remote pilot assessed the airplane's handling qualities, allowing programmers to update the simulation software and validate the control laws. All onboard systems were thoroughly tested, including AMK degraders, autopilot, brakes, landing gear, nose wheel steering, and instrumentation systems. The CID team also practiced emergency procedures, such as aborting the test and landing on a lakebed runway under remote control, and partially tested an uplinked flight termination system to be used if control of the airplane was lost. Several anomalies cropped up during these tests: intermittent loss of uplink signal, brief interruption of autopilot command inputs, and failure of the uplink decoder to pass commands. Modifications were implemented, and the anomalies never recurred.[963] Handling qualities were generally good. The ground pilot found landings to be a special challenge because of poor depth perception (a result of the low-resolution television monitor) and the lack of peripheral vision. The flight tests quickly showed the pilot that the CID profile was a high-workload task, partly because the tracking radar used in the guidance system was not accurate enough to meet the impact parameters. To compensate, the team made several attempts to improve the ground pilot's performance. These included changing the flight path to give the pilot more time to align his final trajectory, improving ground markings at the impact site, turning on the runway lights on the test surface, and providing a frangible 8-foot-high target on the centerline as a vertical reference. All of these measures were compromised to some degree by the low-resolution video monitor. After the impact flight, members of the control design team agreed that some form of head-up display (HUD) would have been helpful and that more of the piloting tasks should have been automated to alleviate pilot workload.[964] In terms of RPRV research, the project was considered highly successful.
The remote pilots accumulated 16 hours and 22 minutes of RPV experience in preparation for the impact mission, and the CID showed the value of comparing predicted results with flight-test data. U.S. Representative William Carney, ranking minority member of the House Transportation, Aviation, and Materials Subcommittee, observed the CID test. "To those who were disappointed with the outcome," he later wrote, "I can only say that the results dramatically illustrated why the tests were necessary. I hope we never lose sight of the fact that the first objective of a research program is to learn, and failure to predict the outcome of an experiment should be viewed as an opportunity, not a failure."[965]