Robert A. Rivers

The evolution of flight has witnessed the steady advancement of instrumentation to furnish safety and efficiency. Providing revolutionary enhancements to aircraft instrument panels for improved situational awareness, efficiency of operation, and mitigation of hazards has been a NASA priority for over 30 years. NASA’s heritage of research in synthetic vision has generated useful concepts, demonstrations of key technological breakthroughs, and prototype systems and architectures.

The connection of the National Aeronautics and Space Administration (NASA) to improving instrument displays dates to the advent of instrument flying, when James H. Doolittle conducted his “blind flying” experiments with the Guggenheim Flight Laboratory in 1929, in the era of the Ford Tri-Motor transport.[1121] Doolittle became the first pilot to take off, fly, and land entirely by instruments, his visibility being totally obscured by a canvas hood. At the time of this flight, Doolittle was already a world-famous airman, who had earned a doctorate in aeronautical engineering from the Massachusetts Institute of Technology and whose research on accelerations in flight constituted one of the most important contributions to interwar aeronautics. His formal association with the National Advisory Committee for Aeronautics (NACA) Langley Aeronautical Laboratory began in 1928. In the late 1950s, Doolittle became the last Chairman of the NACA and helped guide its transition into NASA.

The capabilities of air transport aircraft increased dramatically between the era of the Ford Tri-Motor of the late 1920s and the jetliners

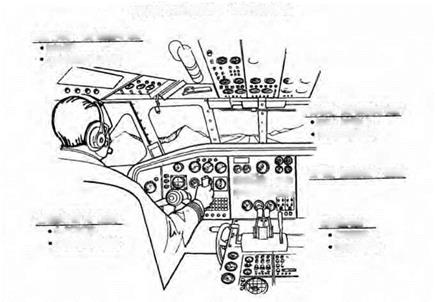

of the late 1960s. Passenger capacity increased thirtyfold, range by a factor of ten, and speed by a factor of five.[1122] But little changed in one basic area: cockpit presentations and the pilot-aircraft interface. As NASA Ames Research Center test pilot George E. Cooper noted at a seminal November 1971 conference held at Langley Research Center (LaRC) on technologies for future civil aircraft:

Controls, selectors, and dial and needle instruments which were in use over thirty years ago are still common in the majority of civil aircraft presently in use. By comparing the cockpit of a 30-year-old three-engine transport with that of a current four-engine jet transport, this similarity can be seen. However, the cockpit of the jet transport has become much more complicated than that of the older transport because of the evolutionary process of adding information by more instruments, controls, and selectors to provide increased capability or to overcome deficiencies. This trend toward complexity in the cockpit can be attributed to the use of more complex aircraft systems and the desire to extend the aircraft operating conditions to overcome limitations due to environmental constraints of weather (e. g., poor visibility, low ceiling, etc.) and of congested air traffic. System complexity arises from adding more propulsion units, stability and control augmentation, control automation, sophisticated guidance and navigation systems, and a means for monitoring the status of various aircraft systems.[1123]

Controls, selectors, and dial and needle instruments which were in use over thirty years ago are still common in the majority of civil aircraft presently in use. By comparing the cockpit of a 30-year-old three-engine transport with that of a current four-engine jet transport, this similarity can be seen. However, the cockpit of the jet transport has become much more complicated than that of the older transport because of the evolutionary process of adding information by more instruments, controls, and selectors to provide increased capability or to overcome deficiencies. This trend toward complexity in the cockpit can be attributed to the use of more complex aircraft systems and the desire to extend the aircraft operating conditions to overcome limitations due to environmental constraints of weather (e. g., poor visibility, low ceiling, etc.) and of congested air traffic. System complexity arises from adding more propulsion units, stability and control augmentation, control automation, sophisticated guidance and navigation systems, and a means for monitoring the status of various aircraft systems.[1123]

Assessing the state of available technology, human factors, and potential improvement, Cooper issued a bold challenge to NASA and the larger aeronautical community, noting: "A major advance during the 1970s must be the development of more effective means for systematically evaluating the available technology for improving the pilot-aircraft interface if major innovations in the cockpit are to be obtained during

PILOT-AIRCRAFT INTERFACE

PILOT-AIRCRAFT INTERFACE

KINESTHETIC CUES

KINESTHETIC CUES

G-FORCES

DISTURBANCES

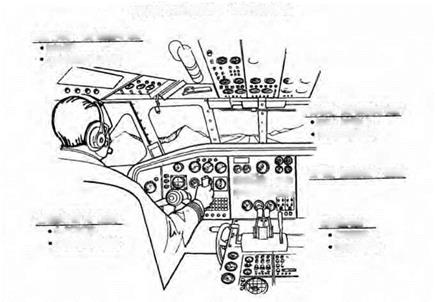

The pilot-aircraft interface, as seen by NASA pilot George E. Cooper, circa 1 971. Note the predominance of gauges and dials. NASA.

Cooper’s concept of an advanced multifunction electronic cockpit. Note the flightpath "highway in the sky” presentation. NASA.

the 1980s.”[1124] To illustrate his point, Cooper included two drawings, one representative of the dial-intensive contemporary jetliner cockpit presentation and the other of what might be achieved with advanced multifunction display approaches over the next decade.

At the same conference, Langley Director Edgar M. Cortright noted that, in the 6 years from 1964 through 1969, airline costs incurred by congestion-caused terminal area traffic delays had risen from less than $40 million to $160 million per year. He called this “symptomatic of the inability of many terminals to handle more traffic” but argued that “improved ground and airborne electronic systems, coupled with acceptable aircraft characteristics, would improve all-weather operations, permit a wider variety of approach paths and closer spacing, and thereby increase airport capacity by about 100 percent if dual runways were provided.”[1125] Langley avionics researcher G. Barry Graves noted the potential of revolutionary breakthroughs in cockpit avionics to improve the pilot-aircraft interface and take aviation operations and safety to a new level, particularly through the use of “computer-centered digital systems for both flight management and advanced control applications, automated communications, [and] systems for wide-area navigation and surveillance.”[1126]

But this early work generated little immediate response from the aviation community, as the requisite supporting technologies were not yet mature enough to permit their practical exploitation. Not until the 1980s, when the pace of computer graphics and simulation development accelerated, did a heightened interest develop in improving pilot performance in poor visibility conditions. Accordingly, researchers increasingly studied the application of artificial intelligence (AI) to flight deck functions, working closely with professional pilots from the airlines, the military, and the flight-test community. Although many exaggerated claims were made, given the relative immaturity of computing and AI at that time, researchers nevertheless recognized, as Sheldon Baron and Carl Feehrer wrote, that “one can conceive of a wide range of possible applications in the area of intelligent aids for flight crew.”[1127] Interviews with pilots revealed that “descent and approach phases accounted for the greatest amounts of workload when averaged across all system management categories,” a finding that stimulated efforts to develop what was then popularly termed a “pilot’s associate” AI system.[1128]

In this growing climate of interest, John D. Shaughnessy and Hugh P. Bergeron’s Single Pilot Instrument Flight Rules (SPIFR) project constituted a notable first step, inasmuch as SPIFR’s novel “follow-me box” showed promise as an intuitive aid for inexperienced pilots flying in instrument conditions. Subsequently, Langley’s James J. Adams conducted simulator evaluations of the display, confirming its potential.[1129] Building on these “follow-me box” developments, Langley’s Eric C. Stewart developed a concept for portraying an aircraft’s current and future desired positions. He created a synthetic display similar to the scene a driver sees while driving a car and combined it with highway-in-the-sky (HITS) displays.[1130] This so-called “E-Z Fly” project was incorporated into Langley’s General-Aviation Stall/Spin Program, a major contemporary study to improve the safety of general-aviation (GA) pilots and passengers. Numerous test subjects, from nonpilots to highly experienced test pilots, evaluated Stewart’s concept of HITS implementation. NASA flight-test reports illustrated both the challenges and the opportunities that the HITS/E-Z Fly combination offered.[1131]

E-Z Fly decoupled the flight controls of a Cessna 402 twin-engine general-aviation aircraft simulated in Langley’s GA Simulator, while HITS provided guidance to the pilot. This decoupling made the simulated airplane “easy to fly” but also reduced its responsiveness. Providing this level of HITS technology in a low-end GA aircraft posed a range of technical, economic, implementation, and operational challenges. As a flight-test report stated, “The concept of placing inexperienced pilots in the National Airspace System has many disadvantages. Certainly, system failures could have disastrous consequences.”[1132] Nevertheless, the basic technology was sound and helped set the stage for future projects. In the early 1990s, NASA Langley was developing the infrastructure to support wide-ranging research into synthetically driven flight deck displays for GA, commercial and business aircraft (CBA), and NASA’s High-Speed Civil Transport (HSCT).[1133] The initial, limited idea of easing the workload for low-time pilots would lead to sophisticated display systems that would revolutionize the flight deck. Ultimately, in 1999, a dedicated, well-funded Synthetic Vision Systems Project was created under NASA’s Aviation Safety Program (AvSP), headed by Daniel G. Baize. Inspired by Langley researcher Russell V. Parrish, researchers accomplished a number of comprehensive and successful GA and CBA flight and simulation experiments before the project ended in 2005. These complex, highly organized, and efficiently interrelated experiments pushed the state of the art in aircraft guidance, display, and navigation systems.

Significant work on synthetic vision systems and sensor fusion issues was also undertaken at the NASA Johnson Space Center (JSC) in the late 1990s, as researchers grappled with the challenge of developing displays for ground-based pilots controlling the proposed X-38 reentry test vehicle. As discussed later, through a NASA-contractor partnership they developed a highly efficient sensor fusion technique whereby real-time video signals could be blended with synthetically derived scenes using a laptop computer. After cancellation of the X-38 program, JSC engineer Jeffrey L. Fox and Michael Abernathy of Rapid Imaging Software, Inc. (RIS), which had developed the sensor fusion technology for the X-38 program under a small business contract, continued to expand on these initial successes together with Michael L. Coffman of the Federal Aviation Administration (FAA). Later joined by astronaut Eric C. Boe and the author, formerly a project pilot on a number of LaRC Synthetic Vision Systems (SVS) programs, this partnership accomplished four significant flight-test experiments using JSC and FAA aircraft, motivated by a shared belief in the value of SVS technology for increasing flight safety and efficiency.

Synthetic Vision Systems research at NASA continues today at various levels. After the SVS project ended in 2005, almost all team members continued building upon its accomplishments, transitioning to the new Integrated Intelligent Flight Deck Technologies (IIFDT) project, “a multi-disciplinary research effort to develop flight deck technologies that mitigate operator-, automation-, and environment-induced hazards.”[1134] IIFDT constituted both a major element of NASA’s Aviation Safety Program and a crucial underpinning of the Next Generation Air Transportation System (NGATS), and it was itself dependent upon the maturation of SVS begun within the project that concluded in 2005. While much work remains to be done to fulfill the vision, expectations, and promise of NGATS, the principles and practicality of SVS and its application to the cockpit have been clearly demonstrated.[1135] The following account traces SVS research, as seen from the perspective of a NASA research pilot who participated in key efforts that demonstrated its potential and value for professional civil, military, and general-aviation pilots alike.

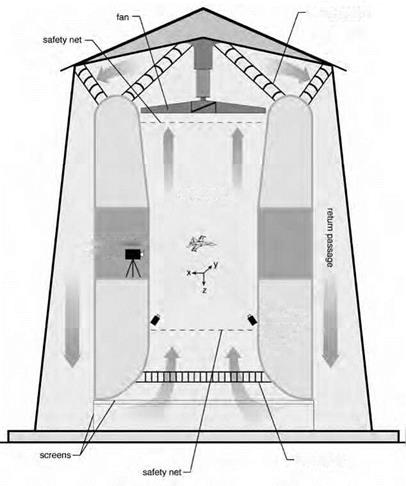

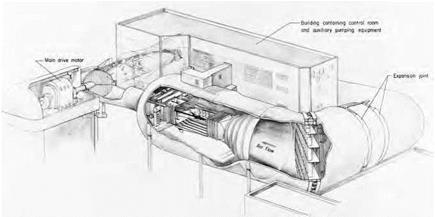
