Sensor Fusion Arrives

Integrating an External Vision System (XVS) was an overarching goal of the HSR program. The XVS would include advanced television and infrared cameras, passive millimeter-wave radar, and other cutting-edge sensors, fused with an onboard database of navigation information, obstacles, and topography. It would thus furnish the aircrew with a complete, synthetically derived view and associated display symbologies in real time. The pilot would be presented with a visual meteorological conditions view of the world on a large display screen in the flight deck, simulating a front window. Regardless of actual ambient meteorological conditions, the pilot would thus “see” a clear daylight scene, made possible by combining appropriate sensor signals; synthetic scenes derived from the high-resolution terrain, navigation, and obstacle databases; and head-up symbology (airspeed, altitude, velocity vector, etc.) provided by symbol generators. Precise GPS navigation input would complete the system. All of these inputs would be processed and displayed in real time (on the order of 20–30 milliseconds) on the large “virtual window” displays. Langley did not develop the sensor fusion technology before the HSR program was terminated and, as a result, moved toward integrating the synthetic, database-derived view with sophisticated display symbologies, redefining the implementation of the primary flight display and navigation display. Part of the problem with developing the sensor fusion algorithms was the perceived need for large, expensive computers. Langley continued on this path when the Synthetic Vision Systems (SVS) project was initiated under NASA’s Aviation Safety Program in 1999 and achieved remarkable results in SVS architecture, display development, human factors engineering, and flight deck integration in both GA and CBA domains.[1164]

Simultaneously with these efforts, JSC was developing the X-38 unpiloted lifting body/parafoil recovery reentry vehicle. The X-38 was a technology demonstrator for a proposed orbital crew rescue vehicle that could, in an emergency, return up to seven astronauts to Earth: a veritable space-based lifeboat. NASA planners had forecast a need for such a rescue craft in the early days of planning for Space Station Freedom (subsequently the International Space Station). Under a Langley study program for the Space Station Freedom Crew Emergency Rescue Vehicle (CERV, later shortened to CRV), Agency engineers and research pilots had undertaken extensive simulation studies of one candidate shape, the HL-20 lifting body, whose design was based on the general aerodynamic shape of the Soviet Union’s BOR-4 subscale spaceplane.[1165] The HL-20 did not proceed beyond these tests and a full-scale mockup. Instead, Agency attention turned to another escape vehicle concept, sponsored by NASA’s Johnson Space Center, whose shape was essentially identical to that of the nearly four-decade-old Martin SV-5D hypersonic lifting reentry test vehicle. The Johnson configuration spawned its own two-phase demonstrator research effort under the X-38 program: the first phase, a series of subsonic drop shapes air-launched from NASA’s NB-52B Stratofortress, and the second, an orbital reentry shape to be test-launched from the Space Shuttle from a high-inclination orbit. But while tests of the former did occur at the NASA Dryden Flight Research Center (DFRC) in the late 1990s, the orbital craft never proceeded to full development and orbital test.[1166]

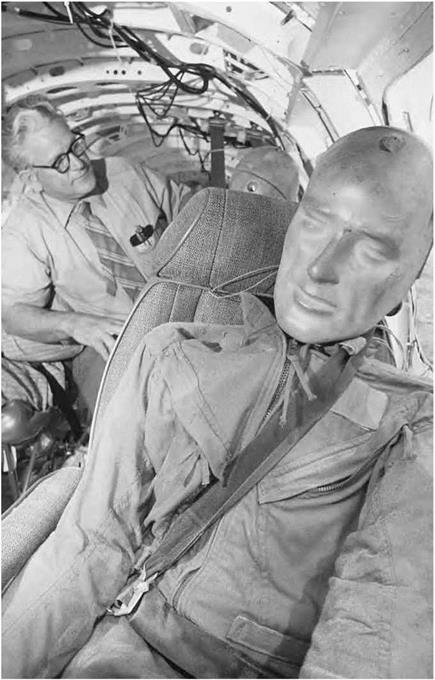

To remotely pilot this vehicle during its flight-testing at Dryden, project engineers were developing a system displaying the required navigation and control data. Television cameras in the nose of the X-38 provided a data link video signal to a control flight deck on the ground. Video signals alone, however, were insufficient for the remote pilot to perform all the test and control maneuvers, including “flap turns” and “heading hold” commands during the parafoil phase of flight. More information on the display monitor would be needed. Further complications arose because of the design of the X-38: the crew would be lying on their backs, looking at displays on the “ceiling” of the vehicle. Accordingly, a team led by JSC X-38 Deputy Avionics Lead Frank J. Delgado was tasked with developing a display system allowing the pilot to control the X-38 from a perspective rotated 90 degrees from the vehicle’s direction of travel. On the cockpit design team were NASA astronauts Rick Husband (subsequently lost in the Columbia reentry disaster), Scott Altman, and Ken Ham, and JSC engineer Jeffrey Fox.

Delgado solicited industry assistance with the project. Rapid Imaging Software, Inc. (RIS), a firm already working with imaginative synthetic vision concepts, received a Phase II Small Business Innovation Research (SBIR) contract to develop the display architecture. RIS subsequently developed LandForm VisualFlight, which blended “the power of a geographic information system with the speed of a flight simulator to transform a user’s desktop computer into a ‘virtual cockpit.’”[1167] It consisted of “symbology fusion” software and 3-D “out-the-window” and NAV display presentations running on a standard Microsoft Windows-based central processing unit (CPU). JSC and RIS were thus on a path toward true sensor fusion, blending a full SVS database with live video signals. The system required a remote, ground-based control cockpit, so Jeff Fox procured an extended van from the JSC motor pool. This vehicle, officially known as the X-38 Remote Cockpit Van, was nicknamed the “Vomit Van” by the poor souls who rode around in it lying on their backs, practicing flying a simulated X-38. By spring 2002, JSC was flying the X-38 from the Remote Cockpit Van using an SVS NAV display, an SVS out-the-window display, and a video display developed by RIS. NASA astronaut Ken Ham judged it as furnishing the “best seat in the house” during X-38 glide flights.[1168]

Indeed, during the X-38 testing, a serendipitous event demonstrated the value of sensor fusion. After release from the NASA NB-52B Stratofortress, the lens of the onboard X-38 television camera became partially covered in frost, occluding over 50 percent of the field of view (FOV). This would have proved problematic for the pilot had orienting symbology not been available in the displays. Synthetic symbology, including spatial entities identifying keep-out zones and runway outlines, provided the pilot with a synthetic scene replacing the occluded camera image. This foreshadowed the concept of sensor fusion, in which, for example, blooming as the camera traversed the Sun could be “blended” out, and haze obscuration could be minimized by adjusting the degree of synthetic blend from 0 to 100 percent.[1169]
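The idea of a synthetic blend adjustable from 0 to 100 percent can be illustrated with a simple per-pixel linear (alpha) blend. The following is a minimal sketch under stated assumptions, not the method used in the RIS software: it assumes the camera and synthetic frames are already registered to the same viewpoint and resolution, represents them as hypothetical 8-bit grayscale frames stored as nested lists, and introduces a hypothetical `blend_frames` function with an optional `occluded` mask that stands in for a region such as the frosted part of the X-38 lens.

```python
from typing import List, Optional

# Hypothetical frame format (an assumption for illustration):
# rows of 8-bit grayscale pixel values (0-255).
Frame = List[List[int]]
Mask = List[List[bool]]


def blend_frames(camera: Frame, synthetic: Frame, synthetic_weight: float,
                 occluded: Optional[Mask] = None) -> Frame:
    """Mix a live camera frame with a synthetic frame, pixel by pixel.

    synthetic_weight runs from 0.0 (camera only) to 1.0 (synthetic only),
    i.e. a 0 to 100 percent synthetic blend. Both frames are assumed to be
    already registered to the same viewpoint and resolution.
    """
    if not 0.0 <= synthetic_weight <= 1.0:
        raise ValueError("synthetic_weight must be between 0.0 and 1.0")
    if len(camera) != len(synthetic) or any(
            len(c_row) != len(s_row) for c_row, s_row in zip(camera, synthetic)):
        raise ValueError("camera and synthetic frames must have the same size")

    blended: Frame = []
    for r, (cam_row, syn_row) in enumerate(zip(camera, synthetic)):
        out_row = []
        for c, (cam_px, syn_px) in enumerate(zip(cam_row, syn_row)):
            # Pixels flagged as unusable (hypothetical mask, e.g. frost on the
            # lens) come entirely from the synthetic scene; all other pixels
            # use the global blend weight.
            weight = 1.0 if occluded is not None and occluded[r][c] else synthetic_weight
            value = (1.0 - weight) * cam_px + weight * syn_px
            # Round and clamp back into the 8-bit range.
            out_row.append(max(0, min(255, round(value))))
        blended.append(out_row)
    return blended


camera = [[200, 100], [0, 50]]
synthetic = [[0, 100], [255, 150]]
print(blend_frames(camera, synthetic, 0.25))  # prints [[150, 100], [64, 75]]
```

A single global weight plays the role of the 0 to 100 percent blend control, while the per-pixel mask shows how an obscured portion of the camera image could be replaced outright by synthetic imagery. Registering live video to the synthetic view, which this sketch simply assumes has been done, is the harder part of any real fusion system.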

But then, on April 29, 2002, faced with rising costs for the International Space Station, NASA canceled the X-38 program.[1170] Surprisingly, the cancellation did not have the deleterious impact upon sensor fusion development that might have been anticipated. Instead, program members Jeff Fox and Eric Boe secured temporary support via the Johnson Space Center Director’s discretionary fund to keep the X-38 Remote Cockpit Van operating. Mike Abernathy, president of RIS, was eager to continue his company’s sensor fusion work and supported their efforts, as did Patrick Laport of Aerospace Applications North America (AANA). For the next 2 years, Fox and electronics technician James B. Secor continued to improve the van on a not-to-interfere basis with their other duties. In July 2004, Fox secured further Agency funding to convert the remote cockpit, renamed at Boe’s suggestion the Advanced Cockpit Evaluation System (ACES). It was rebuilt with a single upright seat and a 180-degree FOV visual system using five large surplus monitors. An array of five cameras was mounted on the roof of the van, and their inputs could be blended in real time with new RIS software to form a complete sensor fusion package for the wraparound monitors or a helmet-mounted display.[1171] Subsequent tests with this van demonstrated true sensor fusion. The team now looked for another flight project with which to demonstrate the value of SVS.

Its first opportunity came in November 2004, at Creech Air Force Base in Nevada. Formerly known as Indian Springs Auxiliary Air Field, a backwater corner of the Nellis Air Force Base range, Creech had risen to prominence after the attacks of 9/11 as the Air Force’s center of excellence for unmanned aerial vehicle (UAV) operations. Its showcase was the General Atomics Predator UAV, which, modified as a Hellfire-armed attack system, had proven a vital component of the global war on terrorism. With UAV capabilities increasing dramatically, it was natural that the UAV community at Nellis would be interested in the work of the ACES team. Traveling to Nevada to demonstrate its technology to the Air Force, the JSC team used the ACES van in a flight-following mode, receiving downlink video from a Predator UAV. That video was then blended with synthetic terrain database inputs to provide a 180-degree FOV scene for the pilot. The Air Force’s Predator pilots found the ACES system far superior to the narrow-view perspective they then had available for landing the UAV.

In 2005, astronaut Eric Boe began training for a Shuttle flight and left the group. He was replaced by the author, who had spent over 10 years at Langley as a project or research pilot on all of that Center’s SVS and XVS projects. The author had transferred from Langley to JSC in 2004 as a research pilot and learned of JSC’s SVS work from Boe. His involvement with the JSC group linked the Langley and JSC SVS efforts, as he brought his experience with Langley’s SVS research to the JSC group.

That spring, a former X-38 cooperative student, Michael Coffman (now an engineer at the FAA’s Mike Monroney Aeronautical Center in Oklahoma City), serendipitously visited Fox at JSC. They discussed using the sensor fusion technology for the FAA’s flight-check mission. Coffman, Fox, and Boe briefed Thomas C. Accardi, Director of Aviation Systems Standards at FAA Oklahoma City, on the sensor fusion work at JSC, and he was interested in its possibilities. Fox seized this opportunity to establish a memorandum of understanding (MOU) among the Johnson Space Center, the Mike Monroney Aeronautical Center, RIS, and AANA. All parties would work on a quid pro quo basis, sharing intellectual and physical resources where appropriate, without funding necessarily changing hands. Signed in July 2005, this arrangement was unique in its scope and, as will be seen, in its ability to let contractors and Government agencies work together without cost. JSC and FAA Oklahoma City management had complete trust in their employees, and both RIS and AANA were willing to work without compensation, predicated on their faith in their product and the likely return on their investment: effectively a Skunk Works approach taken to the extreme. The stage was set for major SVS accomplishments, for during this same period, huge strides in SVS development had been made at Langley, which is where this narrative now returns.[1172]