Emergent Hypersonic Technology and the Onset of the Missile Era

The ballistic missile and atomic bomb became realities within a year of each other. At a stroke, the expectation arose that one might increase the range of the former to intercontinental distance and, by installing an atomic tip, generate a weapon (and a threat) of almost incomprehensible destructive power. But such visions ran afoul of perplexing technical issues involving rocket propulsion, guidance, and reentry. Engineers knew they could do something about propulsion, but guidance posed a formidable challenge. MIT’s Charles Stark Draper was pursuing inertial guidance, but he could not yet approach the Air Force requirement, which set an allowed miss distance of only 1,500 feet at a range of 5,000 miles for a ballistic missile warhead.[554]
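To give a feel for how demanding that requirement was, the short sketch below converts the allowed miss distance into a fraction of the range and into an equivalent small-angle pointing error. It is only a back-of-the-envelope illustration: it assumes the source's "miles" are statute miles, and it treats the whole miss as a single angular error, whereas a real guidance error budget would be split among several velocity and position errors at burnout. The function name `angular_tolerance` is hypothetical.

```python
import math

FEET_PER_STATUTE_MILE = 5280  # assumption: "miles" in the text are statute miles


def angular_tolerance(miss_ft, range_miles):
    """Return (miss/range ratio, equivalent small-angle error in arcseconds)."""
    range_ft = range_miles * FEET_PER_STATUTE_MILE
    ratio = miss_ft / range_ft             # dimensionless; also radians for small angles
    arcsec = math.degrees(ratio) * 3600.0  # radians -> degrees -> arcseconds
    return ratio, arcsec


ratio, arcsec = angular_tolerance(1500, 5000)
print(f"miss/range = 1 part in {1 / ratio:,.0f}")
print(f"equivalent pointing error = {arcsec:.1f} arcseconds")
```

Under these assumptions the requirement works out to roughly 1 part in 17,600 of the range, or a little under 12 arcseconds.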

Reentry posed an even more daunting prospect. A reentering 5,000-mile-range missile would reach 9,000 kelvins, hotter than the solar surface, while its kinetic energy would vaporize five times its weight in iron.[555] Rand Corporation studies encouraged Air Force and industry missile studies. Convair engineers, working under Karel J. “Charlie” Bossart, began development of the Atlas ICBM in 1951. Even with this seemingly rapid implementation of the ballistic missile idea, time scales remained long term. As late as October 1953, the Air Force declared that it would not complete research and development until “sometime after 1964.”[556]

Matters changed dramatically immediately after the Castle Bravo nuclear test on March 1, 1954, a weaponizable 15-megaton H-bomb, fully 1,000 times more powerful than the atomic bomb that devastated Hiroshima less than a decade previously. The "Teapot Committee,” chaired by the Hungarian emigree mathematician John von Neumann, had anticipated success with Bravo and with similar tests. Echoing Bruno Augenstein of the Rand Corporation, the Teapot group recom-

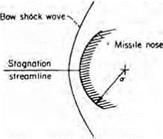

It ia well known that for any truly blunt body, the bow shock wave is detached and there exists a stagnation point at the nose. Consider conditions at this point and assume that the local radius of curvature of the body is a (see sketch).

|

|

The bow shock wave is normal to the stagnation streamline and converts the supersonic flow ahead of the shock to a low subsonic speed flow at high static temperature downstream of the shock. Thus, it is suggested that conditions near the stagnation point may be investigated by treating the nose section as if it were a segment of a sphere in a subsonic flow field.

Extract of text from NACA Report 1381 (1953), in which H. Julian Allen and Alfred J. Eggers

postulated using a blunt-body reentry shape to reduce surface heating of a reentry body. NASA.

mended that the Atlas miss distance should be relaxed "from the present 1,500 feet to at least two, and probably three, nautical miles.”[557] This was feasible because the new H-bomb had such destructive power that such a "miss” distance seemed irrelevant. The Air Force leadership concurred, and only weeks after the Castle Bravo shot, in May 1954, Vice Chief of Staff Gen. Thomas D. White granted Atlas the service’s highest developmental priority.

But there remained the thorny problem of reentry. Only recently, most people had expected an ICBM nose cone to possess the needle-nose sharpness of futurist and science fiction imagination. The realities of aerothermodynamic heating at near-orbital speeds dictated otherwise. In 1953, NACA Ames aerodynamicists H. Julian Allen and Alfred Eggers concluded that an ideal reentry shape should be bluntly rounded, not sharply streamlined. A sharp nose produced a very strong attached shock wave, resulting in high surface heating. In contrast, a blunt nose generated a detached shock standing much farther off the nose surface, allowing the airflow to carry away most of the heat. What heating remained could be alleviated via radiative cooling or by using hot structures and high-temperature coatings.[558]

There was a need for experimental verification of blunt body theory, but the hypersonic wind tunnel, previously so useful, was suddenly inadequate, much as the conventional wind tunnel a decade earlier had been inadequate for obtaining the fullest understanding of transonic flows. As the slotted throat tunnel had replaced it, so now a new research tool, the shock tube, emerged for hypersonic studies. Conceived by Arthur Kantrowitz, a Langley veteran working at Cornell, the shock tube enabled far closer simulation of hypersonic pressures and temperatures. From the outset, Kantrowitz aimed at orbital velocity, writing in 1952 that “it is possible to obtain shock Mach numbers in the neighborhood of 25 with reasonable pressures and shock tube sizes.”[559]

Despite the advantages of blunt body design, the hypersonic environment remained so extreme that it was still necessary to furnish thermal protection to the nose cone. The answer was ablation: covering the nose with a lightweight coating that melts and flakes off to carry away the heat. Wernher von Braun’s U.S. Army team invented ablation while working on the Jupiter intermediate-range ballistic missile (IRBM), though General Electric scientist George Sutton made particularly notable contributions. He worked for the Air Force, which built and successfully protected a succession of ICBMs: Atlas, Titan, and Minuteman.[560]

A Jupiter IRBM launches from Cape Canaveral on May 18, 1958, on an ablation reentry test. U.S. Army.

Flight tests were critical for successful nose cone development, and they began in 1956 with launches of the multistage Lockheed X-17. It rose high into the atmosphere before firing its final test stage back at Earth, ensuring a high heat load, as the test nose cone would typically attain velocities of at least Mach 12 at only 40,000 feet. This was half the speed of a satellite, at an altitude typically traversed by today’s subsonic airliners. In the pre-ablation era, the warheads typically burned up in the atmosphere, making the X-17 effectively a flying shock tube whose nose cones lived only long enough to return data by telemetry. Yet out of such limited beginnings (analogous to the rudimentary test methodologies of the early transonic and supersonic era just a decade previously) came a technical base that swiftly resolved the reentry challenge.[561]
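The comparison between Mach 12 at 40,000 feet and satellite speed can be checked with a rough calculation. The sketch below is illustrative only. It assumes standard-atmosphere conditions (40,000 feet lies in the isothermal layer at about 216.65 K), ideal-gas air, and a low-Earth-orbit speed of about 7,800 m/s. None of these figures comes from the source, and the names are hypothetical.

```python
import math

GAMMA = 1.4             # ratio of specific heats for air (ideal-gas assumption)
R_AIR = 287.05          # specific gas constant of air, J/(kg K)
T_40K_FT = 216.65       # assumed standard-atmosphere temperature at 40,000 ft, K
ORBITAL_SPEED = 7800.0  # assumed low-Earth-orbit speed, m/s


def speed_of_sound(temp_k):
    """Ideal-gas speed of sound in air at the given temperature, in m/s."""
    return math.sqrt(GAMMA * R_AIR * temp_k)


def mach_to_speed(mach, temp_k):
    """Convert a Mach number to a speed in m/s at the given air temperature."""
    return mach * speed_of_sound(temp_k)


v = mach_to_speed(12, T_40K_FT)
fraction = v / ORBITAL_SPEED
print(f"Mach 12 at 40,000 ft is about {v:,.0f} m/s")
print(f"fraction of assumed orbital speed: {fraction:.2f}")
```

With these assumed values, Mach 12 comes to roughly 3.5 km/s, a little under half of the assumed orbital speed, consistent with the text's round figure.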

Tests followed with various Army and Air Force ballistic missiles. In August 1957, a Jupiter-C (an uprated Redstone) returned a nose cone after a flight of 1,343 miles. President Dwight D. Eisenhower subsequently showed it to the public during a TV appearance that sought to bolster American morale a month after Sputnik had shocked the world. Two Thor-Able flights went to 5,500 miles in July 1958, though their nose cones both were lost at sea. But the agenda also included Atlas, which first reached its full range of 6,300 miles in November 1958. Two nose cones built by GE, the RVX-1 and -2, flew subsequently as payloads. An RVX-2 flew 5,000 miles in July 1959 and was recovered, thereby becoming the largest object yet to be brought back. Attention now turned to a weaponized nose cone shape, GE’s Mark 3. Flight tests began in October, with this nose cone entering operational service the following April.[562]

Success in reentry now was a reality, yet there was much more for the future. The early nose cones were symmetric, which gave good ballistic characteristics but made no provision for significant aerodynamic maneuver and cross-range. The military sought both as a means of achieving greater operational flexibility. An Air Force experimental uncrewed lifting body design, the Martin SV-5D (X-23) PRIME, flew three flights between December 1966 and April 1967, lofted over the Pacific Test Range by modified Atlas boosters. The first flew 4,300 miles, maneuvering in pitch (but not in cross-range), and missed its target aim point by only 900 feet. The third mission demonstrated a turning cross-range of 800 miles; the SV-5D impacted within 4 miles of its aim point and was subsequently recovered.[563]

Other challenges remained. These included piloted return from the Moon, reusable thermal protection for the Shuttle, and planetary entry into the Jovian atmosphere, which was the most demanding of all. Even so, by the time of PRIME in 1967, the reentry problem had been resolved, manifested by the success of both ballistic missile nose cone development and the crewed spacecraft effort. The latter was arguably the most significant expression of hypersonic competency until the return to Earth from orbit by the Space Shuttle Columbia in 1981.