While the helicopter industry did not emerge until the 1950s, the NACA was engaged in significant rotary wing research starting in the 1930s at the Langley Memorial Aeronautical Laboratory (LMAL), now the NASA

|

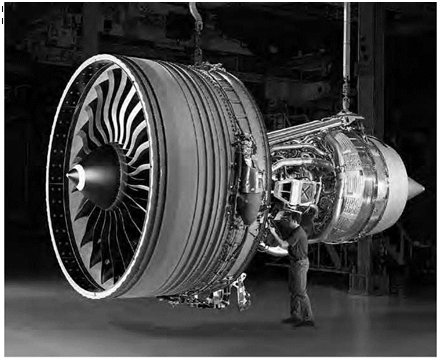

Pitcairn PCA-2 Autogiro. NASA.

|

Langley Research Center.[271] The early contributions were the result of studies of the autogiro. The focus was on documenting flight characteristics, performance prediction methods, comparison of flight-test and wind tunnel test results, and theoretical predictions. In addition, fundamental operating problems definition and potential solutions were addressed. In 1931, the NACA made its first direct purchase of a rotary wing aircraft for flight test investigations, a Pitcairn PCA-2 autogiro. (With few exceptions, future test aircraft were acquired as short-term loan or long-term bailment from the military aviation departments.) The Pitcairn was used over the next 5 years in flight-testing and tests of the rotor in the Langley 30- by 60-foot Full-Scale Tunnel. Formal publications of greatest permanent value received "report” status, and the Pitcairn’s first study, NACA Technical Report 434, was the first authoritative information on autogiro performance and rotor behavior.[272]

The mid-1930s brought visiting autogiros and manufacturers’ personnel to Langley. In addition, analytical and wind tunnel work was carried out on the “Gyroplane,” which incorporated a rotor without the usual flapping or lead-lag hinges at the blade root. This was the first systematic research documented and published for what is now called the “rigid” or “hingeless” rotor. This work was the forerunner of the hingeless rotor’s reappearance in the 1950s and 1960s, when industry and Government undertook extensive R&D efforts. The NACA’s early experience with the Gyroplane rotor suggested that “designing toward flexibility rather than toward rigidity would lead to success.” In the 1950s, the NACA began to recommend this design approach to those expressing interest in hingeless rotors.

While the NACA worked to provide the fundamentals of rotary wing aerodynamics, the autogiro industry experienced major changes. Approximately 100 autogiros were built in the United States and hundreds more worldwide. Problems in smaller autogiros were readily addressed, but those in larger autogiros persisted. They included stick vibration, heavy control forces, vertical bouncing, and a destructive out-of-pattern blade behavior known as ground resonance. Private and commercial use entered a discouraging period. However, military interest grew in the autogiro’s ability to fly safely at low airspeed. In an early example of cooperation with the military, the NACA’s research effort was linked to the needs of the Army Air Corps (AAC), predecessor of the Army Air Forces (AAF). In quick succession, Langley Laboratory conducted flight and/or wind tunnel tests on a series of Kellett autogiros, including the KD-1, YG-1, YG-1A, and YG-1B, and on the Pitcairn YG-2. The NACA provided control force and performance measurements, as well as pilot assessments, of the YG-1. In addition, it recommended maneuver limitations and redesigns for better military serviceability. This led to the NACA providing recommendations and pilot training that enabled the Army Air Corps to begin conducting its own rotary wing experimental and acceptance testing.

In the fall of 1938, international events required that the NACA’s emphasis turn to preparedness. The United States required fighters and bombers with superior performance. In the next few years, experimental rotary wing research declined, but important basic groundwork was conducted. Limited effort began on the potentially catastrophic phenomenon of ground resonance, or coupled rotor-fuselage mechanical instability. Photographs of out-of-pattern rotor-blade behavior were taken by a camera mounted high on the Langley Field balloon (airship) hangar while an autogiro was operated on the ground. In exploratory flight tests, a hub-mounted camera was used to study blade motion and document the pattern of rotor-blade stalling. In the closing years of the 1930s, analytical progress was also made in the creation of a new theory of rotor aerodynamics that became a classic reference and formed the basis for NACA helicopter experimentation in the 1940s.[273] In these years, the NACA’s top leadership participated visibly in the formal dialogue with the rotating wing community. In 1938, Dr. George W. Lewis, the NACA Headquarters Director of Aeronautical Research, served as Chairman of the Research Programs session of the pioneering Rotating-Wing Aircraft Meeting at the Franklin Institute in Philadelphia. In 1939, Dr. H.J.E. Reid, Director of Langley Laboratory, then the NACA’s only laboratory, served as Chairman of the session in Dr. Lewis’s absence.[274]

The early 1940s continued a period of only modest NACA effort on rotary wing research. However, military interest in the helicopter as a new operational asset started to grow with attention to the need for special missions such as submarine warfare and the rescue of downed pilots. As noted in the introduction to this chapter, the need was met by the Sikorsky R-4 (YR-4B), which was the only production helicopter used in United States military operations during the Second World War. The R-4 production started in 1943 as a direct outgrowth of the Sikorsky VS-300. As the helicopter industry emerged, the NACA rotary wing community enjoyed a productive contact through the interface provided by the NACA Rotating Wing (later renamed Helicopter) Subcommittee. It was in these technical subcommittees that experts from Government, industry, and academia spelled out the research needs and set priorities to be addressed by the NACA rotary wing research specialists. The NACA committee and subcommittee roles were marked by a strong supervisory tone, as called for in the NACA charter. The members lent a definite direction to NACA research based on their technical needs. They also attended annual inspection tours of the three NACA Centers to review the progress on the assigned

|

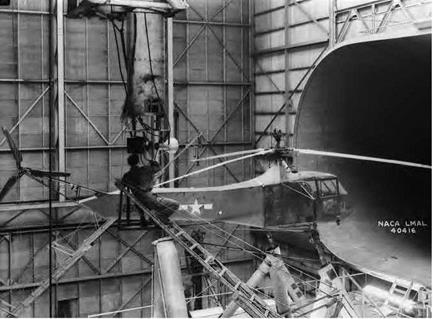

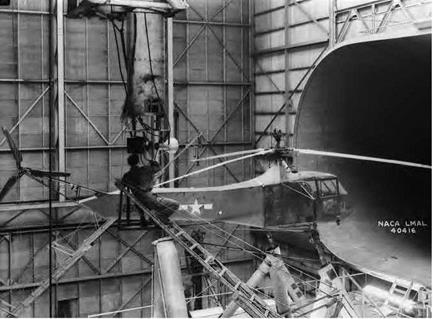

Sikorsky YR-4B tested in the Langley 30 x 60 ft. wind tunnel. NASA.

|

research efforts. In the NASA era, the committees and subcommittees evolved into a more advisory function: commenting upon and ranking the merits of projects proposed by the research teams.

NACA Report 716, published in 1941, constituted a particularly significant contribution to helicopter theory, for it provided simplified methods and charts for determining rotor power required and blade motion.[275] For the first time, design studies could begin to assess the impacts of blade-section stalling and tip-region compressibility effects. Theoretical work continued throughout the 1940s to extend the simple theory into more extreme operating conditions. Researchers began to unravel the influence of airfoil selection, high blade-section angles of attack, and high tip Mach numbers. The maximum Mach number occurred as the tip passed through the advancing side of the rotor disk, where the blade’s rotational velocity and the forward airspeed combined.
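To make the advancing-tip effect concrete, a standard textbook relation can be written down. It is not taken from NACA Report 716, and the symbols are introduced here only for illustration: rotor angular velocity $\Omega$, rotor radius $R$, forward airspeed $V$, speed of sound $a$, and advance ratio $\mu = V/(\Omega R)$. The tip Mach numbers on the advancing and retreating sides are then

$$
M_{\text{adv}} = \frac{\Omega R + V}{a} = \frac{\Omega R}{a}\,(1 + \mu),
\qquad
M_{\text{ret}} = \frac{\Omega R - V}{a} = \frac{\Omega R}{a}\,(1 - \mu)
$$

With assumed, purely illustrative values (not YR-4B data) of $\Omega R = 650$ ft/s, $V = 150$ ft/s, and sea-level $a \approx 1{,}116$ ft/s, the hover tip Mach number is about 0.58, while the advancing tip reaches $(650 + 150)/1{,}116 \approx 0.72$. The retreating tip, at roughly 0.45, sees much lower velocity, which is why retreating blade sections must operate at high angles of attack and are the first to stall.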

Flight research began with the first production helicopter, the Sikorsky YR-4B. This work produced a series of comparisons of flight-test results with theoretical predictions that used the new methodology for rotor performance and blade motion. The comparisons validated the basic theoretical methods for hover and forward flight across the range of practical steady-state operating conditions. The YR-4B was also tested in the Langley 30- by 60-foot Full-Scale Tunnel, which allowed rotor-off testing to obtain fuselage-only lift and drag measurements. These measurements in turn enabled the flight data to be adjusted for direct comparison with rotor theory.

With research progressing in flight test, wind tunnel test, and theory development, a growing, well-documented, open rotary wing database was swiftly established. At the request of industry, Langley airfoil specialists designed and tested airfoils specifically tailored to the challenging unsteady aerodynamic environment of the helicopter rotor. However, the state of the art in airfoil development required that an airfoil be designed for a single, steady airflow condition. Choosing that condition, an artful compromise between rapid excursions into high-angle-of-attack stall and the zero-lift condition, was daunting.[276] The YR-4B setup in the 30- by 60-foot tunnel also contributed to the database, supporting hovering tests on six sets of rotor blades of varying construction and geometry. The testing included single, coaxial, and tandem rotor configurations. Basic single-rotor investigations covered rotor-blade pressure distribution, Mach number effects, and extreme operating conditions.

In 1952, Alfred Gessow and Garry Myers published a comprehensive textbook for use by the growing helicopter industry.[277] [278] The authors’ training and experience had been gained at Langley Laboratory, and the experimental and theoretical work done by laboratory personnel over the previous 15 years (constituting over 70 published documents) served as the basis of the aerodynamic material developed in the book. The Gessow-Myers textbook remains to this day a classic introduction to helicopter design.

Significant contributions were also made in rotor dynamics. The principal contributions addressed the lurking problem of ground resonance, or self-excited mechanical instability: the coupling of in-plane rotor-blade oscillations with the rocking motion of the fuselage on its landing gear. First encountered in some autogiro designs, ground resonance also posed a potentially catastrophic threat to the helicopter.11 Theory developed and disseminated by the NACA enabled the understanding and analysis of ground resonance. This capability was considered essential to the successful design, production, and general use of rotary wing aircraft. Langley pioneered the use of scaled models for the study of dynamic problems such as ground resonance, blade flutter, and control coupling.[279] This contribution to the contemporary state of the art was a forerunner of the pervasive development and use of mathematical modeling throughout the modern rotary wing technical community.

As helicopter flight-testing experience grew, the research pilots observed problems in holding steady, precise flight long enough to record data. Frequent control adjustments were required to keep the aircraft from diverging into attitudes that were difficult to recover from. Investigation of these flying quality characteristics led to standard piloting techniques for producing research-quality data. Deliberate sharp step and pulse control inputs were made, and the resulting aircraft pitch, roll, and yaw responses were recorded for a few seconds. Out of this work came the research specialties of rotary wing flying qualities, stability and control, and handling qualities. Standard criteria for defining required flying qualities gradually emerged from the NACA flight research. The results supported the development of Navy helicopter specifications in the early 1950s and, in 1956, specifications for all military helicopters. In 1957, research at the NACA Ames Research Center produced a systematic protocol for pilots to assess aircraft handling qualities.[280] The importance of angular-velocity damping and control power, and the interrelation between them, was investigated in Langley flight-testing. The results provided the basis for a major portion of formal flying-qualities criteria.[281] After the Ames protocol was modified on the basis of extensive study of in-flight and simulation tasks at Ames, the Cooper-Harper Handling Qualities Rating Scale was published in 1969. It remains the standard for evaluating aircraft flying qualities, including those of rotary wing vehicles.[282]

In the late 1950s, the Army expanded its use of helicopters. The rotary wing industry grew to the point that manufacturers’ engineering departments included research and development staff. In addition, the Army established an aviation laboratory (AVLABS), now known as the Aviation Applied Technology Directorate (AATD), at the Army Transportation School, Fort Eustis, VA. This organization was able to sponsor and publish research conducted by the manufacturers. Fort Eustis was situated within 25 miles of the NACA’s Langley Research Center in Hampton on the Virginia peninsula. A majority of the key AVLABS personnel were experienced NACA rotary wing researchers. As it turned out, this personnel relocation, amounting to an unplanned “contribution” of expertise to the Army, was the forerunner of significant, long-term, co-located laboratory teaming agreements between the Army and NASA.

These research programs (along with the Soviet Projekt 100LDU testbed discussed earlier) provided invaluable hands-on experience with state-of-the-art flight control technologies. Data from the Jaguar ACT and the CCV F-104G supported the Experimental Aircraft Program (EAP) and contributed to the technology base for the Anglo-German-Italian-Spanish Eurofighter multirole fighter, now known as the Typhoon. Many other advanced aircraft development programs, including the French Rafale, the Mitsubishi F-2 fighter, the Russian Su-27 family of fighters and attack aircraft, and the entire family of Airbus airliners, were the beneficiaries of these research efforts. In addition, the importance of the infusion of technology made possible by open dissemination of NASA technical publications should not be underestimated.
