Centurion—Third-Generation ERAST Program Test Vehicle (1996-1998)

The Centurion, which was built in 1998 by AeroVironment, represented a third-generation advancement on the technology developed in the Pathfinder and Pathfinder Plus UAVs. The Centurion, however, was still considered to be a prototype demonstrator for future solar-powered vehicles that could stay airborne for long periods of time. Before the full-scale Centurion UAV was built, a quarter-scale model was constructed to verify design predictions. The Centurion had a five-section wing with a span of 206 feet, more than twice the wingspan of Pathfinder; it incorporated a redesigned airfoil and had a length of 12 feet and an aspect ratio of 26 to 1. The aircraft, which had 14 direct-current electric motors, was to be powered by bifacial solar cells that covered 80 percent of the upper wing surface and had a maximum output of 31 kilowatts. The Centurion had a cruising speed of between 17 and 21 mph and could carry a payload of approximately 100 pounds to an altitude of 100,000 feet, or 600 pounds to 80,000 feet. Its primary building materials were a carbon fiber and graphite epoxy composite structure, Kevlar, a Styrofoam leading edge, and a plastic film covering. Centurion flew three low-altitude developmental test flights on battery power at NASA Dryden, verifying handling qualities, performance, and structural integrity. The primary mission of the Centurion was to verify the handling and performance characteristics of an ultra-lightweight all-wing UAV with a wingspan of over 200 feet.[1551]

Helios Prototype: Fourth-Generation and Last ERAST Unmanned Aerial Vehicle

The Helios Prototype, which resulted from modifications of the Centurion and renaming of the aircraft to Helios, was a proof-of-concept flying wing that because of budget limitations had two different configurations: a high-altitude configuration designated the HP-01 (1998-2002) and a long-endurance configuration designated the HP-03 (2003).[1552] The two primary objectives for the Helios Prototypes were to demonstrate sustained flight at an altitude of 100,000 feet and the ability to fly nonstop

|

|

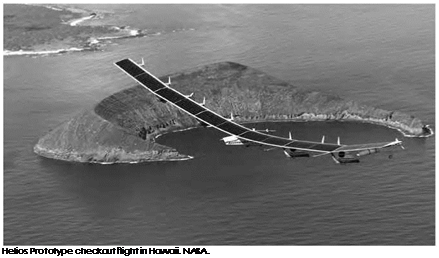

for at least 24 hours, including at least 14 hours above 50,000 feet. Initial low-altitude test flights were conducted under battery power at Edwards Air Force Base in 1999. Afterward, Helios was equipped with high – efficiency photovoltaic solar cells and underwent high-altitude flighttesting in the summer of 2001 at the U. S. Navy Pacific Missile Range Facility in Hawaii. On August 13, 2001, following further upgrading of systems, the high-altitude configuration (HP-01) reached an altitude of 96,863 feet, setting a world record for sustained horizontal flight by a winged aircraft and effectively satisfying the first Helios objective of high – altitude performance. The previous record was held by the Air Force’s SR-71A. The plan for the long-endurance configuration was to use solar cells to power the electric motors and subsystems during daylight hours and a modified hydrogen-air fuel cell system during the night. The vehicle also was equipped with backup lithium batteries and was battery – powered for its first six low-altitude test flights at Dryden.

Helios used wing dihedral (wing upsweep), engine power, elevator control surfaces, and a stability augmentation and control system to provide aerodynamic stability and control. Helios added a sixth wing panel to the Centurion, giving the remotely piloted aircraft a wingspan of 247 feet, longer than the wingspan of either the U. S. Air Force C-5 transport or the Boeing 747 commercial airliner. The aircraft had a length of 12 feet, a wing chord of 8 feet, an empty weight of 1,322 pounds, an aspect ratio of 31 to 1, and a wing loading of 0.835 pounds per square foot. The craft had 14 brushless direct-current electric motors (only 10 on the long-endurance HP03 version), each rated at 2 horsepower (1.5 kW) and each driving a lightweight, 79-inch-diameter propeller; it was powered by 62,120 bifacial solar cells covering the upper wing surface. Bifacial solar cells enabled Helios to convert solar energy into electricity when illuminated from either above or below, allowing the vehicle to absorb reflected energy when flying above cloud cover. Helios had a cruising speed of between 19 and 27 mph and could carry a payload of up to 726 pounds, including ballast, instruments, experiments, and a supplemental energy system. Helios was designed to operate at up to 100,000 feet, which is above 99 percent of the Earth's atmosphere, with a typical endurance mission at 50,000 to 70,000 feet. Like the Centurion, the Helios Prototype was constructed mostly of composite material, including carbon fiber, graphite epoxy, Kevlar, Styrofoam, and a thin transparent plastic film. The main tubular wing spar, which was made of carbon fiber and wrapped with Nomex and Kevlar, was thicker on the top and bottom to absorb the constant bending motions that occurred during flight. Under-wing pods carried the landing gear, battery backup system, fuel cells, flight control computers, and data instruments. The Helios flight control surfaces consisted of 72 trailing-edge elevators that provided pitch control. The fixed landing gear consisted of two wheels on each pod.[1553]
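The span, chord, and aspect ratio quoted for Helios are mutually consistent, and a quick calculation shows why. The sketch below is an illustration only: it assumes an idealized rectangular wing of constant 8-foot chord (the actual wing area is not given in the text), for which aspect ratio, defined as span squared over wing area, reduces to span divided by chord. The function name `rectangular_wing` is hypothetical.

```python
def rectangular_wing(span_ft: float, chord_ft: float) -> tuple[float, float]:
    """Return (area in sq ft, aspect ratio) for an idealized constant-chord wing.

    Aspect ratio is span**2 / area; for a rectangular planform this
    simplifies to span / chord. (Assumption: the real planform is not modeled.)
    """
    area_sq_ft = span_ft * chord_ft
    aspect_ratio = span_ft ** 2 / area_sq_ft
    return area_sq_ft, aspect_ratio


# Helios figures quoted in the text: 247-foot span, 8-foot chord.
area, ar = rectangular_wing(247.0, 8.0)
print(f"Helios: area = {area:.0f} sq ft, aspect ratio = {ar:.1f} to 1")

# Inverse check for Centurion: a 206-foot span at 26 to 1 implies the chord
# of an equivalent rectangular wing (same constant-chord assumption).
centurion_chord = 206.0 / 26.0
print(f"Centurion: implied chord = {centurion_chord:.1f} ft")
```

The computed Helios value of about 30.9 rounds to the 31 to 1 figure given above, and the implied Centurion chord of roughly 7.9 feet is close to the 8-foot chord listed for Helios, consistent with Helios having been built by adding a sixth panel to the Centurion wing.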


At an October 13, 1999, flight demonstration at the Dryden Flight Research Center, Ray Morgan, vice president of AeroVironment (which developed the Helios), noted that the ultimate intention was for Helios-type UAVs to "stay in the stratosphere for months at a time and act as an 11-mile-high tower." Morgan added that the Helios had a "very unique, slow, and stable flight characteristic. . . that means we can fly in fairly tight circles and be, essentially, a geo-stationary platform in the sky—and that has lots of potential application."[1554] Some of the commercial tasks envisioned for future solar-powered high-altitude, long-endurance (HALE) aircraft to follow the Helios Prototype were storm-tracking studies, atmospheric sampling, spectral imaging for agricultural and natural resource purposes, pipeline monitoring, and telecommunications platforms. Morgan further noted that the reliability of the Helios was obtained in two ways: simplicity, because the UAV is simply a flying wing, and redundancy. Each motor pylon turned 2 horsepower into 10 pounds of thrust, and there was only one moving part in each pylon; no brushes, gearboxes, or variable-pitch mechanisms were required.[1555] Furthermore, although the Helios had 72 elevators, it could use differential thrust to turn without using the elevators.


The Helios HP03 long-endurance configuration, which included a hydrogen-air fuel cell pod, made its first high-altitude flight on June 7, 2003. The test objectives of this flight were: (1) to demonstrate the readiness of the aircraft systems, flight support equipment and instrumentation, and flight procedures; (2) to validate the handling and aeroelastic stability of the aircraft; (3) to demonstrate the operation of the fuel cell system and gaseous hydrogen storage tanks; and (4) to provide training for personnel to staff future multiday flights. The flight was planned as a 30-hour endurance flight, but because of leakage in the coolant and compressed air line, it had to be aborted after 15 hours. Flight data, however, verified that the vehicle was aeroelastically stable under the flight conditions expected for the long-endurance flight demonstration.

On its second test flight, on June 26, 2003, following further modifications based on the first flight, the Helios Prototype crashed within the Navy's Pacific Missile Range Facility in Hawaii. This flight was intended to evaluate the vehicle's hydrogen fuel cell system, which was designed to power the UAV during periods of darkness, as would be necessary for long-duration flights. Approximately 30 minutes into its flight and at an altitude of only 2,800 feet, the aircraft encountered turbulence and morphed into a high-dihedral (wings upswept) configuration that, over time, took on an increasingly alarming U-like appearance. This caused Helios to become unstable in pitch, and the big UAV began to nose up and down. During one descending swoop, the aircraft exceeded its maximum permissible airspeed, causing the leading-edge secondary structure on the outer wing panels to fail and the solar cells and skin on the upper surface to rip off. Shedding bits and pieces of structure, the Helios progressively disintegrated as it plunged downward and was destroyed when it struck the ocean.[1556]

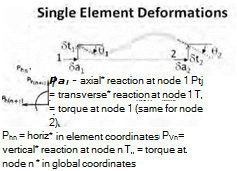

The Mishap Investigation Board (MIB) concluded: "In summary, there was no evidence that suggests that there was prior structural failure(s) that would have contributed to the vehicle developing the higher-than-normal dihedral deflections [wing upsweep] that caused the unstable pitch oscillation and subsequent failure of the vehicle."[1557] In noting the lessons learned, the report identified both the proximate and root causes of the loss of Helios. The proximate cause of the crash was determined to be the "high dynamic pressure reached by the aircraft during the last cycle of the unstable pitch oscillation leading to failure of the vehicle's secondary structure." Two root causes contributed to or created this proximate cause: (1) the lack of adequate analysis methods, which led to an inaccurate risk assessment of the effects of the configuration change from the HP01 (high altitude) to the HP03 (long endurance) version of the Helios; and (2) configuration changes to the aircraft, which altered the Helios from a spanloader to a highly point-loaded mass distribution on the same structure, significantly reducing design robustness and margins of safety.[1558]
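Why an airspeed overshoot in a single swoop could be so destructive follows from the standard definition of dynamic pressure. In the usual textbook notation (air density $\rho$ and true airspeed $V$; these symbols are not taken from the MIB report), dynamic pressure $q$ grows with the square of airspeed, so at a fixed density the ratio between two flight conditions is:

$$
q = \tfrac{1}{2}\,\rho V^{2}, \qquad \frac{q_2}{q_1} = \left(\frac{V_2}{V_1}\right)^{2}
$$

As an illustrative example, not a figure from the investigation, a 40 percent increase in airspeed ($V_2/V_1 = 1.4$) raises dynamic pressure by a factor of $1.4^2 = 1.96$, nearly doubling the aerodynamic load on a structure that had little margin to spare.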


The report noted that the Helios UAV represented "a nonlinear stability and control problem involving complex interactions among the flexible structure, unsteady aerodynamics, flight control system, propulsion system, the environmental conditions, and vehicle flight dynamics." As a result of the investigation, the MIB made a number of key recommendations for future UAV development. These included:

1. Develop more advanced, multidisciplinary "time-domain" analysis methods appropriate to highly flexible, "morphing" vehicles;

2. Develop ground-test procedures and techniques appropriate to UAV class vehicles to validate new analysis methods and predictions;

3. Improve the technical insight for highly complex projects, using the expertise available from all NASA Research Centers;

4. Develop multidisciplinary models, which can describe the nonlinear dynamic behavior of aircraft modifications or perform incremental flight-testing; and

5. Provide adequate resources to future programs for more incremental flight-testing when large configuration changes significantly deviate from the initial design concept.


As already noted, because of budget constraints, the NASA-industry team basically had to use one prototype vehicle for two tests: high altitude and long endurance. Compounding this, as noted in the MIB report, the switch from a regenerative fuel cell system (RFCS) to a hydrogen-air fuel cell, necessitated by technical problems with the RFCS combined with project time and budget deadlines, caused a critical change in the load distribution on the wing structure. The hydrogen-air fuel cell system was more point loaded because it required a third pod at the vehicle's centerline to hold the 520-pound primary hydrogen-air fuel cell. The originally planned regenerative fuel cell system would have required only two pods, each one-third of the distance from the vehicle's centerline to the wingtip.[1559]

Even with the loss of the Helios Prototype, the MIB noted both the success and the challenge of the solar UAV program, as reflected in the following statement from the Mishap report:

During the course of this investigation the MIB discovered that the AV [AeroVironment]/NASA technical team had created most of the world's knowledge in the area of High Altitude-Long Endurance (HALE) aircraft design, development, and test. This has placed the United States in a position of world leadership in this class of vehicle, which has significant strategic implications for the nation. The capability afforded by such vehicles is real and unique, and can enable the use of the stratosphere for many government and commercial applications. The MIB also found that this class of vehicle is orders of magnitude more complex than it appears but that the AV/NASA technical team had identified and solved the toughest technical problems. Although more knowledge can and should be pursued as recommended in this report, an adequate knowledge base now exists to design, develop, and deploy operational HALE systems.[1560]

ERAST Program Overview and Accomplishments


The ERAST program, which started in 1994 and ended in 2003 following the loss of the Helios Prototype, accomplished almost all of its primary objectives, including developing a solar-powered UAV capable of flying at very high altitudes, demonstrating payload capabilities and sensors for atmospheric research, resolving solar-powered UAV operational issues, and demonstrating the usefulness of UAVs to scientific, Government, and civil customers. The ERAST program also demonstrated that the unique joint NASA-industry ERAST alliance under a Joint Sponsored Research Agreement worked well and that good cooperation under this agreement led to efficient use of resources and expedited reaching the project milestones required to satisfy the program's budget and time constraints. SunPower Corporation, the alliance partner that manufactured the solar cells for the four generations of solar-powered UAVs, significantly increased the efficiency and lowered the cost of its commercial solar cells, which led to its successful mass-produced A-300 series solar cells. The one primary objective that remained unfulfilled at the close of the ERAST NASA-industry program was the long-endurance capability to fly for multiple days or longer. As with the earlier solar UAV program in the 1980s, a backup power system that would enable solar UAVs to fly through periods of darkness remained the critical problem to be solved. Significant progress was nevertheless made in this area, and work continued at NASA Glenn under the Low Emissions Alternative Power (LEAP) program toward perfecting a lightweight regenerative fuel cell system. NASA Glenn made its first closed-loop (completely sealed) regenerative fuel cell demonstration in September 2003 and demonstrated five contiguous back-to-back charge-discharge cycles at full power under semiautonomous control in July 2005.[1561]

[1] Peter W. Brooks, The Modern Airliner: Its Origins and Development (London: Putnam & Co., Ltd., 1961), pp. 91-111. Brooks uses the term to describe a category of large airliner and transport aircraft defined by common shared design characteristics, including circular cross-section constant-diameter fuselages, four-engines, tricycle landing gear, and propeller-driven (piston and turbo-propeller), from the DC-4 through the Bristol Britannia, and predominant in the time period 1942 through 1958. Though some historians have quibbled with this, I find Brooks’s reasoning convincing and his concept of such a "generation’ both historically valid and of enduring value.

[2] Quoted in Roy A. Grossnick, et al., United States Naval Aviation 1910-1995 (Washington: U. S. Navy, 1997), p. 15; Gordon Swanborough and Peter M. Bowers, United States Navy Aircraft Since 1911 (New York: Funk & Wagnalls, 1968), p. 394.

[3] Alexander Lippisch, "Recent Tests of Tailless Airplanes," NACA TM-564 (1930), a NACA translation of his article "Les nouveaux essays d’avions sans queue," l’Aerophile (Feb. 1-15, 1930), pp. 35-39.

[4] For Volta, see Theodore von Karman and Lee Edson, The Wind and Beyond: Theodore von Kdrmdn, Pioneer in Aviation and Pathfinder in Space (Boston: Little, Brown and Co., 1967), pp. 216-217, 221-222; Adolf Busemann, "Compressible Flow in the Thirties," Annual Review of Fluid Mechanics, vol. 3 (1971), pp. 6-1 1; Carlo Ferrari, "Recalling the Vth Volta Congress: High Speeds in Aviation," Annual Review of Fluid Mechanics, vol. 28 (1996), pp. 1-9; Hans-Ulrich Meier, "Histo – rischer Ruckblick zur Entwicklung der Hochgeschwindigkeitsaerodynamik," in H.-U. Meier, ed., Die Pfeilflugelentwicklung in Deutschland bis 1945 (Bonn: Bernard & Graefe Verlag, 2006), pp. 16-36; and Michael Eckert, The Dawn of Fluid Dynamics: A Discipline Between

Science and Technology (Weinheim: WileyVCH Verlag, 2006), pp. 228-231.

[5] Adolf Busemann, "Aerodynamische Auftrieb bei Uberschallgeschwindigkeit," Luflfahrlforschung, vol. 1 2, No. 6 (Oct. 3, 1935), pp. 210-220, esp. Abb. 4-5 (Figures 4-5).

[6] Theodore von Karman, Aerodynamics (New York: McGraw-Hill Book Company, Inc., 1963 ed.), p. 1 33.

[7] Ministero dell’Aeronautica, 1 ° Divisione, Sezione Aerodinamica Resultati di Esperienze (Rome: Guidonia, 1936); the swept "double-ender" wind tunnel study (anticipating the layout of Dornier’s Do 335 Pfeil ["Arrow"] of the late wartime years) was designated the J-1 0; its drawing is dated March 7, 1936. I thank Professor Claudio Bruno of the Universita degli Studi di Roma "La Sapi – enza"; and Brigadier General Marcello di Lauro and Lieutenant Colonel Massimiliano Barlattani of the Stato Maggiore dell’Aeronautica Militare (SMdAM), Rome, for their very great assistance in enabling me to examine this study at the Ufficio Storico of the SMdAM in June 2009.

[8] Raymond F. Anderson, "Determination of the Characteristics of Tapered Wings," NACA Report No. 572 (1936); see in particular Figs. 15 and 16, p. 11.

[9] For an example of such work, see Dr. Richard Lehnert, "Bericht uber Dreikomponentenmessungen mit den Gleitermodellen A4 V12/a und A4 V1 2/c," Archiv Nr 66/34 (Peenemunde: Heeres – Versuchsstelle, Nov. 27, 1940), pp. 6-10, Box 674, "C10/V-2/History" file, archives of the National Museum of the United States Air Force, Dayton, OH. Re: German research deficiencies, see Adolf Baeumker, Ein Beitrag zur Geschichte der Fuhrung der deutschen Luftfahrttechnik im ersten halben Jahrhun dert, 1900-1950 (Bad Godesberg: Deutschen Forschungs – und Versuchsanstalt fur Luft – und Raumfahrt e. V., 1971), pp. 61-74; Col. Leslie E. Simon, German Scientific Establishments (Washington: Office of Technical Services, Department of Commerce, 1947), pp. 7-9; Helmuth Trischler, "Self-Mobilization or Resistance? Aeronautical Research and National Socialism," and Ulrich Albrecht, "Military Technology and National Socialist Ideology," in Monika Renneberg and Mark Walker, eds., Science, Technology, and National Socialism (Cambridge: Cambridge University Press, 1994), pp. 72-1 25. For science and the Third Reich more generally, see Alan D. Beyerchen, Scientists Under Hitler: Politics and the Physics Community in the Third Reich (New Haven: Yale University Press, 1977); and Kristie Macrakis, Surviving the Swastika: Scientific Research in Nazi Germany (New York: Oxford University Press, 1993).

[10] USAAF, "German Aircraft, New and Projected Types" (1946), Box 568, "A-1A/Germ/1945 file, NMUSAF Archives; and J. McMasters and D. Muncy, "The Early Development of Jet Propelled Aircraft," AIAA Paper 2007-0151, Pts. 1-2 (2007).

1 1 . See Richard P. Hallion, "Lippisch Gluhareff, and Jones: The Emergence of the Delta Planform and the Origins of the Sweptwing in the United States," Aerospace Historian, vol. 26, no. 1 (Mar. 1979), pp. 1-10.

[12] Memo, Michael Gluhareff to 1.1. Sikorsky, July 1941, copy in the Gluhareff Dart accession file, National Air and Space Museum, Smithsonian Institution, Washington, DC. Gluhareff’s Dart appeared contemporaneously with a remarkably similar (though with a tractor propeller) Soviet design by Alexandr Sergeevich Moskalev. Though unclear, it seems Gluhareff first conceived the planform. It is possible that an informal interchange of information between the two occurred, as Soviet aeronautics and espionage authorities kept close track of American developments and the activities of the emigree Russian community in America.

1 3. Griswold is best known as coinventor (with Hugh De Haven) of the three-point seat restraint, which formed the basis for the modern automotive seat belt; Saab then advanced further, building upon their work. See "Three-Point Safety Belt is American, not Swedish, Invention,’ Status Report, vol. 35, no. 9 (Oct. 21, 2000), p. 7.

[14] Vought-Sikorsky, "Aerodynamic Characteristics of the Preliminary Design of a 1/20 Scale Model of the Dart Fighter,’ Vought-Sikorsky Wind Tunnel Report No. 192 (Nov. 1 8, 1942), copy in the Gluhareff Dart accession file, National Air and Space Museum, Smithsonian Institution, Washington, DC.

[15] Letter, Roger W. Griswold to Maj. Donald R. Eastman, Oct. 22, 1 946, Gluhareff Dart accession file, NASM.

[16] M. E. Gluhareff, "Tailless Airplane,’ U. S. patent No. 2,51 1,502, issued June 1 3, 1950; "Sikorsky Envisions Supersonic Airliner,” Aviation Week (May 4, 1959), pp. 67-68; M. E. Gluhareff, "Aircraft with Retractable Auxiliary Airfoil,’ U. S. patent No. 2,941,752, issued June 21, 1960.

[17] See William Sears’s biographical introduction to the "Collected Works of Robert T. Jones,’ NASA TM-X-3334 (1976), pp. vii-ix; and Walter G. Vincenti, "Robert Thomas Jones,’ in Biographical Memoirs, vol. 86 (Washington: National Academy of Sciences, 2005), pp. 3-21.

1 8. Transcript of interview of R. T. Jones by Walter Bonney, Sept. 24, 1 974, p. 5, in Jones biographical file, No. 001 147, Archives of the NASA Historical Division, National Aeronautics and Space Administration, Washington, DC.

[19] Transcript of Jones-Bonney interview, p. 5; Hallion conversation with Dr. Robert T. Jones at NASA Ames Research Center, Sunnyvale, CA, July 14, 1977; Max M. Munk, "The Aerodynamic Forces on Airship Hills, NACA Report No. 1 84 (1923); Max M. Munk, "Note on the Relative Effect of the Dihedral and the Sweep Back of Airplane Wings," NACA TN-177 (1924); H. S. Tsien, "Supersonic Flow Over an Inclined Body of Revolution," Journal of the Aeronautical Sciences, vol. 5, no. 2 (Oct. 1938), pp. 480-483.

[20] Note that although Lippisch called his tailless aircraft "deltas’ as early as 1 930, in fact they were generally broad high aspect ratio wings with pronounced leading edge taper, akin to the wing planform of America’s classic DC-1/2/3 airliners. During the Second World War, Lippisch did develop some concepts for sharply swept deltas (though of very thick and impracticable wing section). Taken all together, Lippisch’s deltas, whether of high or low aspect ratio planform, were not comparable to the thin slender and sharply swept (over 60 degrees) deltas of Jones, and Gluhareff before him, or Dietrich Kuchemann at the Royal Aircraft Establishment afterwards, which were more akin to high-supersonic and hypersonic shapes of the 1950s-1960s.

[21] For DM-1 and extrapolative tests, see Herbert A. Wilson, Jr., and J. Calvin Lowell, "Full-Scale Investigation of the Maximum Lift and Flow Characteristics of an Airplane Having Approximately Triangular Plan Form,’ NACA RM-L6K20 (1947); J. Calvin Lovell and Herbert A. Wilson, Jr., "Langley Full-Scale-Tunnel Investigation of Maximum Lift and Stability Characteristics of an Airplane Having Approximately Triangular Plan Form (DM-1 Glider),’ NACA RM-L7F16 (1947); and Edward F. Whittle, Jr., and J. Calvin Lovell, "Full-Scale Investigation of an Equilateral Triangular Wing Having 10-Percent-Thick Biconvex Airfoil Sections,’ NACA RM-L8G05 (1948).

[22] In 1944, Kotcher had conceived a rocket-powered "Mach 0.999" transonic research airplane (a humorous reference to the widely accepted notion of an "impenetrable" sonic "barrier") that subsequently inspired the Bell Aircraft Corporation to undertake design of the XS-1, the world’s first supersonic manned airplane.

[23] Kantrowitz would pioneer high-Mach research facilities design, and Soule would serve the NACA as research airplane projects leader, supervising the Agency’s Research Airplane Projects Panel (RAPP), a high-level steering group coordinating the NACA’s X-series experimental aircraft programs.

[24] Memo, Jones to Lewis, Mar. 5, 1945; see also ltr., Jones to Ernest O. Pearson, Jr., Feb. 2, 1960, and Navy/NACA Record of Invention Sheet, Apr. 10, 1946, Jones biographical file, NASA.

[25] Robert T. Jones, "Properties of Low-Aspect-Ratio Pointed Wings at Speeds Below and Above the Speed of Sound," NACA TN-1032 (1946), p. 1 1 [first issued at NACA LMAL on May 11, 1945].

[26] For Millikan visit to Germany, see Millikan Diary 6, Box 35, Papers of Clark B. Millikan, Archives, California Institute of Technology, Pasadena, CA; Alexander Lippisch, ltr. to editor, Aviation Week and Space Technology (Jan. 6, 1975); in 1977, while curator of science and technology

at the National Air and Space Museum, the author persuaded Jones to donate his historic delta test model to the museum; he had been using it for years as a letter opener!

[27] Jones noted afterward that at Volta, Busemann "didn’t have the idea of getting the wing inside the Mach cone so you got subsonic flow. The real key to [the swept wing] was to get subsonic flow at supersonic speed by getting the wing inside the Mach cone. . . the development of what I would say [was] the really correct sweep theory for supersonic speeds occurred in Germany in ’43 or ’44, and with me in 1945.’ (See transcript of Jones-Bonney interview, p. 6). But German researchers had mastered it earlier, as evident in a series of papers and presentations in a then-"Geheim" ("Secret”) conference report by

the Lilienthal-Gesellschaft fur Luftfahrtforschung, Allgemeine Stromungsforschung: Bericht uber die Sitzung Hochgeschwindigkeitsfragen am 29 und 30 Oktober 1942 in Berlin (Berlin: LGF, 1942).

[28] For his report, see Robert T. Jones, "Wing Planforms for High-Speed Flight,’ NACA TN-1033 (1946) [first issued at LMAL on June 23, 1945, as Confidential Memorandum Report L5F21 ]. Jones’s tortuous path to publication is related in James R. Hansen’s Engineer in Charge: A History of the Langley Aeronautical Laboratory, 1917-1958, SP-4305 (Washington: NASA, 1987), pp. 284-285.

[29] Jones, "Wing Planforms for High-Speed Flight," NACA TN-1033, p. 1.

[30] For the United States, this meant that Soviet intelligence collectors increasingly focused on American high-speed research. Bell Aircraft Corporation, manufacturer of the first American jet airplane, the first supersonic airplane, and advanced swept wing testbeds (the X-2 and X-5), figured prominently as a Soviet collection target as did the NACA. NACA engineer William Perl (born Mutterperl), a member of the Rosenberg spy ring who passed information on aviation and jet engines to Soviet intelligence, worked as a postwar research assistant for Caltech’s Theodore von Karman, director of the Guggenheim Aeronautical Laboratory of the California Institute of Technology (GALCIT), the Nation’s premier academic aero research facility. He cultivated a close bond with TvK’s sister Josephine ("Pipa") and TvK himself. Perl had almost unique access to the highest-level NACA and GALCIT reports on high-speed flight, and the state of advanced research and facilities planning for them and the U. S. Air Force. He associated as well with NACA notables, including Arthur Kantrowitz, Eastman Jacobs, and Robert T. Jones. So closely was he associated with von Karman that he once helpfully reminded him where to find the combination to an office safe! He helped screen sensitive NACA data for a presentation TvK was making on high-speed stability and control, and TvK recommended Perl for consultation on tunnel development at the proposed new Arnold Engineering Development Center (AEDC) in Tennessee. Perl was unmasked by the Venona signals intelligence decryption program, interrogated on his associations with known Communists, and subsequently arrested and convicted of perjury. (He had falsely denied knowing the Rosenbergs.) More serious espionage charges were not brought, lest court proceedings compromise the ongoing Venona collection effort. 
The Papers of Theodore von Karman, Box 31, Folder 31.38, Archives of the California Institute of Technology, and the Federal Bureau of Investigation’s extensive Perl documentation contain much revealing correspondence on Perl and his associates. I thank Ernest Porter and the FBI historical office for arranging access to FBI material. See also Katherine A. S. Sibley, Red Spies in America: Stolen Secrets and the Dawn of the Cold War (Lawrence: University Press of Kansas, 2004); and John Earl Haynes and Harvey Klehr’s Early Cold War Spies: The Espionage Trials that Shaped American Politics (Cambridge: Cambridge University Press, 2006) for further details on the Perl case.

[31] George W. Gray, Frontiers of Flight: The Story of NACA Research (New York: Knopf, 1948), p. 348.

[32] Re: German high-speed influence in the U. S., Britain, and Russia, see H. S. Tsien, "Reports on the Recent Aeronautical Developments of Several Selected Fields in Germany and Switzerland," in Theodore von Karman, ed., Where We Stand: First Report to General of the Army H. H. Arnold on Long Range Research Problems of the Air Forces with a Review of German Plans and Developments (Washington: HQ AAF, Aug. 22, 1945), Microfilm Reel 194, Papers of Gen. Henry H. Arnold, Manuscript Division, U. S. Library of Congress, Washington, DC; Ronald Smelt, "A Critical Review of German Research on High-Speed Airflow," Journal of the Royal Aeronautical Society, vol. 50, no. 432 (Dec. 1946), pp. 899-934; Andrew Nahum, "I Believe the Americans Have Not Yet Taken Them All!" in Helmuth Trischler, Stefan Zeilinger, Robert Bud, and Bernard Finn, eds., Tackling Transport (London: Science Museum, 2003), pp. 99-138; Matthew Uttley, "Operation ‘Sturgeon’ and Britain’s Post-War Exploitation of Nazi German Aeronautics," Intelligence and National Security, vol. 17, no. 2 (Sum. 2002), pp. 1-26; M. I. Gurevich, "O Pod’emnoi Sile Strelovidnogo Kryla v Sverkhzvukovom Potoke," Prikladnaya Matematika i Mekhanika, vol. 10 (1946), translated by the NACA as "Lift Force of an Arrow-Shaped Wing," NACA TM-1245 (1949). Gurevich, cofounder of the MiG bureau (he is the "G" in "MiG"), was subsequently principal aerodynamicist of the MiG-15, the Soviet Union’s swept wing equivalent to the American F-86. For a detailed examination of F-86 wing development and the influence of German work (particularly Gothert’s) upon it, see Morgan M. Blair, "Evolution of the F-86," AIAA Paper 80-3039 (1980).

[33] Pitch-up was of such significance that it is discussed subsequently in greater detail within this essay.

[34] First comprehensively analyzed by Max M. Munk in his "Note on the Relative Effect of the Dihedral and the Sweep Back of Airplane Wings," NACA TN-177 (1924).

[35] See John E. Steiner, "Transcontinental Rapid Transit: The 367-80 and a Transport Revolution — The 1953-1978 Quarter Century," AIAA Paper 78-3009 (1978), p. 93; John E. Steiner, "Jet Aviation Development: A Company Perspective," in Walter J. Boyne and Donald H. Lopez, eds., The Jet Age: Forty Years of Jet Aviation (Washington: Smithsonian Institution Press, 1979), pp. 145-148; and William H. Cook, The Road to the 707: The Inside Story of Designing the 707 (Bellevue, WA: TYC Publishing Co., 1991), pp. 145-205.

[36] See, for example, Richard T. Whitcomb, "An Investigation of the Effects of Sweep on the Characteristics of a High-Aspect-Ratio Wing in the Langley 8-Ft. High Speed Tunnel," NACA RM-L6J01a (1947), conclusion 4, p. 19; Stephen Silverman, "The Next 25 Years of Fighter Aircraft," AIAA Paper No. 78-3013 (1978); Glen Spacht, "X-29 Integrated Technology Demonstrator and ATF," AIAA Paper No. 83-1058 (1983).

[37] A. M. "Tex" Johnston with Charles Barton, Tex Johnston: Jet-Age Test Pilot (Washington: Smithsonian Institution Press, 1991), p. 105. The designation "L-39" could be taken to imply that the swept wing testbeds were modifications of Bell’s earlier and smaller P-39 Airacobra. In fact, it was coincidence; the L-39s were P-63 conversions, as is evident from examining photographs of the two L-39 aircraft.

[38] Corwin H. Meyer, Corky Meyer’s Flight Journal: A Test Pilot’s Tales of Dodging Disasters—Just in Time (North Branch, MN: Specialty Press, 2006), p. 193.

[39] NACA’s L-39 trials are covered in three reports by S. A. Sjoberg and J. P. Reeder: "Flight Measurements of the Lateral and Directional Stability and Control Characteristics of an Airplane Having a 35° Sweptback Wing with 40-Percent-Span Slots and a Comparison with Wind-Tunnel Data," NACA TN-1511 (1948); "Flight Measurements of the Longitudinal Stability, Stalling, and Lift Characteristics of an Airplane Having a 35° Sweptback Wing Without Slots and With 40-Percent-Span Slots and a Comparison with Wind-Tunnel Data," NACA TN-1679 (1948); and "Flight Measurements of the Stability, Control, and Stalling Characteristics of an Airplane Having a 35° Sweptback Wing Without Slots and With 80-Percent-Span Slots and a Comparison with Wind-Tunnel Data," NACA TN-1743 (1948). The American L-39s were matched by foreign equivalents, most notably in Sweden, where the Saab company flew a subscale swept wing variant of its conventional Safir light aircraft, designated the Saab 201, to support development of its J29 fighter, Western Europe’s first production swept wing jet, which first flew in Sept. 1948. Like both the F-86 and MiG-15, it owed its design largely to German inspiration. Saab researchers were so impressed with what they had learned from the 201 that they subsequently flew another modified Safir, the Saab 202, with a more sharply swept wing planform intended for the company’s next jet fighter, the J32 Lansen (Lance). See Hans G. Andersson, Saab Aircraft Since 1937 (Washington: Smithsonian Institution Press, 1989), pp. 106, 117.

[40] XP-86 test report, May 21, 1948, reprinted in Roland Beamont, Testing Early Jets: Compressibility and the Supersonic Era (Shrewsbury: Airlife, 1990), p. 36. Beamont’s achievement remained largely secret; the first British pilot to fly through the speed of sound in a British airplane was John Derry, who did so in Sept. 1948.

[41] Quote from Nigel Walpole, Swift Justice: The Full Story of the Supermarine Swift (Barnsley, UK: Pen & Sword Books, 2004), p. 38.

[42] Charles Burnet, Three Centuries to Concorde (London: Mechanical Engineering Publications Ltd., 1979), pp. 121, 123.

[43] Michael Collins, Carrying the Fire: An Astronaut’s Journeys (New York: Farrar, Straus, and Giroux, 1974), p. 9. Another Sabre veteran who went through Nellis at the same time recalled to the author how he once took off on a training sortie with ominous columns of lingering smoke from three earlier Sabre accidents.

[44] For development of control boost, artificial feel, and control limiting, see Robert G. Mungall, "Flight Investigation of a Combined Geared Unbalancing-Tab and Servotab Control System as Used with an All-Movable Horizontal Tail," NACA TN-1763 (1948); William H. Phillips, "Theoretical Analysis of Some Simple Types of Acceleration Restrictors," NACA TN-2574 (1951); R. Porter Brown, Robert G. Chilton, and James B. Whitten, "Flight Investigation of a Mechanical Feel Device in an Irreversible Elevator Control System of a Large Airplane," NACA Report No. 1101 (1952); James J. Adams and James B. Whitten, "Tests of a Centering Spring Used as an Artificial Feel Device on the Elevator of a Fighter Airplane," NACA RM-L52G16; and Marvin Abramovitz, Stanley F. Schmidt, and Rudolph D. Van Dyke, Jr., "Investigation of the Use of a Stick Force Proportional to Pitching Acceleration for Normal-Acceleration Warning," NACA RM-A53E21 (1953).

[45] George E. Cooper and Robert C. Innis, "Effect of Area-Suction-Type Boundary-Layer Control on the Landing-Approach Characteristics of a 35° Swept-Wing Fighter," NACA RM-A55K14 (1957), p. 11. Other relevant Ames F-86 studies are: George A. Rathert, Jr., L. Stewart Rolls, Lee Winograd, and George E. Cooper, "Preliminary Flight Investigation of the Wing-Dropping Tendency and Lateral-Control Characteristics of a 35° Swept-Wing Airplane at Transonic Mach Numbers," NACA RM-A50H03 (1950); and George A. Rathert, Jr., Howard L. Ziff, and George E. Cooper, "Preliminary Flight Investigation of the Maneuvering Accelerations and Buffet Boundary of a 35° Swept-Wing Airplane at High Altitude and Transonic Speeds," NACA RM-A50L04 (1951).

[46] Edwin P. Hartman, Adventures in Research: A History of the Ames Research Center, 1940-1965, SP-4302 (Washington: NASA, 1970), p. 252.

[47] A. Scott Crossfield with Clay Blair, Always Another Dawn: The Story of a Rocket Test Pilot (Cleveland: World Publishing Co., 1960), pp. 193-194. See also W. C. Williams and A. S. Crossfield, "Handling Qualities of High-Speed Airplanes," NACA RM-L52A08 (1952), p. 3; Melvin Sadoff, John D. Stewart, and George E. Cooper, "Analytical Study of the Comparative Pitch-Up Behavior of Several Airplanes and Correlation with Pilot Opinion," NACA RM-A57D04 (1957).

[48] Sadoff, Stewart, and Cooper, "Analytical Study of Comparative Pitch-Up Behavior," p. 12.

[49] S. A. Mikoyan, Stepan Anastasovich Mikoyan: An Autobiography (Shrewsbury: Airlife, 1999), p. 289.

[50] Maj. Gen. H. E. "Tom" Collins, USAF (ret.), "Testing the Russian MiG," in Ken Chilstrom, ed., Testing at Old Wright Field (Omaha: Westchester House Publishers, 1991), p. 46.

[51] Two not so taken with the swept tailless configuration were Douglas aerodynamicist L. Eugene Root and former Focke-Wulf aerodynamicist Hans Multhopp. After an inspection trip to Messerschmitt in August 1945, Root wrote "a tailless design suffers a disadvantage of small allowable center of gravity travel. . . . Although equally good flying qualities can be obtained in either [tailless or conventional] case, the tailless design is considered more dangerous at very high speeds. For example, the Me 262 has been taken to a Mach number of 0.86 without serious difficulty, whereas the Me 163 could not exceed M = 0.82. For the Me 163 . . . it was not considered possible fundamentally to control the airplane longitudinally past M = 0.82 in view of a sudden diving moment and complete loss of elevator effectiveness." See L. E. Root, "Information of Messerschmitt Aircraft Design," Item Nos. 5, 25, File No. XXXII-37, Copy 079 (Aug. 1945), p. 3, Catalog D52.1Messerschmitt/144, in the Wright Field Microfilm Collection, National Air and Space Museum Archives, Paul E. Garber Restoration Facility, Silver Hill, MD. Focke-Wulf’s Hans Multhopp, designer of the influential T-tail sweptwing Ta 183, was even more dismissive. After the war, while working at the Royal Aircraft Establishment, he remarked that it constituted an "awful fashion"; see Nahum, "I Believe . . .," in Trischler, et al., eds., Tackling Transport, p. 118. Multhopp later came to America, joining Martin and designing the SV-5 reentry shape that spawned the SV-5D PRIME, the X-24A, and the X-38.

[52] Burnet, Three Centuries to Concorde, p. 102.

[53] Crossfield with Blair, Always Another Dawn, p. 39; Melvin Sadoff and Thomas R. Sisk, "Longitudinal-Stability Characteristics of the Northrop X-4 Airplane (USAF No. 46-677)," NACA RM-A50D27 (1950); and Williams and Crossfield, "Handling Qualities of High-Speed Airplanes."

[54] Quote from Walpole, Swift Justice, pp. 58, 66. Walpole, a former Swift pilot, writes affectionately but frankly of its strengths and shortcomings. See also Burnet, Three Centuries to Concorde, pp. 127-128. Burnet was involved in analyzing Swift performance, and his book is an excellent review of Supermarine and other British efforts at this time.

[55] For the origins of the D-558 program, see Richard P. Hallion, Supersonic Flight: Breaking the Sound Barrier and Beyond—The Story of the Bell X-1 and Douglas D-558 (New York: The Macmillan Co. in association with the Smithsonian Institution, 1972).

[56] Particularly Bernard Gothert’s "Hochgeschwindigkeitmessungen an einem Pfeilflugel (Pfeilwinkel φ = 35°)," in the previously cited Lilienthal-Gesellschaft, Allgemeine Stromungsforschung, pp. 30-40, subsequently translated and issued by the NACA as "High-Speed Measurements on a Swept-Back [sic] Wing (Sweepback Angle φ = 35°)," NACA TM-1102 (1947), which directly influenced design of the 35-degree swept wings employed on the F-86, the B-47, and the D-558-2. Gothert, incidentally, used NACA airfoil sections for his studies, another example of the Agency’s pervasive international influence. At war’s end he was in Berlin; when ordered to report to Russian authorities, he instead fled the city, making his way back to Gottingen, where he met Douglas engineer Apollo M. O. Smith, with the Naval Technical Mission to Europe. Smith arranged for him to immigrate to the United States, where he had a long and influential career, rising to Chief Scientist of Air Force Systems Command, a position he held from 1964 to 1966. See Tuncer Cebeci, ed., Legacy of a Gentle Genius: The Life of A. M. O. Smith (Long Beach: Horizons Publishing, Inc., 1999), p. 32. I acknowledge with grateful appreciation notes and correspondence received from members of the D-558 design team in 1971-1972, including the late Edward Heinemann, L. Eugene Root, A. M. O. Smith, Kermit Van Every, and Leo Devlin, illuminating the origins of the Skystreak and Skyrocket programs.

[57] Hallion, Supersonic Flight, pp. 151-152, based upon D-558 biweekly progress reports. As well, I thank the late Robert Champine for his assistance to my research. See also S. A. Sjoberg and R. A. Champine, "Preliminary Flight Measurements of the Static Longitudinal Stability and Stalling Characteristics of the Douglas D-558-II Research Airplane (BuAero No. 37974)," NACA RM-L9H31a (1949); W. H. Stillwell, J. V. Wilmerding, and R. A. Champine, "Flight Measurements with the Douglas D-558-II (BuAero No. 37974) Research Airplane Low-Speed Stalling and Lift Characteristics," NACA RM-L50G10 (1950).

[58] Jack Fischel and Jack Nugent, "Flight Determination of the Longitudinal Stability in Accelerated Maneuvers at Transonic Speeds for the Douglas D-558-II Research Airplane Including the Effects of an Outboard Wing Fence," NACA RM-L53A16 (1953); Jack Fischel, "Effect of Wing Slats and Inboard Wing Fences on the Longitudinal Stability Characteristics of the Douglas D-558-II Research Airplane in Accelerated Maneuvers at Subsonic and Transonic Speeds," NACA RM-L53L16 (1954); Jack Fischel and Cyril D. Brunn, "Longitudinal Stability Characteristics in Accelerated Maneuvers at Subsonic and Transonic Speeds of the Douglas D-558-II Research Airplane Equipped with a Leading-Edge Wing Chord-Extension," NACA RM-H54H16 (1954); M. J. Queijo, Byron M. Jaquet, and Walter D. Wolmart, "Wind-Tunnel Investigation at Low Speed of the Effects of Chordwise Wing Fences and Horizontal-Tail Position on the Static Longitudinal Stability Characteristics of an Airplane Model with a 35° Sweptback Wing," NACA Report 1203 (1954); Jack Fischel and Donald Reisert, "Effect of Several Wing Modifications on the Subsonic and Transonic Longitudinal Handling Qualities of the Douglas D-558-II Research Airplane," NACA RM-H56C30 (1956).

[59] Robert O. Rahn, "XF4D Skyray Development: Now It Can Be Told," 22nd Symposium, Society of Experimental Test Pilots, Beverly Hills, CA, Sept. 30, 1978; and Edward H. Heinemann and Rosario Rausa, Ed Heinemann: Combat Aircraft Designer (Annapolis: Naval Institute Press, 1980), p. 192. Years later, another Skyray pilot at the Naval Air Test Center experienced a similar mishap, likewise making a near-miraculous recovery; the plane was so badly stressed that it never flew again.

[60] Meyer, Flight Journal, pp. 196-198; he was nearly killed in one low-altitude, low-speed pitch-up that ended in a spin. The Cougar’s approach behavior resulted in a Langley research program flown using an F9F-7 variant, which highlighted the need for more powerful, responsive, and controllable aircraft, such as the later McDonnell F4H-1 Phantom II. See Lindsay J. Lina, Garland J. Morris, and Robert A. Champine, "Flight Investigation of Factors Affecting the Choice of Minimum Approach Speed for Carrier-Type Landings of a Swept-Wing Jet Fighter Airplane," NACA RM-L57F13 (1957).

[61] Robert C. Little, "Voodoo! Testing McAir’s Formidable F-101," Air Power History, vol. 41, no. 1 (spring 1994), pp. 6-7. In Britain, designer George Edwards likewise added anhedral (though more modest than the Phantom’s) to the Supermarine Scimitar, another pitch-up plagued swept wing fighter. See Robert Gardner, From Bouncing Bombs to Concorde: the Authorised Biography of Aviation Pioneer Sir George Edwards OM (Stroud, UK: Sutton Publishing, 2006), p. 125. Though not per se a swept wing aircraft, the Lockheed F-104 Starfighter, another T-tail design, likewise experienced tail-blanketing and consequent pitch-up, necessitating installation of a stick-kicker and the imposition of limitations on high angle-of-attack maneuvering. At the time of its design, the benefits of a low-placed tail were already recognized, and it is surprising that Clarence "Kelly" Johnson, Lockheed’s legendary designer, did not incorporate one. He certainly recognized its value afterward: when, in 1971, he proposed a lineal derivative of the F-104, the CL-1200 Lancer (subsequently designated the X-27 but never built and flown), as a lightweight NATO export fighter, it featured a low, not high, all-moving horizontal tail. For the X-27, see Jay Miller, The X-Planes: X-1 to X-45 (Hinckley, UK: Midland Publishing, 2001), pp. 284-289.

[62] See Joseph Weil, Paul Comisarow, and Kenneth W. Goodson, "Longitudinal Stability and Control Characteristics of an Airplane Model Having a 42.8° Sweptback Circular-Arc Wing with Aspect Ratio 4.00, Taper Ratio 0.60, and Sweptback Tail Surfaces," NACA RM-L7G28 (1947). Considerable debate likewise existed on whether the XS-2 should have a shoulder-mounted wing with anhedral, a midwing (like the XS-1) without any dihedral or anhedral, or a low wing with or without dihedral. Bell opted for a low wing with slight dihedral.

[63] Harold F. Kleckner, "Preliminary Flight Research on an All-Movable Horizontal Tail as a Longitudinal Control for Flight at High Mach Numbers," NACA ARR-L5C08 (Mar. 1945), p. 1.

[64] Harold F. Kleckner, "Flight Tests of an All-Movable Horizontal Tail with Geared Unbalancing Tabs on the Curtiss XP-42 Airplane," NACA TN-1139 (1946).

[65] Hubert M. Drake and John R. Carden, "Elevator-Stabilizer Effectiveness and Trim of the X-1 Airplane to a Mach Number of 1.06," NACA RM-L50G20 (1950). Despite the 1950 publication date, this report covers the results of XS-1 testing from Oct. 1946 through the first supersonic flight to M = 1.06 on Oct. 14, 1947. European designers recognized the value of such a tail layout as well. The Miles M.52, a jet-powered supersonic research airplane intemperately canceled by the British Labour government, would have incorporated similar surfaces; "This unfortunate decision," Sir Roy Fedden wrote a decade later, "cost us at least ten years in aeronautical progress." See his Britain’s Air Survival: An Appraisement and Strategy for Success (London: Cassell & Co., Ltd., 1957), p. 20.

[66] Jack D. Brewer and Jacob H. Lichtenstein, "Effect of Horizontal Tail on Low-Speed Static Lateral Stability Characteristics of a Model Having 45° Sweptback Wing and Tail Surfaces," NACA TN-2010 (1950); and Jacob H. Lichtenstein, "Experimental Determination of the Effect of Horizontal-Tail Size, Tail Length, and Vertical Location on Low-Speed Static Longitudinal Stability and Damping in Pitch of a Model Having 45° Sweptback Wing and Tail Surfaces," NACA Report 1096 (1952).

[67] William J. Alford, Jr., and Thomas B. Pasteur, Jr., "The Effects of Changes in Aspect Ratio and Tail Height on the Longitudinal Stability Characteristics at High Subsonic Speeds of a Model with a Wing Having 32.6° Sweepback," NACA RM-L53L09 (1953), p. 1.

[68] Norman M. McFadden and Donovan R. Heinle, "Flight Investigation of the Effects of Horizontal-Tail Height, Moment of Inertia, and Control Effectiveness on the Pitch-up Characteristics of a 35° Swept-Wing Fighter Airplane at High Subsonic Speeds," NACA RM-A54F21 (1955).

[69] W. Hewitt Phillips, Journey in Aeronautical Research: A Career at NASA Langley Research Center, No. 12 in the Monographs in Aerospace History Series (Washington: NASA, 1998), p. 70; for an excellent survey, see Richard E. Day, Coupling Dynamics in Aircraft: A Historical Perspective, SP-532 (Washington: NASA, 1997).

[70] Phillips, Journey in Aeronautical Research, p. 72.

[71] William H. Phillips, "Effect of Steady Rolling on Longitudinal and Directional Stability," NACA TN-1627 (1948), pp. 1-2.

[72] William H. Phillips, "Appreciation and Prediction of Flying Qualities," NACA Report No. 927 (1949), p. 32.

[73] James H. Parks, "Experimental Evidence of Sustained Coupled Longitudinal and Lateral Oscillations from a Rocket-Propelled Model of a 35° Swept Wing Airplane Configuration," NACA RM-L54D15 (1954). For more on Wallops testing, see Joseph A. Shortal, A New Dimension: Wallops Island Flight Test Range: The First Fifteen Years, RP-1028 (Washington: NASA, 1978), pp. 256-257. For the record, the model’s wingspan-to-fuselage-length ratio was 0.59, significantly lower than that of the XS-1.

[74] It is worth noting that the advanced X-1A (and X-1B and X-1D) had a wingspan-to-fuselage-length ratio of 0.79, compared with 0.90 for the XS-1, the drop model of which first encountered inertial coupling. Their longer fuselage forebody contributed further to their tendency toward lateral-directional instability.

[75] Yeager pilot report and attached transcript, Dec. 23, 1953; J. L. Powell, Jr., "X-1A Airplane Contract W33-038-ac-20062, Flight Test Progress Report No. 15, Period From 9 December through 20 December 1953," Bell Aircraft Corporation Report No. 58-980-019 (Feb. 3, 1954), both from AFFTC History Office archives. I thank the staff of the AFFTC History Office and the NASA DFRC Library and Archives for locating these and other documents.

[76] Hubert M. Drake and Wendell H. Stillwell, "Behavior of the Bell X-1A Research Airplane During Exploratory Flights at Mach Numbers Near 2.0 and at Extreme Altitude," NACA RM-H55G25 (1955), p. 10.

[77] Alfred D. Phillips and Lt. Col. Frank K. Everest, USAF, "Phase II Flight Test of the North American YF-100 Airplane USAF No. 52-5754," AFFTC TR-53-33 (1953), Appendix I, p. 8. For the differing views of fighter pilots and test pilots regarding the F-100, see Brig. Gen. Frank K. Everest, Jr., with John Guenther, The Fastest Man Alive (New York: Bantam, 1990 ed.), pp. 6, 11-13.

[78] James R. Peele, "Memorandum for Research Airplane Projects Leader [Hartley A. Soule, hereafter RAPL]: Results of Flights 2, 3, and 4 of the F-100A (52-5778) airplane" (Nov. 19, 1954), DFRC Archives.

[79] Ronald-Bel Stiffler, The History of the Air Force Flight Test Center: 1 January 1954-30 June 1954 (Edwards AFB: AFFTC, July 13, 1955), vol. 1, pp. 66-67, copy in AFFTC History Office archives.

[80] NACA High-Speed Flight Station, "Flight Experience with Two High-Speed Airplanes Having Violent Lateral-Longitudinal Coupling in Aileron Rolls," NACA RM-H55A13 (1955), p. 4.

[81] Joseph Weil, "Memo to RAPL: Visit of HSFS personnel to North American Aviation, Inc. on Nov. 8, 1954" (Nov. 19, 1954), DFRC Archives.

[82] Peele memo to RAPL, Nov. 19, 1954; for Langley REAC studies, see Charles J. Donlan [NACA LRC], "Memo for Associate Director [Floyd Thompson]: Industry-Service-NACA Conference on F-100, Dec. 16, 1954" (Dec. 28, 1954), DFRC Archives.

[83] Joseph Weil and Walter C. Williams, "Memo for RAPL: Meeting of NACA and Air Force personnel at North American Aviation, Inc. on Monday, Nov. 22, 1954, to discuss means of expediting solution of stability and control problems on the F-100A airplane" (Nov. 26, 1954), DFRC Archives; Thomas W. Finch, "Memo for RAPL: Progress report for the F-100A (52-5778) airplane for the period Nov. 1 to Nov. 30, 1954" (Dec. 20, 1954), DFRC Archives; Hubert M. Drake, Thomas W. Finch, and James R. Peele, "Flight Measurements of Directional Stability to a Mach Number of 1.48 for an Airplane Tested with Three Different Vertical Tail Configurations," NACA RM-H55G26 (1955); Marion H. Yancey, Jr., and Maj. Stuart R. Childs, USAF, "Phase IV Stability Tests of the F-100A Aircraft, USAF S/N 52-5767," AFFTC TR-55-9 (1955); 1st Lt. David C. Leisy, USAF, and Capt. Hugh P. Hunerwadel, USAF, "ARDC F-100D Category II Performance Stability and Control Tests," AFFTC TR-58-27 (1958).

[84] See, for example, Robert G. Hoey and Capt. Iven C. Kincheloe, USAF, "ARDC F-104A Stability and Control," AFFTC TR-58-14 (1958); and Capt. Slayton L. Johns, USAF, and Capt. James W. Wood, USAF, "ARDC F-104A Stability and Control with External Stores," AFFTC TR-58-14, Addendum 1 (July 1959).

[85] For example, Thomas R. Sisk and William H. Andrews, "Flight Experience with a Delta-Wing Airplane Having Violent Lateral-Longitudinal Coupling in Aileron Rolls," NACA RM-H55H03 (1955).

[86] William H. Phillips [NACA LRC], "Memo for Associate Director: Flight program for F-100A airplane" (Aug. 10, 1953), DFRC Archives. Even odder, it was Phillips who had identified inertial coupling in TN-1627 in 1948!

[87] The best known was Capt. Milburn "Mel" Apt, who died in late 1956. His Bell X-2 went out of control as he turned back to Edwards after having attained Mach 3.2, possibly because lagging instrumentation readings led him to conclude he was flying at a slower speed. Undoubtedly the nearly decade-old design of the X-2 contributed to its violent coupling tendencies. It is sobering that in 1947 NACA had evaluated some design options (tail location, vertical fin design) that, had Bell incorporated them on the X-2, might have turned Apt’s accident into an incident. See Ronald Bel Stiffler, The Bell X-2 Rocket Research Aircraft: The Flight Test Program (Edwards AFB: Air Force Flight Test Center, 1957); and Richard E. Day and Donald Reisert, "Flight Behavior of the X-2 Research Airplane to a Mach Number of 3.20 and a Geometric Altitude of 126,200 Feet," NACA TM-X-137 (1959).

[88] Kenneth Owen, Concorde: Story of a Supersonic Pioneer (London: Science Museum, 2001), pp. 21-60; Andrew Nahum, "The Royal Aircraft Establishment from 1945 to Concorde," in Robert Bud and Philip Gummett, eds., Cold War, Hot Science: Applied Research in Britain’s Defence Laboratories, 1945-1990 (London: Science Museum, 1999), pp. 29-58; and Andersson, Saab Aircraft, pp. 124-129.

[89] Even the official Air Force history of the service’s postwar fighter development repeats the canard, though it does acknowledge that "low-aspect-ratio wing forms were also studied by the U. S. National Advisory Committee for Aeronautics." See Marcelle Size Knaack, Post-World War II Fighters 1945-1973, vol. 1 of Encyclopedia of U. S. Air Force Aircraft and Missile Systems (Washington: Office of Air Force History, 1978), p. 159, no. 1.

[90] Letter, Adolph Burstein to Richard P. Hallion, Jan. 25, 1972. Despite his "Germanic" name, Burstein, one of the XF-92A’s designers, was not a German scientist or engineer who came to America after 1945. Rather, he was a Russian émigré from St. Petersburg who had come to the United States in 1925.

[91] See Hallion, "Lippisch, Gluhareff, and Jones," and R. P. Hallion, "Convair’s Delta Alpha," Air Enthusiast Quarterly, No. 2 (1976).

[92] Edward H. Heinemann, "Design of High-Speed Aircraft," a paper presented at the Fifth International Aeronautical Conference, Royal Aeronautical Society-Institute of the Aeronautical Sciences, Los Angeles, CA, June 20-24, 1955, p. 3. Copy from the Boeing-McDonnell Douglas Archives.

[93] Ltr., Maj. Howard C. Goodell, USAF, to Paul E. Garber, "DM-1 Glider Disposal," Nov. 28, 1949, in Gluhareff Dart accession file, National Air and Space Museum.

[94] For Langley’s progressive evaluation and modification of the DM-1, see two reports by Herbert A. Wilson, Jr., and J. Calvin Lovell, "Full Scale Investigation of the Maximum Lift and Flow Characteristics of an Airplane Having Approximately Triangular Plan Form," NACA RM-L6K20 (1947); and "Langley Full-Scale Tunnel Investigation of Maximum Lift and Stability Characteristics of an Airplane Having Approximately Triangular Plan Form (DM-1 Glider)," NACA RM-L7F16 (1947). Changes are detailed in RM-L7F16, Fig. 4. The closest expression of Germanic delta philosophy in America was not a Convair delta, but a Douglas one: the Navy-Marine F4D-1 Skyray fighter. Its design was greatly influenced by German tailless and swept wing reports Douglas engineers L. Eugene Root and Apollo M. O. Smith had discovered while assigned to an Allied technical intelligence team examining the Messerschmitt advanced projects office at Oberammergau and interviewing its senior personnel, particularly chief designer Woldemar Voigt; I wish to acknowledge with gratitude notes on their experiences received in 1972 from both the late L. Eugene Root and A. M. O. Smith. See also Cebeci, ed., Legacy of a Gentle Genius, pp. 30-36.

[95] R. M. Cross, "Characteristics of a Triangular-Winged Aircraft: 2: Stability and Control," in NACA, Conference on Aerodynamic Problems of Transonic Airplane Design (1947), pp. 163-186, and Figs. 6 and 12. See also Edward F. Whittle, Jr., and J. Calvin Lovell, "Full-Scale Investigation of an Equilateral Triangular Wing Having 10-percent-Thick Biconvex Airfoil Sections," NACA RM-L8G05 (1948), Fig. 2.

[96] Crossfield with Blair, Always Another Dawn, p. 167; Thomas R. Sisk and Duane O. Muhleman, "Longitudinal Stability Characteristics in Maneuvering Flight of the Convair XF-92A Delta-Wing Airplane Including the Effects of Wing Fences," NACA RM-H4J27 (1955).

[97] Everest with Guenther, Fastest Man Alive, p. 109.

[98] R. T. Jones, "Characteristics of a Configuration with a Large Angle of Sweepback," in NACA, Conference on Aerodynamic Problems of Transonic Airplane Design (1947), pp. 165-168, Figs. 1-6.

[99] Charles F. Hall and John C. Heitmeyer, "Aerodynamic Study of a Wing-Fuselage Combination Employing a Wing Swept Back 63°—Characteristics at Supersonic Speeds of a Model with the Wing Twisted and Cambered for Uniform Load," NACA RM-A9J24 (1950).

[100] Though no transport or military aircraft ever flew with such a slender swept wing, just such a configuration was subsequently employed on the largest swept wing tailless vehicle ever flown, the Northrop Snark intercontinental cruise missile. Though the Snark did not enter operational service for a variety of other reasons, it did demonstrate that, aerodynamically, such a wing configuration was eminently suitable for long-range transonic cruising flight.

[101] Charles F. Hall, "Lift, Drag, and Pitching Moment of Low-Aspect Ratio Wings at Subsonic and Supersonic Speeds," NACA RM-A53A30 (1953). For the views of an Ames onlooker, see Hartman, Adventures in Research, pp. 202-207.

[102] Quoted in William E. Andrews, Thomas R. Sisk, and Robert W. Darville, "Longitudinal Stability Characteristics of the Convair YF-102 Airplane Determined from Flight Tests," NACA RM-H56I17 (1956), p. 1; see also Edwin J. Saltzman, Donald R. Bellman, and Norman T. Musialowski, "Flight-Determined Transonic Lift and Drag Characteristics of the YF-102 Airplane With Two Wing Configurations," NACA RM-H56E08 (1956).

[103] Although it still experienced some troubled sailing: like most of the Century series fighters, the F-102 had other, more tortuous acquisition and program management problems unrelated to its aerodynamics that contributed to its delayed service entry. See Thomas A. Marschak, The Role of Project Histories in the Study of R&D, Rand report P-2850 (Santa Monica: The Rand Corporation, 1965), pp. 66-81; and Knaack, Fighters, pp. 163-167.

[104] H. Julian Allen and A. J. Eggers, Jr., "A Study of the Motion and Aerodynamic Heating of Ballistic Missiles Entering the Earth’s Atmosphere at High Supersonic Speeds," NACA TR-1381 (1953); Hartman, Adventures in Research, pp. 215-218.

[105] A. J. Eggers, Jr., and Clarence A. Syvertson, "Aircraft Configurations Developing High Lift-Drag Ratios at High Supersonic Speeds," NACA RM-A55L05 (1956), p. 1.

[106] Ames staff, "Preliminary Investigation of a New Research Airplane for Exploring the Problems of Efficient Hypersonic Flight" (Jan. 18, 1957), copy in the archives of the Historical Office, NASA Johnson Space Center, Houston, TX. Drawings and more data on this concept can be found in Richard P. Hallion, ed., From Max Valier to Project PRIME (1924-1967), vol. 1 of The Hypersonic Revolution: Case Studies in the History of Hypersonic Technology (Washington: USAF, 1998), pp. II-vi to II-x. Round One, in NACA parlance, was the original X-1 and D-558 programs. Round Two was the X-15. Round Three was what eventually emerged as the X-20 Dyna-Soar development effort.

[107] See John V. Becker, "The Development of Winged Reentry Vehicles, 1952-1963," in Hallion, ed., Hypersonic Revolution, vol. 1, pp. 379-448. It is worth noting that one significant aircraft project did use the Eggers-Syvertson wing but in a modified form: the massive North American XB-70A Valkyrie Mach 3+ experimental bomber. The XB-70 had its six engines, landing gear, and weapons bays located under the wing in a large wedge-shaped centerbody. The long, cobralike nose ran forward from the wing and featured canard control surfaces. Its sharply swept delta wing had outer wing panels that could entrap the lateral momentum off the ventral centerbody and transfer it downward to furnish compression lift.

[108] For further detail, see R. Dale Reed with Darlene Lister, Wingless Flight: The Lifting Body Story, SP-4220 (Washington: NASA, 1997); Milton O. Thompson and Curtis Peebles, Flying Without Wings: NASA Lifting Bodies and The Birth of the Space Shuttle (Washington: Smithsonian Institution Press, 1999); and Johnny G. Armstrong, "Flight Planning and Conduct of the X-24B Research Aircraft Flight Test Program," Air Force Flight Test Center TR-76-11 (1977).

[109] Spacecraft Design Division, Summary of MSC Shuttle Configurations External HO Tanks! (Houston: Manned Spacecraft Center, June 30, 1972, rev. ed.), passim. I thank the late Dr. Edward C. Ezell for making a copy of this document available for my research. The range of configurations and wind tunnel testing done in support of Shuttle development is in A. Miles Whitnah and Ernest R. Hillje, "Space Shuttle Wind Tunnel Testing Summary," NASA Reference Publication 1125 (1984), esp. pp. 5-7. See also Alfred C. Draper, Melvin L. Buck, and William H. Goesch, "A Delta Shuttle Orbiter," Astronautics & Aeronautics, vol. 9, no. 1 (Jan. 1971), pp. 26-35 (I acknowledge with gratitude the assistance and advice of the late Al Draper, while we both worked at Aeronautical Systems Division, Wright-Patterson AFB, in 1986-1987); Joseph Weil and Bruce G. Powers, "Correlation of Predicted and Flight Derived Stability and Control Derivatives with Particular Application to Tailless Delta Wing Configurations," NASA TM-81361 (July 1981); and J. P. Loftus, Jr., et al., "The Evolution of the Space Shuttle Design," a reference paper prepared for the Rogers Commission, 1986 (copy in NASA JSC History Office archives). The evolution of the Shuttle configuration is examined more broadly in Richard P. Hallion and James O. Young, "Space Shuttle: Fulfillment of a Dream," in Hallion, ed., From Scramjet to the National Aero-Space Plane (1964-1986), vol. 2 of The Hypersonic Revolution: Case Studies in the History of Hypersonic Technology (Washington: USAF, 1998), pp. 947-1173.

[110] The best survey of v-g origins remains Robert L. Perry's Innovation and Military Requirements: A Comparative Study, Rand Report RM-5182PR (Santa Monica: The Rand Corporation, 1967), upon which this account is based.

[111] The history of the X-5 is examined minutely in Warren E. Green's The Bell X-5 Research Airplane (Wright-Patterson AFB: Wright Air Development Center, March 1954). For NACA work, see LRC staff, "Summary of NACA/NASA Variable-Sweep Research and Development Leading to the F-111 (TFX)," Langley Working Paper LWP-285 (Dec. 22, 1966).

[112] Corwin H. Meyer, "Wild, Wild Cat: The XF10F," 20th Symposium, The Society of Experimental Test Pilots, Beverly Hills, CA, Sept. 15, 1976.

[113] For the meeting, see LRC staff, "Summary of NACA/NASA Variable-Sweep Research and Development," p. 8; and J. E. Morpurgo, Barnes Wallis: A Biography (Harmondsworth, UK: Penguin Books, 1973), p. 423. NASA Langley Photograph L58-771a, dated Nov. 13, 1958, documents the Stack-Wallis meeting; it is also catalogued as NASA LaRC image EL-2008-00001.

[114] LRC, "Summary of NACA/NASA Variable-Sweep Research"; see also William J. Alford, Jr., and William P. Henderson, "An Exploratory Investigation of Variable-Wing-Sweep Airplane Configurations," NASA TM-X-142 (1959); William J. Alford, Jr., Arvo A. Luoma, and William P. Henderson, "Wind-Tunnel Studies at Subsonic and Transonic Speeds of a Multiple-Mission Variable-Wing-Sweep Airplane Configuration," NASA TM-X-206 (1959); and Gerald V. Foster and Odell A. Morris, "Aerodynamic Characteristics in Pitch at a Mach Number of 1.97 of Two Variable-Wing-Sweep V/STOL Configurations with Outboard Wing Panels Swept Back 75°," NASA TM-X-322 (1960).

[115] Morpurgo, Wallis, p. 422, and Derek Wood, Project Cancelled: British Aircraft that Never Flew (Indianapolis: The Bobbs-Merrill Company, Inc., 1975), pp. 182-195. After the Nov. 1958 meeting, NASA tunnel tests revealed serious deficiencies in Wallis's tailless concept, which Stack and others reported back to Vickers in June 1959. In short, the outboard pivot was but one element necessary for making a successful v-g aircraft; others were provision for a conventional tail and design of a practicable airframe. Wallis had an idea, but it took Alford and Polhamus and other NASA researchers to refine it and render it achievable.

[116] NASA F-111 tunnel research, analysis, and support is detailed in Testimony of Edward C. Polhamus, in U. S. Senate, TFX Contract Investigation (Second Series): Hearings Before the Permanent Subcommittee on Investigations of the Committee on Government Operations, United States Senate, 91st Congress, 2nd Session, Part 2 (Washington: GPO, 1970), pp. 339-363; for the F-111 in Desert Storm, see Tom Clancy with Gen. Chuck Horner, Every Man a Tiger (New York: G. P. Putnam's Sons, 1999), pp. 318, 417, 424, and 450.

[117] See Joseph R. Chambers, Partners in Freedom: Contributions of the Langley Research Center to U. S. Military Aircraft of the 1990s, SP-2000-4519 (Washington: NASA, 2000), which treats these and other programs in great and authoritative detail.

[118] Robert W. Kress, "Variable Sweep Wing Design," AIAA Paper No. 83-1051 (1983) is an excellent survey. The Su-24 was clearly F-111 inspired, and the Tu-160 was embarrassingly similar in configuration to the American B-1.

[119] John P. Campbell and Hubert M. Drake, "Investigation of Stability and Control Characteristics of an Airplane Model with Skewed Wing in the Langley Free-Flight Tunnel," NACA TN-1208 (May 1947), p. 10.

[120] Richard P. Hallion and Michael H. Gorn, On the Frontier: Experimental Flight at NASA Dryden (Washington: Smithsonian Books, 2002), pp. 256-260, and personal recollections of the program from the time.

[121] De E. Beeler, Donald R. Bellman, and John H. Griffith, "Flight Determination of the Effects of Wing Vortex Generators on the Aerodynamic Characteristics of the Douglas D-558-I Airplane," NACA RM-L51A23 (1951).

[122] All three bore the imprint of Richard Whitcomb and thus, in this survey, are not examined in detail, since his work is more thoroughly treated in a companion essay by Jeremy Kinney.

[123] LRC staff, "The Supersonic Transport: A Technical Summary," NASA TN-D-423 (1960), p. 93; this was the summary report of the briefings presented the previous fall to Quesada. NASA research on supersonic cruise is the subject of a companion essay in this study, by William Flanagan, and Whitcomb's work is detailed in the previously cited Kinney study in this volume.

[124] In FY 1968, NASA expended $10.8 million in then-year dollars on SST research at Langley, Ames, and Lewis, against a total aeronautics research expenditure of $42.9 million at those Centers. See Testimony of James E. Webb in U. S. Senate, Aeronautical Research and Development Policy: Hearings Before the Committee on Aeronautical and Space Sciences, United States Senate, 90th Congress, 1st Session (Washington: GPO, 1967), p. 39.

[125] Lyndon B. Johnson, "President's Message on Transportation," Mar. 2, 1966, reprinted in Legislative Reference Service of the Library of Congress, Policy Planning for Aeronautical Research and Development: Staff Report Prepared for Use of the Committee on Aeronautical and Space Sciences United States Senate by the Legislative Reference Service Library of Congress, Document No. 90, U. S. Senate, 89th Congress, 2nd Session (Washington: GPO, 1966), pp. 50-51.

[126] For various perspectives on Anglo-French-Soviet-American SST development, see Kenneth Owen, Concorde: Story of a Supersonic Pioneer (London: Science Museum, 2001); Howard Moon, Soviet SST: The Technopolitics of the Tupolev Tu-144 (New York: Orion Books, 1989); R. E. G. Davies, Supersonic (Airliner) Non-Sense: A Case Study in Applied Market Research (McLean, VA: Paladwr Press, 1998); Mel Horwitch, Clipped Wings: The American SST Conflict (Cambridge: The MIT Press, 1982); and Eric M. Conway, High-Speed Dreams: NASA and the Technopolitics of Supersonic Transportation, 1945-1999 (Baltimore: The Johns Hopkins Press, 2005).

[127] Deep stall is a dangerous condition wherein an airplane pitches to a high angle of attack, stalls, and then descends in a stabilized stalled attitude, impervious to corrective control inputs. It is more typically encountered by swept wing T-tail aircraft, and one infamous British accident, to a BAC 1-11 airliner, claimed the life of a crack flight-test crew captained by the legendary Mike Lithgow, an early supersonic and swept wing pioneer.

[128] Langley's SCAT studies are summarized in David A. Anderton, Sixty Years of Aeronautical Research, 1917-1977, EP-145 (Washington: NASA, 1978), pp. 54-58. Relevant reports on specific configurations and predecessors include: Donald D. Baals, Thomas A. Toll, and Owen G. Morris, "Airplane Configurations for Cruise at a Mach Number of 3," NACA RM-L58E14a (1958); Odell A. Morris and A. Warner Robins, "Aerodynamic Characteristics at Mach Number 2.01 of an Airplane Configuration Having a Cambered and Twisted Arrow Wing Designed for a Mach Number of 3.0," NASA TM-X-115 (1959); Cornelius Driver, M. Leroy Spearman, and William A. Corlett, "Aerodynamic Characteristics at Mach Numbers From 1.61 to 2.86 of a Supersonic Transport Model With a Blended Wing-Body, Variable-Sweep Auxiliary Wing Panels, Outboard Tail Surfaces, and a Design Mach Number of 2.2," NASA TM-X-817 (1963); Odell A. Morris and James C. Patterson, Jr., "Transonic Aerodynamic Characteristics of Supersonic Transport Model With a Fixed, Warped Wing Having 74° Sweep," NASA TM-X-1167 (1965); Odell A. Morris and Roger H. Fournier, "Aerodynamic Characteristics at Mach Numbers 2.30, 2.60, and 2.96 of a Supersonic Transport Model Having Fixed, Warped Wing," NASA TM-X-1115 (1965); A. Warner Robins, Odell A. Morris, and Roy V. Harris, Jr., "Recent Research Results in the Aerodynamics of Supersonic Vehicles," AIAA Paper 65-717 (1965); Donald D. Baals, A. Warner Robins, and Roy V. Harris, Jr., "Aerodynamic Design Integration of Supersonic Aircraft," AIAA Paper 68-1018 (1968); Odell A. Morris, Dennis E. Fuller, and Carolyn B. Watson, "Aerodynamic Characteristics of a Fixed Arrow-Wing Supersonic Cruise Aircraft at Mach Numbers of 2.30, 2.70, and 2.95," NASA TM-78706 (1978); and John P. Decker and Peter F. Jacobs, "Stability and Performance Characteristics of a Fixed Arrow Wing Supersonic Transport Configuration (SCAT 15F-9898) at Mach Numbers from 0.60 to 1.20," NASA TM-78726 (1978).

[129] Harry J. Hillaker, "The F-16: A Technology Demonstrator, a Prototype, and a Flight Demonstrator," AIAA Paper No. 83-1063 (1983). The "XL" designation for the cranked-arrow F-16 reflected Harry Hillaker's passionate interest in golf, for it echoed the name of a particularly popular long-distance golf ball, the Top Flite XL. See also Chambers, Innovation in Flight, pp. 42, 48, 58-59.

[130] For Junkers, see Hugo Junkers, Gleitflieger mit zur Aufnahme von nicht Auftrieb erzeugenden Teilen dienenden Hohlkörpern [Glider with Hollow Bodies Serving to Accommodate Non-Lift-Producing Parts], Patentschrift Nr. 253788, Klasse 77h, Gruppe 5 (Berlin: Reichspatentamt, Nov. 14, 1912). For Liebeck, see Robert H. Liebeck, Mark A. Page, Blaine K. Rawdon, Paul W. Scott, and Robert A. Wright, "Concepts for Advanced Subsonic Transports," NASA CR-4624 (1994); Robert H. Liebeck, "Design of the Blended Wing Body Subsonic Transport," Journal of Aircraft, vol. 41, no. 1 (Jan.-Feb. 2004), pp. 10-25; and Chambers, Innovation in Flight, pp. 86-92.

[131] These included heavy-lift cargo, air-refueling, and other military missions rather than use as a civil airliner. See NASA LRC, "The Blended-Wing-Body: Super Jumbo Jet Concept Would Carry 800 Passengers," NASA Facts, FS-1997-07-24-LaRC (July 1997); and NASA LRC, "The Blended Wing Body: A Revolutionary Concept in Aircraft Design," NASA Facts, FS-2001-04-24-LaRC (Apr. 2001). For an early appreciation of the military value of BWB designs, see Gene H. McCall, et al., Aircraft & Propulsion, a volume in the New World Vistas: Air and Space Power for the 21st Century series (Washington: HQ USAF Scientific Advisory Board, 1995), p. 6.