Dryden Flight Research Center

NASA Dryden has a deserved reputation as a flight research and flight-testing center of excellence. Its personnel have been technically responsible for flight-testing every significant high-performance aircraft since the advent of the world’s first supersonic research airplane, the Bell XS-1. When this facility first became part of the NACA, as the Muroc Flight Test Unit in the late 1940s, there was no overall engineering functional organization. There was a small team attached to each test aircraft, consisting of a project engineer, an engineer, and “computers” (highly skilled women mathematicians). There were also three supporting groups: Flight Operations (pilots, crew chiefs, and mechanics), Instrumentation, and Maintenance. By 1954, however, the High-Speed Flight Station (as it was then called) had been organized into four divisions: Research, Flight Operations, Instrumentation, and Administrative. The Research division included three branches: Stability & Control, Loads, and Performance.

Shortly thereafter, Instrumentation became Data Systems, to include Computing and Simulation (sometimes together, sometimes separately). There were changes to the organization, mostly gradual, after that, but these essential functions were always present from that time forward.[862] There are approximately 50 people in the structures, structural dynamics, and loads disciplines.[863]

Analysis efforts at Dryden include establishing safety of flight for the aircraft tested there, flight-test and ground-test data analysis, and the development and improvement of computational methods for prediction. Commercially available codes are used when they meet the need, and in-house development is undertaken when necessary. Methods development has been conducted in the fields of general finite element analysis, reentry problems, fatigue and structural life prediction, structural dynamics and flutter, and aeroservoelasticity.

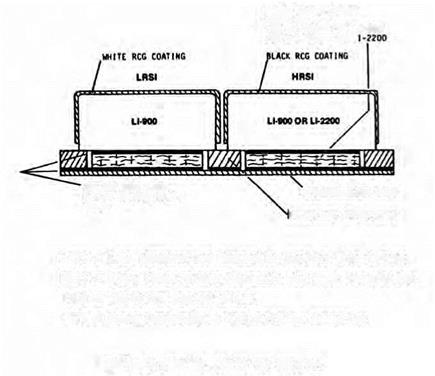

Reentry heating has been an important problem at Dryden since the X-15 program. Extensive thermal research was conducted during the NASA YF-12 flight project, which is discussed in a later section. One very significant application of thermal-structural predictive methods was the thermal modeling of the Space Shuttle orbiter, using the Lewis-developed Structural Performance and Redesign (SPAR) finite element code. Prior to first flight, the conditions of the boundary layer on various parts of the vehicle in actual reentry conditions were not known. SPAR was used to model the temperature distribution in the Shuttle structure for three different cases of aerodynamic heating: laminar boundary layer, turbulent boundary layer, and separated flow. Analysis was based on the Space Transportation System trajectory 1 (STS-1) flight profile, and results were compared with temperature time histories from the first mission. The analysis showed that the flight data were best matched under the assumption of extensive laminar flow on the lower surface and partial laminar flow on the upper surface. This was one piece of evidence confirming the important realization that laminar boundary layers could exist under conditions of practical interest for hypersonic flight.[864]

Dryden has a unique thermal loads laboratory, large enough to house an SR-71 or similar-sized aircraft and heat the entire airframe to temperatures representative of high-speed flight conditions. This facility is used to calibrate flight instrumentation at expected temperatures and also to independently apply thermal and structural loads for the purpose of validating predictive methods or gaining a better understanding of the effects of each. It was built during the X-15 program in the 1960s and is still in use today.

Aeroservoelastics—the interaction of air loads, flexible structures, and active control systems—has become increasingly important since the late 1970s. As active fly-by-wire control entered widespread use in high-performance aircraft, engineers at Dryden worked to integrate control system modeling with finite-element structural analysis and aerodynamic modeling. Structural Analysis Routines (STARS) and other programs were developed and improved from the 1980s through the present. Recent efforts have addressed the modeling of uncertainty and adaptive control.[865]

At Dryden, much of the technology transfer to industry comes less from the release of codes developed there than from the interaction of the contractors who develop the aircraft with the technical groups at Dryden who participate in the analysis and testing. Dryden has been involved, for example, in aeroservoelastic analysis of the X-29; F-15s and F-18s in standard and modified configurations (including physical airframe modifications and/or modifications to the control laws); High Altitude Long Endurance (HALE) unpiloted vehicles, which have their own set of challenges, usually flying at lower speeds but also having longer and more flexible structures than fighter-class aircraft; and many other aircraft types.
