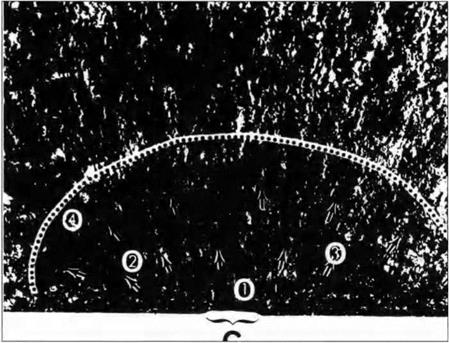

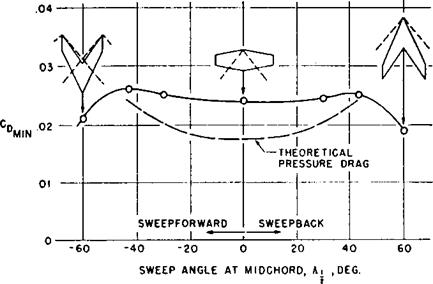

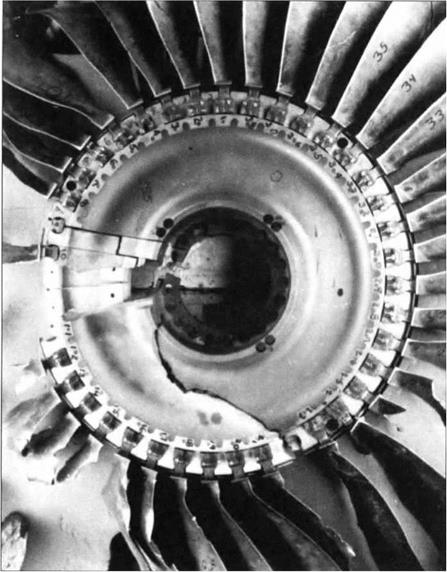

United Airlines flight 232 was 37,000 feet above Iowa traveling at 270 knots on 19 July 1989, when, according to the NTSB report, the flightcrew heard an explosion and felt the plane vibrate and shudder. From instruments, the crew of the DC-10-10 carrying 285 passengers could see that the number 2, tail-mounted engine was no longer delivering power (see figure 5). The captain, Al Haynes, ordered the engine shutdown checklist, and first officer Bill Records reported first that the airplane’s

Figure 5. DC-10 Engine Arrangement. Source: National Transportation Safety Board, Aircraft Accident Report, United Airlines Flight 232, McDonnell Douglas DC-10-10, Sioux Gateway Airport, Sioux City, Iowa, July 19, 1989, p. 2, figure 1. Hereafter, NTSB-232.

normal hydraulic systems gauges had just gone to zero. Worse, he notified the captain that the airplane was no longer controllable as it slid into a descending right turn. Even massive yoke movements were futile as the plane reached 38 degrees of right roll. It was about to flip on its back. Pulling power completely off the number 1 engine, Haynes jammed the number three throttle (right wing engine) to the firewall, and the plane began to level off. “I have been asked,” Haynes later wrote, “how we thought to do that; I do not have the foggiest idea.”28 No simulation training, no manual, and no airline publication had ever contemplated a triple hydraulic failure;29 understanding how it could have happened became the centerpiece of an extraordinarily detailed investigation, one that, like the inquiry into the crash of Air Florida 90, surfaced the irresolvable tension between a search for a localized, procedural error and fault lines embedded in a wide array of industries, design philosophies, and regulations.

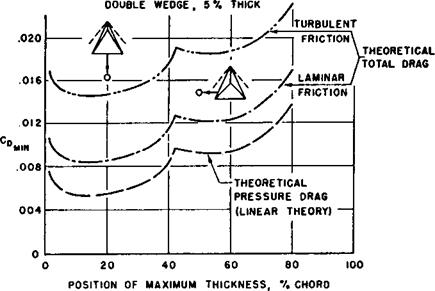

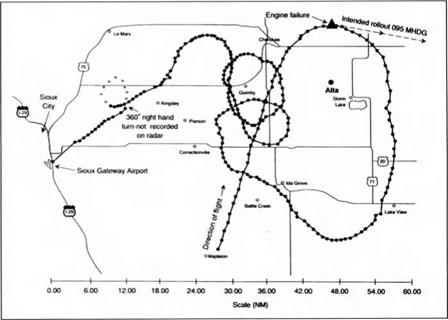

At 15:20, the DC-10 crew radioed Minneapolis Air Route Traffic Control Center declaring an emergency and requesting vectors to the nearest airport.30 Flying in a first class passenger seat was Dennis Fitch, a training check airman on the DC-10, who identified himself to a flight attendant, and volunteered to help in the cockpit. At 15:29 Fitch joined the team, where Haynes simply told him: “We don’t have any controls.” Haynes then sent Fitch back into the cabin to see what external damage, if any, he could see through the windows. Meanwhile, second officer Dudley Dvorak was trying over the radio to get San Francisco United Airlines Maintenance to help, but without much success: “He’s not telling me anything.” Haynes answered, “We’re not gonna make the runway fellas.” What Fitch had to say on his return was also not good: “Your inboard ailerons are sticking up,” presumably held up by aerodynamic forces alone, and the spoilers were down and locked. With flight attendants securing the cabin at 1532:02, the captain said, “They better hurry we’re gonna have to ditch.” Under the captain’s instruction, Fitch began manipulating the throttles to steer the airplane and keep it upright.31

Now it was time to experiment. Asking Fitch to maintain a 10-15° turn, the crew began to calculate speeds for a no-flap, no-slat landing. But the flight engineer’s response – 200 knots for clean maneuvering speed – was a parameter, not a procedure. Their DC-10-10 had departed from its very status as an airplane. It was an object lacking even ailerons, the fundamental flight controls that were, in the eyes of many historians of flight, Orville and Wilbur Wright’s single most important innovation. And that wasn’t all: flight 232 had no slats, no flaps, no elevators, no brakes. Haynes was now in command of an odd, unproven hybrid, half airplane and half lunar lander, controlling motion through differential thrust. Among other difficulties, the airplane was oscillating longitudinally with a period of 40-60 seconds. In normal flight the plane will follow such long-period swings, accelerating on the downswing, picking up speed and lift, then rising with slowing airspeed. But in normal flight, these variations in pitch (phugoids) naturally damp out around the equilibrium position defined by the elevator trim. Here, however, the thrust of the number one and number three engines, which were below the center of gravity, had no compensating force above the center of gravity (since the tail-mounted number two engine was now dead and gone). These phugoids could only be damped by a difficult and counter-intuitive out-of-phase application of power on the


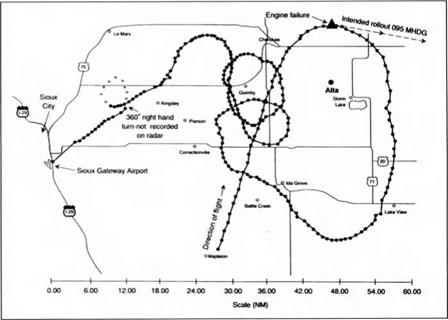

Figure 6. Ground Track of Flight 232. Source: NTSB-232, p. 4, figure 2.


downswing and, even more distressingly, throttling down on the slowing part of the cycle.32 At the same time, the throttles had become the only means of controlling airspeed, vertical speed, and direction: the flight wandered over several hundred miles as the crew began to sort out how they would attempt a landing (see figure 6).
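A rough check on why the oscillation was so slow comes from Lanchester's classical approximation for the phugoid. This estimate is not drawn from the NTSB record; it is a back-of-the-envelope sketch that assumes an idealized aircraft trading kinetic for potential energy at a constant angle of attack, so that the period $T$ depends only on the true airspeed $V$ and the gravitational acceleration $g$:

$$
T \approx \pi\sqrt{2}\,\frac{V}{g}
$$

Taking the flight engineer's 200 knots as a representative airspeed (an assumption; about 103 m/s) and $g = 9.81\ \mathrm{m/s^2}$:

$$
T \approx 4.44 \times \frac{103\ \mathrm{m/s}}{9.81\ \mathrm{m/s^2}} \approx 47\ \mathrm{s}
$$

That figure falls inside the 40-60 second range reported for flight 232, which suggests the crew was contending with the airplane's natural long-period mode rather than with anything peculiar to the damage.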

To a flight attendant, Haynes explained that he expected to make a forced landing, allowed that he was not at all sure of the outcome, and that he expected serious difficulty in evacuating the airplane. His instructions were brief: on his words, “brace, brace, brace,” passengers and attendants should ready themselves for impact. At 15:51 Air Traffic Controller Kevin Bauchman radioed flight 232 requesting a wide turn to the left to enter onto the final approach for runway 31 – and to keep the quasi-controllable 370,000 pound plane clear of Sioux City itself. However difficult control was, Haynes concurred: “Whatever you do, keep us away from the city.” Then, at 15:53 the crew told the passengers they had about four minutes before the landing. By 15:58 it became clear their original plan to land on the 9,000 foot runway 31 would not happen, though they could make the closed runway 22. Scurrying to redeploy the emergency equipment that was lined up on 22 – directly in the landing path of the quickly approaching jet – Air Traffic Control began to order their last scramble, as tower controller Bauchman told them: “That’ll work sir, we’re gettin’ the equipment off the runway, they’ll line up for that one.” Runway 22 was only 6,600 feet long, but terminated in a field. It was the only runway they would have a chance to make and there would only be one chance. At


1559:44 the ground proximity warning came on… then Haynes called for the throttles to be closed, to which check airman Fitch responded “nah I can’t pull ‘em off or we’ll lose it that’s what’s turnin’ ya.” Four seconds later, the first officer began calling out “left Al [Haynes],” “left throttle,” “left,” “left,” “left.” As they plunged towards the runway, the right wing dipped and the nose dropped. Impact was at 1600:16 as the plane’s right wing tip, then the right main landing gear, slammed into the concrete. Cartwheeling and igniting, the main body of the fuselage lodged in a corn field to the west of runway 17/35, and began to burn. The crew compartment and forward side of the fuselage settled east of runway 17/35. Within a few seconds, some passengers were walking, dazed and hurt, down runway 17; others gathered themselves up in the midst of seven-foot corn stalks, disoriented and lost. A powerful fire began to burn along the exterior of the fuselage fragment, and emergency personnel launched an all-out barrage of foam on the center section as surviving passengers emerged. One passenger went back into the burning wreckage to pull out a crying infant. As for the crew, for over thirty-five minutes they lay wedged in a waist-high crumpled remnant of the cockpit – rescue crews who saw the airplane fragment assumed anyone inside was dead. When he regained consciousness, Fitch was saying something was crushing his chest; dirt was in the fragmented cockpit. Second officer Dvorak found some loose insulation which he waved out a hole in the aluminum to attract attention. Finally, emergency personnel pried loose the four injured crewmembers (Haynes, Records, Dvorak, and Fitch) and brought them to the local hospital.33 Despite the loss of over a hundred lives, it was, in the view of many pilots, the single most impressive piece of airmanship ever recorded. Without any functional control surface, the crew saved 185 of the 296 people aboard flight 232.


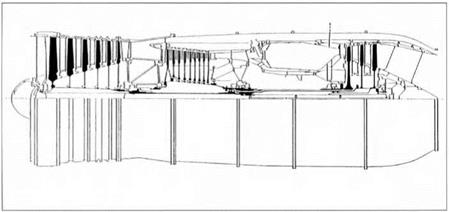

Figure 8. Planform Elevator Hydraulics. Source: NTSB-232, p. 34, figure 14.

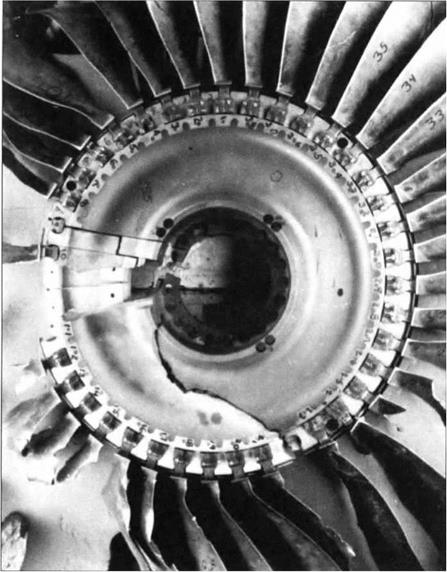

From the start, the search for probable cause centered on the number 2 (tail-mounted) engine. Not only had the crew witnessed the destruction wrought at the tail end of the plane, but Sioux City residents had photographed the damaged plane as it neared the airport; the missing conical section of the tail was immortalized in photographs. And the stage 1 fan (see figure 7), conspicuously missing from the number 2 engine after the crash, was almost immediately a prime suspect. It became, in its own right, an object of localized, historical inquiry.

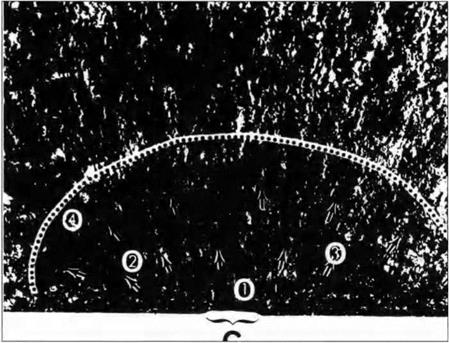

From records, the NTSB determined that this particular item was brought into the General Electric Aircraft Engines facility between 3 September and 11 December 1971. Once General Electric had mounted the titanium fan disk in an engine, they shipped it to the Douglas Aircraft Company on 22 January 1972 where it began life on a new DC-10-10. For seventeen years, the stage 1 fan worked flawlessly, passing six fluorescent penetrant inspections, clocking 41,009 engine-on hours and surviving 15,503 cycles (a cycle is a takeoff and landing).34 But the fan did fail on the afternoon of 19 July 1989, and the results were catastrophic. When the tail engine tore itself apart, one hydraulic system was lost. With tell-tale traces of titanium, shrapnel-like fan blades left their distinctive marks on the empennage (see figure 8). Worst of all, the flying titanium severed the two remaining hydraulic lines.

With this damage, what seemed an impossible circumstance had come to pass: in a flash, all three hydraulic systems were gone. This occurred despite the fact that each of the three independent systems was powered by its own engine. Moreover, each system had a primary and backup pump, and the whole system was further backstopped by an air-powered pump powered by the slipstream. Designers even physically isolated the hydraulic lines one from the other.35 And again, as in the Air Florida 90 accident, the investigators wanted to push back and localize the causal structure. In Flight 90, the NTSB passed from the determination that there was low thrust to why there was low thrust to why the captain had failed to command more thrust. Now they wanted to pass from the fact that the stage 1 fan disk had disintegrated to why it had blown apart, and eventually to how the faulty fan disk could have been in the plane that day.

Three months after the accident, in October of 1989, a farmer found two pieces of the stage 1 fan disk in his corn fields outside Alta, Iowa. Investigators judged from the picture reproduced here that about one third of the disk had separated, with one fracture line extending radially and the other along a more circumferential path. (See figure 9.)

Upon analysis, the near-radial fracture appeared to originate in a pre-existing fatigue region in the disk bore. Probing deeper, fractographic, metallographic and chemical analysis showed that this pre-existing fault could be tracked back to a metal “error” that showed itself in a tiny cavity only 0.055 inches in axial length and 0.015 inches in radial depth: about the size of a slightly deformed period at the end of this typed sentence. Titanium alloys have two crystalline structures, alpha and beta, with a transformation temperature above which the alpha transforms into beta. By adding impurities or alloying elements, the allotropic temperature could be lowered to the point where the beta phase would be present even at room temperature. One such alloy, Ti-6Al-4V, was known to be hard, very strong, and was expected to maintain its strength up to 600 degrees Fahrenheit. Within normal Ti-6Al-4V titanium, the two microscopic crystal structures should be present in about equal quantities. But inside the tiny cavity buried in the fan disk lay traces of a “hard alpha inclusion,” titanium with a flaw – a small volume of pure alpha-type crystal structure, and an elevated hardness due to the presence of (contaminating) nitrogen.36

Putting aside the myriad other necessary causes for the accident, the gaze of the NTSB investigators focused on the failed titanium, and even more closely on the tiny cavity with its traces of an alpha inclusion. What caused the alpha inclusion? There were, according to the investigation, three main steps in the production of titanium-alloy fan disks. First, foundry workers melted the various materials together in a “heat” or heats after which they poured the mix into a titanium alloy ingot. Second, the manufacturer stretched and reduced the ingot into “billets” that cutters could slice into smaller pieces (“blanks”). Finally, in the third and last stage of titanium production, machinists worked the blank into the appropriate geometrical shapes – the blanks could later be machined into final form.

Hard alpha inclusions were just one of the problems that titanium producers and consumers had known about for years (there were also high-density inclusions, and


Figure 9. Stage 1 Fan Disk (Reconstruction). Source: UAL 232 Docket, figure 1.10.2.


the segregation of the alloy into flecks). To minimize the hard alpha inclusions, manufacturers had established various protective measures. They could melt the alloy components at higher heats, they could maintain the melt for a longer time, or they could conduct successive melting operations. But none of these methods offered (so to speak) an iron-clad guarantee that they would be able to weed out the impurities introduced by inadequately cleaned cutting, or sloppy welding residues. Nor could the multiple heats absolutely remove contamination from leakage into the furnace or even items dropped into the molten metal. Still, in 1970-71, General Electric was sufficiently worried about the disintegration of rotating engine parts that they ratcheted up the quality control on titanium fan rotor disks – after January 1972, the company demanded that only triple-vacuum-melted forgings be used. The last batch of alloy melted under the old, less stringent (double-melt) regime was Titanium Metals Corporation heat K8283 of February 23, 1971. Out of this heat, ALCOA drew the metal that eventually landed in the stage 1 fan rotor disk for flight 232.37

Chairman James Kolstad’s NTSB investigative team followed the metal, finding that the 7,000 pound ingot K8283 was shipped to Ohio for forging into billets of 16" diameter; then to ALCOA in Cleveland, Ohio, for cutting into 700 pound blanks; the blanks then passed to General Electric for manufacture. These 16" billets were tested with an ultrasonic probe. At General Electric, samples from the billet were tested numerous ways and for different qualities – tensile strength, microstructure, alpha phase content and amount of hydrogen. And, after being cut into its rectilinear machine-forged shape, the disk-to-be again passed an ultrasonic inquisition, this time by the more sensitive means of immersing the part in liquid. The ultrasonic test probed the rectilinear form’s interior for cracks or cavities, and it was supplemented by a chemical etching that aimed to reveal surface anomalies.38 Everything checked, and the fan was then machined and shot peened (that is, hammered smooth with a stream of metal shot) into its final form. On completion, the now finished disk fan passed a fluorescent penetrant examination – also designed to display surface cracking.39 It was somewhere at this stage – under the stresses of final machining and shot peening – that the investigators concluded cracking began around the hard alpha inclusion. But since no ultrasonic tests were conducted on the interior of the fan disk after the mechanical stresses of final machining, the tiny cavity remained undetected.40

The fan’s trials were not over, however, as the operator – United Airlines – would, from then on out, be required to monitor the fan for surface cracking. Protocol demanded that every time that maintenance workers disassembled part of the fan, they were to remove the disk, hang it on a steel cable, paint it with fluorescent penetrant, and inspect it with a 125-amp ultraviolet lamp. Six times over the disk’s lifetime, United Airlines personnel did the fluorescence check, and each time the fan passed. Indeed, by looking at the accident stage-1 fan parts, the Safety Board found that there were approximately the same number of major striations in the material pointing to the cavity as the plane had had cycles (15,503). This led them to conclude that the fatigue crack had begun to grow more or less at the very beginning of the engine’s life. Then (so the fractographic argument went) with each takeoff and landing the crack began to grow, slowly, inexorably,


Figure 10. Cavity and Fatigue Crack Area. Source: NTSB-232, p. 46, figure 19B.


out from the 1/100" cavity surrounding the alpha inclusion, over the next 18 years. (See figure 10.)

By the final flight of 232 on 19 July 1989, both General Electric and the Safety Board believed the crack at the surface of the bore was almost ½" long.41 This finding exonerated the titanium producers, since interior faults, especially one with no actual cavity, were much harder to find. It almost exonerated General Electric because their ultrasonic test would not have registered such an interior filled cavity with no cracks, and their etching test was performed before the fan had been machined to its final shape. By contrast, the NTSB laid the blame squarely on the United Airlines San Francisco maintenance team. In particular, the report aimed its cross hairs on the inspector who last had the fan on the wire in February 1988 for the Fluorescent Penetrant Inspection. At that time, 760 cycles before the fan disk disintegrated, the Safety Board judged that the surface crack would have grown to almost ½". They asked: why didn’t the inspector see the crack glowing under the illumination of the ultraviolet lamp?42 The drive to localization had reached its target. We see in our mind’s eye an inculpatory snapshot: the suspended disk, the inspector turning away, the half-inch glowing crack unobserved.

United Airlines’ engineers argued that stresses induced by rotation could have closed the crack, or perhaps the shot peening process had hammered it shut,
preventing the fluorescent dye from entering.43 The NTSB were not impressed by that defense, and insisted that the fluorescent test was valid. After all, chemical analysis had shown penetrant dye inside the half-inch crack found in the recovered fan disk, which meant it had penetrated the crack. So again: why didn’t the inspector see it? The NTSB mused: the bore area rarely produces cracks, so perhaps the inspector failed to look intently where he did not expect to find anything. Or perhaps the crack was obscured by powder used in the testing process. Or perhaps the inspector had neglected to rotate the disk far enough around the cable to coat and inspect all its parts. Once again, a technological failure became a “human factor” at the root of an accident, and the “performance of the inspector” became the central issue. True, the Safety Board allowed that the UA maintenance program was otherwise “comprehensive” and “based on industry standards.” But non-destructive inspection experts had little supervision and not much redundancy. The CRM equivalent conclusion was that “a second pair of eyes” was needed (to ensure advocacy and inquiry). For just this reason the NTSB had come down hard on human factors in the inspection program that had failed to find the flaws leading to the Aloha Airlines accident in April 1988.44 Here then was the NTSB-certified source of flight 232’s demise: a tiny misfiring in the microstructure of a titanium ingot, a violated inspection procedure, a humanly-erring inspector. And, once again, the NTSB produced a single cause, a single agent, a violated protocol in a fatal moment.45

But everywhere the report’s trajectory towards local causation clashes with its equally powerful draw towards the many branches of necessary causation; in a sense, the report unstably disassembled its own conclusion. There were safety valves that could have been installed to prevent the total loss of hydraulic liquid, screens that would have slowed its leakage. Engineers could have designed hydraulic lines that would have set the tubes further from one another, or devised better shielding to minimize the damage from “liberated” rotating parts. There were other ways to have produced the titanium – as, for example, the triple-vacuum heating (designed to melt away hard alpha defects) that went into effect mere weeks after the fateful heat number K8283. Would flight 232 have proceeded uneventfully if the triple-vacuum heating had been implemented just one batch earlier? There are other diagnostic tests that could have been applied, including the very same immersion ultrasound that GEAE used – but applied to the final machined part. After all, the NTSB report itself noted that other companies were using final shape macroetching in 1971, and the NTSB also contended that a final shape macroetching would have caught the problem.46 Any list of necessary causes – and one could continue to list them ad libitum – ramified in all directions, and with this dispersion came an ever-widening net of agency. For example, in a section labeled “Philosophy of Engine/Airframe Design,” the NTSB registered that in retrospect design and certification procedures should have “better protected the critical hydraulic systems” from flying debris. Such a judgment immediately dispersed both agency and causality onto the entire airframe, engine, and regulatory apparatus that created the control mechanism for the airplane.47

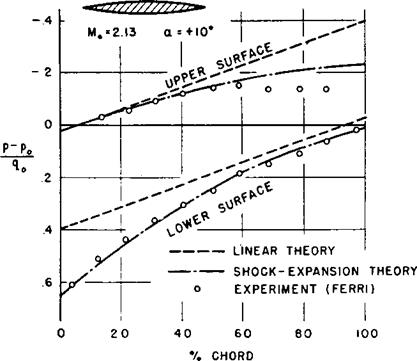

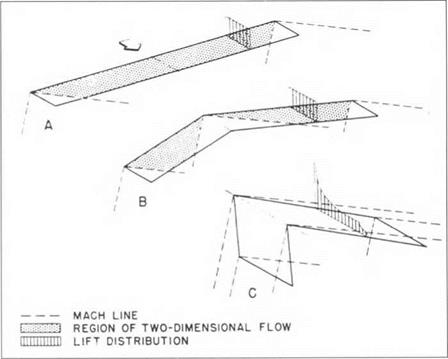

At an even broader level of criticism, the Air Line Pilots Association criticized the very basis of the “extremely improbable design philosophy” of the FAA. This “philosophy” was laid out in the FAA’s Advisory Circular 25.1309-1A of 21 June 1988, and displayed graphically in its “Probability versus Consequence” graph (figure 11) for aircraft system design.48 Not surprisingly, the FAA figured that catastrophic failures ought to be “extremely improbable” (by which they meant less likely than one in a billion), while nuisances and abnormal procedures could be “probable” (1 in a hundred thousand). Recognizing that component failure rates were not easy to render numerically precise, the FAA explained that this was why they had drawn a wide line on figure 11, and why they added “the expression ‘on the order of’ when describing quantitative assessments.”49 A triple hydraulic failure was supposed to lie squarely in the one in a billion range – essentially so unlikely that nothing in further design, protection, or flight training would be needed to counter it. The pilots’ union disagreed. For the pilots, the FAA was missing the boat when it argued that the assessment of failure should be “so straightforward and readily obvious that… any knowledgeable, experienced person would unequivocally conclude that the failure mode simply would not occur, unless it is associated with a wholly unrelated failure condition that would itself be catastrophic.” For as they pointed out, a crash like that of 232 was precisely a catastrophic failure in one place (the engine) causing one in another (the flight control system). So while the hydraulic system might well be straightforwardly and obviously proof against independent failure, a piece of flying titanium could knock it out even if all three levels of pumps were churning away successfully.
Such externally induced failures of the hydraulic system had, they pointed out, already occurred in a DC-10 (Air Florida), a 747 (Japan Air Lines) and an L-1011 (Eastern). “One in a billion” failures might be so in a make-believe world where hydraulic systems flew by themselves. But they don’t. Specifically, the pilots wanted a control system that was completely independent of the hydraulics. More generally, the pilots questioned the procedure of risk assessment. Hydraulic systems do not fly alone, and because they don’t, any account of causality and agency must move away from the local and into the vastly more complex world of systems interacting with systems.50 The NTSB report – or more precisely one impulse of the NTSB report – concurred: “The Safety Board believes that the engine manufacturer should provide accurate data for future designs that would allow for a total safety assessment of the airplane as a whole.”51 But a countervailing impulse pressed agency and cause into the particular and localized.
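The pilots' objection can be put schematically. The notation here is purely illustrative: neither $p$ nor $q$ appears in the FAA circular or the NTSB report, and the numbers are chosen only to show the shape of the argument. Suppose each of the three hydraulic systems fails with some small probability $p$ per flight hour, and that the failures are independent. Then the chance of losing all three is

$$
P_{\text{independent}} = p^{3}, \qquad \text{e.g. } p = 10^{-3} \;\Rightarrow\; p^{3} = 10^{-9}.
$$

Now allow for a single external event, such as an uncontained rotor burst, that occurs with probability $q$ and disables all three systems at once:

$$
P_{\text{total}} = q + (1 - q)\,p^{3} \approx q + p^{3}.
$$

If $q$ were even $10^{-7}$, the total would be roughly a hundred times the “one in a billion” figure, and the added redundancy would do almost nothing to lower it. On this reading the one-in-a-billion estimate is an estimate of $p^{3}$ alone, which is just what the pilots meant by hydraulic systems that fly by themselves.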

When I say that instability lay within the NTSB report, I mean all this, and more. For contained in the conclusions to the investigation of United 232 was a dissenting opinion by Jim Burnett, one of the lead investigators. Unlike the majority, Burnett saw General Electric, Douglas Aircraft and the Federal Aviation Administration as equally responsible.

I think that the event which resulted in this accident was foreseeable, even though remote, and that neither Douglas nor the FAA was entitled to dismiss a possible rotor failure as remote when reasonable and feasible steps could have been taken to “minimize” damage in the event of engine rotor failure. That additional steps could have been taken is evidenced by the corrections readily made, even as retrofits, subsequent to the occurrence of the “remote” event.52

Figure 11. Probability Versus Consequence. Source: UAL 232 Docket, U.S. Department of Transportation, Federal Aviation Administration, “System Design and Analysis,” 6/21/88, AC No. 25.1309-1A, fiche 7, p. 7.

Like a magnetic force from a needle’s point, the historical narrative finds itself drawn to condense cause into a tiny space-time volume. But the narrative is constantly broken, undermined, derailed by causal arrows pointing elsewhere, more globally towards aircraft design, the effects of systems on systems, towards risk-assessment philosophy in the FAA itself. In this case that objection is not implicit but explicit, and it is drawn and printed in the conclusion of the report itself.

Along these same lines, I would like, finally, to return to the issue of pilot skill and CRM that we examined in the aftermath of Air Florida 90. Here, as I already indicated, the consensus of the community was that Haynes, Fitch, Dvorak, and Records did an extraordinary job in bringing the crippled DC-10 down to the threshold of Sioux City’s runway 22. But it is worth considering how the NTSB made the determination that they were not, in fact, contributors to the final crash landing of Flight 232. After the accident, simulators were set up to mimic a total, triple hydraulic failure of all control surfaces of the DC-10. Production test pilots were brought in, as were line DC-10 pilots; the results were that flying a machine in
that state was simply impossible, the skills required to manipulate power on the engines in such a way as to control simultaneously the phugoid oscillations, airspeed, pitch, descent rate, direction, and roll were quite simply “not trainable.” While individual features could be learned, “landing at a predetermined point and airspeed on a runway was a highly random event”53 and the NTSB concluded that “training… would not help the crew in successfully handling this problem. Therefore, the Safety Board concluded that the damaged airplane, although flyable, could not have been successfully landed on a runway with the loss of all hydraulic flight controls.” “[U]nder the circumstances,” the Safety Board concluded, “the UA flightcrew performance was highly commendable, and greatly exceeded reasonable expectations.”54 Haynes himself gave great credit to his CRM training, saying it was “the best preparation we had.”55

While no one doubted that flight 232 was an extraordinary piece of flying, not everyone concurred that CRM ought to take the credit. Buck, ever dissenting from the CRM catechism, wrote that he would wager, whatever Haynes’s view subsequently was, that Haynes had the experience to handle the emergency of 232 with or without the aid of earthbound psychologists.56 But beyond the particular validity of cockpit resource management, the reasoning behind the NTSB satisfaction with the flightcrew is worth reviewing. For again, the Safety Board used post hoc simulations to evaluate performance. In the case of Air Florida Flight 90, the conclusion was that the captain could have aborted the takeoff safely, and so he was condemned for not aborting; because the simulator pilots could fly out of the stall by powering up quickly, the captain was damned for not having done so. In the case of flight 232, because the simulator-flying pilots were not able to land safely consistently, the crew was lauded. Historical re-enactments were used differently, but in both cases functioned to confirm the localization of cause and agency.